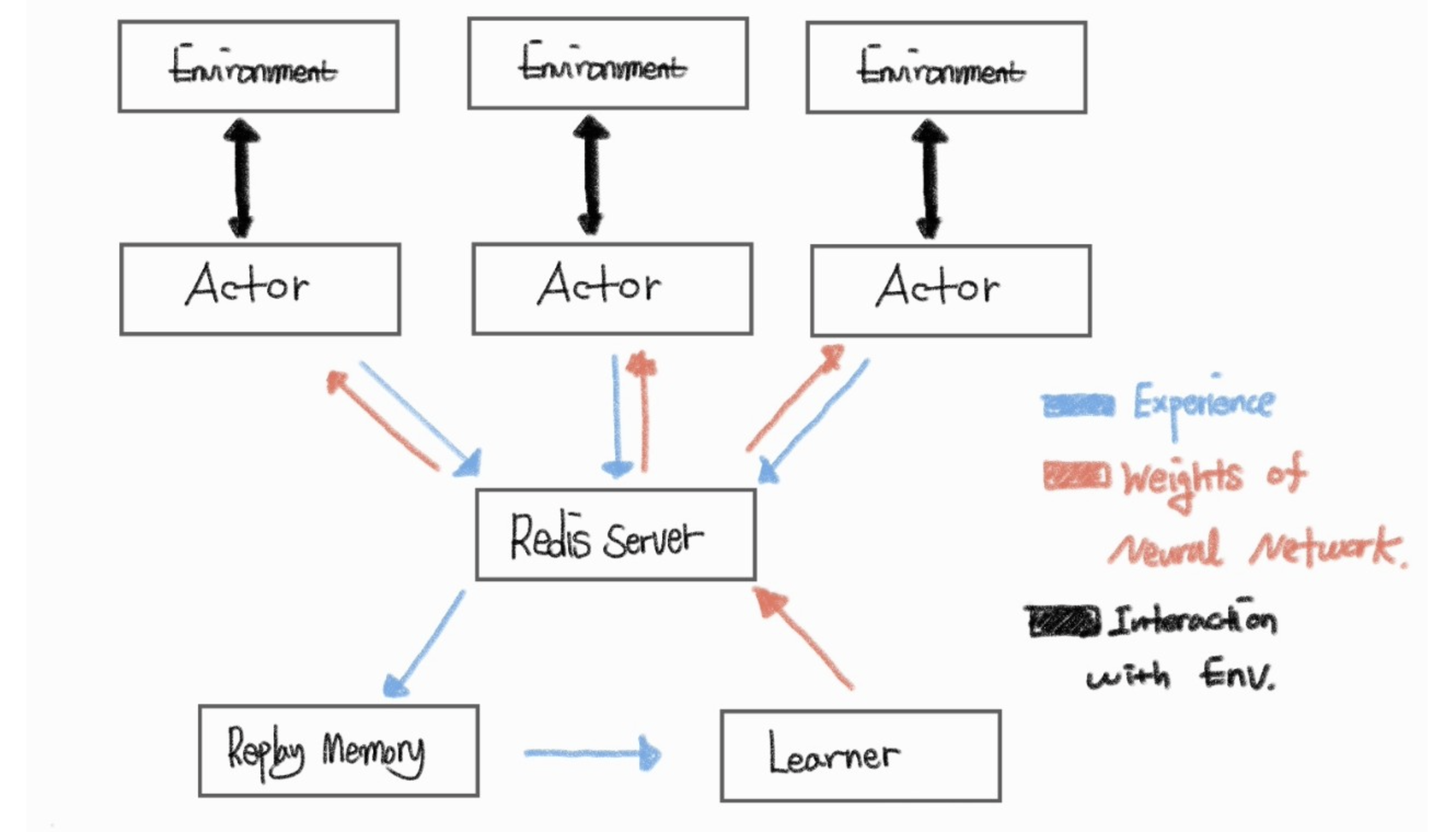

This repo implements distributed RL algorithms using PyTorch, Ray, and Redis.

Here is the list of algorithms I have implemented (or plan to implement):

- IMPALA
- APE_X_DQN
- R2D2

In the Ape-X DQN paper, the computing resources used for the experiments are as follows:

1. CPUs: 360+

2. RAM: 256 GB

3. GPU: V100

4. A high-performance CPU for constructing the data pipeline

Most people probably can't meet these requirements.

Instead of buying such hardware, I use a virtual machine on GCP.

GCP provides not only sufficient compute resources but also a Redis server.
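To illustrate how actors and the learner can exchange experience through Redis, here is a minimal sketch. It assumes the `redis-py` client, a hypothetical list key, and pickle serialization; the repo's actual keys and data format may differ. `InMemoryRedis`, `push_transitions`, and `pop_transitions` are hypothetical names. `InMemoryRedis` implements only `rpush` and `lpop` so the sketch runs without a server.

```python
import pickle
from collections import deque
from typing import Any, Deque, Dict, List, Optional


class InMemoryRedis:
    """Hypothetical stand-in for redis.Redis that implements only the two
    list commands used below, so the sketch runs without a Redis server."""

    def __init__(self) -> None:
        self._lists: Dict[str, Deque[bytes]] = {}

    def rpush(self, key: str, *values: bytes) -> int:
        # Append values to the tail of the list and return the new length.
        lst = self._lists.setdefault(key, deque())
        lst.extend(values)
        return len(lst)

    def lpop(self, key: str) -> Optional[bytes]:
        # Remove and return the head of the list, or None if it is empty.
        lst = self._lists.get(key)
        return lst.popleft() if lst else None


def push_transitions(client: Any, key: str, transitions: List[Any]) -> None:
    """Actor side: pickle each transition and append it to a Redis list."""
    if transitions:  # RPUSH with no values is an error in real Redis
        client.rpush(key, *(pickle.dumps(t) for t in transitions))


def pop_transitions(client: Any, key: str, max_items: int) -> List[Any]:
    """Learner side: pop up to max_items transitions, oldest first."""
    batch = []
    for _ in range(max_items):
        raw = client.lpop(key)
        if raw is None:  # nothing left in the list
            break
        batch.append(pickle.loads(raw))
    return batch
```

To use a real server, pass `redis.Redis(host="localhost", port=6379)` as `client` instead of `InMemoryRedis()`. Popping one item per round trip keeps the sketch simple but is slow. A `pipeline()` or `lpop(key, count)` (Redis 6.2+) batches the reads. Pickle is only acceptable here because both ends are trusted processes.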

I recommend creating a new conda environment for this repo.

conda create -n <env_name> python=3.7

conda activate <env_name>

git clone https://github.com/seungju-mmc/Distributed_RL.git

cd Distributed_RL

git submodule init

# Pull the 'baseline' submodule from git.

# Read baseline's README.md to understand what it is.

git submodule update

pip install -r requirements.txt

[Important] You must check ./cfg/.json; the behavior of the code is controlled by the .json configuration.

[Important] Set the path in configuration.py!
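The schema of the .json configuration is not described in this README. The following is only a sketch of one common pattern for letting a JSON file drive the code. The keys in `DEFAULTS` (`"lr"`, `"batch_size"`, `"num_worker"`) and the `load_config` function are hypothetical. The sketch reads the file and merges it over the defaults.

```python
import json
from pathlib import Path
from typing import Any, Dict

# Hypothetical defaults; the repo's real configuration keys may differ.
DEFAULTS: Dict[str, Any] = {
    "lr": 1e-4,
    "batch_size": 512,
    "num_worker": 4,
}


def load_config(path: str) -> Dict[str, Any]:
    """Read a JSON config file and merge it over DEFAULTS.

    Keys present in the file override the defaults; extra keys are kept
    as-is, so new options can be added without changing this function.
    """
    with Path(path).open("r", encoding="utf-8") as f:
        user_cfg = json.load(f)
    if not isinstance(user_cfg, dict):
        raise ValueError(f"{path} must contain a JSON object")
    return {**DEFAULTS, **user_cfg}
```

With this pattern, editing a value in the .json file is enough to change behavior, and values left out of the file fall back to the defaults.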

# You need two independent terminals: one for the learner and one for the actors.

sudo apt-get install tmux

tmux

python run_learner.py

# Ctrl + b, then d (detaches from the current tmux session)

tmux

python run_actor.py --num-worker <n>
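For Ape-X DQN, the number of workers also affects exploration. In the Ape-X paper, each actor i in {0, ..., N-1} uses a fixed ε_i-greedy policy with ε_i = ε^(1 + α·i/(N-1)), where ε = 0.4 and α = 7. It is an assumption that `run_actor.py` follows exactly this schedule. The hypothetical helper below computes the rates for a given `--num-worker` value.

```python
from typing import List


def apex_epsilons(num_workers: int, eps: float = 0.4, alpha: float = 7.0) -> List[float]:
    """Per-actor exploration rates from the Ape-X paper:
    eps_i = eps ** (1 + alpha * i / (N - 1)) for i = 0..N-1.
    Actor 0 explores the most (eps_0 = eps); the last actor the least.
    """
    if num_workers < 1:
        raise ValueError("num_workers must be >= 1")
    if num_workers == 1:
        # The formula divides by N - 1, so fall back to the base rate.
        return [eps]
    return [eps ** (1 + alpha * i / (num_workers - 1)) for i in range(num_workers)]
```

Because each actor keeps a fixed ε, adding workers spreads exploration across a wider range rather than annealing a single schedule.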

1. More cache memory, better performance

I observed that the small L3 cache of the Intel i7-9700K (12 MB) can become a bottleneck when constructing the data pipeline on the learner side.

On GCP, using the Redis server provided by GCP, there was no such bottleneck.

I recommend a CPU with more than 30 MB of L3 cache, or using GCP.

You can check your cache memory specification with the following command in a terminal:

sudo lshw -C memory
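If you prefer to check from Python (Linux only), the kernel exposes the CPU cache hierarchy under `/sys/devices/system/cpu/cpu0/cache/index*/`. Each entry contains `level` and `size` files, where size looks like `12288K`. The minimal sketch below reads the L3 size and compares it with the 30 MB recommendation above. The function names are hypothetical, and sizes are treated as binary units (1 MB = 1024 KB).

```python
import glob
import os
from typing import Optional

_UNITS = {"K": 1024, "M": 1024 ** 2, "G": 1024 ** 3}


def parse_cache_size(text: str) -> int:
    """Convert a sysfs cache size string such as '12288K' into bytes."""
    text = text.strip()
    if text and text[-1].upper() in _UNITS:
        return int(text[:-1]) * _UNITS[text[-1].upper()]
    return int(text)  # plain byte count


def l3_cache_bytes(cpu_dir: str = "/sys/devices/system/cpu/cpu0/cache") -> Optional[int]:
    """Return cpu0's L3 cache size in bytes, or None if it cannot be found."""
    for index in sorted(glob.glob(os.path.join(cpu_dir, "index*"))):
        try:
            with open(os.path.join(index, "level")) as f:
                level = f.read().strip()
            with open(os.path.join(index, "size")) as f:
                size = f.read()
        except OSError:
            continue  # skip entries without level/size files
        if level == "3":
            return parse_cache_size(size)
    return None


if __name__ == "__main__":
    size = l3_cache_bytes()
    if size is None:
        print("L3 cache size not found (non-Linux system?)")
    else:
        mb = size / 1024 ** 2
        verdict = "OK" if mb > 30 else "may bottleneck the learner's data pipeline"
        print(f"L3 cache: {mb:.0f} MB -> {verdict}")
```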