Zhijun Pan†*

Fangqiang Ding†

Hantao Zhong†

Chris Xiaoxuan Lu

†Equal Contribution. *Project Lead.

Royal College of Art, University of Edinburgh, University of Cambridge, University College London

- [2024-03-22] We are working on integrating AB3DMOT evaluation scripts into the evaluation run. Stay tuned!

- [2024-03-13] Our paper demo video can be seen here 👉 video.

- [2024-03-13] We further improved RaTrack's overall performance. Please check Evaluation.

- [2024-01-29] Our paper can be read here 👉 arXiv.

- [2024-01-29] Our paper is accepted by ICRA 2024 🎉.

If you find our work useful in your research, please consider citing:

@InProceedings{pan2023moving,

author = {Pan, Zhijun and Ding, Fangqiang and Zhong, Hantao and Lu, Chris Xiaoxuan},

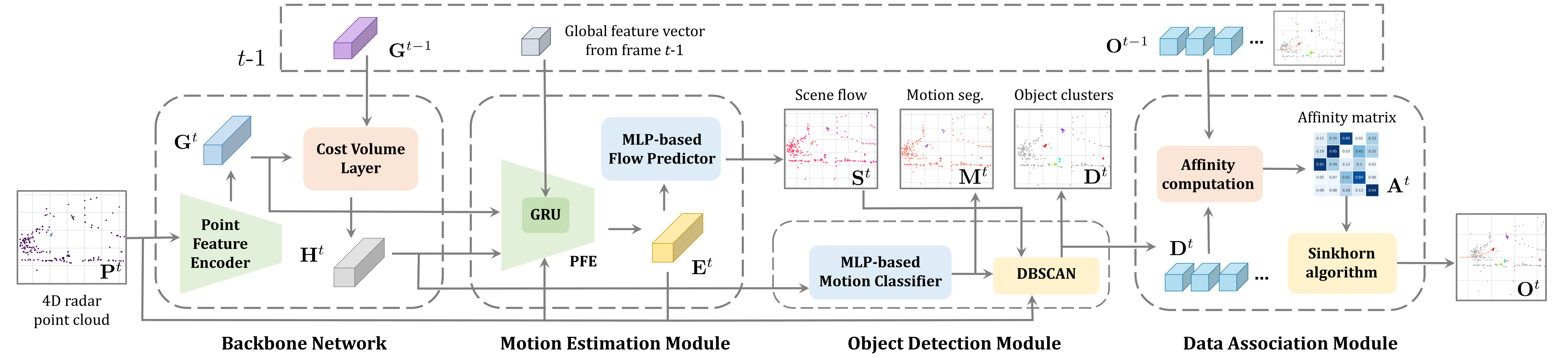

title = {Moving Object Detection and Tracking with 4D Radar Point Cloud},

booktitle = {Proceedings of the IEEE International Conference on Robotics and Automation (ICRA)},

year = {2024},

}Here are some GIFs to show our qualitative results on moving object detection and tracking based on 4D radar point clouds. Note that only moving objects with no less than five points. For more qualitative results, please refer to our demo video.

First, please request and download the View of Delft (VoD) dataset from the VoD official website. Unzip into the folder you prefer.

Please also obtain the tracking annotations from the VoD GitHub repository. Unzip all the .txt tracking annotation files into the path: PATH_TO_VOD_DATASET/view_of_delft_PUBLIC/lidar/training/label_2_tracking/

The dataset folder structure should look like this:

view_of_delft_PUBLIC/
├── lidar
│   ├── ImageSets
│   ├── testing
│   └── training
│       ├── calib
│       ├── image_2
│       ├── label_2
│       │   ├── 00000.txt
│       │   ├── 00001.txt
│       │   ├── ...
│       ├── label_2_tracking
│       │   ├── 00000.txt
│       │   ├── 00001.txt
│       │   ├── ...
│       ├── pose
│       └── velodyne
├── radar
│   ├── testing
│   └── training
│       ├── calib
│       └── velodyne
├── radar_3frames
│   ├── testing
│   └── training
│       └── velodyne
└── radar_5frames
    ├── testing
    └── training
        └── velodyne
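As a quick sanity check after unzipping, the folder structure above can be verified with a short script. This is a minimal sketch and not part of the RaTrack code base: the function name `missing_vod_dirs` is hypothetical, and the directory list is simply copied from the tree shown above.

```python
from pathlib import Path

# Sub-directories copied from the folder structure listed above.
EXPECTED_DIRS = [
    "lidar/ImageSets",
    "lidar/testing",
    "lidar/training/calib",
    "lidar/training/image_2",
    "lidar/training/label_2",
    "lidar/training/label_2_tracking",
    "lidar/training/pose",
    "lidar/training/velodyne",
    "radar/testing",
    "radar/training/calib",
    "radar/training/velodyne",
    "radar_3frames/testing",
    "radar_3frames/training/velodyne",
    "radar_5frames/testing",
    "radar_5frames/training/velodyne",
]


def missing_vod_dirs(dataset_root):
    """Return the expected sub-directories that are absent under dataset_root."""
    root = Path(dataset_root)
    return [d for d in EXPECTED_DIRS if not (root / d).is_dir()]
```

Calling `missing_vod_dirs("PATH_TO_VOD_DATASET/view_of_delft_PUBLIC")` returns an empty list when every listed directory is present, and the relative paths of the missing ones otherwise.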

Please ensure you are running on an Ubuntu machine with an Nvidia GPU (at least 2GB VRAM). The code is tested with Ubuntu 22.04 and CUDA 11.8 on an RTX 4090. Other configurations are not guaranteed to work.
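The GPU memory requirement can be checked before installing anything. The sketch below is an illustration rather than part of RaTrack: it queries `nvidia-smi` for the total memory of each GPU, and it assumes that "2GB" can be read as 2048 MiB. The helper names are hypothetical.

```python
import shutil
import subprocess

# Assumption: "at least 2GB VRAM" is interpreted here as 2048 MiB.
MIN_VRAM_MIB = 2048


def parse_vram_mib(smi_output):
    """Parse one memory.total value (MiB) per line, skipping non-numeric lines."""
    values = []
    for line in smi_output.splitlines():
        token = line.strip()
        if token.isdigit():
            values.append(int(token))
    return values


def query_vram_mib():
    """Return total memory per GPU in MiB, or [] if nvidia-smi is unavailable."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=memory.total", "--format=csv,noheader,nounits"],
        capture_output=True,
        text=True,
        check=False,
    )
    if result.returncode != 0:
        return []
    return parse_vram_mib(result.stdout)


def has_enough_vram(vram_list, minimum=MIN_VRAM_MIB):
    """True if at least one GPU reports the minimum amount of memory."""
    return any(v >= minimum for v in vram_list)
```

For example, `has_enough_vram(query_vram_mib())` evaluates to `False` on a machine without an Nvidia driver, since no GPU is reported.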

To start, please ensure you have miniconda installed by following the official instructions here.

First, clone the repository with the following command and navigate to the root directory of the project:

git clone git@github.com:LJacksonPan/RaTrack.git

cd RaTrack

Create a RaTrack environment with the following command:

conda env create -f environment.yml

This will set up a conda environment named RaTrack with CUDA 11.8 and PyTorch 2.2.0.

Install the pointnet2 PyTorch dependencies:

cd lib

python setup.py install

To train the model, please run:

python main.py

This will use the configuration file config.yaml to train the model.

To evaluate the model and generate the model predictions, please run:

python main.py --config configs_eval.yaml

To evaluate with the trained RaTrack model, please open configs_eval.yaml and change model_path to the path of the trained model.

model_path: 'checkpoint/track4d_radar/models/model.last.t7'

Then run the following command:

python main.py --config configs_eval.yaml

This will only generate the predictions in the results folder. We are currently working on integrating our point-based version of AB3DMOT evaluation scripts into the evaluation run.
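Before launching the evaluation, it can help to confirm that model_path in configs_eval.yaml points to an existing checkpoint. The following is a minimal sketch under stated assumptions, not RaTrack's own loader: it assumes model_path appears as a plain `key: value` line, and both helper names are hypothetical. A full YAML parser would be more robust if the configuration is nested.

```python
from pathlib import Path


def read_model_path(config_file):
    """Return the value of the first `model_path:` line, or None if absent."""
    for line in Path(config_file).read_text().splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "model_path":
            # Drop a trailing comment, surrounding whitespace, and quotes.
            value = value.split("#", 1)[0].strip()
            return value.strip("'\"")
    return None


def checkpoint_exists(config_file, project_root="."):
    """True if model_path is set and resolves to a file under project_root."""
    model_path = read_model_path(config_file)
    if model_path is None:
        return False
    return (Path(project_root) / model_path).is_file()
```

Running `checkpoint_exists("configs_eval.yaml")` from the project root returns `False` when the entry is missing or the file does not exist, which is cheaper to discover here than partway through an evaluation run.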

The evaluation results of the provided trained RaTrack model are as follows:

| Method | SAMOTA | AMOTA | AMOTP | MOTA | MODA | MT | ML |
|---|---|---|---|---|---|---|---|
| RaTrack | 74.16 | 31.50 | 60.17 | 67.27 | 77.83 | 42.65 | 14.71 |
| RaTrack (Improved) | 80.33 | 34.58 | 59.37 | 62.80 | 77.07 | 54.41 | 13.24 |
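To see how the two rows differ on each metric, the per-metric change can be computed directly from the table. This is only an arithmetic sketch over the numbers reported above. The variable and function names are illustrative.

```python
# Values copied from the results table above.
METRICS = ["SAMOTA", "AMOTA", "AMOTP", "MOTA", "MODA", "MT", "ML"]
RATRACK = [74.16, 31.50, 60.17, 67.27, 77.83, 42.65, 14.71]
RATRACK_IMPROVED = [80.33, 34.58, 59.37, 62.80, 77.07, 54.41, 13.24]


def metric_deltas(baseline, improved):
    """Return improved minus baseline for each metric, rounded to 2 decimals."""
    return {
        name: round(new - old, 2)
        for name, old, new in zip(METRICS, baseline, improved)
    }


for name, delta in metric_deltas(RATRACK, RATRACK_IMPROVED).items():
    print(f"{name:>6}: {delta:+.2f}")
```

The differences show that SAMOTA, AMOTA and MT increase in the improved model, while AMOTP, MOTA, MODA and ML decrease.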

If you are interested in evaluating the predictions with our version of AB3DMOT evaluation, please contact us.

We use the following open-source projects in our work:

- Pointnet2.Pytorch: We use the PyTorch CUDA implementation of the pointnet2 module.

- view-of-delft-dataset: We use the documentation and development kit of the View of Delft (VoD) dataset to develop the model.

- AB3DMOT: We use AB3DMOT for evaluation metrics.

- OpenPCDet: We use OpenPCDet for training and evaluating the baseline detection models.