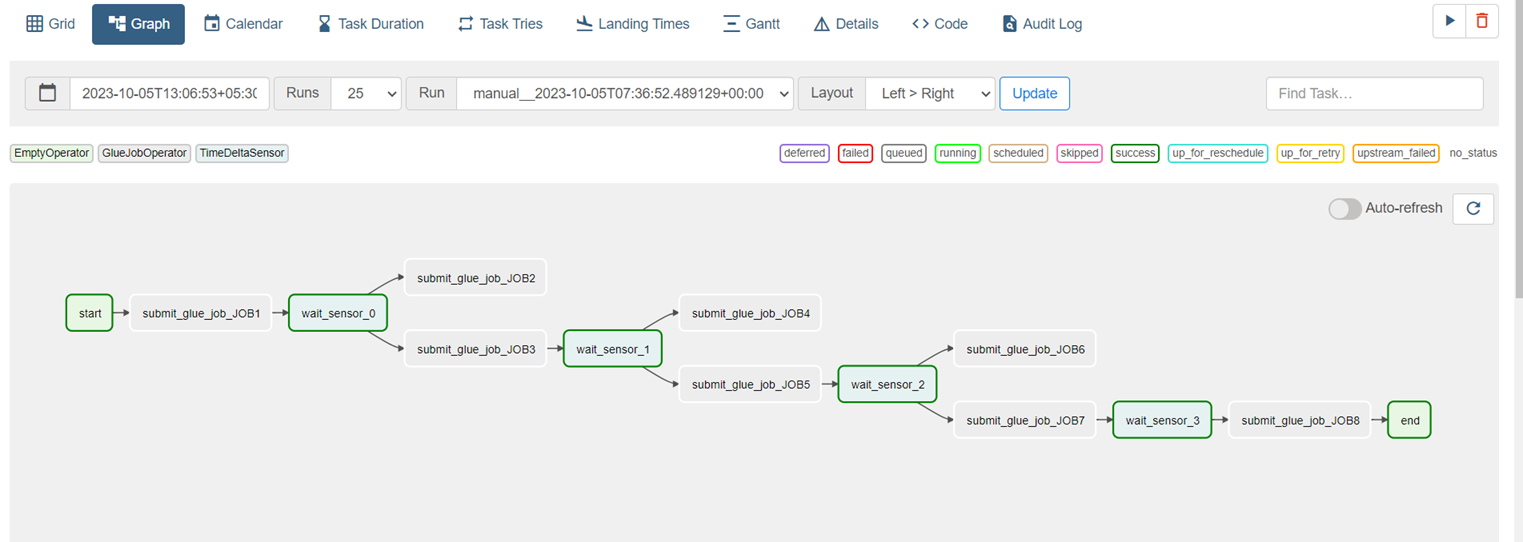

This is an Apache Airflow DAG (Directed Acyclic Graph) script designed to automate and orchestrate the execution of Glue ETL jobs. It leverages Airflow's scheduling and dependency management capabilities to ensure that Glue jobs run in a specific order, with waiting periods in between.

Before using this DAG, make sure you have the following set up:

- AWS Access Key ID and Secret Access Key for the IAM user with necessary permissions.

- AWS Glue jobs with corresponding Python scripts uploaded to an S3 bucket.

- An AWS Glue IAM Role with the required permissions to execute Glue jobs.

- AWS Glue Job names and S3 bucket information correctly configured in the script.

- The Airflow environment properly set up, including the AWS connection.

- Docker Compose YAML File: You should have a Docker Compose YAML file for setting up Airflow with necessary dependencies. This YAML file should include configurations for Airflow, its web server, scheduler, and any other required services.

- The DAG consists of several Glue job tasks that are executed sequentially.

- A

TimeDeltaSensortask is used to introduce waiting periods between Glue job executions. - An

EmptyOperatortask is used to signify the start and end points of the DAG.

- Sending the email whenever there is a pipeline failure.

- The Failure can takes place whenever any of the job fails. This cam lead to failure in pipeline.

- So whenever the job is failed respected to that task_id automated email will be generated for the particular job.

Docker Compose YAML File: The Docker Compose YAML file is used to set up an Airflow environment with all the necessary services, including the web server, scheduler, and workers. This file is crucial for running Airflow in a containerized environment and should be configured to meet your specific requirements.

- Configure the script with the necessary AWS and Glue job information, as described in the prerequisites.

- Deploy the script to your Airflow environment.

- Airflow will schedule and execute the Glue jobs according to the defined dependencies.

Apache Airflow is an open-source platform used for orchestrating complex workflows and data pipelines. It allows you to define, schedule, and monitor workflows as DAGs. Here's a brief summary of Airflow's usability:

-

Workflow Automation: Airflow helps automate and manage workflows that involve multiple tasks, dependencies, and data processing steps.

-

Schedule Flexibility: You can set custom schedules for task execution, whether it's daily, hourly, or based on a specific trigger.

-

Dependency Management: Airflow ensures that tasks run in the correct order, with dependencies defined, allowing you to build complex workflows.

-

Extensibility: Airflow offers a variety of operators and sensors for tasks, and you can extend its functionality with custom operators and plugins.

-

Monitoring and Logging: Airflow provides a web interface for monitoring task status, logs, and historical runs, making it easy to troubleshoot and debug.

-

Parallel Execution: Airflow can run tasks in parallel, enabling efficient data processing and ETL pipelines.

-

Integration: It has integrations with various services and databases, including AWS, Google Cloud, and more.

-

Scalability: Airflow can scale to handle large and complex workflows, making it suitable for enterprise-level data processing.

-

Community and Ecosystem: Airflow has a thriving open-source community, and there are many plugins and resources available to enhance its capabilities.

In summary, Airflow is a powerful tool for managing, scheduling, and monitoring data workflows, making it a popular choice for ETL, data engineering, and automation tasks.