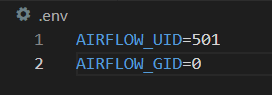

Add a `.env` file with the following contents:

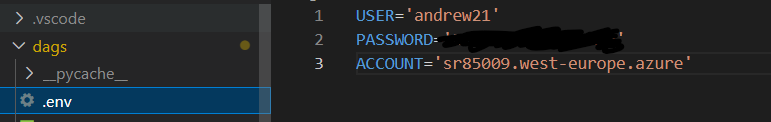

Also add a second `.env` file, this time in your `dags` folder, containing your Snowflake user, password, and account.
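The guide does not list the variable names for this file, so the sketch below assumes hypothetical keys `SNOWFLAKE_USER`, `SNOWFLAKE_PASSWORD` and `SNOWFLAKE_ACCOUNT`. It shows one way a DAG file could read such a `.env` using only the standard library and turn it into connection-style parameters. The helper names `load_env` and `snowflake_params` are illustrative; a package such as `python-dotenv` is a common alternative.

```python
from pathlib import Path


def load_env(path):
    """Parse a simple KEY=VALUE .env file into a dict.

    Blank lines and lines starting with '#' are skipped; matching
    surrounding single or double quotes are stripped from values.
    """
    env = {}
    for raw in Path(path).read_text().splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        # Split on the first '=' only, so values may contain '='.
        key, value = line.split("=", 1)
        value = value.strip()
        if len(value) >= 2 and value[0] == value[-1] and value[0] in "'\"":
            value = value[1:-1]
        env[key.strip()] = value
    return env


def snowflake_params(env):
    """Map the (hypothetical) SNOWFLAKE_* keys to user/password/account arguments."""
    return {
        "user": env["SNOWFLAKE_USER"],
        "password": env["SNOWFLAKE_PASSWORD"],
        "account": env["SNOWFLAKE_ACCOUNT"],
    }
```

Inside a DAG file you might call `snowflake_params(load_env(Path(__file__).parent / ".env"))`, assuming the `.env` sits next to the DAG in the `dags` folder.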

Run `docker-compose up airflow-init`.

Then run `docker-compose up`.

Go to `localhost:8080` and log in with username `airflow` and password `airflow`.
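If the login page does not load, it can help to check whether the webserver is actually up. The sketch below assumes Airflow 2.x, whose webserver exposes a `/health` endpoint returning JSON with `metadatabase` and `scheduler` entries, each carrying a `status` field. The function names are illustrative, not part of Airflow.

```python
import json
from urllib.request import urlopen


def is_healthy(payload):
    """Return True only if both the metadatabase and the scheduler report 'healthy'."""
    return all(
        payload.get(component, {}).get("status") == "healthy"
        for component in ("metadatabase", "scheduler")
    )


def check_airflow(base_url="http://localhost:8080", timeout=5):
    """Fetch /health from the Airflow webserver and evaluate the JSON it returns."""
    with urlopen(f"{base_url}/health", timeout=timeout) as resp:
        return is_healthy(json.load(resp))
```

Running `check_airflow()` from the host should return `True` once both components are up; a connection error most likely means the webserver container is still starting.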

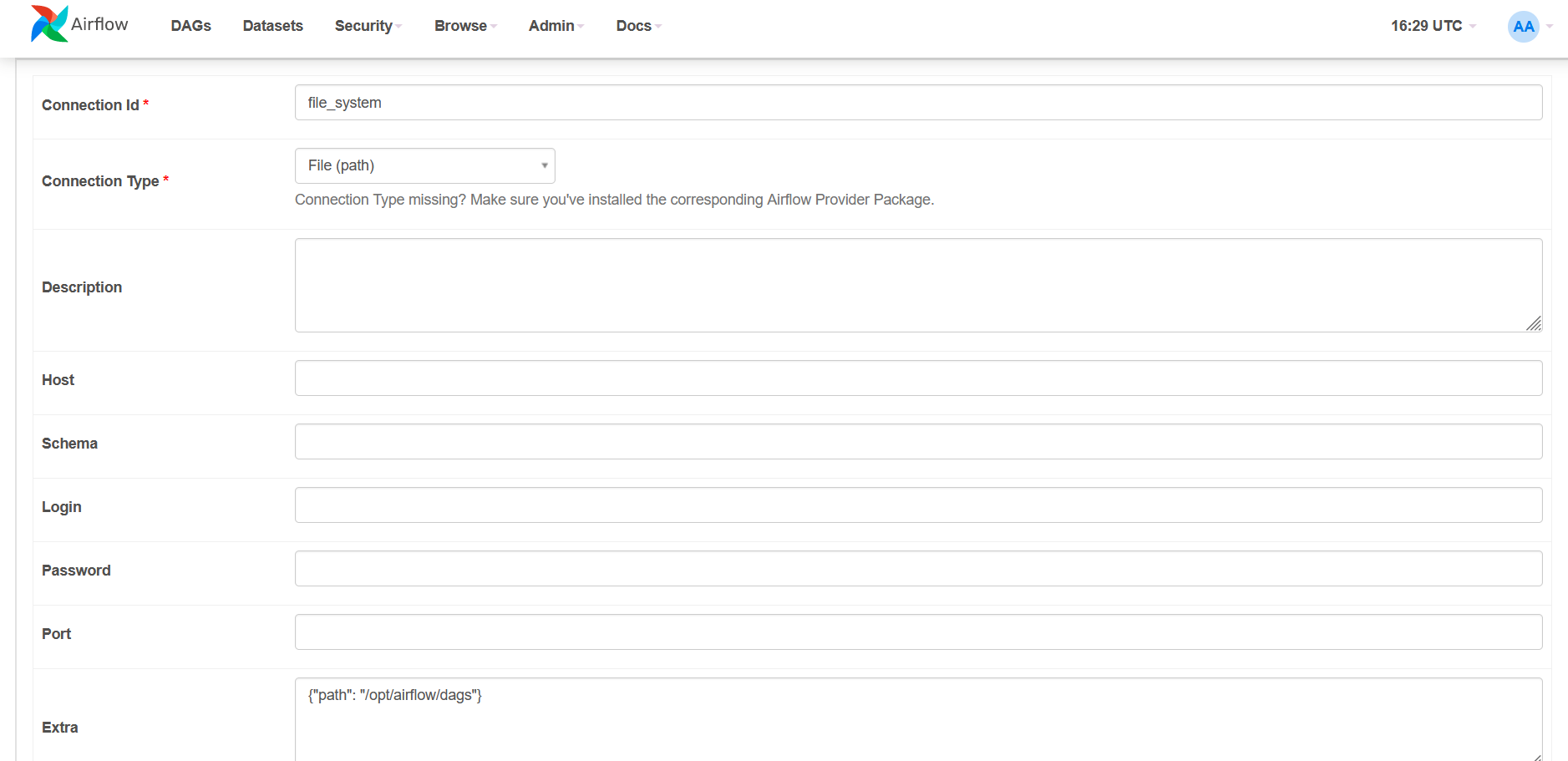

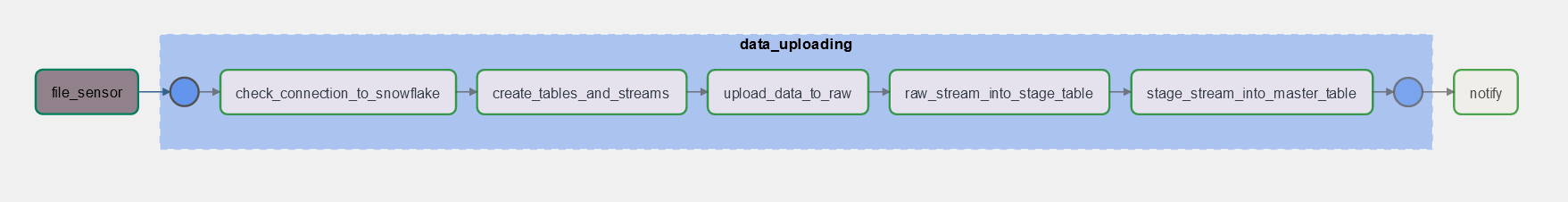

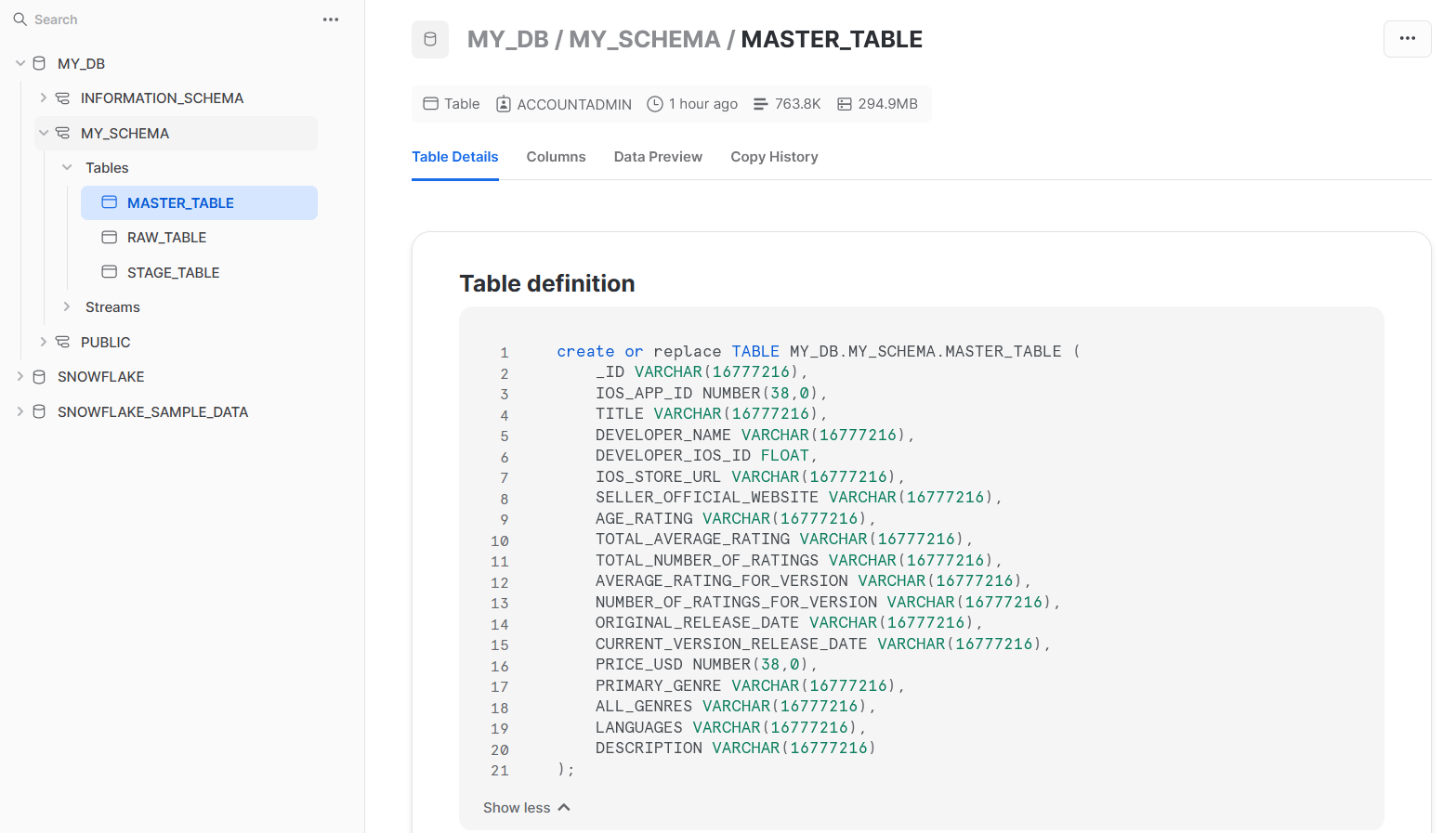

Before running the DAG, create a warehouse named `MY_WH`, a database named `MY_DB`, and a schema named `MY_SCHEMA`.

Add the following connections:

Now you can run the DAG by pressing the corresponding button. (The DAG is shown below for reference.)