This is a PyTorch Tutorial to Text Classification.

This is the fourth in a series of tutorials I plan to write about implementing cool models on your own with the amazing PyTorch library.

Basic knowledge of PyTorch and recurrent neural networks is assumed.

If you're new to PyTorch, first read Deep Learning with PyTorch: A 60 Minute Blitz and Learning PyTorch with Examples.

Questions, suggestions, or corrections can be posted as issues.

I'm using PyTorch 1.1 in Python 3.6.

27 Jan 2020: Working code for two new tutorials has been added — Super-Resolution and Machine Translation

The objective is to build a model that can label a text document as one of several categories.

We will be implementing the Hierarchical Attention Network (HAN), one of the more interesting and interpretable text classification models.

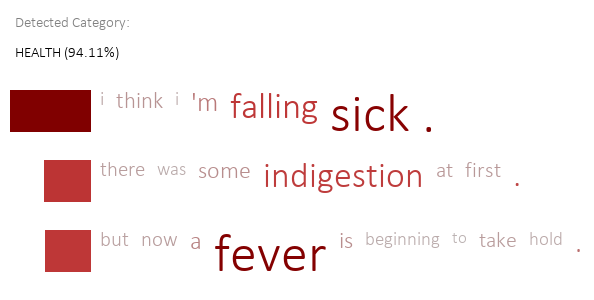

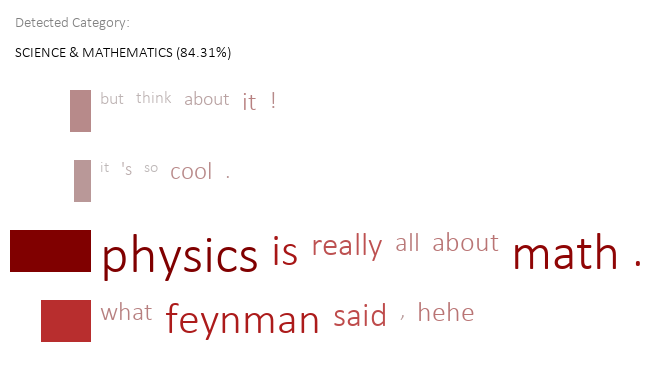

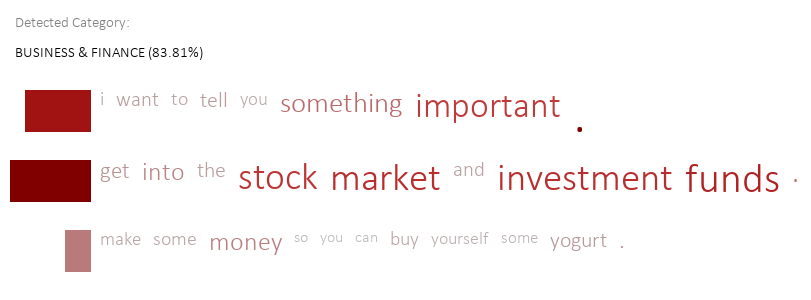

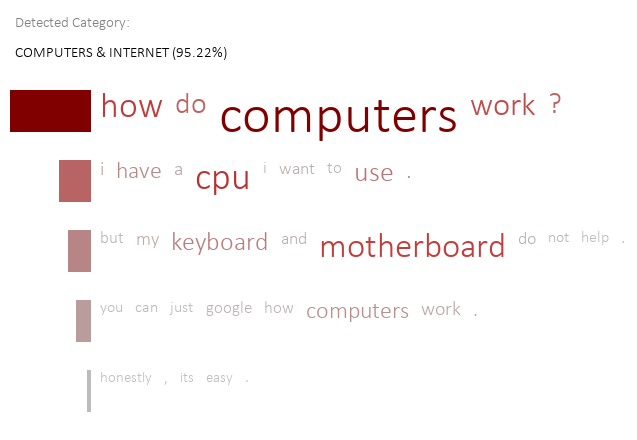

This model not only classifies a document, but also chooses specific parts of the text – sentences and individual words – that it thinks are most important.

"I think I'm falling sick. There was some indigestion at first. But now a fever is beginning to take hold."

"But think about it! It's so cool. Physics is really all about math. What Feynman said, hehe."

"I want to tell you something important. Get into the stock market and investment funds. Make some money so you can buy yourself some yogurt."

"How do computers work? I have a CPU I want to use. But my keyboard and motherboard do not help."

"You can just google how computers work. Honestly, its easy."

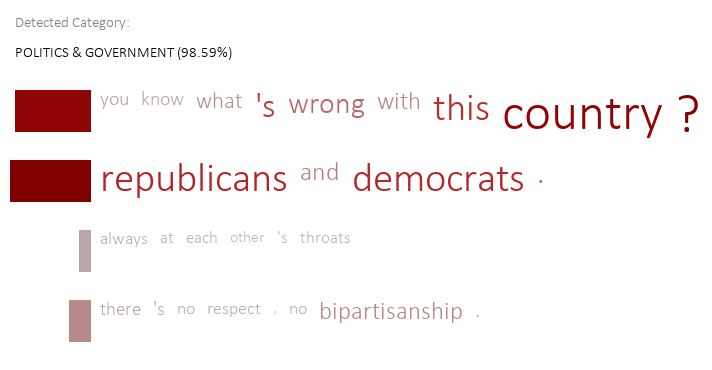

"You know what's wrong with this country? Republicans and democrats. Always at each other's throats"

"There's no respect, no bipartisanship."
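To give a rough idea of how a model can pick out important words or sentences, here is a minimal sketch of attention pooling, the mechanism HAN applies first over the words in a sentence and then over the sentences in a document. This is an illustration and not necessarily how the code in this repository is written: the class name `AttentionPool`, its argument names, and the tensor sizes are all assumptions made for the example, and the inputs are assumed to be the outputs of a recurrent encoder.

```python
import torch
import torch.nn as nn


class AttentionPool(nn.Module):
    """Hypothetical helper: collapses a sequence of vectors into a single
    vector using learned attention weights."""

    def __init__(self, input_size, attention_size):
        super().__init__()
        # Projects each vector into an attention space
        self.project = nn.Linear(input_size, attention_size)
        # Learned context vector that scores each projected vector
        self.context = nn.Linear(attention_size, 1, bias=False)

    def forward(self, inputs, mask):
        # inputs: (batch, seq_len, input_size), e.g. encoder outputs
        # mask: (batch, seq_len), True at real positions, False at padding
        scores = self.context(torch.tanh(self.project(inputs))).squeeze(-1)
        # Padded positions get a score of -inf, so softmax gives them weight 0
        # (this assumes every sequence has at least one real position)
        scores = scores.masked_fill(~mask, float("-inf"))
        weights = torch.softmax(scores, dim=1)  # (batch, seq_len)
        # Weighted sum of the input vectors
        pooled = (inputs * weights.unsqueeze(-1)).sum(dim=1)
        return pooled, weights


torch.manual_seed(0)
pool = AttentionPool(input_size=8, attention_size=4)
words = torch.randn(2, 5, 8)  # 2 sentences, up to 5 words, 8 features each
mask = torch.tensor([[True] * 5, [True] * 3 + [False] * 2])
sentence_vectors, word_weights = pool(words, mask)
```

The returned `word_weights` are what make the model interpretable: each row sums to 1, and a larger weight means that position contributed more to the pooled vector. Feeding the resulting sentence vectors through a second encoder and another attention pool of the same form would give sentence-level weights in the same way.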

I am still writing this tutorial.

In the meantime, you could take a look at the code – it works!

We achieve an accuracy of 75.1% (against 75.8% in the paper) on the Yahoo Answers dataset.