A Saliency-Guided Street View Image Inpainting Framework for Efficient Last-Meters Wayfinding [Paper]

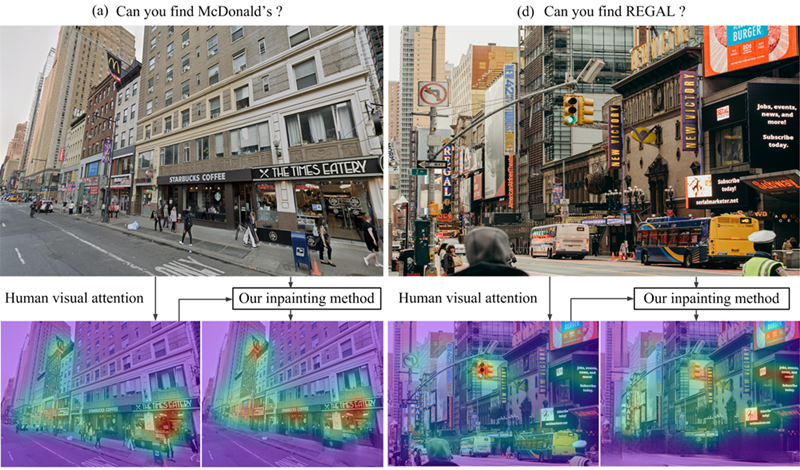

We propose a saliency-guided street view image inpainting method, which can remove distracting objects to redirect human visual attention to static landmarks.

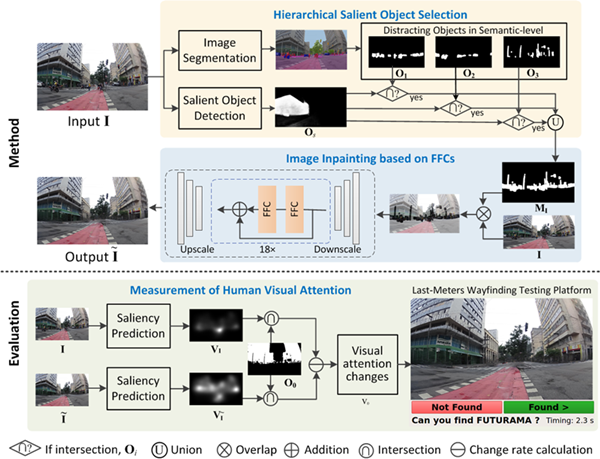

Overview of the proposed saliency-guided street view image inpainting framework. It consists of three building blocks: hierarchical salient object selection, saliency-guided image inpainting based on fast Fourier convolutions (FFCs), and measurement of human visual attention, using both visual attention changes and a self-developed last-meters wayfinding testing platform. Modeling the interaction between saliency detection and image inpainting leads to effective removal of distracting objects for last-meters wayfinding.

Hierarchical salient object selection based on image segmentation (DeepLabv3+, Model) and salient object detection (U^2-Net).
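As a rough illustration of how a segmentation map and a saliency map can be combined to pick objects for removal, here is a minimal NumPy sketch. It is not the repository's implementation: the function name `select_distractor_mask`, the `distractor_ids` argument, and the mean-saliency `threshold` rule are all assumptions made for this example. It only presumes that a segmentation model yields per-pixel class ids and that a salient object detector yields a saliency map in [0, 1].

```python
import numpy as np


def select_distractor_mask(seg_labels, saliency, distractor_ids, threshold=0.5):
    """Return a boolean mask of pixels to inpaint (hypothetical selection rule).

    seg_labels:     (H, W) int array of per-pixel class ids.
    saliency:       (H, W) float array in [0, 1].
    distractor_ids: class ids treated as removable, e.g. cars or pedestrians.
    threshold:      minimum mean saliency for a class region to be selected.
    """
    seg_labels = np.asarray(seg_labels)
    saliency = np.asarray(saliency, dtype=float)
    if seg_labels.shape != saliency.shape:
        raise ValueError("seg_labels and saliency must have the same shape")

    mask = np.zeros(seg_labels.shape, dtype=bool)
    for class_id in distractor_ids:
        region = seg_labels == class_id
        # Keep a region only if it exists and attracts enough attention on average.
        if region.any() and saliency[region].mean() >= threshold:
            mask |= region
    return mask
```

Under this rule, salient regions whose class is not listed in `distractor_ids` (for example buildings that serve as landmarks) are never selected, and listed classes with low saliency are left in the image.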

Image inpainting with a model fine-tuned from LaMa (link).
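LaMa builds on fast Fourier convolutions, whose spectral branch mixes channels in the frequency domain so that every output pixel depends on the whole image. The NumPy sketch below shows only that idea, under simplifying assumptions: the name `spectral_transform` and the plain `weight` matrix are hypothetical, batch normalization and the local convolution branch are omitted, and nothing here reflects the fine-tuned weights used in this project.

```python
import numpy as np


def spectral_transform(x, weight):
    """Simplified spectral branch of a fast Fourier convolution (sketch only).

    x:      (C, H, W) real-valued feature map.
    weight: (2 * C_out, 2 * C) matrix acting as a 1x1 convolution over the
            stacked real and imaginary parts of the spectrum.
    """
    c, h, w = x.shape
    # Real 2D FFT over the spatial axes: (C, H, W // 2 + 1), complex.
    freq = np.fft.rfft2(x, axes=(-2, -1))
    # Stack real and imaginary parts as separate channels: (2C, H, W // 2 + 1).
    stacked = np.concatenate([freq.real, freq.imag], axis=0)
    # Pointwise channel mixing in the frequency domain, followed by ReLU.
    mixed = np.einsum("oc,chw->ohw", weight, stacked)
    mixed = np.maximum(mixed, 0.0)
    # Split back into real and imaginary halves and return to the spatial domain.
    c_out = mixed.shape[0] // 2
    out_freq = mixed[:c_out] + 1j * mixed[c_out:]
    return np.fft.irfft2(out_freq, s=(h, w), axes=(-2, -1))
```

Because the mixing happens on Fourier coefficients, a single layer already has an image-wide receptive field, which is useful when filling large removed regions.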

Evaluation of human visual attention changes based on the UNISAL network (link) and a self-developed human labelling program.
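One simple way to quantify a change in visual attention is to compare how much predicted saliency falls on a landmark region before and after inpainting. The sketch below is an assumed illustration, not the metric used in the paper: `attention_share`, `attention_change`, and the `landmark_mask` input are hypothetical names, and the saliency maps are taken to come from any saliency predictor such as UNISAL.

```python
import numpy as np


def attention_share(saliency, region):
    """Fraction of total saliency mass that falls inside a boolean region."""
    saliency = np.asarray(saliency, dtype=float)
    region = np.asarray(region, dtype=bool)
    total = saliency.sum()
    if total <= 0:
        return 0.0  # No predicted attention anywhere in the image.
    return float(saliency[region].sum() / total)


def attention_change(sal_before, sal_after, landmark_mask):
    """Change in the landmark's attention share after inpainting.

    Positive values mean more predicted attention lands on the landmark.
    """
    return attention_share(sal_after, landmark_mask) - attention_share(
        sal_before, landmark_mask
    )
```

For example, if the landmark holds 20% of the saliency mass in the original image and 60% after distracting objects are removed, `attention_change` returns about 0.4.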

For more details, please refer to our paper:

@article{hu2022saliency,
  title={A Saliency-Guided Street View Image Inpainting Framework for Efficient Last-Meters Wayfinding},
  author={Hu, Chuanbo and Jia, Shan and Zhang, Fan and Li, Xin},
  journal={arXiv preprint arXiv:2205.06934},
  year={2022}
}