This repository serves primarily as the codebase for training, evaluation, and inference of CTR (Compositional Task Representations).

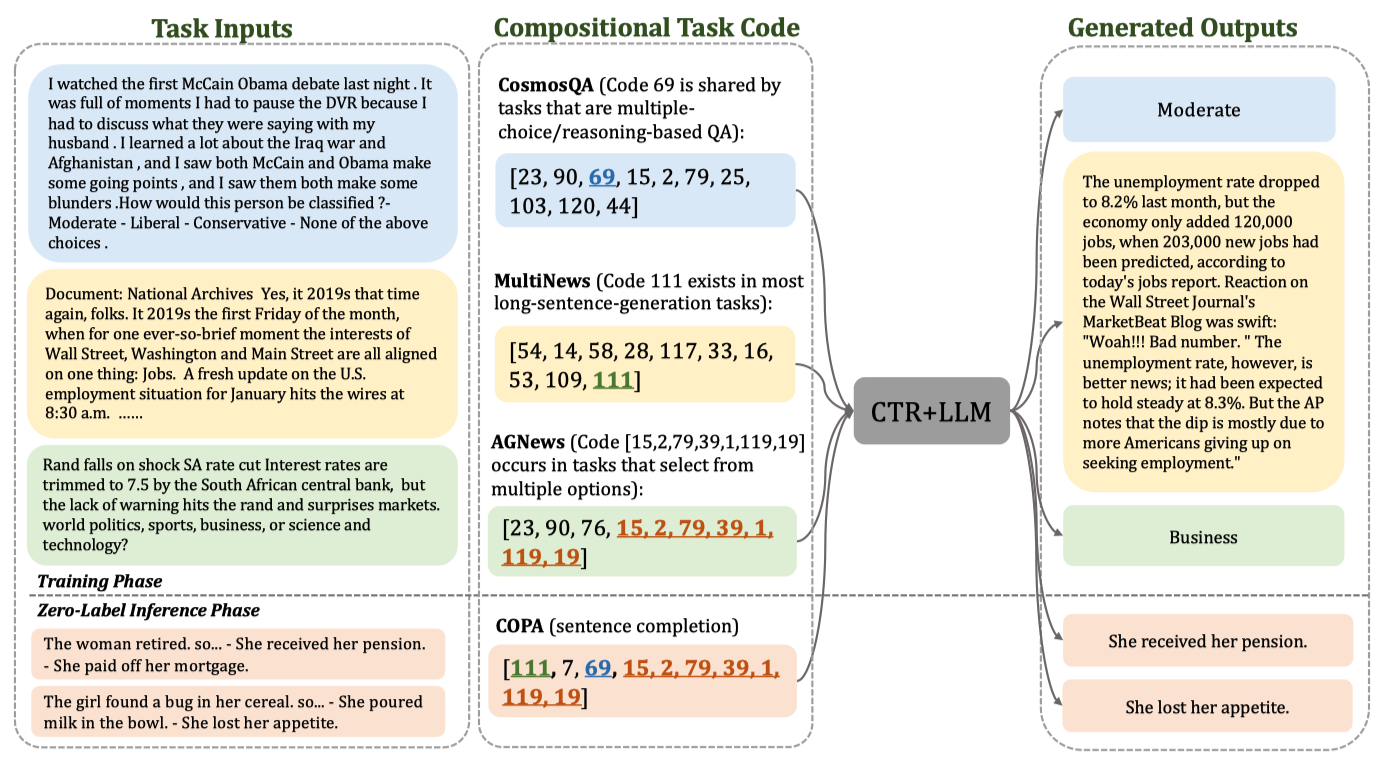

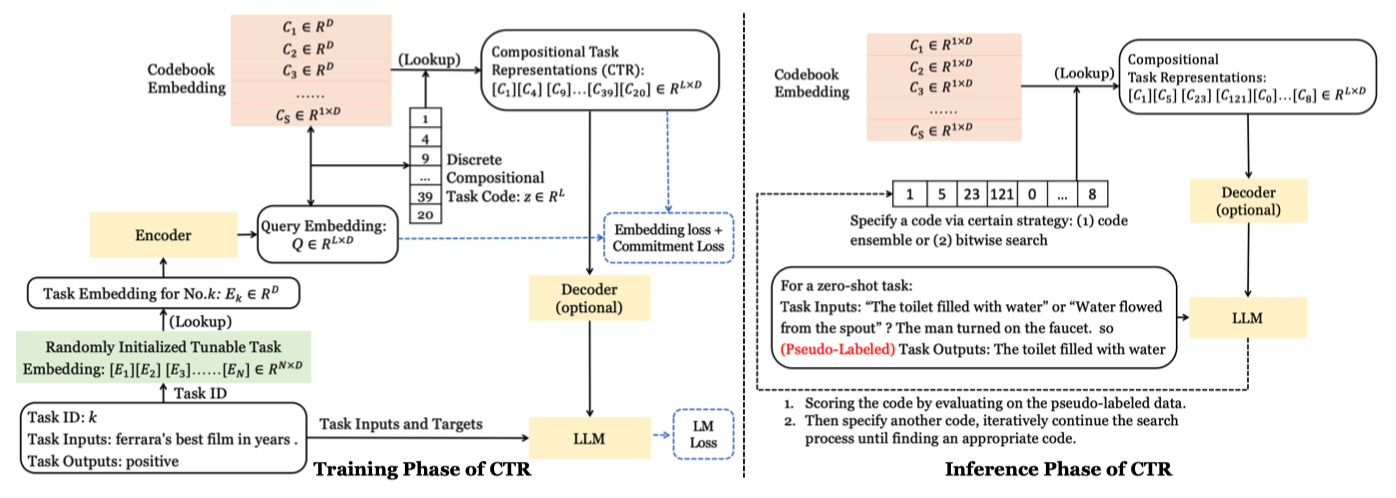

In this paper, we propose the Compositional Task Representations (CTR) method, which learns a discrete compositional codebook for tasks and generalizes to new, unseen tasks by forming new compositions of the task codes. For inference with CTR, we propose two algorithms, Code Ensemble and Bitwise Search, for the zero-label and few-shot settings, respectively.
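To make "compositional task codes" concrete, here is a minimal sketch assuming a VQ-style setup: a task representation is split into a few slots, each slot is snapped to its nearest entry in a shared codebook, and the tuple of chosen indices is the task's discrete code. The functions `quantize` and `decode`, the codebook size, and the slot count are hypothetical illustrations, not this repository's implementation; see the paper for the actual architecture.

```python
from typing import List, Sequence, Tuple

Vector = List[float]
TaskCode = Tuple[int, ...]


def squared_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def quantize(slots: Sequence[Vector], codebook: Sequence[Vector]) -> TaskCode:
    """Snap each continuous slot vector to its nearest codebook entry.

    The tuple of chosen indices is the (hypothetical) discrete task code.
    """
    return tuple(
        min(range(len(codebook)), key=lambda i: squared_distance(slot, codebook[i]))
        for slot in slots
    )


def decode(code: TaskCode, codebook: Sequence[Vector]) -> List[Vector]:
    """Look up one codebook vector per position of the task code."""
    return [list(codebook[i]) for i in code]


# Toy codebook: 4 shared codes of dimension 2; each task uses 3 slots.
codebook = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
code_a = quantize([[0.1, -0.1], [0.9, 0.2], [0.1, 0.8]], codebook)
print(code_a)  # (0, 1, 2)

# An unseen task can reuse the same shared codes in a new combination.
new_code = (3, 1, 0)
print(decode(new_code, codebook))  # [[1.0, 1.0], [1.0, 0.0], [0.0, 0.0]]
print(len(codebook) ** len(code_a))  # 64 possible codes
```

Because the codebook is shared across tasks, even a small codebook yields many possible combinations (here 4^3 = 64), which is what lets new tasks be expressed without new parameters.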

More details of this work can be found in our paper.

- Run `git clone https://github.com/shaonan1993/CTR.git` to download the repo.
- Run `cd CTR` to navigate to the root directory of the repo.
- Build the experimental environment:
  - Download the Docker environment from Google Drive or Baidu Drive.
  - Run `sudo docker run -itd --name codebook -v /[YOUR_DIR]:/share --gpus all --shm-size 8gb zxdu20/glm-cuda115 /bin/bash`.
  - Run `sudo docker exec -it codebook bash`.
  - Run `pip install torch-1.11.0+cu115-cp38-cp38-linux_x86_64.whl`.
  - Run `pip install transformers==4.15`.
  - Run `pip install datasets==1.16`.

- Download the processed training data:
  - Run `cd data`.
  - Download `t0_combined_raw_data_no_prompt.zip` from Google Drive or Baidu Drive.
  - Download `t0_combined_raw_data.zip` from Google Drive or Baidu Drive.
  - Run `unzip t0_combined_raw_data_no_prompt.zip` and `unzip t0_combined_raw_data.zip`.

- Download and preprocess the pretrained model:
  - Run `cd pretrained_models`.
  - Download t5-large-lm-adapt.
  - Run `cd ../` and then `python utils/move_t5.py`.

- (Optional) Download our released checkpoint from Google Drive or Baidu Drive.

- (Optional) Download our generated P3 dataset:
  - Manual prompt version: Baidu Drive
  - No-prompt version (inputs are a direct concatenation of multiple text fields): Baidu Drive
  - Note that our generated P3 dataset differs slightly from the original P3 dataset: we removed some unused datasets, dropped duplicate examples, and fixed some bugs.

Tip: We strongly recommend running our code in the same experimental environment; otherwise, the results may differ unexpectedly because of the precision loss of bfloat16.
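To illustrate why bfloat16 makes results environment-sensitive, the sketch below emulates bfloat16 rounding in pure Python. bfloat16 keeps the sign bit, the 8 exponent bits, and only the top 7 mantissa bits of an IEEE-754 float32, so small increments can vanish entirely. The helper `to_bfloat16` is a hypothetical illustration (it rounds via float32 and ignores NaN/inf); it is not code from this repository, and which operations the repo actually runs in bfloat16 is not specified here.

```python
import struct


def to_bfloat16(x: float) -> float:
    """Round x to the nearest bfloat16 value (round-half-to-even), via float32.

    NaN/inf handling is omitted for brevity.
    """
    (bits,) = struct.unpack("<I", struct.pack("<f", x))
    # Round-half-to-even on the lower 16 bits that bfloat16 discards.
    rounding_bias = 0x7FFF + ((bits >> 16) & 1)
    bits = ((bits + rounding_bias) >> 16) << 16
    (result,) = struct.unpack("<f", struct.pack("<I", bits & 0xFFFFFFFF))
    return result


print(to_bfloat16(1.01))  # 1.0078125

# Repeatedly adding a small value: bfloat16 cannot represent 1.001, so the sum never moves.
acc_bf16 = 1.0
acc_fp64 = 1.0
for _ in range(100):
    acc_bf16 = to_bfloat16(acc_bf16 + 0.001)
    acc_fp64 += 0.001
print(acc_bf16)  # 1.0
print(round(acc_fp64, 6))  # 1.1
```

Since rounding errors like these depend on the exact order and kernels of floating-point operations, different CUDA, PyTorch, or hardware versions can shift the final metrics.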

- Run `sh scripts/run_pretrain_t0_large.sh` to train a T0-Large baseline from scratch.
- Run `sh scripts/run_eval_t0_large.sh` to evaluate the T0-Large baseline.
- Run `python stat_result.py` to print all results in your terminal.

Note: For the T0-Large baseline, we use the task prompts from PromptSource.

- Run `sh scripts/run_pretrain_t0_vae_large_no_prompt.sh` to train the first stage of CTR.
- Run `sh scripts/run_pretrain_t0_vae_large_no_prompt_pipe.sh` to train the second stage of CTR.

Note:

- For models with CTR, we do not use manual prompts.
- If you want to generate your own P3 dataset from scratch, please refer to `./utils/preprocess_for_P3.py` (not recommended; the process is extremely tedious and time-consuming).

Run `sh scripts/run_zerolabel_t0_vae_large_no_prompt.sh` to select the task code with the highest pseudo-label accuracy.
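The zero-label selection step can be sketched as follows, under stated assumptions: each candidate task code is run on the unlabeled inputs of the new task, the candidates' predictions are ensembled by per-example majority vote to form pseudo-labels, and the code that agrees most with those pseudo-labels is kept. The majority-vote ensembling, the functions `majority_vote` and `select_code`, and the toy predictions are all hypothetical; the script and paper may ensemble differently (for example, by averaging probabilities).

```python
from collections import Counter
from typing import Dict, Hashable, List, Sequence, Tuple

TaskCode = Tuple[int, ...]


def majority_vote(predictions: Dict[TaskCode, Sequence[Hashable]]) -> List[Hashable]:
    """Ensemble all candidate codes' predictions by per-example majority vote."""
    per_code = list(predictions.values())
    num_examples = len(per_code[0])
    return [
        Counter(preds[i] for preds in per_code).most_common(1)[0][0]
        for i in range(num_examples)
    ]


def select_code(predictions: Dict[TaskCode, Sequence[Hashable]]) -> Tuple[TaskCode, float]:
    """Pick the code whose predictions agree most with the ensemble pseudo-labels."""
    pseudo_labels = majority_vote(predictions)

    def pseudo_label_accuracy(code: TaskCode) -> float:
        preds = predictions[code]
        return sum(p == y for p, y in zip(preds, pseudo_labels)) / len(pseudo_labels)

    best = max(predictions, key=pseudo_label_accuracy)
    return best, pseudo_label_accuracy(best)


# Hypothetical predictions of three candidate codes on 4 unlabeled examples.
predictions = {
    (0, 1, 2): ["pos", "neg", "pos", "pos"],
    (3, 1, 0): ["pos", "neg", "neg", "pos"],
    (2, 2, 1): ["neg", "neg", "pos", "pos"],
}
print(majority_vote(predictions))  # ['pos', 'neg', 'pos', 'pos']
print(select_code(predictions))  # ((0, 1, 2), 1.0)
```

No gold labels are used anywhere: the ensemble acts as a proxy for correctness, on the assumption that the consensus of many codes is more reliable than any single code.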

- Run `sh scripts/run_fewshot_discrete_search.sh` to find the best task code among those of the training tasks.
- Run `sh scripts/run_fewshot_bitwise_search.sh` to take the task code with the minimal loss from the first step as an initialization, and then run a bitwise search for a better task code.
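The two few-shot steps above can be sketched as a greedy coordinate search. This is a minimal sketch, assuming each position of the task code takes one of `num_values` discrete values (with `num_values=2` it reduces to literal bit flipping) and that `loss_fn` scores a code on the few-shot examples. `bitwise_search`, `toy_loss`, and the example codes are hypothetical; the script's exact procedure may differ.

```python
from typing import Callable, Sequence, Tuple

TaskCode = Tuple[int, ...]


def bitwise_search(
    initial_code: Sequence[int],
    num_values: int,
    loss_fn: Callable[[TaskCode], float],
    max_rounds: int = 10,
) -> Tuple[TaskCode, float]:
    """Greedy search: change one position at a time and keep any change that lowers the loss."""
    best_code: TaskCode = tuple(initial_code)
    best_loss = loss_fn(best_code)
    for _ in range(max_rounds):
        improved = False
        for pos in range(len(best_code)):
            for value in range(num_values):
                if value == best_code[pos]:
                    continue  # current value, nothing to try
                candidate = best_code[:pos] + (value,) + best_code[pos + 1:]
                loss = loss_fn(candidate)
                if loss < best_loss:
                    best_code, best_loss = candidate, loss
                    improved = True
        if not improved:
            break  # local optimum: no single-position change helps
    return best_code, best_loss


# Toy stand-in for the few-shot loss: Hamming distance to a hidden target code.
TARGET = (3, 0, 2, 1)


def toy_loss(code: TaskCode) -> float:
    return float(sum(a != b for a, b in zip(code, TARGET)))


# Step 1: start from the training-task code with the lowest loss.
train_task_codes = [(0, 0, 0, 0), (3, 1, 1, 1), (1, 2, 3, 0)]
init_code = min(train_task_codes, key=toy_loss)
print(init_code)  # (3, 1, 1, 1)

# Step 2: refine it one position at a time.
print(bitwise_search(init_code, num_values=4, loss_fn=toy_loss))  # ((3, 0, 2, 1), 0.0)
```

Each round costs `len(code) * (num_values - 1)` loss evaluations, which is why starting from a good training-task code matters: a greedy search like this can stop at a local optimum rather than the global best code.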

If you find this resource useful, please cite the paper introducing CTR:

@inproceedings{

shao2023compositional,

title={Compositional Task Representations for Large Language Models},

author={Nan Shao and Zefan Cai and Hanwei Xu and Chonghua Liao and Yanan Zheng and Zhilin Yang},

booktitle={The Eleventh International Conference on Learning Representations},

year={2023},

url={https://openreview.net/forum?id=6axIMJA7ME3}

}