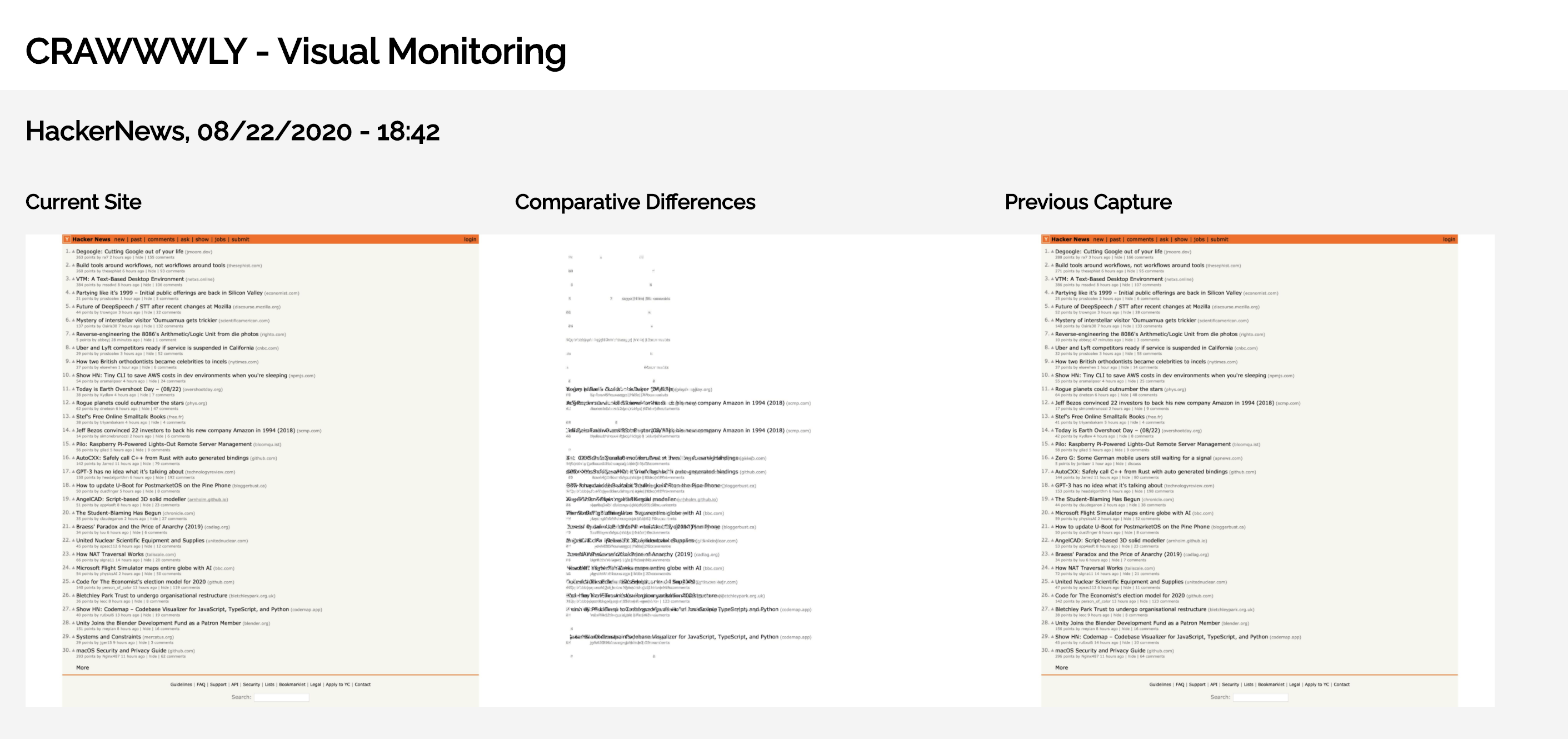

Example report: https://sharedphysics.github.io/Crawwwly/

This script is designed to help with visually driven comparative monitoring. Crawwwly goes to a site, takes a full-page screenshot, and compares it against the last screenshot captured to identify differences.

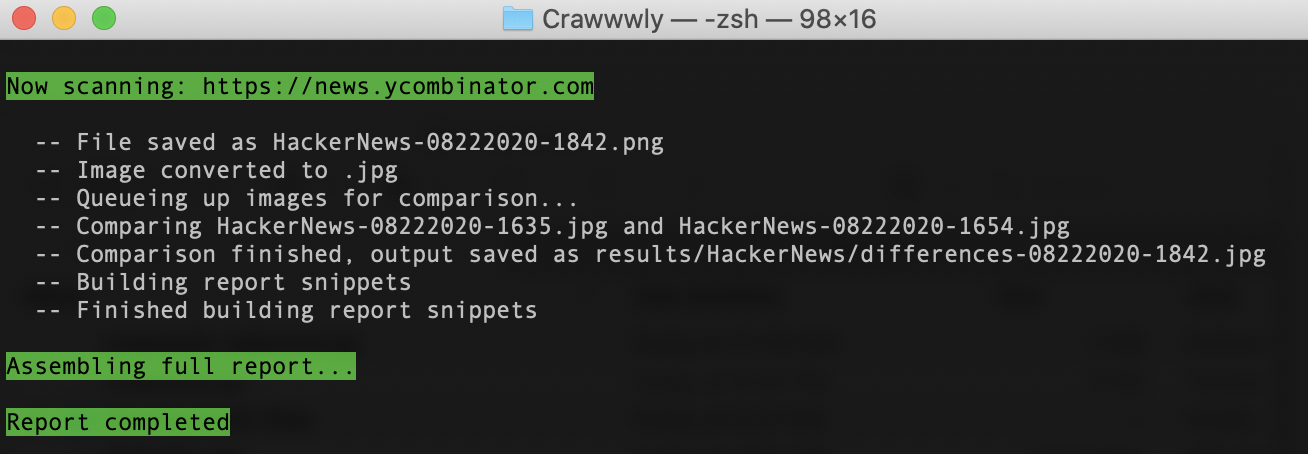

Crawwwly goes through a multi-step process to capture, analyze, and report on data:

- It parses a `.csv` file to create a list of domains to scan.
- For each domain, a directory is created to hold the images captured for analysis.
- Selenium + Firefox open the site and capture a full-page screenshot.
- That image is compared against the previously captured image, as sorted by name (which means that if you're running Crawwwly multiple times a day, it may not compare the correct images). If you're scanning a domain for the first time, the image is compared against itself. This comparison is done with Pillow.
- An HTML snippet is generated for displaying the comparisons.
- Once all of the domains have been scanned, a full report is assembled into a single page from all of the snippets.
- The report is auto-opened for your reading pleasure.
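The comparison-selection and report-assembly steps above can be sketched in plain Python. This is a minimal illustration, not Crawwwly's actual code: it assumes each domain's screenshots live in their own directory under sortable, date-stamped names such as `2020-10-05.png` (a hypothetical naming scheme), and the functions `previous_screenshot`, `build_snippet`, and `build_report` are made up for this example. The Pillow pixel comparison itself is not reproduced here.

```python
import html
from pathlib import Path
from typing import Iterable


def previous_screenshot(directory: Path, current: str) -> str:
    """Pick the screenshot that `current` should be compared against.

    All .png files in the domain's directory are sorted by name and the
    last one that sorts before `current` is returned. On a domain's first
    scan nothing sorts earlier, so `current` is compared against itself.
    """
    earlier = sorted(p.name for p in directory.glob("*.png") if p.name < current)
    return earlier[-1] if earlier else current


def build_snippet(domain: str, old: str, new: str) -> str:
    """Build one domain's HTML fragment with both screenshots side by side.

    Assumes (hypothetically) that images sit at <domain>/<file> relative
    to the report page.
    """
    title = html.escape(domain)
    old_src = html.escape(f"{domain}/{old}")
    new_src = html.escape(f"{domain}/{new}")
    return (
        f"<section><h2>{title}</h2>"
        f'<img src="{old_src}" alt="previous screenshot">'
        f'<img src="{new_src}" alt="current screenshot">'
        "</section>"
    )


def build_report(snippets: Iterable[str]) -> str:
    """Join every domain's snippet into a single HTML page."""
    body = "\n".join(snippets)
    return f"<!DOCTYPE html>\n<html><body>\n{body}\n</body></html>\n"
```

Because the choice of comparison image relies purely on name order, anything that breaks the link between name order and capture order (such as several runs on the same day) can pair the wrong images.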

Crawwwly requires Firefox, Python 3, GeckoDriver, Selenium, and Pillow. The Python packages (Selenium and Pillow) can be installed from the requirements.txt file.

Assuming you have Python3 already installed and running:

- Download the GitHub .zip package and extract it into a local folder.

- Install GeckoDriver, the driver Selenium uses to run a headless Firefox browser. The code here is designed to run with Firefox, and the driver I used is included, but you're welcome to install the latest release from https://github.com/mozilla/geckodriver/releases (make sure to choose the correct version for your OS). Once you've downloaded the driver, extract it and move it to your `bin` folder by running the following commands in the directory you saved the download to, substituting the file name of the release you downloaded:

  ```sh
  tar -xvf geckodriver-v0.27.0-macos.tar.gz
  mv geckodriver /usr/local/bin/
  ```

- You'll also need to make sure Firefox is installed and that you've allowed permissions for GeckoDriver to run.

- Install the Python requirements by going to your Crawwwly folder and running:

  ```sh
  pip3 install -r requirements.txt
  ```
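After completing these steps, a quick preflight check can confirm that the pieces are in place before the first run. This is a hypothetical helper, not part of Crawwwly: it assumes GeckoDriver should be findable on your `PATH` (as it is after moving it to `/usr/local/bin`) and that requirements.txt provides Selenium and Pillow (imported as `PIL`). It does not check for Firefox, since on macOS the browser usually lives in `/Applications` rather than on the `PATH`.

```python
import importlib.util
import shutil


def missing_prerequisites() -> list:
    """Return the names of prerequisites that could not be found."""
    missing = []
    # GeckoDriver must be an executable on PATH (e.g. in /usr/local/bin).
    if shutil.which("geckodriver") is None:
        missing.append("geckodriver")
    # Selenium and Pillow are importable as `selenium` and `PIL`.
    for module in ("selenium", "PIL"):
        if importlib.util.find_spec(module) is None:
            missing.append(module)
    return missing


if __name__ == "__main__":
    problems = missing_prerequisites()
    if problems:
        print("Missing:", ", ".join(problems))
    else:
        print("All prerequisites found.")
```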

Running Crawwwly is really easy:

- Modify the `domains.csv` file, adding the full URLs that you want to monitor in column 1 and a matching "simple name" (no punctuation or spaces) in column 2. The URLs set the web paths to scan, and the simple names determine how the image files and directories are named.
- In your terminal, enter the directory where Crawwwly was saved.
- Run the script:

  ```sh
  python3 crawwwwly.py
  ```

After that, just keep running the script with `python3 crawwwwly.py`.
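To make the `domains.csv` layout described above concrete (URL in column 1, simple name in column 2), here is a sketch that reads such a file with Python's `csv` module and rejects simple names containing punctuation or spaces. The function name `read_domains`, the letters-and-digits rule for a "simple name", and the assumption that the file has no header row are all hypothetical; Crawwwly's own parser may behave differently.

```python
import csv
import re
from typing import List, TextIO, Tuple

# Assumed definition of a "simple name": ASCII letters and digits only.
SIMPLE_NAME = re.compile(r"[A-Za-z0-9]+")


def read_domains(handle: TextIO) -> List[Tuple[str, str]]:
    """Return (url, simple_name) pairs from a domains.csv-style file."""
    domains = []
    for row_no, row in enumerate(csv.reader(handle), start=1):
        if not row or not row[0].strip():
            continue  # skip blank rows
        if len(row) < 2:
            raise ValueError(f"row {row_no}: expected a URL and a simple name")
        url, name = row[0].strip(), row[1].strip()
        if not SIMPLE_NAME.fullmatch(name):
            raise ValueError(f"row {row_no}: {name!r} is not a simple name")
        domains.append((url, name))
    return domains


if __name__ == "__main__":
    with open("domains.csv", newline="") as f:
        for url, name in read_domains(f):
            print(name, "->", url)
```

Failing loudly on a bad simple name is a deliberate choice here: since that name becomes a directory and file name, catching it before any screenshots are taken avoids a half-finished run.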

The real value of this script comes from setting it up once and having it run on a schedule. If you're running Crawwwly locally, you can refer to: https://medium.com/better-programming/https-medium-com-ratik96-scheduling-jobs-with-crontab-on-macos-add5a8b26c30. Otherwise, check your server or hosting provider's documentation for its cron scheduling solution.

I recommend running the script weekly for best results, but no more than daily, since running it multiple times a day may cause it to compare the wrong images.

Note: if you're running Crawwwly on a cron schedule, you may want to comment out line 197, which opens the report on completion:

```python
webbrowser.open('file://' + os.path.realpath("Report.html"))
```
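As an alternative to commenting that line out, the report could be opened only when the script seems to be running interactively. This is a hypothetical sketch, not part of Crawwwly: the `open_report` helper and the `CRAWWWLY_NO_BROWSER` environment variable are made up here, and it relies on the common (but not guaranteed) fact that cron jobs run without a terminal attached to stdout.

```python
import os
import sys
import webbrowser
from typing import Callable


def open_report(path: str = "Report.html",
                opener: Callable[[str], object] = webbrowser.open) -> bool:
    """Open the finished report only when someone is likely watching.

    Skips opening when stdout is not a terminal (usually the case under
    cron) or when the hypothetical CRAWWWLY_NO_BROWSER variable is set.
    Returns True if the report URL was handed to `opener`.
    """
    if os.environ.get("CRAWWWLY_NO_BROWSER") or not sys.stdout.isatty():
        return False
    opener("file://" + os.path.realpath(path))
    return True
```

Calling `open_report()` in place of the `webbrowser.open(...)` line would keep the auto-open behaviour for manual runs while leaving scheduled runs quiet. The `opener` parameter exists only so the function can be exercised without launching a browser.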

Please note that the development files folder contains the entire app broken into individual components, so that each step of the process can be run and refined separately.