VSCode extension ・ Backend API ・ Frontend dashboard ・ Documentation

You can run the API containers using this command:

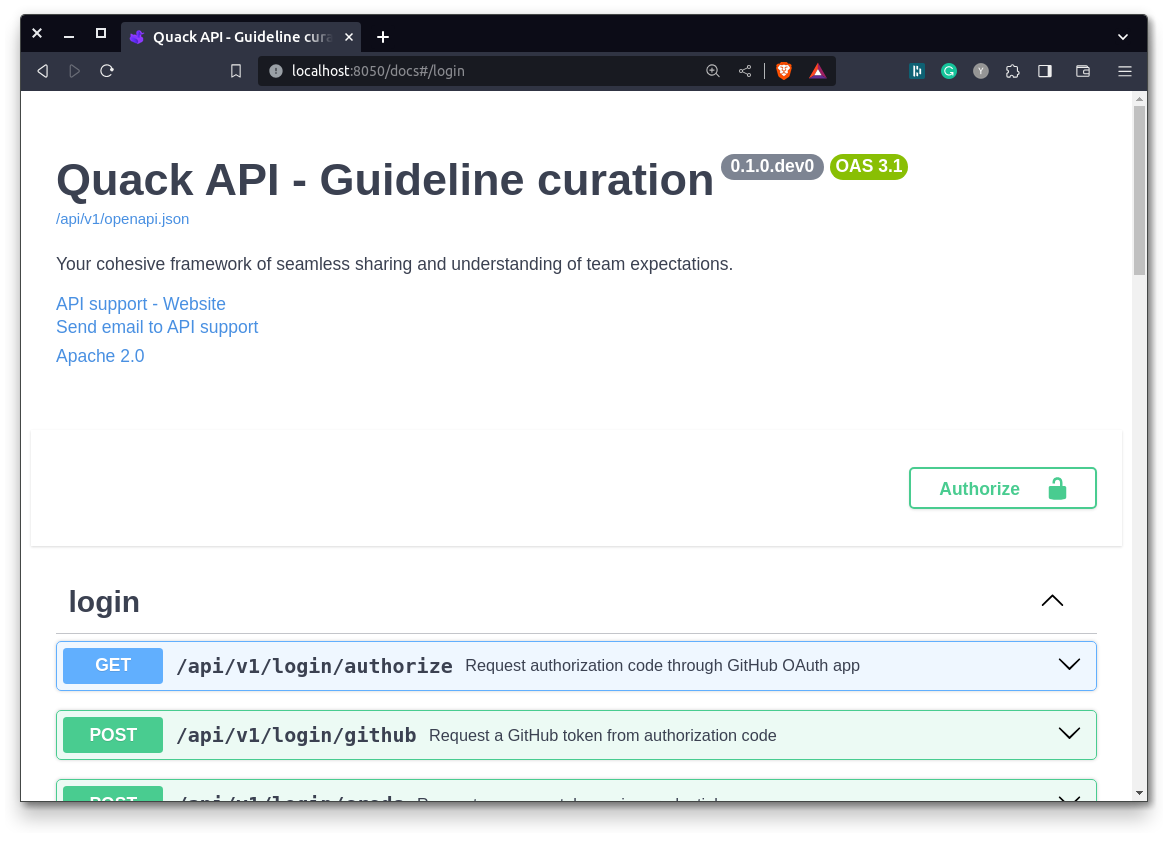

`make run`

You can now navigate to http://localhost:8050/docs to interact with the API (or send HTTP requests directly) and explore the documentation.
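For the HTTP route, a minimal Python sketch could check that the service is reachable before you start sending requests. Only the base URL and the `/docs` route come from the text above; the helper names `build_url` and `get_status` are hypothetical and not part of the project.

```python
import urllib.error
import urllib.request
from typing import Optional

API_BASE_URL = "http://localhost:8050"  # local address given above


def build_url(path: str, base_url: str = API_BASE_URL) -> str:
    """Join the base URL and a route without doubling or dropping slashes."""
    return f"{base_url.rstrip('/')}/{path.lstrip('/')}"


def get_status(url: str, timeout: float = 5.0) -> Optional[int]:
    """Return the HTTP status code of a GET request, or None if unreachable."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        # The server answered, but with an error status (e.g. 404).
        return err.code
    except OSError:
        # Connection refused, timeout, DNS failure: the service is not reachable.
        return None
```

Once the containers are up, `get_status(build_url("/docs"))` should return a status code rather than `None`.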

In order to stop the service, run:

`make stop`

Want high-quality code suggestions, but unsure whether a model fits your needs in terms of latency? The table below shows a latency benchmark for all tested LLMs from Ollama:

| Model | Ingestion mean (std) | Generation mean (std) |
|---|---|---|
| tinyllama:1.1b-chat-v1-q4_0 | 2014.63 tok/s (±12.62) | 227.13 tok/s (±2.26) |
| dolphin-phi:2.7b-v2.6-q4_0 | 684.07 tok/s (±3.85) | 122.25 tok/s (±0.87) |
| dolphin-mistral:7b-v2.6 | 291.94 tok/s (±0.4) | 60.56 tok/s (±0.15) |

This benchmark was performed over 20 iterations on the same input sequence, on a laptop, to better reflect the performance that common users can expect. The hardware setup includes an Intel(R) Core(TM) i7-12700H CPU and an NVIDIA GeForce RTX 3060 laptop GPU.
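To make the "mean (std)" columns concrete, here is a rough sketch of how such figures can be derived from Ollama responses. It assumes the timing fields returned by Ollama's generate endpoint (`prompt_eval_count`, `prompt_eval_duration`, `eval_count`, `eval_duration`, with durations in nanoseconds). The function names are hypothetical, and the project's own script may aggregate differently (for instance, with a population rather than a sample standard deviation).

```python
from statistics import mean, stdev

NS_PER_S = 1e9  # Ollama reports durations in nanoseconds


def throughput(response: dict) -> tuple[float, float]:
    """Return (ingestion, generation) speed in tok/s for one Ollama response."""
    ingestion = response["prompt_eval_count"] / response["prompt_eval_duration"] * NS_PER_S
    generation = response["eval_count"] / response["eval_duration"] * NS_PER_S
    return ingestion, generation


def summarize(responses: list[dict]) -> dict[str, tuple[float, float]]:
    """Aggregate per-run speeds into (mean, sample std) pairs.

    Needs at least two runs, since a sample std is undefined for one value.
    """
    ingestion, generation = zip(*(throughput(r) for r in responses))
    return {
        "ingestion": (mean(ingestion), stdev(ingestion)),
        "generation": (mean(generation), stdev(generation)),
    }
```

Calling `summarize` on the list of responses collected over the iterations yields one `(mean, std)` pair per column of the table.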

You can run this latency benchmark for any Ollama model on your hardware as follows:

`python scripts/evaluate_ollama_latency.py dolphin-mistral:7b-v2.6-dpo-laser-q4_0 --endpoint http://localhost:3000`

All script arguments can be checked using `python scripts/evaluate_ollama_latency.py --help`

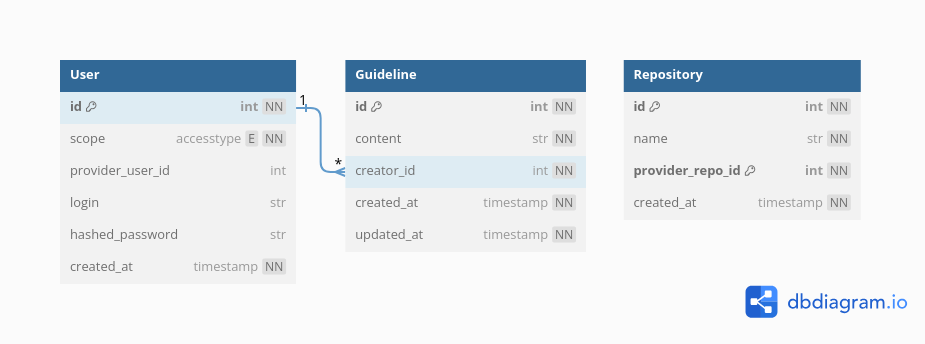

The core feature of the back-end is to interact with the metadata tables. For the service to be useful for codebase analysis, it introduces several tables/object types, described as follows:

- Users: stores the hashed credentials and access level for users & devices.

- Repository: metadata of installed repositories.

- Guideline: metadata of curated guidelines.

Running the project requires the following tools:

- Docker

- Docker compose

- Make (optional)

The project was designed so that everything runs with Docker orchestration (standalone virtual environment), so you won't need to install any additional libraries.

In order to run the project, you will need to specify some information, which can be done using a `.env` file.

This file must hold the following information:

- `POSTGRES_DB`*: a name for the PostgreSQL database that will be created
- `POSTGRES_USER`*: a login for the PostgreSQL database
- `POSTGRES_PASSWORD`*: a password for the PostgreSQL database
- `SUPERADMIN_GH_PAT`: the GitHub token of the initial admin access (Generate a new token on GitHub, with no extra permissions = read-only)
- `SUPERADMIN_PWD`*: the password of the initial admin access
- `GH_OAUTH_ID`: the Client ID of the GitHub OAuth app (Create an OAuth app on GitHub, pointing to your Quack dashboard w/ callback URL)
- `GH_OAUTH_SECRET`: the secret of the GitHub OAuth app (Generate a new client secret on the created OAuth app)

Values marked with * can be set to whatever you like.

Optionally, the following information can be added:

- `SECRET_KEY`*: if set, tokens can be reused between sessions. All instances sharing the same secret key can use the same token.
- `OLLAMA_MODEL`: the model tag in the Ollama library that will be used for the API.
- `SENTRY_DSN`: the DSN for your Sentry project, which monitors back-end errors and reports them back.
- `SERVER_NAME`*: the server tag that will be used to report events to Sentry.
- `POSTHOG_KEY`: the project API key for PostHog.
- `SLACK_API_TOKEN`: the App key for your Slack bot (Create New App on Slack, go to OAuth & Permissions and generate a bot User OAuth Token).
- `SLACK_CHANNEL`: the Slack channel where your bot will post events (defaults to `#general`, you have to invite the App to your channel).
- `SUPPORT_EMAIL`: the email used for support of your API.
- `DEBUG`: if set to false, silence debug logs.
- `OPENAI_API_KEY`**: your API key for OpenAI (Create new secret key on OpenAI)

Values marked with ** are deprecated.

Your `.env` file should look similar to `.env.example`.

The file should be placed in the same folder as your `docker-compose.yml`.
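Before starting the containers, it can help to confirm that no mandatory value is missing. The sketch below is not part of the project: it assumes a plain `KEY=VALUE` file format, and the helper names `parse_env` and `missing_keys` are hypothetical. The key names come from the mandatory list above.

```python
# Mandatory keys, taken from the list of required values above
REQUIRED_KEYS = (
    "POSTGRES_DB",
    "POSTGRES_USER",
    "POSTGRES_PASSWORD",
    "SUPERADMIN_GH_PAT",
    "SUPERADMIN_PWD",
    "GH_OAUTH_ID",
    "GH_OAUTH_SECRET",
)


def parse_env(text: str) -> dict[str, str]:
    """Parse simple KEY=VALUE lines, skipping blank lines and # comments."""
    values = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Drop surrounding whitespace and optional quotes around the value.
        values[key.strip()] = value.strip().strip("'\"")
    return values


def missing_keys(text: str) -> list[str]:
    """Return the required keys that are absent or empty, in listing order."""
    values = parse_env(text)
    return [key for key in REQUIRED_KEYS if not values.get(key)]
```

For instance, `missing_keys(pathlib.Path(".env").read_text())` returns an empty list when every mandatory value is set.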

Any sort of contribution is greatly appreciated!

You can find a short guide in CONTRIBUTING to help grow this project! And if you're interested, you can join us on

Copyright (C) 2023-2024, Quack AI.

This program is licensed under the Apache License 2.0. See LICENSE or go to https://www.apache.org/licenses/LICENSE-2.0 for full license details.
