This tool scrapes the content of the latest news articles from The Economist website.

- First, I examined the website's structure to understand its layout. Scraping by HTML tags would be time-consuming and fragile, since the markup can change over time, so I looked for an API that would let me fetch and parse the data instead. The Economist provides an RSS feed, so I parse that feed to extract the latest news section, which proved to be a more reliable and efficient method.

- Secondly, I selected "The World This Week" section as the source for the latest news.

- Next, I chose pydantic-xml, a library that deserializes XML data into Python objects. It supports the lxml backend, which performs better because it is written in C.

- I ran into another challenge when working with XML files: handling empty fields such as description. To address this, I had to use the Union data type. The pydantic-xml documentation did not provide any guidance on this.

-

In order to prevent duplication within my data structure, I opted to utilize the set data structure. This decision required me to implement the

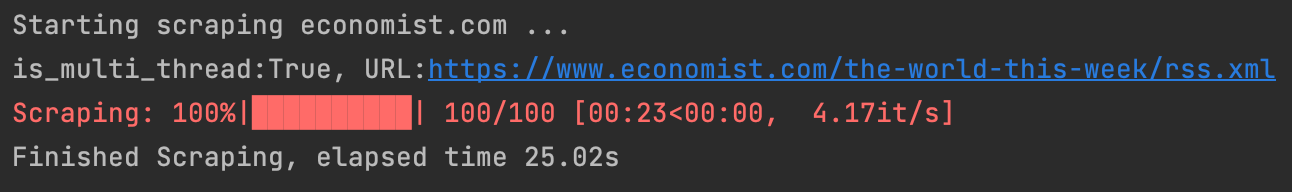

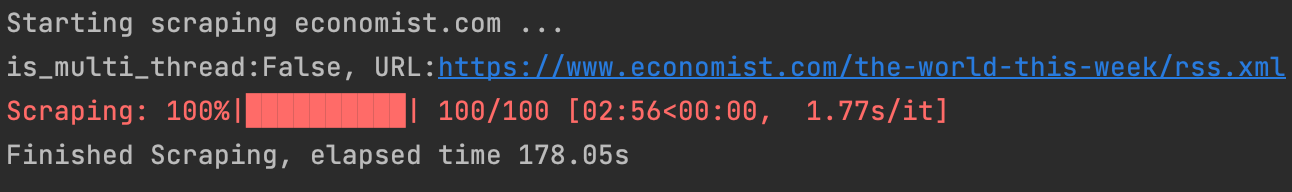

__eq__and__hash__functions within the Item schema class. By doing so, I was able to ensure proper comparison and hashing functionality for the set. - I added support for multithreading to improve the performance of the scraping process. Since scraping is an I/O bound task, using a single thread can result in slow execution. By utilizing multithreading, I was able to observe significant improvements in the results section.
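The notes above can be sketched roughly as follows. This is a minimal illustration, not the project's actual code: it uses the standard library's `xml.etree.ElementTree` in place of pydantic-xml so it runs without extra dependencies. The `parse_feed` and `fetch_all` helpers, the choice of `link` as the deduplication key, the injected `fetch` callable, and the `example.com` URLs are all assumptions made for the example.

```python
from __future__ import annotations

import xml.etree.ElementTree as ET
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(eq=False)
class Item:
    """One RSS entry. `description` may be missing or empty in the feed."""
    title: str
    link: str
    description: Optional[str] = None

    # Assumption: two items are the same article when their links match.
    def __eq__(self, other: object) -> bool:
        if not isinstance(other, Item):
            return NotImplemented
        return self.link == other.link

    def __hash__(self) -> int:
        return hash(self.link)


def parse_feed(xml_text: str) -> set[Item]:
    """Parse RSS text into a set of items; the set drops duplicates."""
    root = ET.fromstring(xml_text)
    items = set()
    for node in root.iter("item"):
        items.add(Item(
            title=node.findtext("title", default=""),
            link=node.findtext("link", default=""),
            # An empty or absent <description> becomes None.
            description=node.findtext("description") or None,
        ))
    return items


def fetch_all(items: set[Item], fetch: Callable[[str], str],
              max_workers: int = 8) -> dict[str, str]:
    """Fetch every article body concurrently (I/O-bound work suits threads)."""
    ordered = sorted(items, key=lambda item: item.link)
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        bodies = pool.map(fetch, (item.link for item in ordered))
        return {item.link: body for item, body in zip(ordered, bodies)}


# Hypothetical feed: the first and third entries are duplicates.
SAMPLE_FEED = """<rss version="2.0"><channel>
<item><title>Politics</title><link>https://example.com/a</link><description>Summary</description></item>
<item><title>Business</title><link>https://example.com/b</link><description/></item>
<item><title>Politics</title><link>https://example.com/a</link><description>Summary</description></item>
</channel></rss>"""
```

In a real run, `fetch` would be a function that downloads the page at the given URL; passing it in as a parameter keeps the sketch testable without network access.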

- Install requirements:

  - Install "python >= 3.8"

  - Install "virtualenv": `pip install virtualenv`

- Create the virtual environment using the following command: `virtualenv .env`

- Activate the virtual environment:

  - For Linux: `source .env/bin/activate`

  - For Windows: `.\.env\Scripts\activate`

- Now you can install the libraries and dependencies listed in the requirements file: `pip install -r ./requirements.txt`

- You can exit the virtual environment using the following command: `deactivate`

- Run the application (while the virtual environment is active): `python app.py`