Purpose: Use OSM vector data to train a convolutional neural network (CNN) in AWS SageMaker to detect buildings, roads, and other objects.

Inputs: the location of a HOT-OSM task, or a city/state/country of interest, plus a web URL to a DG Cloud Optimized GeoTIFF (COG).

Outputs: TMS (slippy map) training data generated from the OSM vectors, plus an AWS SageMaker model endpoint.
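Slippy-map tiles are addressed by a zoom level and an (x, y) column/row, and matching OSM vectors to imagery tiles comes down to standard Web Mercator tile math. The sketch below is not code from this repo (the function names are hypothetical); it finds the tile containing a lon/lat point and the lon/lat bounds of a tile. It assumes the XYZ row order used by most slippy maps (y = 0 at the north edge); strict TMS counts rows from the south, which the last helper converts.

```python
import math
from typing import Tuple


def lonlat_to_tile(lon: float, lat: float, zoom: int) -> Tuple[int, int]:
    """Return the XYZ (x, y) index of the tile containing a WGS84 lon/lat point."""
    n = 2 ** zoom
    x = int((lon + 180.0) / 360.0 * n)
    y = int((1.0 - math.asinh(math.tan(math.radians(lat))) / math.pi) / 2.0 * n)
    # Clamp so points on the east/south edge (e.g. lon = 180) stay in range.
    return min(max(x, 0), n - 1), min(max(y, 0), n - 1)


def tile_bounds(x: int, y: int, zoom: int) -> Tuple[float, float, float, float]:
    """Return (west, south, east, north) in degrees for XYZ tile (x, y, zoom)."""
    n = 2 ** zoom

    def lon(xt: float) -> float:
        return xt / n * 360.0 - 180.0

    def lat(yt: float) -> float:
        # Inverse Web Mercator: fractional tile row -> latitude in degrees.
        return math.degrees(math.atan(math.sinh(math.pi * (1.0 - 2.0 * yt / n))))

    return lon(x), lat(y + 1), lon(x + 1), lat(y)


def xyz_to_tms_y(y: int, zoom: int) -> int:
    """Flip an XYZ row index to the TMS convention (rows counted from the south)."""
    return 2 ** zoom - 1 - y
```

For example, `tile_bounds(*lonlat_to_tile(lon, lat, 18), 18)` gives the bounding box you would use to select the OSM features and imagery pixels for the zoom-18 tile around a point.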

There are TWO parts to this workflow. The first is best illustrated by the ipynb tutorial, which walks you through turning OSM vector data into ML training data. Once the training data is generated, you can use the following scripts to create a virtual environment for AWS SageMaker training.

To use this tutorial, the best starting point is the two ipynb notebooks (Part I and Part II).

This tutorial uses a Python3 virtual environment. See details below and in the setup.sh file.

You can also run the notebooks via docker. See Docker Install and Run below.

- Set up a virtual environment:

```
ubuntu$ virtualenv -p python3 sagemaker_trans
ubuntu$ source sagemaker_trans/bin/activate
(sagemaker_trans) ubuntu$ cd sagemaker_trans/
```

- Clone this repo onto your local machine:

```
(sagemaker_trans) ubuntu$ git clone https://github.com/shaystrong/sagely.git
(sagemaker_trans) ubuntu$ cd sagely/
```

- Run the setup, which installs the necessary libraries, then download the data:

```
(sagemaker_trans) ubuntu$ sh setup.sh
(sagemaker_trans) ubuntu$ sh get_data.sh
```

This downloads the MXNet .rec files generated at the end of the Part I ipynb, as well as the full set of DG tiles that we will run inference on later.

From here you can go straight to SageMaker training (Part II), or follow the label generation process from the beginning in Part I. The Part II notebook only runs a SageMaker training job and creates an endpoint.

Start the Jupyter notebook server in the background with nohup. This creates a 'nohup.out' file that receives all CLI logging.

```
(sagemaker_trans) ubuntu$ nohup jupyter notebook &
```

Docker Install and Run

Prerequisites: install Docker.

Build the Docker image (this can take up to 10 minutes):

```
docker build -t sagely .
```

Run the Docker container:

```
docker run -it -p 8888:8888 sagely
```

The above command should return a URL like:

http://127.0.0.1:8888/?token=a41763eea9bf3ce73104e38dbddc161dafc175e83106eaa3

which you can copy / paste into a browser to open up jupyter notebook and run the notebooks described below.

Run the notebooks once your web browser pops open the Jupyter Notebook site. This is a local site running only on your machine.

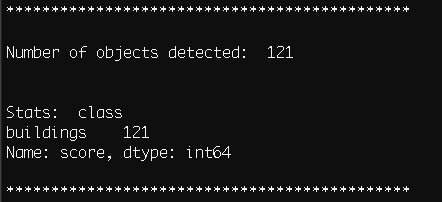

Assuming you have created an endpoint, you can use it to predict labels. Edit the test.sh script to include your endpoint (mod), data location path (pa), and AWS S3 role (ro). The object detection threshold (t) can also be configured; it is set to 0.6 for this test.

python3 endpoint_infer_slippygeo.py -mod <your-endpoint> \

-c buildings \

-pa tiles/ \

-ro <role> \

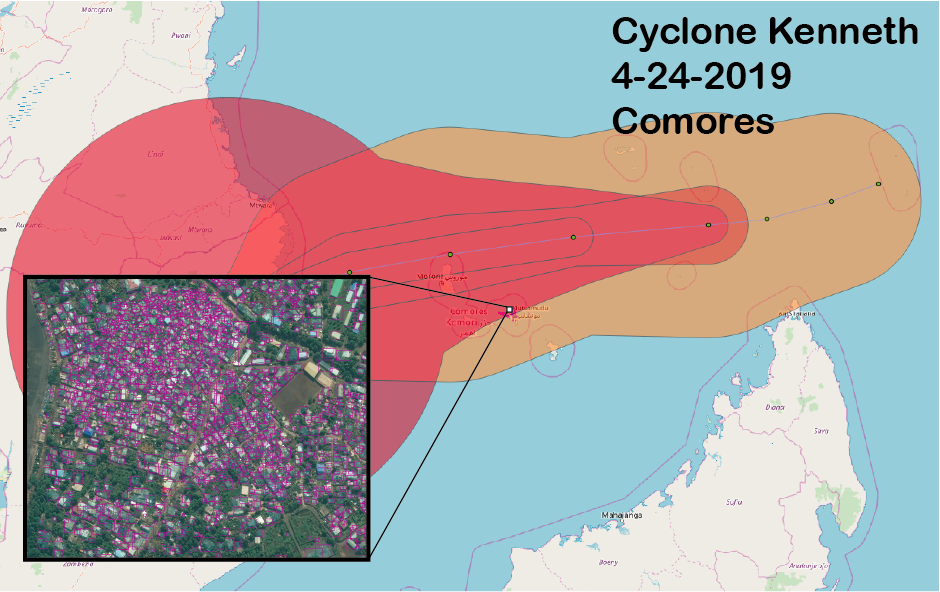

-t 0.6(sagemaker_trans) ubuntu$ sh test.shUsing the demo data discussed in the tutorial ipynb and limited rec files provided, this is the output I get. You can test my endpoint for consistency (Shay to provide).
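The thresholding and geolocation steps behind a script like `endpoint_infer_slippygeo.py` can be sketched as follows. This is a hypothetical illustration, not the script's actual code: it assumes the endpoint was trained with SageMaker's built-in object detection algorithm, whose JSON response has the form `{"prediction": [[class_id, score, xmin, ymin, xmax, ymax], ...]}` with box coordinates normalized to [0, 1], and that each tile is addressed by XYZ (x, y, zoom). The function names and the `image/jpeg` default content type are assumptions.

```python
import json
import math
from typing import Dict, List, Tuple


def filter_detections(body: bytes, threshold: float = 0.6) -> List[Dict[str, float]]:
    """Parse an object-detection JSON response and keep detections with score >= threshold."""
    kept = []
    for class_id, score, xmin, ymin, xmax, ymax in json.loads(body)["prediction"]:
        if score >= threshold:
            kept.append({"class_id": int(class_id), "score": score,
                         "xmin": xmin, "ymin": ymin, "xmax": xmax, "ymax": ymax})
    return kept


def _tile_to_lonlat(xt: float, yt: float, zoom: int) -> Tuple[float, float]:
    """Convert fractional XYZ tile coordinates to (lon, lat) in degrees."""
    n = 2 ** zoom
    lon = xt / n * 360.0 - 180.0
    lat = math.degrees(math.atan(math.sinh(math.pi * (1.0 - 2.0 * yt / n))))
    return lon, lat


def box_to_lonlat(det: Dict[str, float], x: int, y: int, zoom: int) -> Tuple[float, float, float, float]:
    """Map a normalized box on tile (x, y, zoom) to (west, south, east, north).

    Image rows grow downward and XYZ rows grow southward, so ymin is the northern edge.
    """
    west, north = _tile_to_lonlat(x + det["xmin"], y + det["ymin"], zoom)
    east, south = _tile_to_lonlat(x + det["xmax"], y + det["ymax"], zoom)
    return west, south, east, north


def predict_tile(endpoint_name: str, image_path: str, threshold: float = 0.6,
                 content_type: str = "image/jpeg") -> List[Dict[str, float]]:
    """Send one tile image to a SageMaker endpoint and return thresholded detections."""
    import boto3  # imported lazily so the helpers above work without AWS dependencies

    runtime = boto3.client("sagemaker-runtime")
    with open(image_path, "rb") as f:
        response = runtime.invoke_endpoint(EndpointName=endpoint_name,
                                           ContentType=content_type, Body=f.read())
    return filter_detections(response["Body"].read(), threshold)
```

The latitude edges are computed through the inverse Web Mercator formula rather than linear interpolation, because latitude is not linear in tile rows; longitude is, so the two treatments differ.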

When you are done, deactivate and remove the virtual environment:

```
deactivate
rm -rf /path/to/venv/sagemaker_trans/
```

See the OSM ML, Part II.

Watch your model train on SageMaker! You can log in to the AWS console to follow the training progress and review all your parameters.

Your OSM vector data may be messy and/or misaligned with the imagery. It is up to you to manually inspect, modify, and cull the generated training data for optimal model performance. No step here does this for you. It is a critical step, and as a Data Scientist you should own it.
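Automated checks cannot catch OSM/imagery misalignment, but a few mechanical problems can be screened out before manual review. The following is a hypothetical sketch (the function name, the 256-pixel tile size, and the area and visibility thresholds are all assumptions, not values from this repo) that clips pixel-space boxes to the tile and drops slivers and boxes that lie mostly outside it.

```python
from typing import List, Tuple

# (xmin, ymin, xmax, ymax) in pixel coordinates of one tile image.
Box = Tuple[float, float, float, float]


def cull_boxes(boxes: List[Box], tile_size: int = 256,
               min_area: float = 16.0, min_visible: float = 0.5) -> List[Box]:
    """Clip boxes to the tile; drop degenerate, tiny, or mostly off-tile boxes."""
    kept = []
    for xmin, ymin, xmax, ymax in boxes:
        area = max(0.0, xmax - xmin) * max(0.0, ymax - ymin)
        if area <= 0.0:
            continue  # degenerate or inverted box
        # Clip the box to the tile extent.
        cx0, cy0 = max(xmin, 0.0), max(ymin, 0.0)
        cx1, cy1 = min(xmax, tile_size), min(ymax, tile_size)
        clipped = max(0.0, cx1 - cx0) * max(0.0, cy1 - cy0)
        # Keep only boxes that are large enough and mostly inside the tile.
        if clipped >= min_area and clipped / area >= min_visible:
            kept.append((cx0, cy0, cx1, cy1))
    return kept
```

Whatever thresholds you choose, spot-check the surviving labels against the imagery; this filter only removes obviously unusable boxes.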