Code for Self-Exploring Language Models: Active Preference Elicitation for Online Alignment.

Authors: Shenao Zhang¹, Donghan Yu², Hiteshi Sharma², Ziyi Yang², Shuohang Wang², Hany Hassan², Zhaoran Wang¹.

¹Northwestern University, ²Microsoft

🤗 Zephyr Models

🤗 Llama-3 Models

🤗 Phi-3 Models

To run the code in this project, first create a Python virtual environment, e.g. with Conda:

    conda create -n selm python=3.10 && conda activate selm

You can then install the remaining package dependencies as follows:

    python -m pip install .

You will also need Flash Attention 2 installed, which can be done by running:

    python -m pip install flash-attn==2.3.6 --no-build-isolation

Next, log into your Hugging Face account as follows:

    huggingface-cli login

Finally, install Git LFS so that you can push models to the Hugging Face Hub:

    sudo apt-get install git-lfs

To train SELM on Meta-Llama-3-8B-Instruct, you first need to request access to the model. To train SELM on Phi-3-mini-4k-instruct, upgrade vLLM with `pip install vllm==0.4.2`.
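Before starting a training run, it can help to confirm that the command-line tools from the setup steps are on your `PATH`. The following is a minimal sketch and not part of the repository: `check_tools` is a hypothetical helper, and the tool names passed to it are assumptions based on the commands used in this README.

```shell
# Hypothetical helper (not part of this repo): report which tools are missing.
check_tools() {
  missing=0
  for tool in "$@"; do
    if command -v "$tool" >/dev/null 2>&1; then
      echo "found:   $tool"
    else
      echo "missing: $tool" >&2
      missing=$((missing + 1))
    fi
  done
  # Succeed only when every requested tool was found.
  [ "$missing" -eq 0 ]
}

# Assumed tool list; adjust it to match your own setup.
check_tools conda python huggingface-cli git-lfs \
  || echo "Install the missing tools before training." >&2
```

The helper only checks that each executable can be found; it does not verify versions or that you are logged in to the Hugging Face Hub.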

Replace HF_USERNAME in train_zephyr.sh, train_llama.sh, and train_phi.sh with your Hugging Face username.
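The replacement can be done by hand in an editor, or scripted. The sketch below is one possible way to do it with `sed`, assuming the scripts sit in the current directory and that `HF_USERNAME` appears in them as a literal placeholder string. `replace_hf_username` and the value `your-username` are hypothetical names introduced here for illustration.

```shell
# Hypothetical helper (not part of this repo): substitute the HF_USERNAME
# placeholder in each given file, keeping a .bak copy of the original.
replace_hf_username() {
  hf_user="$1"
  shift
  for f in "$@"; do
    if [ -f "$f" ]; then
      # -i.bak edits in place and works with both GNU and BSD sed.
      sed -i.bak "s/HF_USERNAME/${hf_user}/g" "$f"
    else
      echo "skipping $f (not found)" >&2
    fi
  done
}

# "your-username" is a placeholder; put your own Hugging Face username here.
replace_hf_username "your-username" train_zephyr.sh train_llama.sh train_phi.sh
```

This assumes the username contains no `/` or `&` characters, which would need escaping in the `sed` expression. The `.bak` files can be deleted once you have checked the edited scripts.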

After the above preparation, run the following commands:

Train SELM on Zephyr-SFT:

    sh run_zephyr.sh

Train SELM on Meta-Llama-3-8B-Instruct:

    sh run_llama.sh

Train SELM on Phi-3-mini-4k-instruct:

sh run_phi.sh@article{zhang2024self,

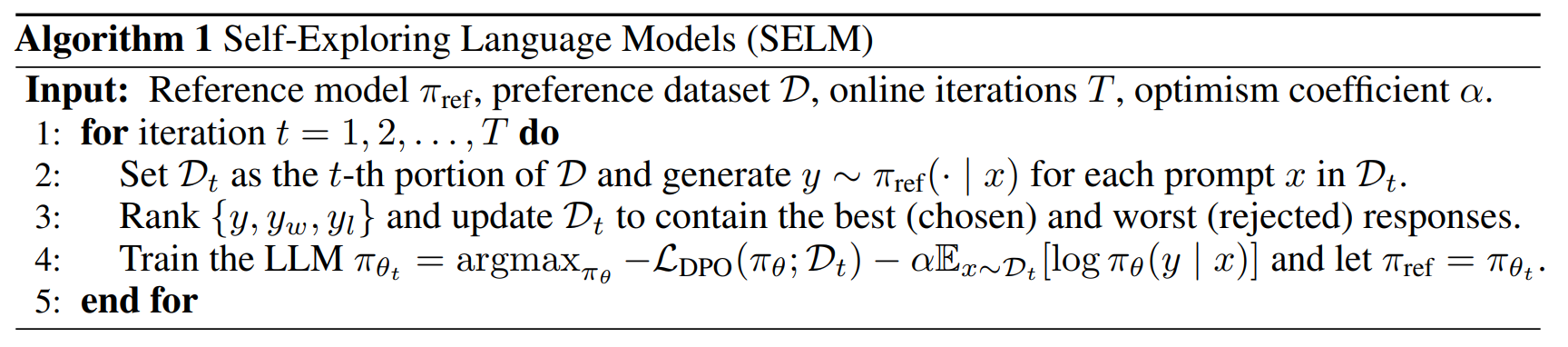

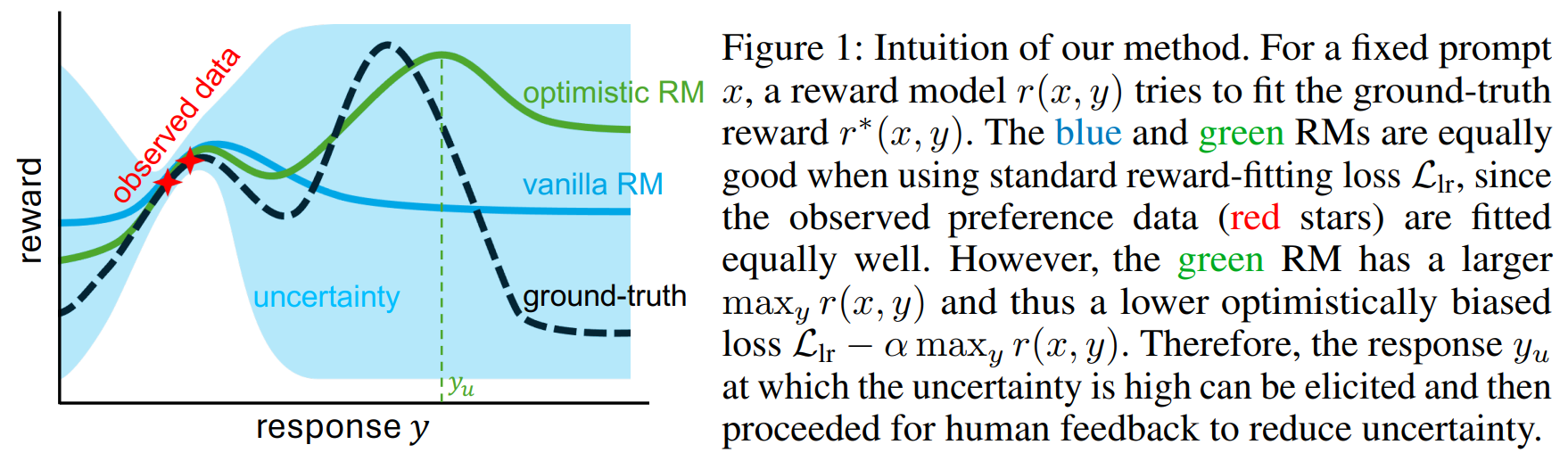

title={Self-Exploring Language Models: Active Preference Elicitation for Online Alignment},

author={Zhang, Shenao and Yu, Donghan and Sharma, Hiteshi and Yang, Ziyi and Wang, Shuohang and Hassan, Hany and Wang, Zhaoran},

journal={arXiv preprint arXiv:2405.19332},

year={2024}

}This repo is built upon The Alignment Handbook. We thank the authors for their great work.