Updating...

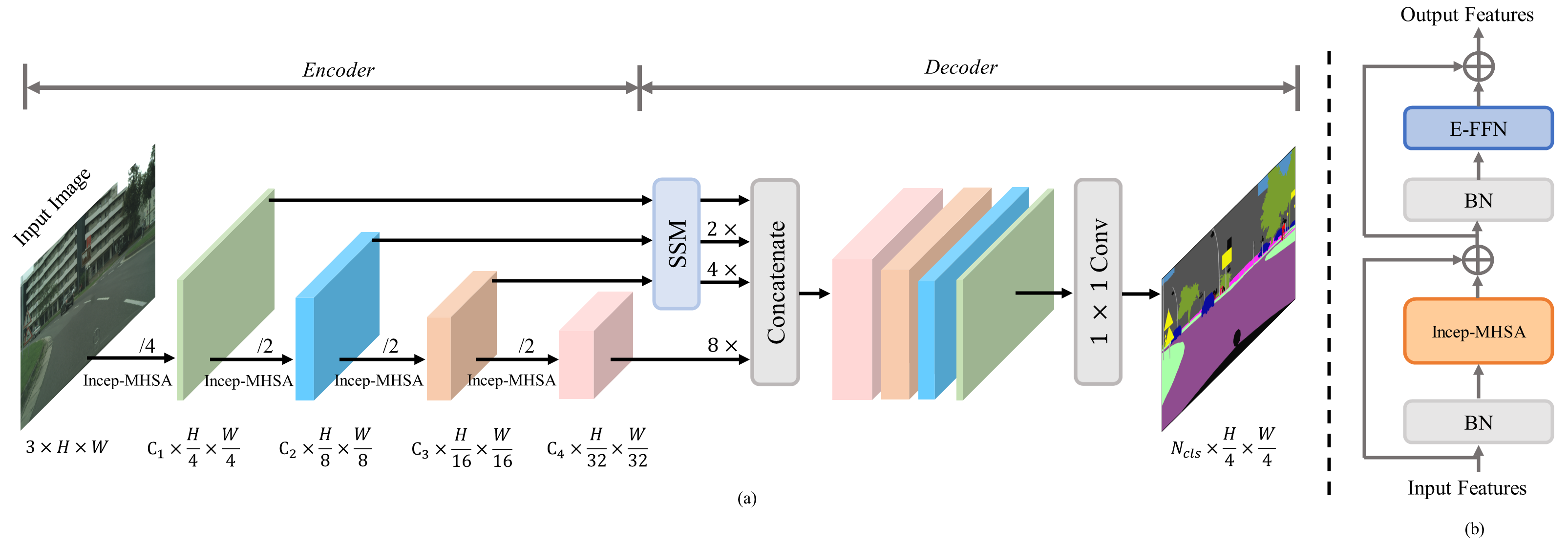

IncepFormer: Efficient Inception Transformer with Spatial Selection Decoder for Semantic Segmentation

We use MMSegmentation v0.29.0 as the codebase.

For installation and data preparation, please refer to the guidelines in MMSegmentation v0.29.0.

Other requirements:

pip install timm==0.4.12

An example setup that works for me (CUDA 11.0 and PyTorch 1.7.0):

pip install torchvision==0.8.0

pip install timm==0.4.12

pip install mmcv-full==1.5.3

pip install opencv-python==4.6.0.66

cd IncepFormer && pip install -e .
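Before training, it can help to confirm that the installed packages match the example versions above. The following is a minimal sketch that uses only the standard library's `importlib.metadata`; the `PINS` table simply mirrors the example setup (torch is pinned to the PyTorch 1.7.0 mentioned above), and `installed_version`/`check_versions` are hypothetical helpers, not part of IncepFormer or MMSegmentation.

```python
from importlib import metadata

# Versions from the example setup above (hypothetical table, edit as needed).
PINS = {
    "torch": "1.7.0",
    "torchvision": "0.8.0",
    "timm": "0.4.12",
    "mmcv-full": "1.5.3",
    "opencv-python": "4.6.0.66",
}


def installed_version(package, lookup=metadata.version):
    """Return the installed version of `package`, or None if it is missing."""
    # Some older importlib.metadata versions may not treat '-' and '_' in
    # distribution names as equivalent, so try both spellings.
    for name in (package, package.replace("-", "_")):
        try:
            # Drop local version tags such as "+cu110" from CUDA wheels.
            return lookup(name).split("+")[0]
        except metadata.PackageNotFoundError:
            continue
    return None


def check_versions(pins, lookup=metadata.version):
    """Return {package: (expected, installed_or_None)} for every mismatch."""
    mismatches = {}
    for package, expected in pins.items():
        installed = installed_version(package, lookup)
        if installed != expected:
            mismatches[package] = (expected, installed)
    return mismatches


if __name__ == "__main__":
    problems = check_versions(PINS)
    if not problems:
        print("All pinned packages match.")
    for package, (expected, installed) in problems.items():
        print(f"{package}: expected {expected}, found {installed or 'not installed'}")
```

A mismatch is not necessarily fatal; the check only flags where your environment differs from the one reported to work.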

Download the weights pretrained on ImageNet-1K (Google Drive) and put them in a folder pretrained/.
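A quick sanity check that the downloaded weights landed where expected can save a failed training launch. This sketch assumes the checkpoints are PyTorch `.pth`/`.pt` files; `find_pretrained` is a hypothetical helper, and the exact filename each config loads should be checked in the config itself.

```python
from pathlib import Path


def find_pretrained(folder="pretrained", suffixes=(".pth", ".pt")):
    """List checkpoint files in `folder`, raising if none are present."""
    root = Path(folder)
    if not root.is_dir():
        raise FileNotFoundError(f"{root}/ does not exist; create it and put the weights there")
    # Keep only files with a checkpoint-like suffix, in a stable order.
    files = sorted(p for p in root.iterdir() if p.is_file() and p.suffix in suffixes)
    if not files:
        raise FileNotFoundError(f"no {', '.join(suffixes)} files found in {root}/")
    return files


if __name__ == "__main__":
    try:
        for path in find_pretrained():
            print(path)
    except FileNotFoundError as err:
        print(err)
```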

Example: train IncepFormer-T on ADE20K:

# Single-GPU training

python tools/train.py local_configs/incepformer/Tiny/tiny_ade_512×512_160k.py

# Multi-GPU training

./tools/dist_train.sh local_configs/incepformer/Tiny/tiny_ade_512×512_160k.py <GPU_NUM>
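In MMSegmentation's config naming convention, a field like 512x512 is the training crop size and 160k is the schedule length in iterations. Assuming the IncepFormer configs follow that convention (inferred from the file name above, not confirmed by it), a small parser makes the fields explicit; `parse_config_name` and its regular expression are hypothetical.

```python
import re

# Assumed pattern: <variant>_<dataset>_<H>x<W>_<N>k.py, taken from the example
# config name above. Both the letter "x" and the sign "×" are accepted.
CONFIG_RE = re.compile(
    r"^(?P<variant>[a-z]+)_(?P<dataset>[a-z0-9]+)_(?P<h>\d+)[x×](?P<w>\d+)_(?P<iters>\d+)k\.py$"
)


def parse_config_name(path):
    """Split a config filename into variant, dataset, crop size and iterations."""
    name = path.rsplit("/", 1)[-1]
    match = CONFIG_RE.match(name)
    if match is None:
        raise ValueError(f"unrecognised config name: {name}")
    return {
        "variant": match["variant"],
        "dataset": match["dataset"],
        "crop_size": (int(match["h"]), int(match["w"])),
        "iterations": int(match["iters"]) * 1000,
    }
```

For example, `parse_config_name("local_configs/incepformer/Tiny/tiny_ade_512×512_160k.py")` returns `{'variant': 'tiny', 'dataset': 'ade', 'crop_size': (512, 512), 'iterations': 160000}`.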

Example: evaluate IncepFormer-T on ADE20K:

# Single-GPU testing

python tools/test.py local_configs/incepformer/Tiny/tiny_ade_512×512_160k.py /path/to/checkpoint_file --eval mIoU

# Multi-GPU testing

./tools/dist_test.sh local_configs/incepformer/Tiny/tiny_ade_512×512_160k.py /path/to/checkpoint_file <GPU_NUM> --eval mIoU
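ADE20K results are conventionally reported as mean Intersection-over-Union (mIoU), which MMSegmentation 0.x computes when `tools/test.py` is given `--eval mIoU`. The plain-Python sketch below only illustrates the metric and is not MMSegmentation's array-based implementation: per-class IoU is intersection / (predicted area + ground-truth area - intersection), accumulated over all pixels, and classes absent from both prediction and ground truth are left out of the mean. The `ignore_index=255` default mirrors MMSegmentation's; `mean_iou` is a hypothetical helper.

```python
import math


def mean_iou(preds, labels, num_classes, ignore_index=255):
    """Per-class IoU accumulated over all pixels, plus their mean (mIoU).

    preds and labels are flat sequences of class ids of equal length.
    """
    intersect = [0] * num_classes
    area_pred = [0] * num_classes
    area_label = [0] * num_classes
    for p, t in zip(preds, labels):
        if t == ignore_index:
            continue  # pixels without a ground-truth label are not scored
        area_pred[p] += 1
        area_label[t] += 1
        if p == t:
            intersect[t] += 1
    ious = []
    for inter, a_pred, a_label in zip(intersect, area_pred, area_label):
        union = a_pred + a_label - inter
        # A class absent from both prediction and ground truth has no IoU.
        ious.append(inter / union if union else math.nan)
    valid = [iou for iou in ious if not math.isnan(iou)]
    miou = sum(valid) / len(valid) if valid else math.nan
    return ious, miou
```

For example, with predictions `[0, 0, 1, 1, 2, 2]` and labels `[0, 1, 1, 1, 2, 255]`, the per-class IoUs are 0.5, 2/3 and 1.0 (the last pixel is ignored), so the mIoU is about 0.722.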

# Multi-GPU, multi-scale testing

./tools/dist_test.sh local_configs/incepformer/Tiny/tiny_ade_512×512_160k.py /path/to/checkpoint_file <GPU_NUM> --aug-test --eval mIoU
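For reference, MMSegmentation 0.x's `tools/test.py` implements `--aug-test` by overriding the multi-scale/flip step of the test pipeline with image ratios from 0.5 to 1.75 plus horizontal flipping, and then averaging the per-view predictions. The sketch below only illustrates that arithmetic in plain Python: the base scale `(2048, 512)` is the usual MMSegmentation ADE20K test scale and is an assumption about the IncepFormer config, and `tta_views`/`fuse` are hypothetical helpers.

```python
# Ratios that MMSegmentation 0.x's tools/test.py sets for --aug-test.
AUG_RATIOS = (0.5, 0.75, 1.0, 1.25, 1.5, 1.75)


def tta_views(img_scale=(2048, 512), ratios=AUG_RATIOS, flip=True):
    """Enumerate (scale, flipped) pairs; each pair costs one forward pass."""
    scales = [(int(img_scale[0] * r), int(img_scale[1] * r)) for r in ratios]
    flips = [False, True] if flip else [False]
    return [(scale, f) for scale in scales for f in flips]


def fuse(per_view_probs):
    """Average one pixel's class probabilities over views and take the argmax.

    Assumes every view's output was already resized back to the original image
    and un-flipped, so index k refers to the same class in every view.
    """
    n = len(per_view_probs)
    mean = [sum(column) / n for column in zip(*per_view_probs)]
    return max(range(len(mean)), key=mean.__getitem__), mean
```

With the default ratios and flipping, `tta_views()` yields 12 views, from `((1024, 256), False)` up to `((3584, 896), True)`, so multi-scale testing costs roughly twelve forward passes per image instead of one.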