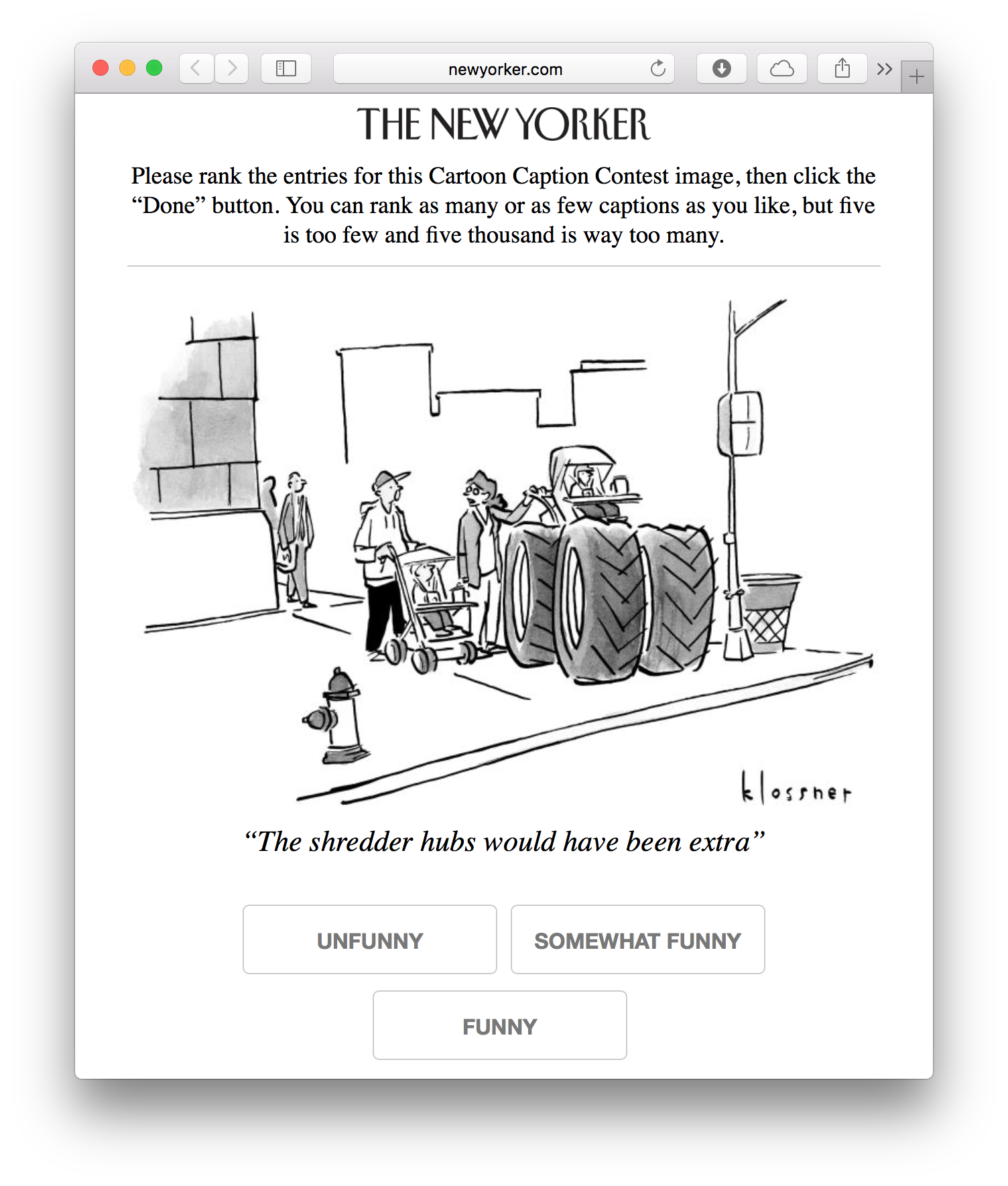

This repository contains data collected with the NEXT active machine learning system for The New Yorker caption contest. Users are shown queries like the ones below, and for each query we provide the question, the user's answer, and related information:

| New Yorker hosting | "How funny is this caption?" | "Which caption is funnier?" |
|---|---|---|
| Cardinal bandits | Cardinal bandits | Dueling bandits |

As of contest 598 (published 2018-01-07), we provide about 33 million ratings of 441,479 unique captions across 88 contests.

For each response, we record

- information on the user (participant ID, response time, timestamp, network delay, etc.)

- information on the question (the caption(s) shown, the user's answer, the algorithm that generated the query)

The data for an individual experiment is in the `contests/` directory. For example, caption contest 505 lives in `contests/random+adaptive/505/`. More description and detail is given in each individual folder.

These datasets come from The New Yorker's cartoon caption contest, in which users write a funny caption for a given cartoon. Our algorithms help determine the funniest captions (and we also provide unbiased data).

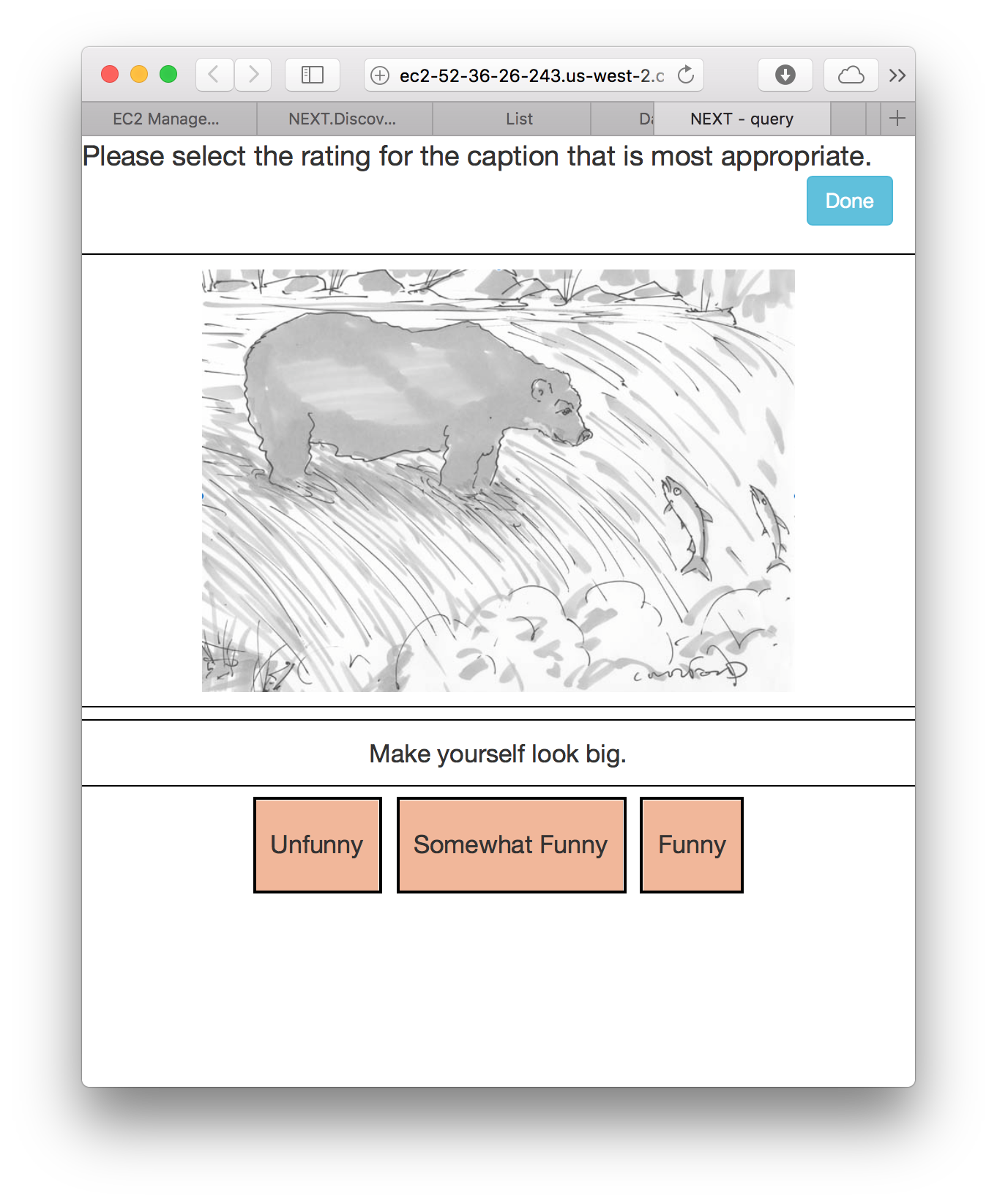

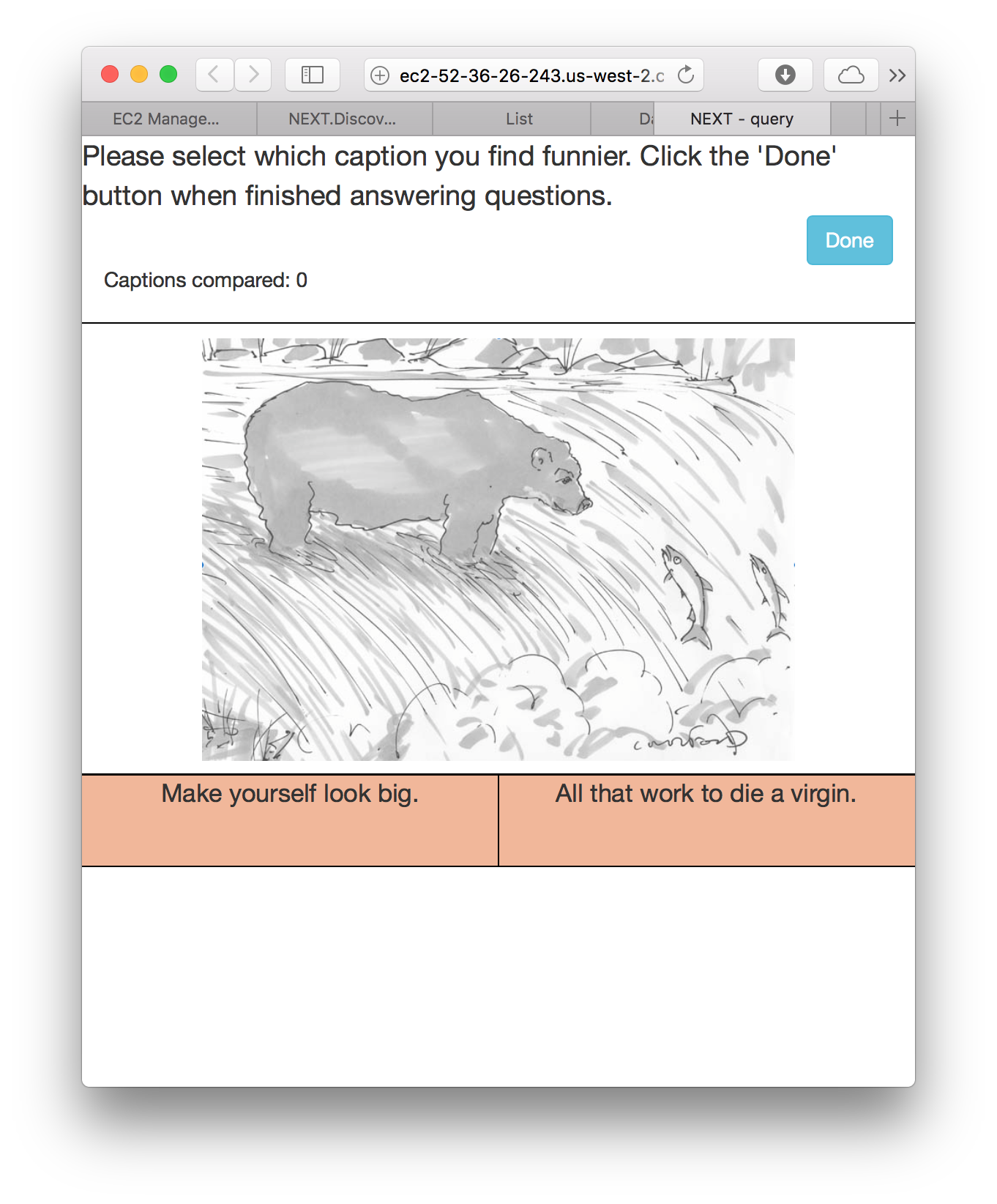

We ask users to rate how funny captions are, with questions like "Is the funnier caption on the left or right?" or "How funny is this caption: 'unfunny', 'somewhat funny', or 'funny'?" The exact questions are described in each folder, and each folder is named after the caption contest it belongs to.

We provide response data for every user: what each query consisted of, the user's answer, and other information about the response.

We provide:

- simplified response data as a CSV, with the headers described below

- full response data as a JSON object, which is parsed by get_responses_from_next/json_parse.py

- all possible captions

- the cartoon the captions were written for

The CSV columns common to every query are:

- Participant ID: The ID assigned to each participant. This ID is assigned when the page is visited; if the same user visits the page twice, they get two participant IDs.

- Response Time (s): How long the participant took to respond to the question. This accounts for network delay.

- Network delay (s): How long the question took to load.

- Timestamp: When the query was generated (and not when the query was answered)

- Alg label: The algorithm that chose the query. Random sampling is unbiased, while "Lil_UCB" adaptively chooses queries to find the funniest captions.

Columns for queries of the form "Which caption is funnier?":

- Left, Right: The captions that appeared on the left and on the right (two separate columns).

- Answer: What the user selected as the funnier caption.
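As an illustration of how the dueling columns above can be used, here is a minimal sketch that computes how often each caption won the duels it appeared in. It assumes the CSV has columns named exactly `Left`, `Right` and `Answer`, and that `Answer` holds the text of the chosen caption; both are assumptions, so check a contest's CSV before relying on them. The function name `dueling_win_rates` is hypothetical.

```python
import csv
import io
from collections import Counter


def dueling_win_rates(csv_text):
    """Return {caption: fraction of its duels won} from dueling-query rows.

    Assumes columns named "Left", "Right" and "Answer", and that "Answer"
    holds the text of the chosen caption (not the strings "left"/"right").
    """
    wins, appearances = Counter(), Counter()
    for row in csv.DictReader(io.StringIO(csv_text)):
        appearances[row["Left"]] += 1
        appearances[row["Right"]] += 1
        wins[row["Answer"]] += 1
    # Counter returns 0 for captions that never won.
    return {caption: wins[caption] / n for caption, n in appearances.items()}


example = "Left,Right,Answer\nA,B,A\nA,C,A\nB,C,C\n"
print(dueling_win_rates(example))  # {'A': 1.0, 'B': 0.0, 'C': 0.5}
```

Using `csv.DictReader` rather than splitting on commas matters here, since captions themselves often contain commas and are quoted in the CSV.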

Columns for queries of the form "How funny is this caption?":

- Target: The caption the user is asked to rate.

- Rating: How the user rated the caption: 1, 2, or 3, meaning unfunny, somewhat funny, or funny, respectively.
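For the "How funny is this caption?" queries, a minimal sketch like the one below tallies ratings per caption into the same `unfunny` / `somewhat_funny` / `funny` count columns that appear in the contest summary files (for example `546_summary_LilUCB.csv`). It assumes the CSV columns are named exactly `Target` and `Rating`; the function name `tally_ratings` is hypothetical.

```python
import csv
import io
from collections import Counter, defaultdict

# Rating codes as described above: 1 = unfunny, 2 = somewhat funny, 3 = funny.
LABELS = {1: "unfunny", 2: "somewhat_funny", 3: "funny"}


def tally_ratings(csv_text):
    """Return {caption: Counter of rating labels} from cardinal-query rows."""
    counts = defaultdict(Counter)
    for row in csv.DictReader(io.StringIO(csv_text)):
        # int(float(...)) tolerates ratings stored as "2" or "2.0".
        rating = int(float(row["Rating"]))
        counts[row["Target"]][LABELS[rating]] += 1
    return dict(counts)


example = "Target,Rating\nX,1\nX,3\nX,3\nY,2\n"
print(tally_ratings(example))
```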

This data is available on data.world as stsievert/caption-contest-data. To obtain it, follow data.world's install instructions for one of their integrations, which provide interfaces for several languages. For example, with their Python integration:

```python
>>> import datadotworld as dw
>>> d = dw.DataDotWorld()
>>> data = d.load_dataset('stsievert/caption-contest-data')
>>> print(data.tables['orginal/546_summary_LilUCB.csv'][0])
OrderedDict([('', '0'),
             ('rank', '1'),
             ('caption', 'We never should have applauded.'),
             ('score', '1.8421052631578947'),
             ('precision', '0.04554460512094434'),
             ('count', '285.0'),
             ('unfunny', '110.0'),
             ('somewhat_funny', '110.0'),
             ('funny', '65.0')])
```

Each folder contains:

- trimmed participant responses, as a CSV (headers described above)

- a trimmed CSV for each collection scheme, as detailed below.

- the full participant response data as a .json file. The code to parse this file is included in this repo (json_parse.py).
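The `score` and `precision` columns in the summary row printed by the data.world example above appear consistent with the mean rating and its standard error (sample standard deviation, with an n - 1 denominator, divided by the square root of `count`). This is our reconstruction from that single row, not a documented definition; the function name `summarize` is hypothetical.

```python
import math


def summarize(unfunny, somewhat_funny, funny):
    """Return (count, mean rating, standard error) from rating counts.

    Ratings are coded 1 = unfunny, 2 = somewhat funny, 3 = funny.
    """
    n = unfunny + somewhat_funny + funny
    score = (1 * unfunny + 2 * somewhat_funny + 3 * funny) / n
    # Sum of squared deviations from the mean rating.
    ss = (unfunny * (1 - score) ** 2
          + somewhat_funny * (2 - score) ** 2
          + funny * (3 - score) ** 2)
    # Sample standard deviation (n - 1) divided by sqrt(n).
    precision = math.sqrt(ss / (n - 1)) / math.sqrt(n)
    return n, score, precision


print(summarize(110, 110, 65))
```

For the example row (110 unfunny, 110 somewhat funny, 65 funny), this gives a score of about 1.842 and a precision of about 0.0455, matching the printed values.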

Queries are generated either randomly or adaptively. Random sampling chooses which queries to ask without regard to previous responses; adaptive sampling uses previous responses to decide which queries to ask.

We consider the randomly collected data to be unbiased and the adaptively collected data to be biased. The randomly collected data comes from the "RandomSampling" and "RoundRobin" algorithms, while the adaptively collected data comes from the "LilUCB" algorithm.
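Because only the randomly collected data is unbiased, a caption's average rating is best estimated from those rows alone. The sketch below keeps only rows whose algorithm label is "RandomSampling" or "RoundRobin" and averages ratings per caption. It assumes columns named `Target`, `Rating` and `Alg label`; this README spells the adaptive label both "Lil_UCB" and "LilUCB", so check the exact label strings in a given CSV. The names `UNBIASED_ALGS` and `unbiased_mean_ratings` are hypothetical.

```python
import csv
import io
from collections import defaultdict
from statistics import mean

# Labels of the non-adaptive algorithms named in this README (assumed exact strings).
UNBIASED_ALGS = {"RandomSampling", "RoundRobin"}


def unbiased_mean_ratings(csv_text):
    """Return {caption: mean rating}, using only randomly sampled queries."""
    ratings = defaultdict(list)
    for row in csv.DictReader(io.StringIO(csv_text)):
        # Skip adaptively chosen queries, which over-sample promising captions.
        if row["Alg label"] in UNBIASED_ALGS:
            ratings[row["Target"]].append(float(row["Rating"]))
    return {caption: mean(r) for caption, r in ratings.items()}


example = (
    "Target,Rating,Alg label\n"
    "X,1,RandomSampling\n"
    "X,3,RoundRobin\n"
    "X,3,LilUCB\n"
    "Y,2,LilUCB\n"
)
print(unbiased_mean_ratings(example))  # {'X': 2.0}
```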

This dataset would not be possible without The New Yorker and their Caption Contest as well as The New Yorker cartoonists. More of their work is available at Condé Nast: https://condenaststore.com/conde-nast-brand/cartoonbank

In the earlier experiments (all except 509), each folder also shows sample queries. Other experiments specify whether the setup being run was "Cardinal" or "Dueling"; example queries for both setups appear in the table at the top of this README.

Each user is given a URL; after visiting it, they are presented with a series of queries like the ones above. The queries may vary between experiments, and each experiment's folder describes these variations in detail.