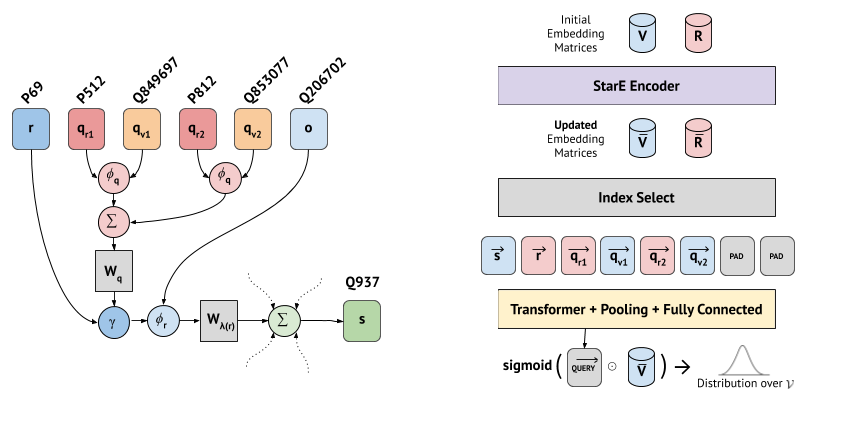

StarE encodes a hyper-relational fact by first passing qualifier pairs through a composition function, then summing the results and transforming them with $W_q$. The resulting qualifier vector is merged with the relation vector via $\gamma$ and with the object vector via $\phi_r$. Finally, node Q937 aggregates messages from this and other hyper-relational edges. Please refer to the paper for details.
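The per-edge computation described above can be sketched in dependency-free Python. This is a minimal illustration, not the repository's implementation. It assumes element-wise multiplication for both the qualifier composition function (called `phi_q` here) and $\phi_r$, and a weighted sum for $\gamma$ with a hypothetical weight `alpha`. It also omits the learned projections and non-linearities used in the actual encoder. All helper names are hypothetical.

```python
from typing import List, Sequence, Tuple

Vector = List[float]
Matrix = List[List[float]]


def compose(a: Sequence[float], b: Sequence[float]) -> Vector:
    """Element-wise product; an assumed choice for both phi_q and phi_r."""
    return [x * y for x, y in zip(a, b)]


def matvec(w: Matrix, v: Sequence[float]) -> Vector:
    """Dense matrix-vector product W @ v."""
    return [sum(w_ij * v_j for w_ij, v_j in zip(row, v)) for row in w]


def qualifier_vector(quals: Sequence[Tuple[Vector, Vector]], w_q: Matrix) -> Vector:
    """h_q = W_q * sum of phi_q(h_qr, h_qv) over (qualifier relation, value) pairs."""
    total = [0.0] * len(w_q[0])  # zero vector if the fact has no qualifiers
    for h_qr, h_qv in quals:
        total = [t + c for t, c in zip(total, compose(h_qr, h_qv))]
    return matvec(w_q, total)


def gamma_weighted_sum(h_r: Vector, h_q: Vector, alpha: float = 0.8) -> Vector:
    """gamma: weighted sum of relation and qualifier vectors (alpha is an assumption)."""
    return [alpha * r + (1.0 - alpha) * q for r, q in zip(h_r, h_q)]


def stare_message(h_obj: Vector, h_r: Vector, quals: Sequence[Tuple[Vector, Vector]],
                  w_q: Matrix, alpha: float = 0.8) -> Vector:
    """Message along one hyper-relational edge: phi_r(h_obj, gamma(h_r, h_q))."""
    h_q = qualifier_vector(quals, w_q)
    return compose(h_obj, gamma_weighted_sum(h_r, h_q, alpha))


def aggregate(messages: Sequence[Vector]) -> Vector:
    """Node update by summing incoming messages (projection and activation omitted)."""
    return [sum(m[i] for m in messages) for i in range(len(messages[0]))]


if __name__ == "__main__":
    identity = [[1.0, 0.0], [0.0, 1.0]]
    msg = stare_message(h_obj=[2.0, 4.0], h_r=[1.0, 1.0],
                        quals=[([1.0, 2.0], [3.0, 0.5])], w_q=identity, alpha=0.5)
    print(msg)  # [4.0, 4.0]
```

Swapping `compose` or `gamma_weighted_sum` for other operators changes only those two functions. The overall structure stays the same: compose qualifiers, sum, project, merge with the relation, compose with the object, aggregate.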

- Python>=3.9

- PyTorch 2.1.1

- torch-geometric 2.4.0

- torch-scatter 2.1.2

- tqdm

- wandb

Create a new conda environment and execute setup.sh.

Alternatively, install the dependencies with pip:

pip install -r requirements.txt

The dataset can be found in data/clean/wd50k.

Its derivatives can be found there as well:

- wd50k_33 - approx. 33% of statements have qualifiers
- wd50k_66 - approx. 66% of statements have qualifiers
- wd50k_100 - 100% of statements have qualifiers

More information is available in the dataset README.
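To check the qualifier share of a split, here is a minimal sketch. It assumes statements have already been loaded into a hypothetical `(subject, relation, object, qualifiers)` tuple form. This is not the on-disk format under `data/clean`; see the dataset README for that. The function name and the example ids are hypothetical.

```python
from typing import List, Sequence, Tuple

# Hypothetical in-memory form: (subject, relation, object, [(qual_relation, qual_value), ...])
Statement = Tuple[str, str, str, List[Tuple[str, str]]]


def qualifier_ratio(statements: Sequence[Statement]) -> float:
    """Fraction of statements that carry at least one qualifier pair."""
    if not statements:
        return 0.0
    with_quals = sum(1 for _, _, _, quals in statements if quals)
    return with_quals / len(statements)


if __name__ == "__main__":
    example = [
        ("e1", "r1", "e2", [("r2", "e3")]),
        ("e1", "r3", "e4", []),
        ("e5", "r1", "e2", [("r2", "e6"), ("r4", "e7")]),
        ("e5", "r3", "e4", []),
    ]
    print(qualifier_ratio(example))  # 0.5
```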

Available models, specified as MODEL_NAME in the running script:

- stare_transformer - main model StarE (H) + Transformer (H) [default]
- stare_stats_baseline - baseline model Transformer (H)
- stare_trans_baseline - baseline model Transformer (T)

Available datasets, specified as DATASET in the running script:

- jf17k
- wikipeople
- wd50k [default]
- wd50k_33
- wd50k_66
- wd50k_100

Running experiments on a GPU is advised; otherwise training may take a long time.

Use DEVICE cuda to enable GPU support; the default is cpu.

If you use cuda, don't forget to set CUDA_VISIBLE_DEVICES before the python command.

Currently tested with CUDA 12.1.

Three parameters control triple/hyper-relational nature and max fact length:

- STATEMENT_LEN: -1 for hyper-relational [default], 3 for triples
- MAX_QPAIRS: max fact length (3+2*quals), e.g., 15 denotes a fact with 5 qualifiers (3+2*5=15). 15 is the default for the wd50k datasets and jf17k; set 7 for wikipeople, and 3 for triples (in combination with STATEMENT_LEN 3)
- SAMPLER_W_QUALIFIERS: True for hyper-relational models [default], False for triple-based models only

The following commands train StarE (H) + Transformer (H) for 400 epochs and evaluate it on the test set:

- StarE (H) + Transformer (H)

python run.py DATASET wd50k

- StarE (H) + Transformer (H) with a GPU.

CUDA_VISIBLE_DEVICES=0 python run.py DEVICE cuda DATASET wd50k

- To use a dataset with a higher ratio of qualifiers, set DATASET to one of the names listed above, e.g.:

python run.py DATASET wd50k_33

- On JF17K

python run.py DATASET jf17k CLEANED_DATASET False

- On WikiPeople

python run.py DATASET wikipeople CLEANED_DATASET False MAX_QPAIRS 7 EPOCHS 500

Triple-based models can be started with this basic set of params:

python run.py DATASET wd50k STATEMENT_LEN 3 MAX_QPAIRS 3 SAMPLER_W_QUALIFIERS False

More hyperparameters are available in the CONFIG dictionary in run.py.

To adjust StarE encoder parameters, prepend GCN_ to the parameter names in the STAREARGS dict, e.g.,

python run.py DATASET wd50k GCN_GCN_DIM 80 GCN_QUAL_AGGREGATE concat

constructs StarE with a hidden dimension of 80 and concat as the γ (gamma) function from the paper.
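The GCN_ prefix convention can be pictured with a small, hypothetical sketch. This is not how run.py actually parses its arguments. It assumes `KEY VALUE` pairs on the command line and that each value is cast to the type of the existing default. Keys starting with `GCN_` are routed to the encoder dict with the prefix stripped, so `GCN_GCN_DIM` becomes `GCN_DIM`. The dict contents below are illustrative placeholders, not the repository's defaults.

```python
from typing import Any, Dict, List, Tuple

# Illustrative placeholders; the real CONFIG and STAREARGS dicts live in run.py.
CONFIG: Dict[str, Any] = {"DATASET": "wd50k", "EPOCHS": 400, "DEVICE": "cpu", "WANDB": False}
STAREARGS: Dict[str, Any] = {"GCN_DIM": 200, "QUAL_AGGREGATE": "sum"}

PREFIX = "GCN_"


def cast_like(default: Any, raw: str) -> Any:
    """Convert a CLI string to the type of the existing default value."""
    if isinstance(default, bool):  # check bool first: bool is a subclass of int
        if raw not in ("True", "False"):
            raise ValueError(f"expected True or False, got {raw!r}")
        return raw == "True"
    return type(default)(raw)


def apply_cli_overrides(argv: List[str], config: Dict[str, Any],
                        stare_args: Dict[str, Any]) -> Tuple[Dict[str, Any], Dict[str, Any]]:
    """Apply KEY VALUE pairs to copies of both dicts; unknown keys raise KeyError."""
    if len(argv) % 2 != 0:
        raise ValueError("arguments must come in KEY VALUE pairs")
    config, stare_args = dict(config), dict(stare_args)
    for key, raw in zip(argv[::2], argv[1::2]):
        if key.startswith(PREFIX):
            name = key[len(PREFIX):]  # GCN_GCN_DIM -> GCN_DIM
            stare_args[name] = cast_like(stare_args[name], raw)
        else:
            config[key] = cast_like(config[key], raw)
    return config, stare_args


if __name__ == "__main__":
    argv = ["DATASET", "wd50k", "GCN_GCN_DIM", "80", "GCN_QUAL_AGGREGATE", "concat"]
    cfg, enc = apply_cli_overrides(argv, CONFIG, STAREARGS)
    print(enc)  # {'GCN_DIM': 80, 'QUAL_AGGREGATE': 'concat'}
```

Casting against the default's type keeps `80` an integer and `True` a boolean. In this sketch, a misspelled key fails loudly instead of silently adding a new setting.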

Weights & Biases (WANDB) logging is supported out of the box. Create an account on WANDB, then make sure you have the latest version of the package installed:

pip install wandb

Locate your API_KEY in the user settings and activate it:

wandb login <api_key>

Then pass the CLI argument WANDB True; this will:

- Create a wikidata-embeddings project in your active team
- Create a run with a random name and log results there

@inproceedings{StarE,
  title={Message Passing for Hyper-Relational Knowledge Graphs},
  author={Galkin, Mikhail and Trivedi, Priyansh and Maheshwari, Gaurav and Usbeck, Ricardo and Lehmann, Jens},
  booktitle={EMNLP},
  year={2020}
}

For any further questions, please contact: mikhail.galkin@iais.fraunhofer.de