PyTorch implementation of the paper

Explainable and Explicit Visual Reasoning over Scene Graphs

Jiaxin Shi, Hanwang Zhang, Juanzi Li

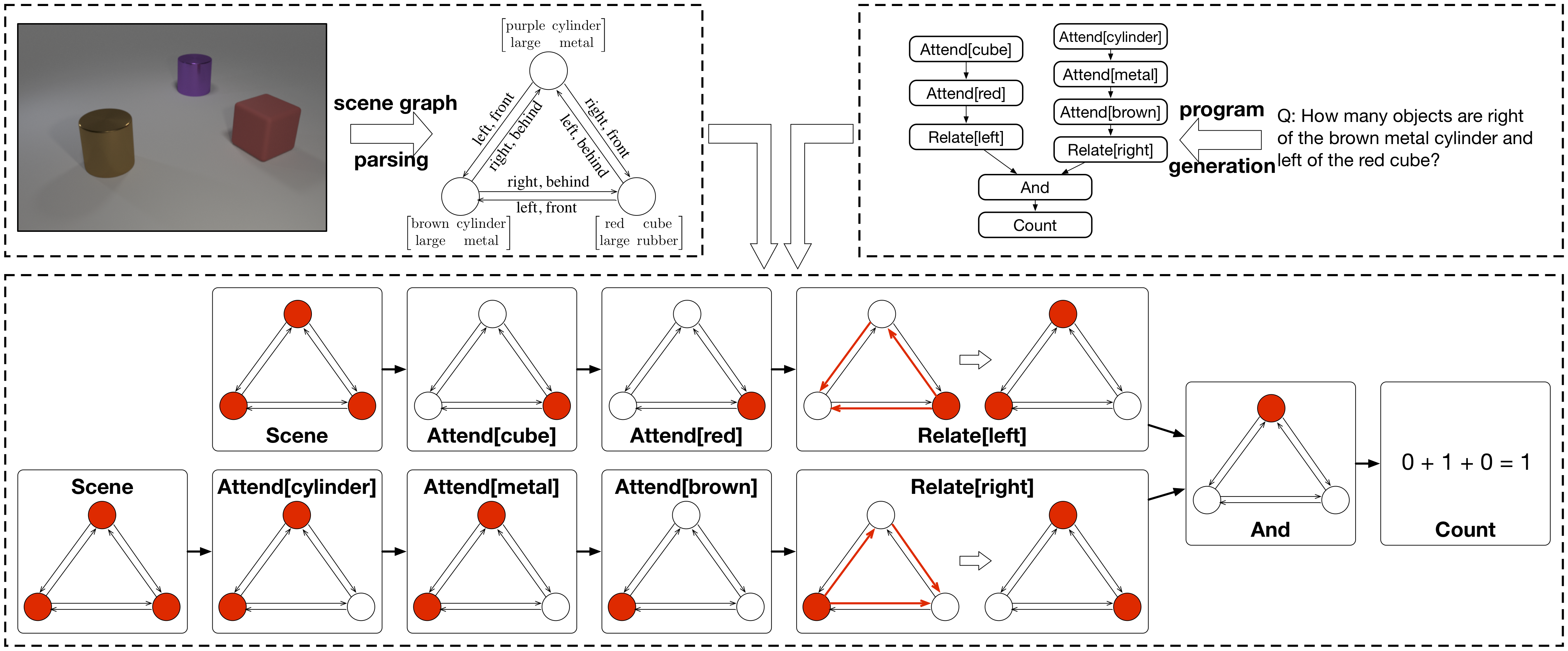

Flowchart of our model:

A visualization of our reasoning process:

If you find this code useful in your research, please cite:

@inproceedings{shi2019explainable,
title={Explainable and Explicit Visual Reasoning over Scene Graphs},
author={Jiaxin Shi and Hanwang Zhang and Juanzi Li},
booktitle={CVPR},
year={2019}
}

Requirements:

- python==3.6

- pytorch==0.4.0

- h5py

- tqdm

- matplotlib

We have 4 experiment settings:

- CLEVR dataset, Det setting (i.e., using detected scene graphs). Code is in ./exp_clevr_detected.

- CLEVR dataset, GT setting (i.e., using ground-truth scene graphs), with attention computed by a softmax function over the label space. Code is in ./exp_clevr_gt_softmax.

- CLEVR dataset, GT setting, with attention computed by a sigmoid function. Code is in ./exp_clevr_gt_sigmoid.

- VQA2.0 dataset, detected scene graphs. Code is in ./exp_vqa.
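The two GT settings differ in how attention is computed: softmax over the label space versus sigmoid. As a rough illustration only (plain Python, not code from this repository), the sketch below contrasts the two functions on one score per label in a small label space. The function names, the label set, and the scores are all hypothetical.

```python
import math
from typing import Dict


def softmax_attention(scores: Dict[str, float]) -> Dict[str, float]:
    """Normalize label scores jointly over the whole label space (weights sum to 1)."""
    # Subtract the max score before exponentiating for numerical stability.
    m = max(scores.values())
    exps = {label: math.exp(s - m) for label, s in scores.items()}
    total = sum(exps.values())
    return {label: e / total for label, e in exps.items()}


def sigmoid_attention(scores: Dict[str, float]) -> Dict[str, float]:
    """Squash each label score independently into (0, 1); weights need not sum to 1."""
    return {label: 1.0 / (1.0 + math.exp(-s)) for label, s in scores.items()}


# Hypothetical matching scores between a query and each color label.
scores = {"red": 2.0, "blue": 2.0, "green": -1.0}
soft = softmax_attention(scores)
sig = sigmoid_attention(scores)
print(soft)
print(sig)
```

With two equally high scores, softmax forces "red" and "blue" to share the probability mass (each gets slightly under one half). Sigmoid lets both receive a high weight independently, because it does not compete across labels.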

Each experiment setting has a separate README with instructions for reproducing our reported results. Feel free to contact me if you have any problems: shijx12@gmail.com