Install all required Python dependencies:

pip install -r requirements.txt

pip install diffusers
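
As a quick sanity check after installation, the sketch below reports which packages cannot be found on the current import path. The helper name `missing_packages` is hypothetical and not part of this repository; only `diffusers` is taken from the command above, and the import names of the packages listed in requirements.txt are not assumed here.

```python
import importlib.util


def missing_packages(names):
    """Return the top-level package names that cannot be located for import."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    # `diffusers` comes from the install command above; extend the list as needed.
    print(missing_packages(["diffusers"]))
```

An empty list means every listed package was found.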

Download the image files from Google Drive and unzip all the images (train, dev, test) into the same folder. The structure should be:

    ScienceQA
    ├── test
    │   └── test
    │       ├── 1
    │       │   └── image.png
    │       ├── 2
    │       │   └── image.png
    ├── train
    │   └── train
    │       ├── 1
    │       │   └── image.png
    │       ├── 2
    │       │   └── image.png
    ├── val
    │   └── val
    │       ├── 1
    │       │   └── image.png
    │       ├── 2
    │       │   └── image.png
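
The layout above can be checked with a short script before training. This is a minimal sketch, assuming the doubled split folders (for example `ScienceQA/test/test/1/image.png`) as read from the tree; the function name `find_missing_images` is hypothetical and not part of this repository.

```python
from pathlib import Path


def find_missing_images(root, splits=("train", "val", "test")):
    """List split folders that are absent and problem folders lacking image.png.

    Assumes the layout <root>/<split>/<split>/<problem_id>/image.png.
    """
    missing = []
    for split in splits:
        split_dir = Path(root) / split / split
        if not split_dir.is_dir():
            # The whole split folder is missing or was unzipped elsewhere.
            missing.append(split_dir)
            continue
        for problem_dir in sorted(p for p in split_dir.iterdir() if p.is_dir()):
            if not (problem_dir / "image.png").is_file():
                missing.append(problem_dir)
    return missing


if __name__ == "__main__":
    for path in find_missing_images("ScienceQA"):
        print("missing:", path)
```

No output means every problem folder that exists contains an `image.png`.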

Download problems.json from the following repository:

https://github.com/lupantech/ScienceQA/tree/main/data/scienceqa

Place the file in the ScienceQA folder.
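
To confirm the file is in place and readable, it can be loaded and summarized. The sketch below assumes that problems.json is a JSON object keyed by problem id and that each entry carries a `split` field; both points are assumptions about the file format, and `count_problems_by_split` is a hypothetical helper, not a function from this repository.

```python
import json
from collections import Counter


def count_problems_by_split(problems_path):
    """Count entries per split in a problems.json-style file."""
    with open(problems_path, encoding="utf-8") as f:
        problems = json.load(f)
    # Assumption: top-level object keyed by problem id, each value has "split".
    return Counter(entry.get("split", "unknown") for entry in problems.values())


if __name__ == "__main__":
    print(count_problems_by_split("ScienceQA/problems.json"))
```

Entries without a `split` field are counted under `unknown` rather than raising an error.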

    # rationale generation
    CUDA_VISIBLE_DEVICES=0,1 python main.py \
        --model allenai/unifiedqa-t5-base \
        --user_msg rationale --img_type png \
        --bs 2 --eval_bs 4 --eval_acc 10 --output_len 512 \
        --final_eval --prompt_format QCM-LE

    # answer inference
    CUDA_VISIBLE_DEVICES=0,1 python main.py \
        --model allenai/unifiedqa-t5-base \
        --user_msg answer --img_type png \
        --bs 8 --eval_bs 4 --eval_acc 10 --output_len 64 \
        --final_eval --prompt_format QCMG-A \
        --eval_le models/rationale/predictions_ans_eval.json \
        --test_le models/rationale/predictions_ans_test.json \
        --evaluate_dir models/answer
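
The answer inference command reads the two prediction files passed via `--eval_le` and `--test_le`, so the rationale generation step needs to have produced them first. The following sketch checks for those files before the second stage is launched; the file names and the `models/rationale` directory are taken from the command above, while `missing_rationale_outputs` is a hypothetical helper.

```python
from pathlib import Path

# File names taken from the --eval_le and --test_le arguments above.
RATIONALE_OUTPUTS = ("predictions_ans_eval.json", "predictions_ans_test.json")


def missing_rationale_outputs(rationale_dir="models/rationale"):
    """Return the expected rationale prediction files that do not exist yet."""
    base = Path(rationale_dir)
    return [name for name in RATIONALE_OUTPUTS if not (base / name).is_file()]


if __name__ == "__main__":
    missing = missing_rationale_outputs()
    if missing:
        print("Run rationale generation first; missing:", ", ".join(missing))
```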

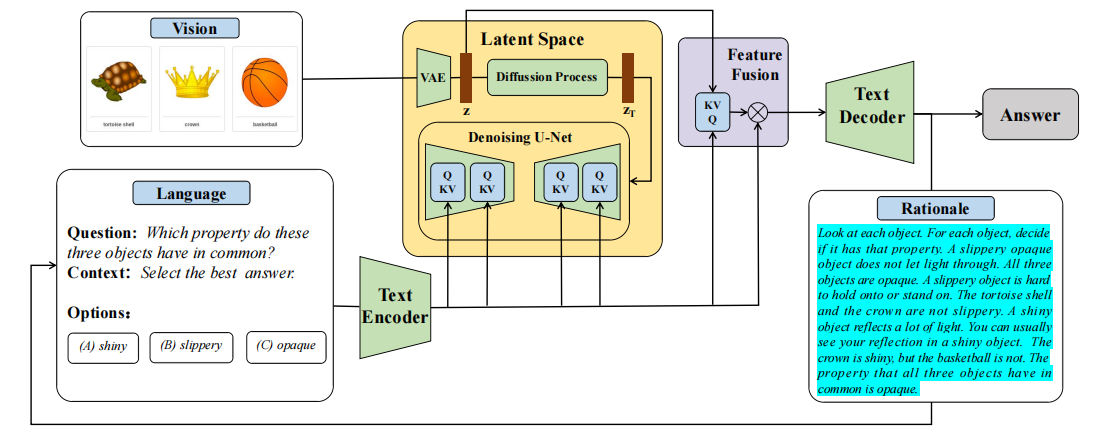

    @article{he2023multi,
      title={Multi-modal Latent Space Learning for Chain-of-Thought Reasoning in Language Models},
      author={He, Liqi and Li, Zuchao and Cai, Xiantao and Wang, Ping},
      journal={arXiv preprint arXiv:2312.08762},
      year={2023}
    }