pybuc (Python Bayesian Unobserved Components) is a version of R's Bayesian structural time

series package, bsts, written by Steven L. Scott. The source paper can be found

here or in the papers

directory of this repository. While there are plans to expand the feature set of pybuc, currently there is no roadmap

for the release of new features. The syntax for using pybuc closely follows statsmodels' UnobservedComponents

module.

The current version of pybuc includes the following options for modeling and

forecasting a structural time series:

- Stochastic or non-stochastic level

- Damped level

- Stochastic or non-stochastic trend

- Damped trend *

- Multiple stochastic or non-stochastic periodic-lag seasonality

- Multiple damped periodic-lag seasonality

- Multiple stochastic or non-stochastic "dummy" seasonality

- Multiple stochastic or non-stochastic trigonometric seasonality

- Regression with static coefficients**

* pybuc dampens trend differently than bsts. The former assumes an AR(1) process without

drift for the trend state equation. The latter assumes an AR(1) with drift. In practice this means that the trend,

on average, will be zero with pybuc, whereas bsts allows for the mean trend to be non-zero. The reason for

choosing an autoregressive process without drift is to be conservative with long horizon forecasts.
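The practical difference between the two damping schemes can be seen by iterating the expected trend forward. The following is a minimal sketch, not code from pybuc or bsts. The function name, parameter names, and numerical values are illustrative assumptions, and the drift case is written in the mean-reverting form `D + rho * (trend - D)`.

```python
import numpy as np


def expected_trend_path(trend_0, rho, horizon, long_run_mean=0.0):
    """Expected value of an AR(1) trend 1..horizon steps ahead.

    E[trend_{t+1}] = long_run_mean + rho * (E[trend_t] - long_run_mean)
    Setting long_run_mean = 0 gives an AR(1) without drift.
    """
    path = np.empty(horizon)
    current = trend_0
    for h in range(horizon):
        current = long_run_mean + rho * (current - long_run_mean)
        path[h] = current
    return path


# Same starting trend and damping factor, with and without drift
no_drift = expected_trend_path(trend_0=2.0, rho=0.9, horizon=200)
with_drift = expected_trend_path(trend_0=2.0, rho=0.9, horizon=200, long_run_mean=1.5)

print(no_drift[-1], with_drift[-1])
```

Without drift the expected trend decays toward zero, so long-horizon forecasts flatten out. With drift it settles at the long-run mean instead.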

** pybuc estimates regression coefficients differently than bsts. The former uses a standard Gaussian

prior. The latter uses a Bernoulli-Gaussian mixture commonly known as the spike-and-slab prior. The main

benefit of using a spike-and-slab prior is its promotion of coefficient-sparse solutions, i.e., variable selection, when

the number of predictors in the regression component exceeds the number of observed data points.

Fast computation is achieved using Numba, a high-performance just-in-time (JIT) compiler for Python.

pip install pybuc

See pyproject.toml and poetry.lock for dependency details. This module depends on NumPy, Numba, Pandas, and

Matplotlib. Python 3.9 and above is supported.

The Seasonal Autoregressive Integrated Moving Average (SARIMA) model is perhaps the most widely used class of statistical time series models. By design, these models can only operate on covariance-stationary time series. Consequently, if a time series exhibits non-stationarity (e.g., trend and/or seasonality), then the data must first be stationarized. Transforming a non-stationary series into a stationary one usually requires taking local and/or seasonal time-differences of the data, but when the series is trend-stationary, fitting and removing a linear trend is sometimes sufficient. Whether to stationarize the data, and how much differencing is needed, must be determined beforehand.
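As a small illustration of local and seasonal differencing, the sketch below applies both to a toy monthly series. The helper name `difference` and the toy data are assumptions made for this example only.

```python
import numpy as np


def difference(series, lag=1):
    """Return series[t] - series[t - lag], dropping the first `lag` values."""
    series = np.asarray(series, dtype=float)
    return series[lag:] - series[:-lag]


t = np.arange(48)
# Toy monthly series: linear trend plus a fixed 12-month seasonal pattern
pattern = np.array([3, 1, -2, -4, 0, 2, 5, 4, -1, -3, -4, -1], dtype=float)
y = 10.0 + 0.5 * t + np.tile(pattern, 4)

local_diff = difference(y, lag=1)                # removes the linear trend
seasonal_diff = difference(local_diff, lag=12)   # removes the repeating seasonal pattern

print(np.allclose(seasonal_diff, 0.0))
```

Because the toy series has no noise, nothing is left after both differences. With real data the remainder would be the (ideally stationary) series that a SARIMA model is fitted to.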

Once a stationary series is in hand, a SARIMA specification must be identified. Identifying the "right" SARIMA

specification can be achieved algorithmically (e.g., see the Python package pmdarima) or through examination of a

series' patterns. The latter typically involves statistical tests and visual inspection of a series' autocorrelation

(ACF) and partial autocorrelation (PACF) functions. Ultimately, the necessary condition for stationarity requires

statistical analysis before a model can be formulated. It also implies that the underlying trend and seasonality, if

they exist, are eliminated in the process of generating a stationary series. Consequently, the underlying time

components that characterize a series are not of empirical interest.

Another less commonly used class of model is structural time series (STS), also known as unobserved components (UC). Whereas SARIMA models abstract away from an explicit model for trend and seasonality, STS/UC models do not. Thus, it is possible to visualize the underlying components that characterize a time series using STS/UC. Moreover, it is relatively straightforward to test for phenomena like level shifts, also known as structural breaks, by statistical examination of a time series' estimated level component.

STS/UC models also have the flexibility to accommodate multiple stochastic seasonalities. SARIMA models, in contrast, can accommodate multiple seasonalities, but only one seasonality/periodicity can be treated as stochastic. For example, daily data may have day-of-week and week-of-year seasonality. Under a SARIMA model, only one of these seasonalities can be modeled as stochastic. The other seasonality will have to be modeled as deterministic, which amounts to creating and using a set of predictors that capture said seasonality. STS/UC models, on the other hand, can accommodate both seasonalities as stochastic by treating each as distinct, unobserved state variables.
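To make the deterministic alternative concrete, the sketch below builds a set of seasonal indicator predictors for day-of-week seasonality. The function name `seasonal_dummies` is hypothetical. A matrix like this could be supplied as exogenous regressors (e.g., through the `exog` argument of statsmodels' SARIMAX).

```python
import numpy as np


def seasonal_dummies(n_obs, period):
    """Build an (n_obs, period - 1) matrix of 0/1 seasonal indicators.

    The last season is the omitted reference category, which avoids
    perfect collinearity with an intercept.
    """
    season = np.arange(n_obs) % period
    return (season[:, None] == np.arange(period - 1)[None, :]).astype(float)


# Day-of-week indicators for four weeks of daily data
dow = seasonal_dummies(n_obs=28, period=7)
print(dow.shape)
```

Each column marks one day of the week. The seasonal effect estimated this way is fixed over time, which is what distinguishes it from a stochastic seasonal state.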

With the above in mind, what follows is a comparison between statsmodels' SARIMAX module, statsmodels'

UnobservedComponents module, and pybuc. The distinction between statsmodels.UnobservedComponents and pybuc is

that the former is a maximum likelihood estimator (MLE) while the latter is a Bayesian estimator. The following code

demonstrates the application of these methods to a data set that exhibits trend and multiplicative seasonality.

The STS/UC specification for statsmodels.UnobservedComponents and pybuc includes stochastic level, stochastic trend

(trend), and stochastic trigonometric seasonality with periodicity 12 and 6 harmonics.
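For intuition, a trigonometric seasonal pattern with periodicity 12 and 6 harmonics can be sketched as a sum of sine and cosine terms at frequencies `2 * pi * j / 12` for `j = 1, ..., 6`. The sketch below is deterministic (fixed coefficients), whereas the stochastic version lets the coefficients evolve over time. The function name and the random coefficients are illustrative assumptions, not part of pybuc or statsmodels.

```python
import numpy as np


def trig_seasonal(n_obs, period, harmonics, cos_coeff, sin_coeff):
    """Deterministic trigonometric seasonal pattern.

    gamma_t = sum_j cos_coeff[j] * cos(lambda_j * t) + sin_coeff[j] * sin(lambda_j * t),
    with lambda_j = 2 * pi * j / period for j = 1..harmonics.
    """
    t = np.arange(n_obs)[:, None]
    freqs = 2.0 * np.pi * np.arange(1, harmonics + 1)[None, :] / period
    return np.cos(freqs * t) @ cos_coeff + np.sin(freqs * t) @ sin_coeff


rng = np.random.default_rng(1)
gamma = trig_seasonal(n_obs=36, period=12, harmonics=6,
                      cos_coeff=rng.normal(size=6), sin_coeff=rng.normal(size=6))

# The pattern repeats every 12 periods and sums to zero over a full cycle
print(np.allclose(gamma[:12], gamma[12:24]), np.isclose(gamma[:12].sum(), 0.0))
```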

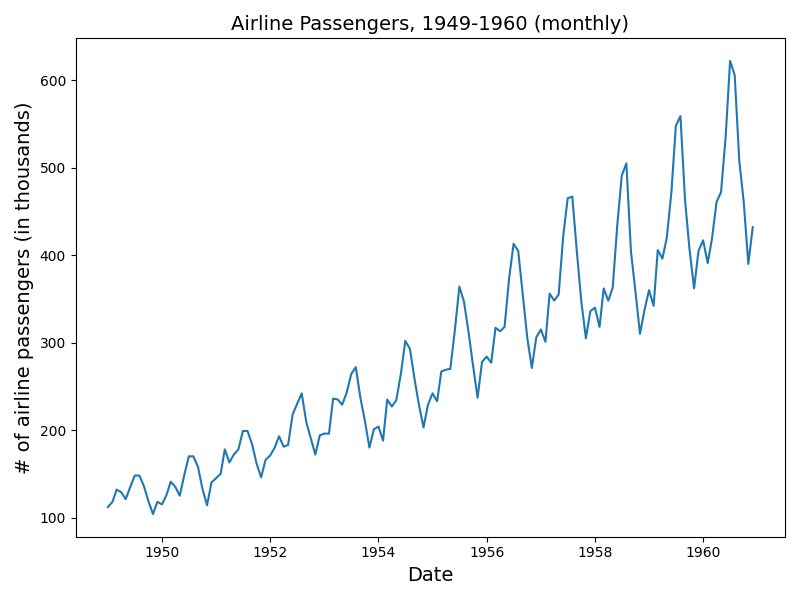

A canonical data set that exhibits trend and seasonality is the airline passenger data used in Box, G.E.P.; Jenkins, G.M.; and Reinsel, G.C. Time Series Analysis, Forecasting and Control. Series G, 1976. See plot below.

This data set gave rise to what is known as the "airline model", which is a SARIMA model with first-order local and seasonal differencing and first-order local and seasonal moving average representations. More compactly, SARIMA(0, 1, 1)(0, 1, 1) without drift.
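Written with the lag operator $L$, and assuming monthly data so that the seasonal period is 12, this specification is commonly expressed as follows. The symbols $\theta$ and $\Theta$ for the local and seasonal moving average coefficients are notation chosen here, and the sign convention follows statsmodels.

$$
(1 - L)(1 - L^{12}) y_t = (1 + \theta L)(1 + \Theta L^{12}) \epsilon_t
$$

Here $\epsilon_t$ is a white noise error. The left-hand side applies first-order local and seasonal differencing, and the right-hand side is the product of the first-order local and seasonal moving average polynomials.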

To demonstrate the performance of the "airline model" on the airline passenger data, the data will be split into a training and test set. The former will include all observations up until the last twelve months of data, and the latter will include the last twelve months of data. See code below for model assessment.

from pybuc import buc

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

from statsmodels.tsa.statespace.sarimax import SARIMAX

from statsmodels.tsa.statespace.structural import UnobservedComponents

# Convenience function for computing root mean squared error

def rmse(actual, prediction):

    act, pred = actual.flatten(), prediction.flatten()

    return np.sqrt(np.mean((act - pred) ** 2))

# Import airline passenger data

url = "https://raw.githubusercontent.com/devindg/pybuc/master/examples/data/airline-passengers.csv"

air = pd.read_csv(url, header=0, index_col=0)

air = air.astype(float)

air.index = pd.to_datetime(air.index)

hold_out_size = 12

# Create train and test sets

y_train = air.iloc[:-hold_out_size]

y_test = air.iloc[-hold_out_size:]

''' Fit the airline data using SARIMA(0,1,1)(0,1,1) '''

sarima = SARIMAX(y_train, order=(0, 1, 1),

                 seasonal_order=(0, 1, 1, 12),

                 trend=[0])

sarima_res = sarima.fit(disp=False)

print(sarima_res.summary())

# Plot in-sample fit against actuals

plt.plot(y_train)

plt.plot(sarima_res.fittedvalues)

plt.title('SARIMA: In-sample')

plt.xticks(rotation=45, ha="right")

plt.show()

# Get and plot forecast

sarima_forecast = sarima_res.get_forecast(hold_out_size).summary_frame(alpha=0.05)

plt.plot(y_test)

plt.plot(sarima_forecast['mean'])

plt.fill_between(sarima_forecast.index,

                 sarima_forecast['mean_ci_lower'],

                 sarima_forecast['mean_ci_upper'], alpha=0.2)

plt.title('SARIMA: Forecast')

plt.legend(['Actual', 'Mean', '95% Prediction Interval'])

plt.show()

# Print RMSE

print(f"SARIMA RMSE: {rmse(y_test.to_numpy(), sarima_forecast['mean'].to_numpy())}")

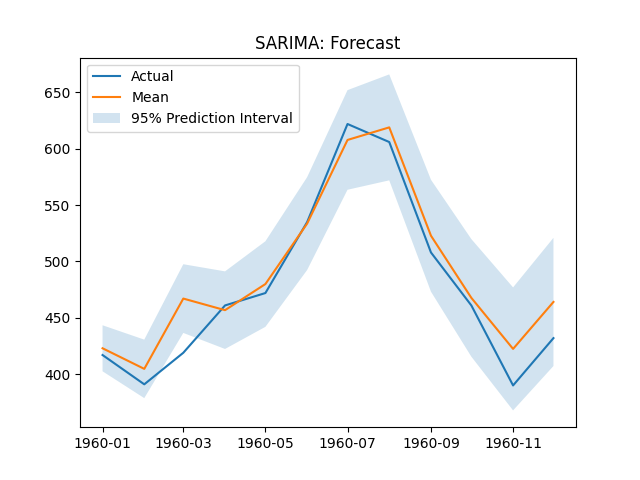

SARIMA RMSE: 21.09028021383853

The SARIMA(0, 1, 1)(0, 1, 1) forecast plot.

''' Fit the airline data using MLE unobserved components '''

mle_uc = UnobservedComponents(y_train, exog=None, irregular=True,

                              level=True, stochastic_level=True,

                              trend=True, stochastic_trend=True,

                              freq_seasonal=[{'period': 12, 'harmonics': 6}],

                              stochastic_freq_seasonal=[True])

# Fit the model via maximum likelihood

mle_uc_res = mle_uc.fit(disp=False)

print(mle_uc_res.summary())

# Plot in-sample fit against actuals

plt.plot(y_train)

plt.plot(mle_uc_res.fittedvalues)

plt.title('MLE UC: In-sample')

plt.show()

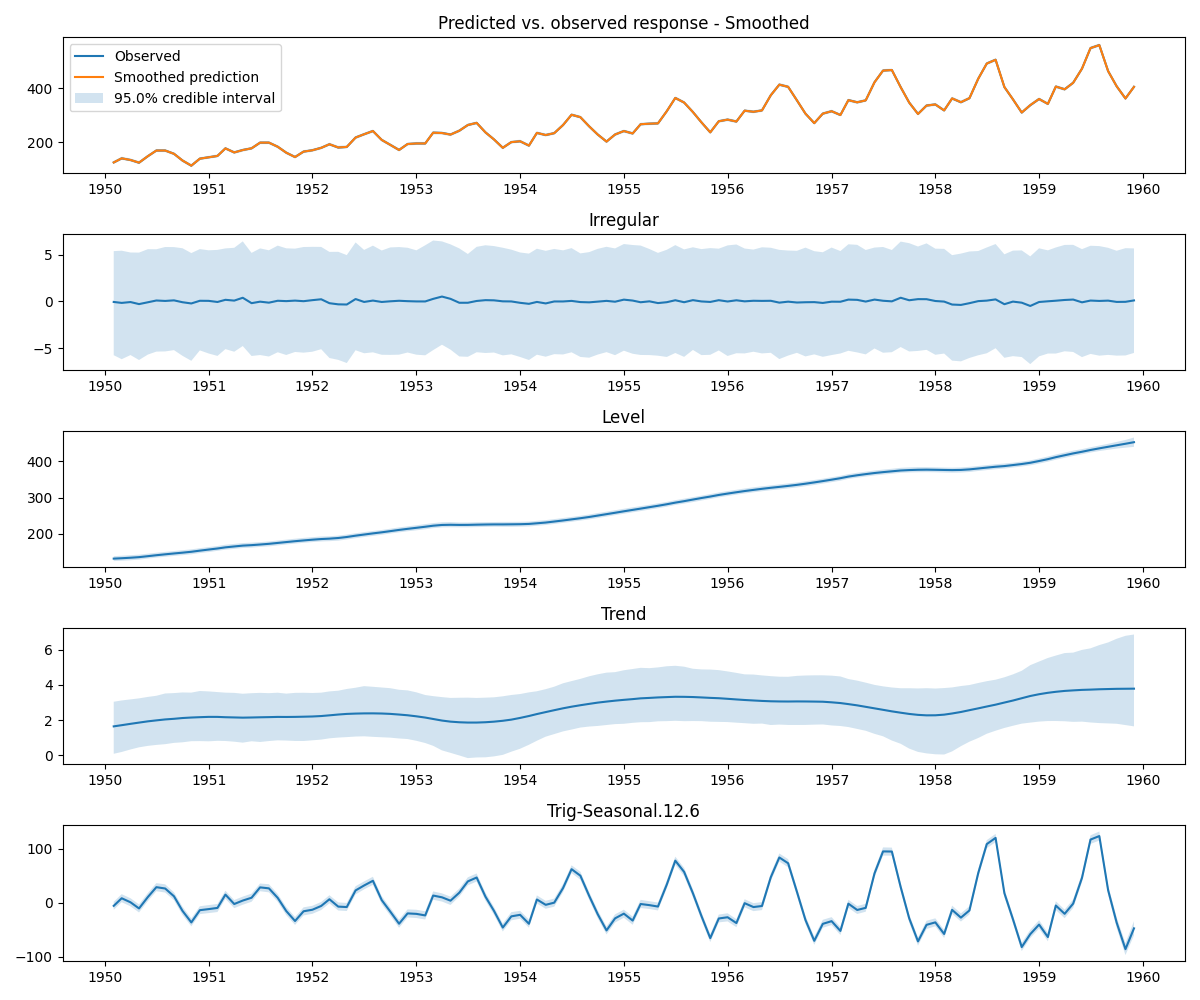

# Plot time series components

mle_uc_res.plot_components(legend_loc='lower right', figsize=(15, 9), which='smoothed')

plt.show()

# Get and plot forecast

mle_uc_forecast = mle_uc_res.get_forecast(hold_out_size).summary_frame(alpha=0.05)

plt.plot(y_test)

plt.plot(mle_uc_forecast['mean'])

plt.fill_between(mle_uc_forecast.index,

                 mle_uc_forecast['mean_ci_lower'],

                 mle_uc_forecast['mean_ci_upper'], alpha=0.2)

plt.title('MLE UC: Forecast')

plt.legend(['Actual', 'Mean', '95% Prediction Interval'])

plt.show()

# Print RMSE

print(f"MLE UC RMSE: {rmse(y_test.to_numpy(), mle_uc_forecast['mean'].to_numpy())}")

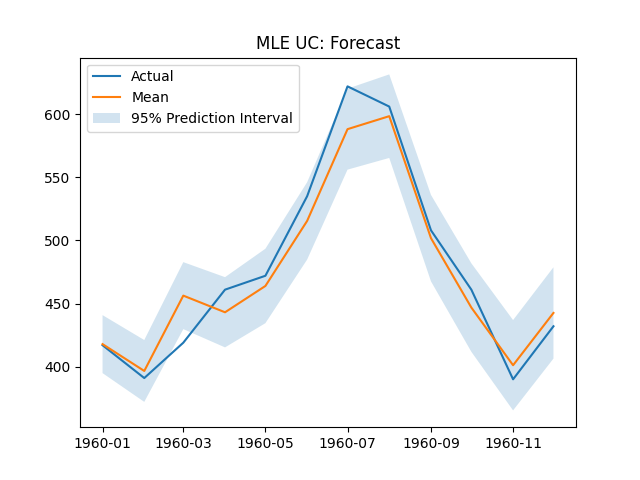

MLE UC RMSE: 17.961873327622694

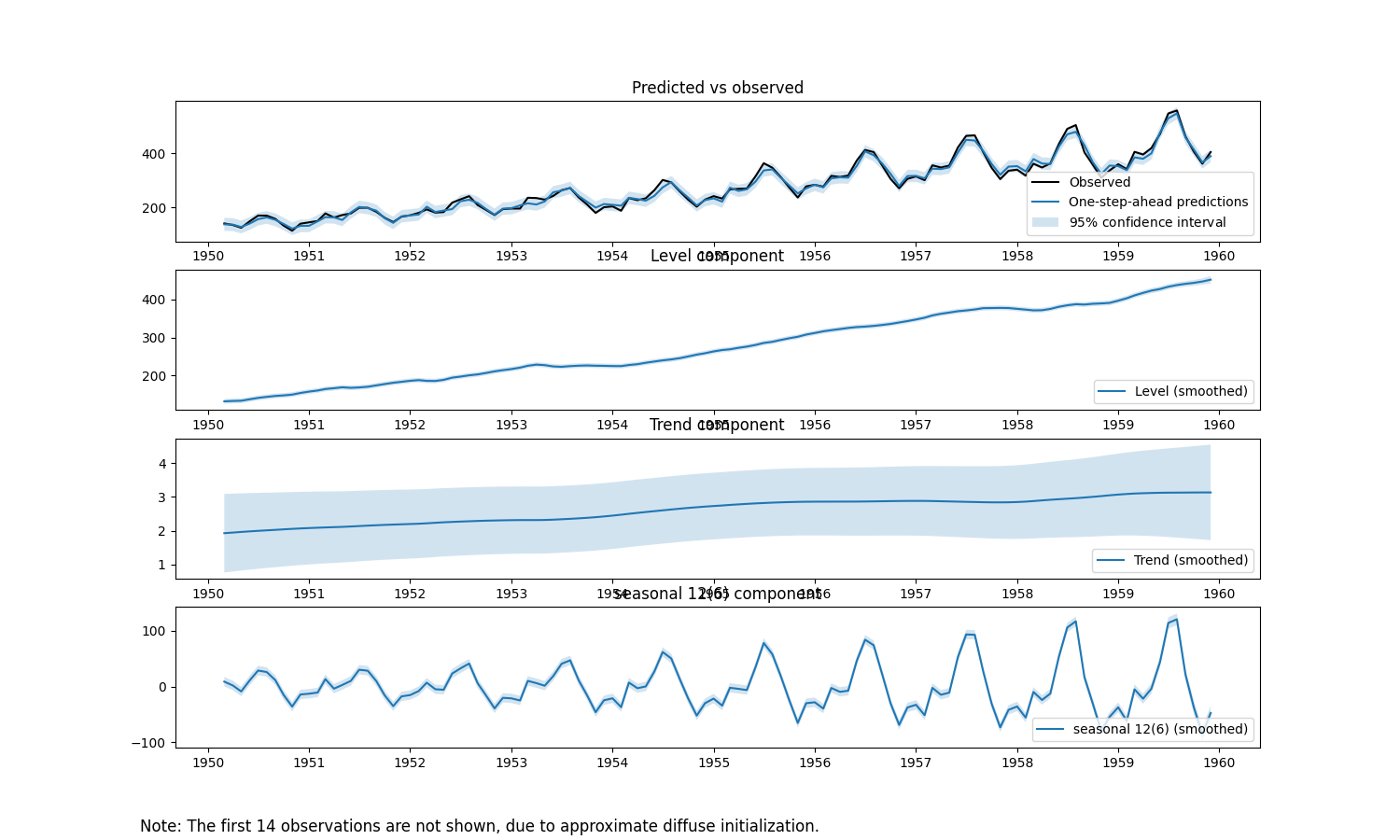

The MLE Unobserved Components forecast and component plots.

As noted above, a distinguishing feature of STS/UC models is their explicit modeling of trend and seasonality. This is illustrated with the components plot.

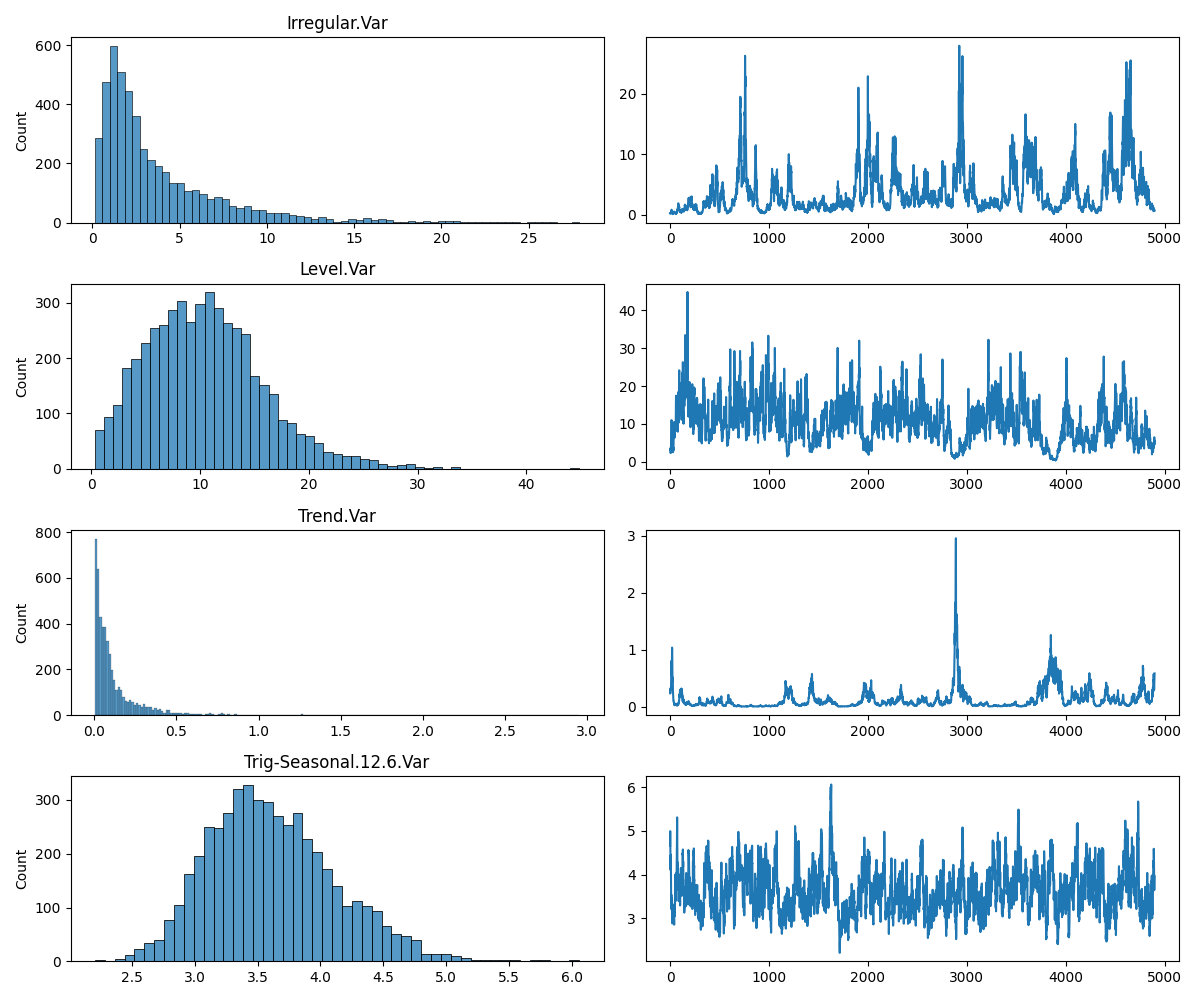

Finally, the Bayesian analog of the MLE STS/UC model is demonstrated. Default parameter values are used for the priors corresponding to the variance parameters in the model. See below for default priors on variance parameters.

Note that because computation is built on Numba, a JIT compiler, the first run of the code could take a while. Subsequent runs (assuming the Python kernel isn't restarted) should execute considerably faster.

''' Fit the airline data using Bayesian unobserved components '''

bayes_uc = buc.BayesianUnobservedComponents(response=y_train,

                                            level=True, stochastic_level=True,

                                            trend=True, stochastic_trend=True,

                                            trig_seasonal=((12, 0),), stochastic_trig_seasonal=(True,),

                                            seed=123)

post = bayes_uc.sample(5000)

mcmc_burn = 100

# Print summary of estimated parameters

for key, value in bayes_uc.summary(burn=mcmc_burn).items():

    print(key, ' : ', value)

# Plot in-sample fit against actuals

bayes_uc.plot_post_pred_dist(burn=mcmc_burn)

plt.title('Bayesian UC: In-sample')

plt.show()

# Plot time series components

bayes_uc.plot_components(burn=mcmc_burn, smoothed=True)

plt.show()

# Plot trace of posterior

bayes_uc.plot_trace(burn=mcmc_burn)

plt.show()

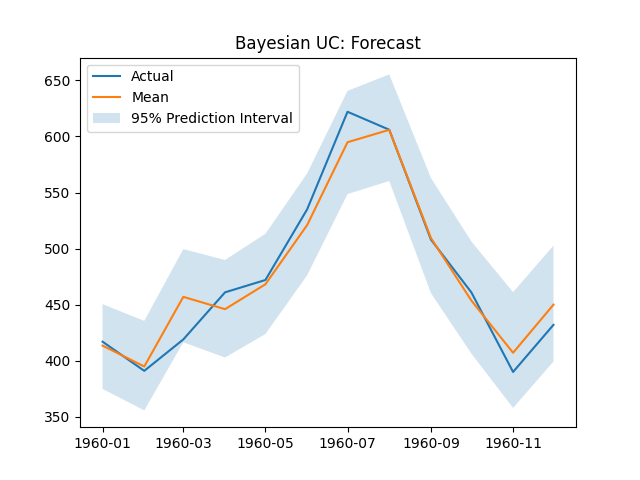

# Get and plot forecast

forecast, _ = bayes_uc.forecast(hold_out_size, mcmc_burn)

forecast_mean = np.mean(forecast, axis=0)

forecast_l95 = np.quantile(forecast, 0.025, axis=0).flatten()

forecast_u95 = np.quantile(forecast, 0.975, axis=0).flatten()

plt.plot(y_test)

plt.plot(bayes_uc.future_time_index, forecast_mean)

plt.fill_between(bayes_uc.future_time_index, forecast_l95, forecast_u95, alpha=0.2)

plt.title('Bayesian UC: Forecast')

plt.legend(['Actual', 'Mean', '95% Prediction Interval'])

plt.show()

# Print RMSE

print(f"BAYES-UC RMSE: {rmse(y_test.to_numpy(), forecast_mean)}")

BAYES-UC RMSE: 16.620400857034113

The Bayesian Unobserved Components forecast plot, components plot, and RMSE are shown below.

A structural time series model with level, trend, seasonal, and regression components takes the form:

where

The unobserved level evolves according to the following general transition equations:

where

The parameters

Note that if

For a given periodicity

where

This specification for seasonality is arguably the most robust representation (relative to dummy and trigonometric) because its structural assumption on periodicity is the least complex.
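A minimal simulation sketch of a periodic-lag seasonal component follows. It assumes the recursion `gamma_t = rho * gamma_{t-S} + eta_t` for periodicity `S`, where `rho = 1` and `sigma = 0` give a non-stochastic, undamped pattern. This form, the function name, and the values are assumptions made for illustration, not pybuc's implementation.

```python
import numpy as np


def simulate_periodic_lag_seasonal(initial_cycle, n_obs, sigma=0.0, rho=1.0, seed=0):
    """Simulate gamma_t = rho * gamma_{t - S} + eta_t, with eta_t ~ N(0, sigma^2).

    S is the length of `initial_cycle`, which supplies the first S values.
    Requires n_obs >= S.
    """
    rng = np.random.default_rng(seed)
    period = len(initial_cycle)
    gamma = np.empty(n_obs)
    gamma[:period] = initial_cycle
    for t in range(period, n_obs):
        gamma[t] = rho * gamma[t - period] + rng.normal(0.0, sigma)
    return gamma


cycle = np.array([2.0, -1.0, 0.5, -1.5])
fixed = simulate_periodic_lag_seasonal(cycle, n_obs=12)             # cycle repeats exactly
damped = simulate_periodic_lag_seasonal(cycle, n_obs=12, rho=0.5)   # cycle halves every period
noisy = simulate_periodic_lag_seasonal(cycle, n_obs=12, sigma=0.3)  # cycle drifts over time
```

The only structure imposed is that each value depends on the value one full cycle earlier, which is the sense in which the assumption on periodicity is simple.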

Another way is known as the "dummy" variable approach. Formally, the seasonal effect on the outcome

where

The final way to model seasonality is through a trigonometric representation, which exploits the periodicity of sine and cosine functions. Specifically, seasonality is modeled as

where

where frequency

Accordingly, if

There are two ways to configure the model matrices to account for a regression component with static coefficients.

The canonical way (Method 1) is to append

While both methods can be accommodated by the Kalman filter, Method 1 is a direct extension of the Kalman filter as it

maintains the observability of

The unobservability of

where

pybuc uses Method 2 for estimating static coefficients.

If no priors are given for variances corresponding to stochastic states (i.e., level, trend, and seasonality), the following defaults are used:

The level prior matches the default level prior in R's bsts package. However, the default seasonal and trend priors

are different. While the default trend prior in bsts is the same as the level and seasonal priors, pybuc makes a

more conservative assumption about the variance associated with trend. This is reflected by a standard deviation

that is one-tenth (one-hundredth) the magnitude of the level (seasonal) standard deviation. In other words,

this prior assumes that variation in trend is small relative to variation in level and seasonality. The objective is to

mitigate the impact that noise in the data could have on producing an overly aggressive trend.

In a similar vein, the default seasonal prior is different from bsts's in that it allows for more flexibility in scale.

Specifically, the seasonal standard deviation is ten (one-hundred) times larger than the standard deviation for level

(trend). Intuitively, changes from one cycle to another, as opposed to one period to the next, are likely to be larger.

The default prior for irregular variance is:

Damping can be applied to level, trend, and periodic-lag seasonality state components. By default, if no prior is given for an autoregressive (i.e., AR(1)) coefficient, the prior takes the form

where

The default prior for regression coefficients is

where zellner_prior_obs in the sample() method. If Zellner's g-prior is not

desired, then a custom precision matrix can be passed to the argument reg_coeff_prec_prior. Similarly, if a zero-mean

prior is not wanted, a custom mean prior can be passed to reg_coeff_mean_prior.

The unobserved components model can be rewritten in state space form. For example, suppose level, trend, seasonal,

regression, and irregular components are specified, and the seasonal component takes a trigonometric form with

periodicity

There are

Given the definitions of

where

and

pybuc mirrors R's bsts with respect to estimation method. The observation vector, state vector, and regression

coefficients are assumed to be conditionally normal random variables, and the error variances are assumed to be

conditionally independent inverse-Gamma random variables. These model assumptions imply conditional conjugacy of the

model's parameters. Consequently, a Gibbs sampler is used to sample from each parameter's posterior distribution.
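To show what conditional conjugacy buys, the sketch below draws a variance from its inverse-Gamma full conditional given a vector of Gaussian residuals. It is the textbook update under stated assumptions, not pybuc's code: the function name, the prior values, and the simulated residuals are all hypothetical.

```python
import numpy as np


def draw_variance(residuals, prior_shape, prior_scale, rng):
    """Draw sigma^2 from its inverse-Gamma full conditional.

    Prior:      sigma^2 ~ IG(prior_shape, prior_scale)
    Likelihood: residuals_i ~ N(0, sigma^2), i = 1..n
    Posterior:  sigma^2 ~ IG(prior_shape + n / 2, prior_scale + sum(residuals^2) / 2)
    """
    residuals = np.asarray(residuals, dtype=float)
    post_shape = prior_shape + residuals.size / 2.0
    post_scale = prior_scale + 0.5 * np.sum(residuals ** 2)
    # If G ~ Gamma(shape, rate=post_scale), then 1 / G ~ IG(shape, post_scale)
    return 1.0 / rng.gamma(shape=post_shape, scale=1.0 / post_scale)


rng = np.random.default_rng(123)
errors = rng.normal(0.0, 2.0, size=5000)  # simulated residuals with true variance 4
draws = np.array([draw_variance(errors, 0.01, 0.01, rng) for _ in range(2000)])

print(draws.mean())
```

Because the posterior is available in closed form, each Gibbs step is a single direct draw rather than an accept/reject or tuning step.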

To achieve fast sampling, pybuc follows bsts's adoption of the Durbin and Koopman (2002) simulation smoother. For

any parameter

- Draw $\boldsymbol{\alpha}(s)$ from $p(\boldsymbol{\alpha} | \mathbf y, \boldsymbol{\sigma}^2_\eta(s-1), \boldsymbol{\beta}(s-1), \sigma^2_\epsilon(s-1))$ using the Durbin and Koopman simulation state smoother, where $\boldsymbol{\alpha}(s) = (\boldsymbol{\alpha}_ 1(s), \boldsymbol{\alpha}_ 2(s), \cdots, \boldsymbol{\alpha}_ n(s))^\prime$ and $\boldsymbol{\sigma}^2_\eta(s-1) = \mathrm{diag}(\boldsymbol{\Sigma}_\eta(s-1))$. Note that pybuc implements a correction (based on a potential misunderstanding) for drawing $\boldsymbol{\alpha}(s)$ per "A note on implementing the Durbin and Koopman simulation smoother" (Marek Jarocinski, 2015).

- Draw $\boldsymbol{\sigma}^2(s) = (\sigma^2_ \epsilon(s), \boldsymbol{\sigma}^2_ \eta(s))^\prime$ from $p(\boldsymbol{\sigma}^2 | \mathbf y, \boldsymbol{\alpha}(s), \boldsymbol{\beta}(s-1))$ using Durbin and Koopman's simulation disturbance smoother.

- Draw $\boldsymbol{\beta}(s)$ from $p(\boldsymbol{\beta} | \mathbf y^ *, \boldsymbol{\alpha}(s), \sigma^2_\epsilon(s))$, where $\mathbf y^ *$ is defined above.
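The last step above can be sketched as a conjugate Gaussian draw. The example uses one standard parameterization of Bayesian linear regression, with a prior precision matrix that is not scaled by the error variance; whether pybuc parameterizes the prior this way is not assumed. The function name and simulated data are hypothetical, and `y_star` stands in for the response net of the state contribution.

```python
import numpy as np


def draw_reg_coeff(y_star, X, sigma2, prior_mean, prior_prec, rng):
    """Draw beta from its Gaussian full conditional.

    Model: y_star = X @ beta + e, e ~ N(0, sigma2 * I)
    Prior: beta ~ N(prior_mean, inv(prior_prec))
    """
    post_prec = prior_prec + X.T @ X / sigma2
    post_cov = np.linalg.inv(post_prec)
    post_cov = 0.5 * (post_cov + post_cov.T)  # enforce exact symmetry
    post_mean = post_cov @ (prior_prec @ prior_mean + X.T @ y_star / sigma2)
    return rng.multivariate_normal(post_mean, post_cov)


rng = np.random.default_rng(0)
n, k = 500, 2
X = rng.normal(size=(n, k))
true_beta = np.array([1.5, -0.7])
y_star = X @ true_beta + rng.normal(0.0, 0.5, size=n)

# Weak zero-mean prior; error variance fixed at its true value for the demo
draws = np.array([
    draw_reg_coeff(y_star, X, 0.25, np.zeros(k), 1e-6 * np.eye(k), rng)
    for _ in range(1000)
])

print(draws.mean(axis=0))
```

In a full Gibbs sampler, `sigma2` and `y_star` would change at every iteration, since both depend on the most recent draws of the variances and the state vector.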

By assumption, the elements in