Puneet Mangla*, Shivam Chandhok*, Milan Aggarwal, Vineeth N Balasubramanian, Balaji Krishnamurthy (*Equal Contribution)

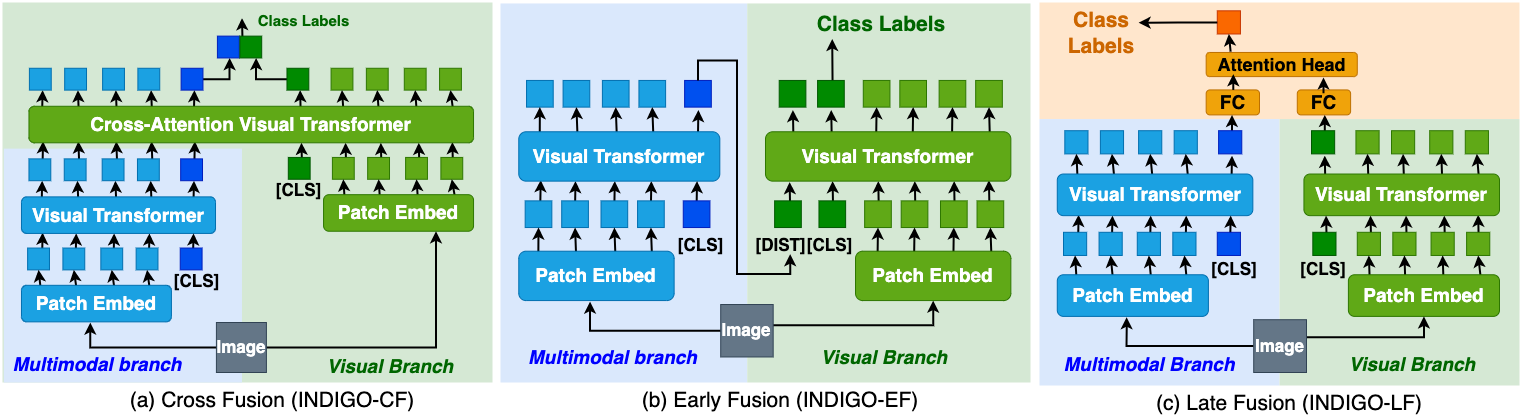

Abstract: For models to generalize under unseen domains (a.k.a. domain generalization), it is crucial to learn feature representations that are domain-agnostic and capture the underlying semantics that make up an object. Recent advances in weakly supervised vision-language models (such as CLIP) have shown their ability to understand objects by capturing semantic characteristics that generalize across different domains. Hence, it becomes important to investigate how the semantic knowledge present in their representations can be effectively incorporated and utilized for domain generalization. Motivated by this, we study how semantic information from existing pre-trained multimodal networks can be leveraged in an "intrinsic" way to make systems generalize under unseen domains. We propose IntriNsic multimodality for DomaIn GeneralizatiOn (INDIGO), a simple and elegant framework that leverages the intrinsic modality present in pre-trained multimodal networks to enhance generalization to unseen domains at test time. We experiment on several Domain Generalization settings (ClosedDG, OpenDG, and Limited sources) and show state-of-the-art generalization performance on unseen domains. Further, we provide a thorough analysis to develop a holistic understanding of INDIGO.

Refer to requirements.txt to install all Python dependencies.

Install CLIP

pip install ./CLIP

--target <Target Domain>

--name <Name of experiment>

--model <Backbone model>

--teacher <Teacher model>

CUDA_VISIBLE_DEVICES=2,3 python main.py --dg --target clipart --config_file configs/zsl+dg/clipart.json --dataset domainnet --name test --runs 5 --method class_token_distill --model vit_small_hybrid --teacher clip_vit_b

Load the teacher model using main.py, then run:

CUDA_VISIBLE_DEVICES=2,3 python main.py --dg --target clipart --config_file configs/zsl+dg/clipart.json --dataset domainnet --name test --runs 5 --method distill --model vit_small_hybrid

CUDA_VISIBLE_DEVICES=2,3 python main.py --dg --target clipart --config_file configs/zsl+dg/clipart.json --dataset domainnet --name test --runs 5 --method standard --model vit_small_hybrid
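The three commands above differ only in `--method` and in whether `--teacher` is passed. As a convenience, the following is a minimal sketch of a helper that assembles the same argument lists so they can be printed or handed to `subprocess.run`. The helper name `build_command` is hypothetical and not part of this repository, and the per-target config path pattern `configs/zsl+dg/<target>.json` is an assumption inferred from the single `clipart` example.

```python
import shlex


def build_command(target, method, teacher=None, dataset="domainnet",
                  model="vit_small_hybrid", name="test", runs=5):
    """Return the argument list for one main.py run.

    Flags and default values are copied from the example commands in this
    README. The config path pattern is an assumption (only clipart.json
    appears in the examples).
    """
    cmd = [
        "python", "main.py", "--dg",
        "--target", target,
        "--config_file", f"configs/zsl+dg/{target}.json",  # assumed pattern
        "--dataset", dataset,
        "--name", name,
        "--runs", str(runs),
        "--method", method,
        "--model", model,
    ]
    # Only the class_token_distill example passes a teacher explicitly.
    if teacher is not None:
        cmd += ["--teacher", teacher]
    return cmd


if __name__ == "__main__":
    runs = [
        ("class_token_distill", "clip_vit_b"),
        ("distill", None),
        ("standard", None),
    ]
    for method, teacher in runs:
        # Print a copy-pasteable shell command for each method.
        print(shlex.join(build_command("clipart", method, teacher)))
```

To select GPUs as in the examples, prefix the printed command with `CUDA_VISIBLE_DEVICES=2,3` or set that variable in the environment passed to `subprocess.run`.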

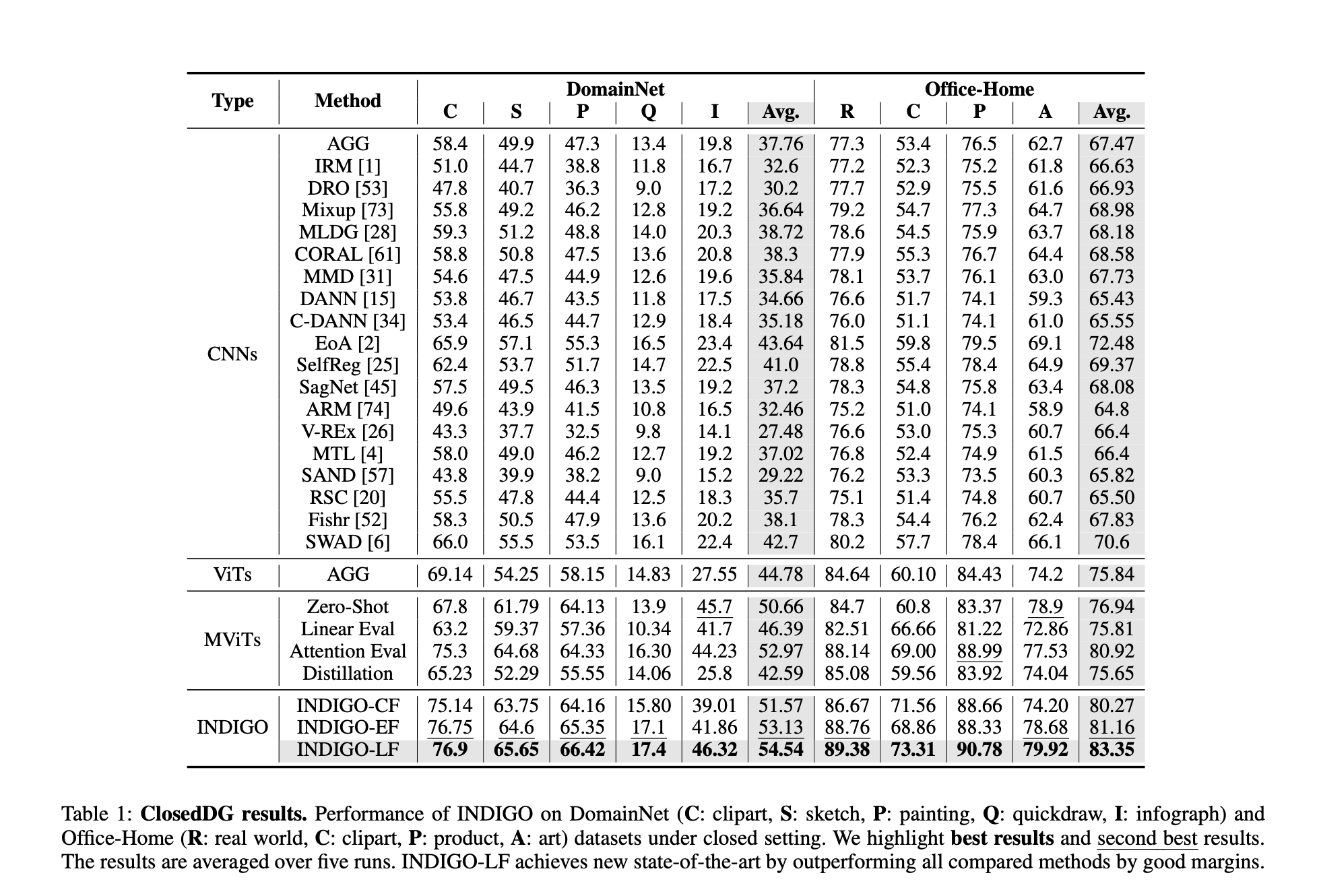

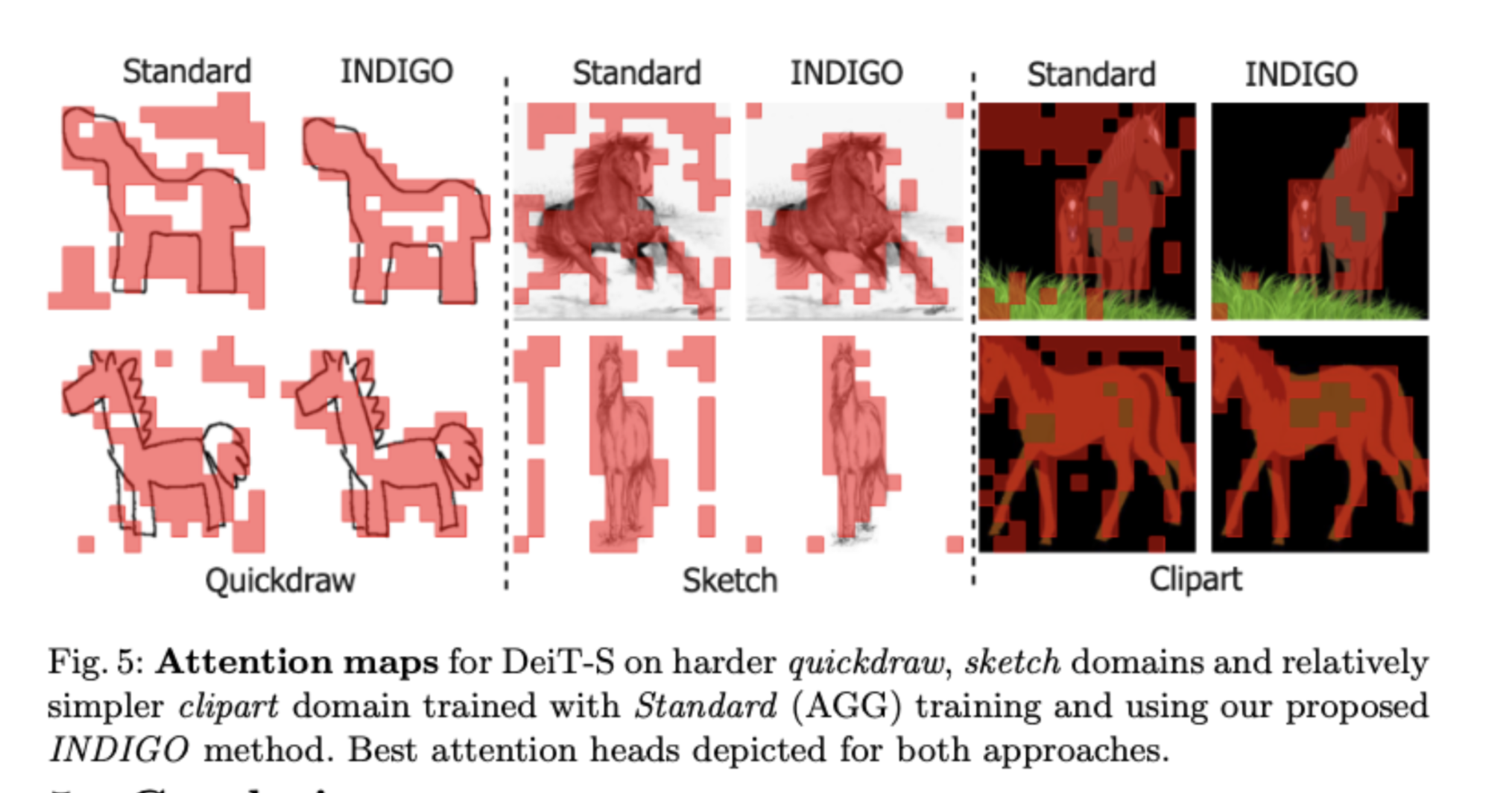

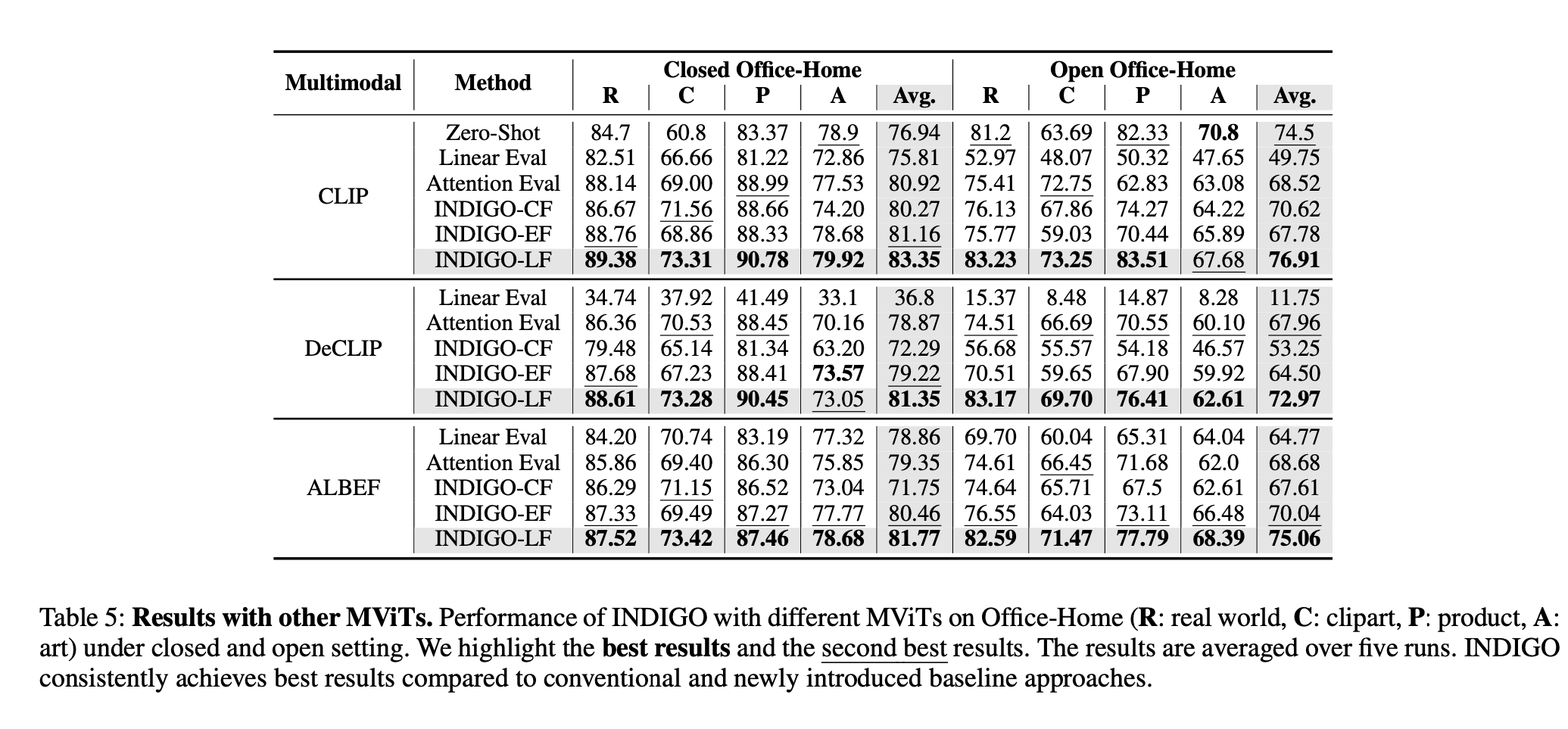

Results of our proposed method, INDIGO, on Domain Generalization and OpenDG, along with attention visualizations and analysis.

Domain Generalization (ClosedDG) performance of INDIGO in comparison with state-of-the-art Domain Generalization methods on the DomainNet and Office-Home datasets.

Attention Visualizations

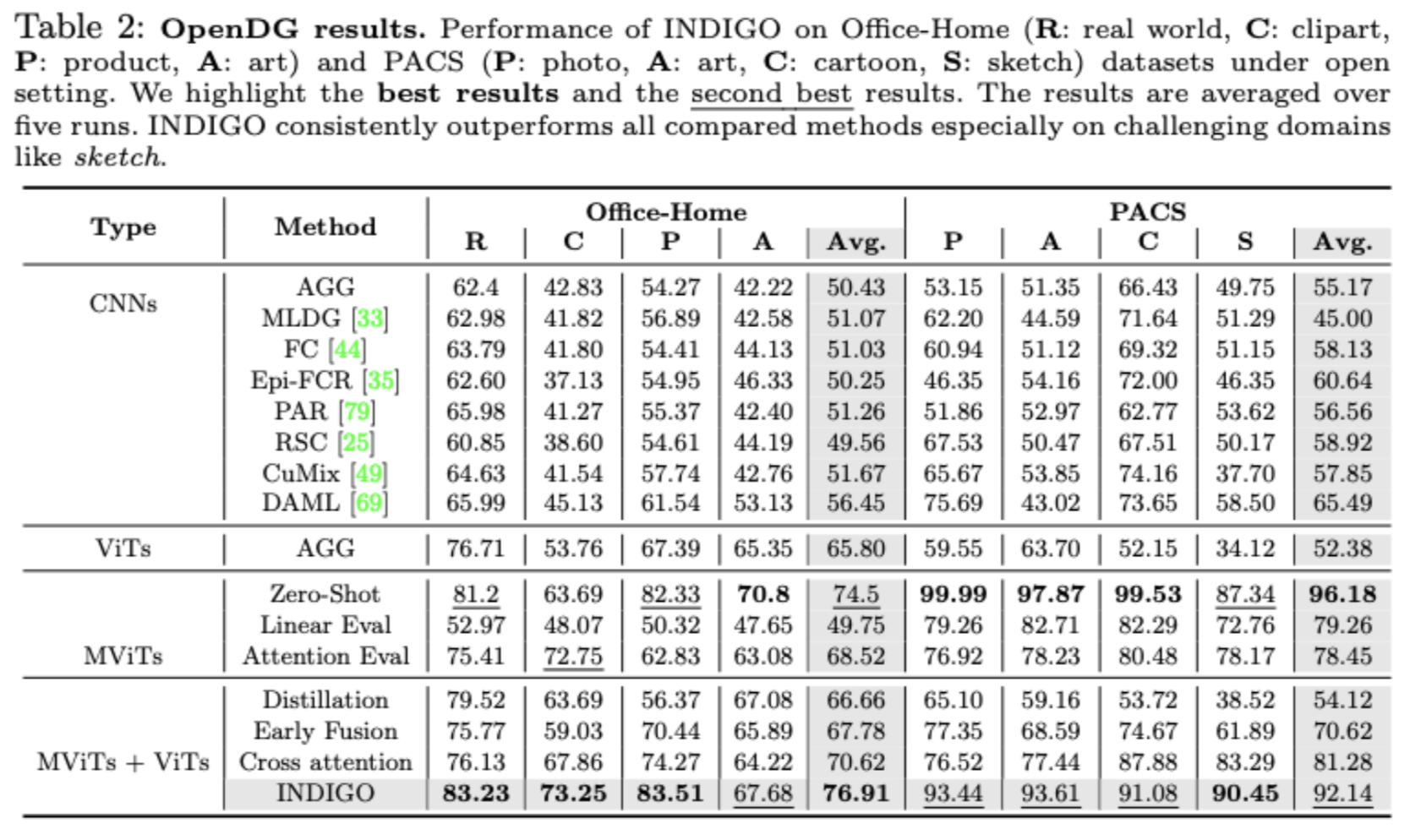

Open Domain Generalization (OpenDG) performance of INDIGO in comparison with state-of-the-art Domain Generalization methods on benchmark datasets.

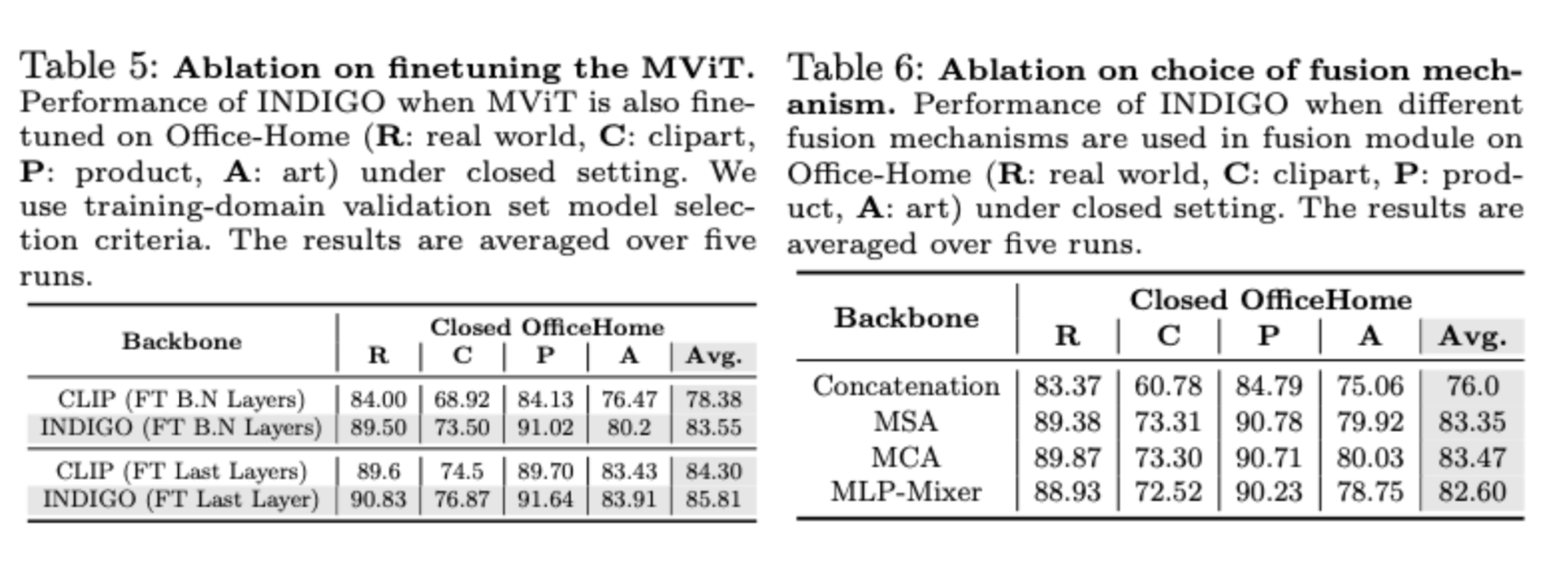

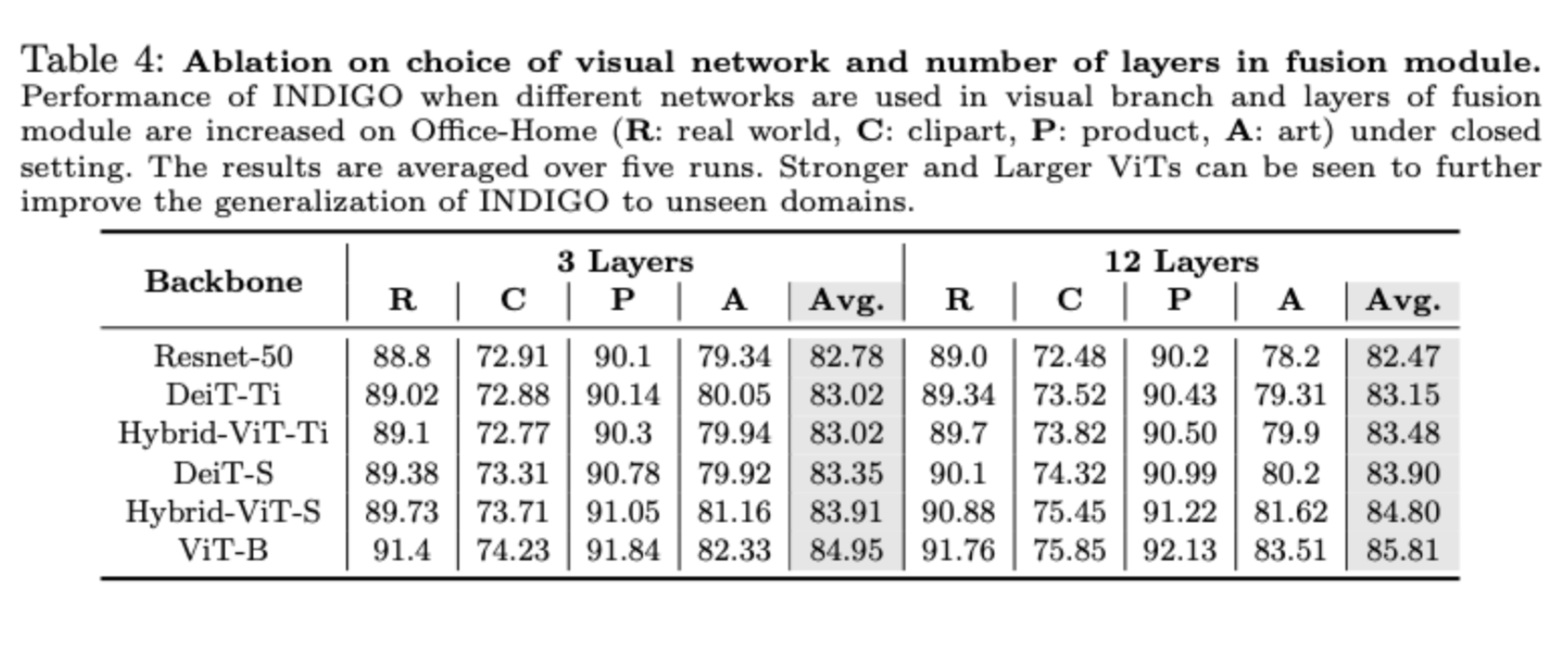

Analysis and Ablation Studies (OpenDG)

@inproceedings{indigo2022,
  title={INDIGO: Intrinsic Multimodality for Domain Generalization},
  author={P Mangla and S Chandhok and M Aggarwal and VN Balasubramanian and B Krishnamurthy},
  booktitle={ECCV'22- OOD-CV Workshop},
  month={June},
  year={2022}
}

Our code is based on the CuMix and timm repositories. We thank the authors for releasing their code. If you use our model, please consider citing these works as well.