Checkout the test video of this Rover challenge on my YouTube playlist.

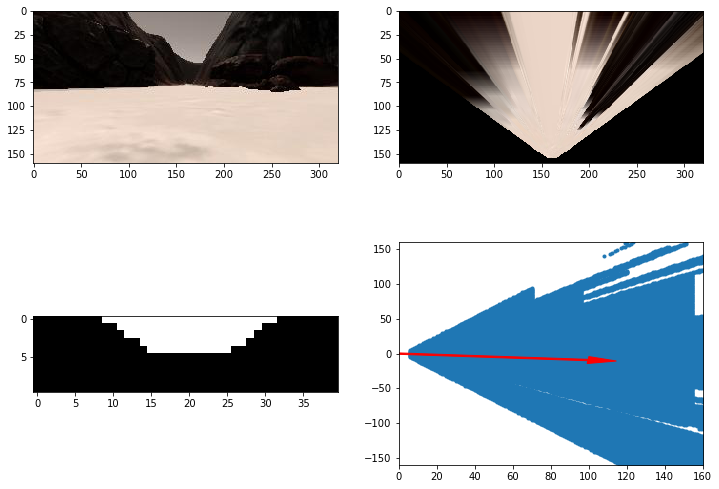

1. Ran the functions provided in the well designed Jupyter Notebook that helped even afterwards to rapidly design solutions for the autonomous driving. Defined functions for:

- Navigation thresholding

- Obstacle thresholding

- Rock Sample thresholding

2. The process_image() function is populated with the appropriate analysis steps to identify the pixels corresponding to

-

Navigable terrain using

color_thresh_navigable -

Obstacle (inversion of the navigable terrain) using the

obstacle_thresh_obstacle -

Golden Rock sample using the

color_thresh_goldento find the pixels within the RGB range of the golden rocks.

Finally, map them onto the ground truth map of the world. The output images from the process_image() are used to create video output using the moviepy functions.

1. The perception_step is derived from the process_image function in the Notebook. It gave a 30% improvement when I considered only a cropped out middle portion of the vision_image rather than the whole image.

h, w = navigable.shape[:2]

delta = 50

region_of_interest = navigable[h - delta: h,\

int(w/2) - delta : int(w/2) + delta ]

navigable_x , navigable_y = rover_coords(region_of_interest)steer_offset = -15

Rover.steer = np.clip(np.mean(Rover.nav_angles * 180/np.pi + steer_offset) \

, -15, 15)2.Detect if the rover is stuck by checking if the Rover state variable Rover.stuck if above the defined threshold Rover.stuck_time_threshold and start turning right to get out of stuck state.

if Rover.vel < 0.1 and Rover.vel > -1.0:

Rover.stuck_time_threshold += 1

Rover.throttle = Rover.throttle_set

if Rover.stuck_time_threshold > 100:

Rover.stuck = True

if Rover.stuck:

Rover.throttle = 0

# Set brake to stored brake value

Rover.brake = Rover.brake_set

Rover.steer = 0

Rover.mode = 'stop'self.stop_forward = 150 # Initiate stopping sooner to prevent bumping into obstacle

self.go_forward = 400 # Threshold to go forward again

self.throttle_set = 0.6 # Increased throttle setting when accelerating for faster navigationcv2.putText(map_add," Nav_angle len: "+str((len(Rover.nav_angles))), (0, 155), \

cv2.FONT_HERSHEY_COMPLEX, 0.4, (255, 255, 255), 1)

cv2.putText(map_add," Mode: "+str(Rover.mode), (0, 145), \

cv2.FONT_HERSHEY_COMPLEX, 0.4, (255, 255, 255), 1)2. Launching in autonomous mode the rover can navigate and map autonomously. It can be improved by using:

-

HSV thresholding.

-

Using A* for path planning.

-

Better way to manage the state of the

Rover. -

PID tuning for the constants

threshold_set,go_forward,stop_forward.

-

Resolution:

1024 x 768 -

Graphis quality: Good

-

Mapping: 99.9% -

Fidelity: 75.1% -

Time Taken: 420 seconds @ 20

Frames per second