This is the implementation of paper: "Logic-RAG: Augmenting Large Multimodal Models with Visual-Spatial Knowledge for Road Scene Understanding" (accepted to ICRA2025) [Link]

Test Logic-RAG on Google Colab:

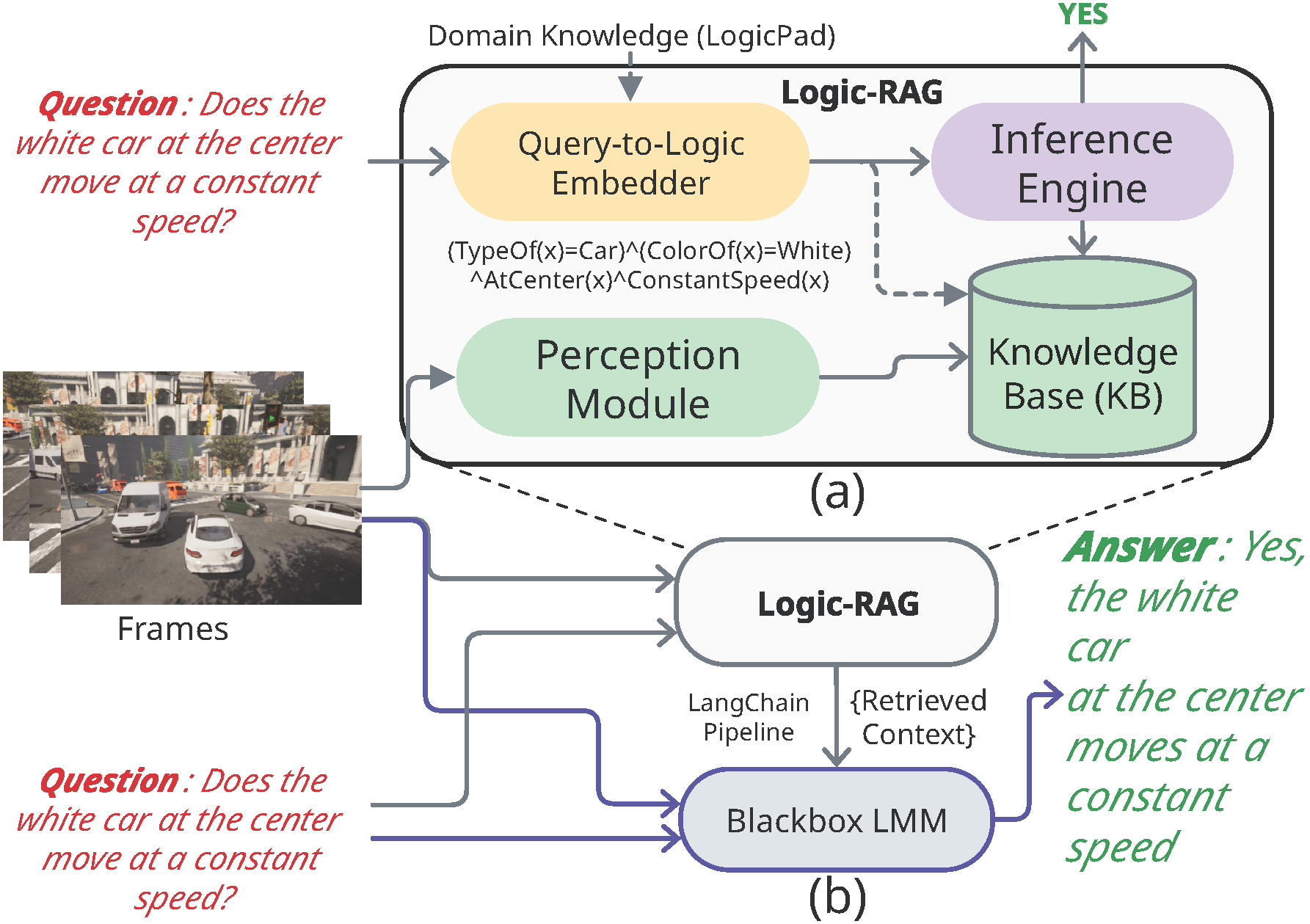

Logic-RAG is a novel Retrieval-Augmented Generation framework that enhances Large Multimodal Models' (LMMs) spatial reasoning capabilities in autonomous driving contexts. By constructing a dynamic knowledge base of object-object relationships in first-order logic (FOL), Logic-RAG significantly improves the accuracy of visual-spatial queries in driving scenarios.

This repository contains the complete implementation of the Logic-RAG framework, including the perception module, query-to-logic embedder, and logical inference engine. We provide inference scripts for our proposed framework along with baseline evaluation scripts for GPT-4V and Claude 3.5 models to facilitate comparison.

Logic-RAG framework for visual-spatial reasoning:

- Python 3.9

- PyTorch 2.4.1

- CUDA compatible GPU

We provide a Conda environment file for easy setup:

# Clone the repository

git clone https://github.com/Imran2205/LogicRAG.git

cd LogicRAG

# Create and activate conda environment

conda env create -f environment.yaml

conda activate lragAfter activating the environment, compile the following script for the Mask2Former model:

cd perception_module/segmentation/Mask2Former-mod/modeling/pixel_decoder/ops

sh make.shDownload all the pre-computed knowledge base for KITTI and model weights from the following link: Pre-trained Models & KB (Google Drive)

Extract the downloaded file to the root of this repository.

Alternatively, you can download and extract the file using the following commands:

gdown 14vRnzCDD9rw63Os3w-Ii4ZF_352y__km

unzip LogicRAG_Data.zipDownload the left color images of KITTI object tracking dataset from KITTI website.

To run the inference using precomputed knowledge base and tracker trajectories, run:

cd kb_framework/kb_inference

python inference_in_kb.py \

--csv ../kitti_questions/kitti_que.csv \

--fol_trans_csv ./translated_queries/question_query_kitti_llama33.csv \

--kb_dir ../../LogicRAG_Data/precomputed_knowledge_base/kb_out_kitti \

--tracker_dir ../../LogicRAG_Data/tracker_trajectories/track_out_kitti \

--output logic_rag_response.csvThe Logic-RAG pipeline consists of several components that need to be run sequentially to generate the knowledge base and perform inference.

steps.

Generate semantic segmentation masks for scene understanding using the following commands:

cd perception_module/segmentation/Mask2Former-mod

mkdir ../../../track_in_kitti_test/

python demo/demo.py --config-file ./configs/cityscapes/panoptic-segmentation/swin/maskformer2_swin_large_IN21k_384_bs16_90k.yaml \

--input /data/datasets/KITTI/MOT/testing/image_02/*/*.png \

--output ../../../track_in_kitti_test/ \

--opts MODEL.WEIGHTS ../../../LogicRAG_Data/model_weights/Mask2Former/model_final_064788.pklTo generate depth maps for the scene, run:

cd perception_module/depth/PixelFormer-mod

python pixelformer/test_lrag.py configs/arguments_test_kitti_depth.txtThe configuration file should contain:

--model_name pixelformer_kittieigen

--encoder large07

--dataset kitti

--input_height 352

--input_width 1216

--max_depth 80

--do_kb_crop

--data_path /data/datasets/KITTI/MOT/testing

--filenames_file ./data_splits/kitti_lrag_test.txt

--checkpoint_path ../../../LogicRAG_Data/model_weights/PixelFormer/kitti.pth

--output ../../../track_in_kitti_test/

Please replace data_path value with the actual path to KITTI MOT test dataset.

Note: You can either use ./data_splits/kitti_lrag_test.txt as filenames_file without modification

or

ensure your filenames_file contains lines in the format:

image_02/0000/000000.png

image_02/0000/000001.png

Or,

image_02/0000/000000.png image_02/0000/000000.png 1000.0

image_02/0000/000001.png image_02/0000/000001.png 1000.0

Run the following commands to generate optical flow data:

cd perception_module/optical_flow/co-tracker

python demo_frame.py \

--dataset_root /data/datasets/KITTI/MOT/testing/image_02 \

--output_dir ../../../track_in_kitti_test/ \

--checkpoint ../../../LogicRAG_Data/model_weights/CoTracker3/scaled_offline.pth \

--sample_interval 5 \

--dataset KITTIExtract static key points to serve as anchors for multilateration during tracking:

cd perception_module/tracking

python key_point_extractor.py \

--data_dir ../../track_in_kitti_test/ \

--output_dir ../../track_in_kitti_test/ \

--distance_threshold 0.8 \

--sample_interval 20 \

--max_depth 50 \

--dataset KITTITrack objects across frames and save the trajectories to ../../track_out_kitti_test:

cd perception_module/tracking

python new_tracker.py \

--data_dir ../../track_in_kitti_test/ \

--output_dir ../../track_out_kitti_test \

--type_model_path ../../LogicRAG_Data/model_weights/TypeDetector/best_type_model.pth \

--color_model_path ../../LogicRAG_Data/model_weights/ColorDetector/best_color_model.pth \

--num_processes 30 \

--rgb_base_dir /data/datasets/KITTI/MOT/testing/image_02 \

--dataset KITTIGenerate the FOL knowledge base from tracking data and save to ../../kb_out_kitti_test:

cd kb_framework/kb_generator

python final_KB_gen.py \

--tracker_dir ../../track_out_kitti_test \

--output_dir ../../kb_out_kitti_test \

--logic_pad ../LogicPad/LogicPad.yaml \

--window_size 10 \

--dataset KITTI \

--num_processes 30Use the notebook kb_framework/kb_inference/fol_translate_llama.ipynb to translate natural language questions

to first-order logic. The translations will be saved to a CSV file

(e.g., ./kb_framework/kb_inference/translated_queries/question_query_kitti_llama33.csv).

Note: We precomputed the translations csv file. so that we do not need to load LlaMa 3 each time we run inference.

Run inference using the generated knowledge base:

cd kb_framework/kb_inference

python inference_in_kb.py \

--csv ../kitti_questions/kitti_que.csv \

--fol_trans_csv ./translated_queries/question_query_kitti_llama33.csv \

--kb_dir ../../kb_out_kitti_test \

--tracker_dir ../../track_out_kitti_test \

--output logic_rag_response.csvTo run the inference using precomputed knowledge base and tracker trajectories run:

cd kb_framework/kb_inference

python inference_in_kb.py \

--csv ../kitti_questions/kitti_que.csv \

--fol_trans_csv ./translated_queries/question_query_kitti_llama33.csv \

--kb_dir ../../LogicRAG_Data/precomputed_knowledge_base/kb_out_kitti \

--tracker_dir ../../LogicRAG_Data/tracker_trajectories/track_out_kitti \

--output logic_rag_response.csvGenerate responses using GPT-4V for comparison:

cd baselines

python get_gpt4_response.py \

--csv /path/to/kitti_questions/kitti_que.csv \

--frames_dir /path/to/kitti/MOT/testing/image_02 \

--output gpt4v_response.csv \

--log_dir ./logsGenerate responses using Claude 3.5 for comparison:

cd baselines

python get_claude_response.py \

--csv /path/to/kitti_questions/kitti_que.csv \

--frames_dir /path/to/kitti/MOT/testing/image_02 \

--output claude_response.csv \

--log_dir ./logsWe evaluate Logic-RAG on both synthetic and real-world driving scenes:

- Synthetic Driving Scenes: Generated using a controlled environment to test specific spatial reasoning capabilities.

- Real-World Driving Scenes: Derived from the KITTI Multi-Object Tracking dataset. It provides challenging real-world scenarios.

Logic-RAG significantly improves spatial reasoning accuracy:

- Baseline LMMs: GPT-4V and Claude 3.5 achieved only 55% accuracy on synthetic scenes and <75% on real-world scenes.

- Logic-RAG augmented: Increased accuracies to >80% on synthetic and >90% on real-world scenes.

- Ablation study: Even without logical inference, fact-based context from Logic-RAG improved accuracy by 15%.

If you find our work useful in your research, please consider citing:

@inproceedings{kabir2025logicrag,

title={Logic-RAG: Augmenting Large Multimodal Models with Visual-Spatial Knowledge for Road Scene Understanding},

author={Kabir, Imran and Reza, Md Alimoor and Billah, Syed},

booktitle={2025 IEEE International Conference on Robotics and Automation (ICRA)},

year={2025},

organization={IEEE}

}We thank the authors of the following projects whose code we have adapted or used: