This repo provides a simple template for building a demo chat app with transformers.js, LangChain.js, and Deep Chat.

    # clone git repository
    git clone https://github.com/shizheng-rlfresh/slm-rag.git

    # go to the directory and install dependencies
    cd slm-rag
    npm install

    # start a server on localhost
    npm run dev -- --open

    # build app
    npm run build

    # preview
    npm run preview

To import transformers models through `transformers.js`, you will need an `.onnx` model, e.g., `model.onnx` (preferably a quantized model, e.g., `model_quantized.onnx`). `transformers.js` recommends using the conversion script to convert a customized model, e.g., your own pretrained or fine-tuned model.

    # create a python virtual environment
    python -m venv .venv

    # activate .venv and install required packages
    source .venv/bin/activate
    pip install -r requirements.txt

    # run the conversion script - <modelid>
    python -m scripts.convert --quantize --model_id <modelid>

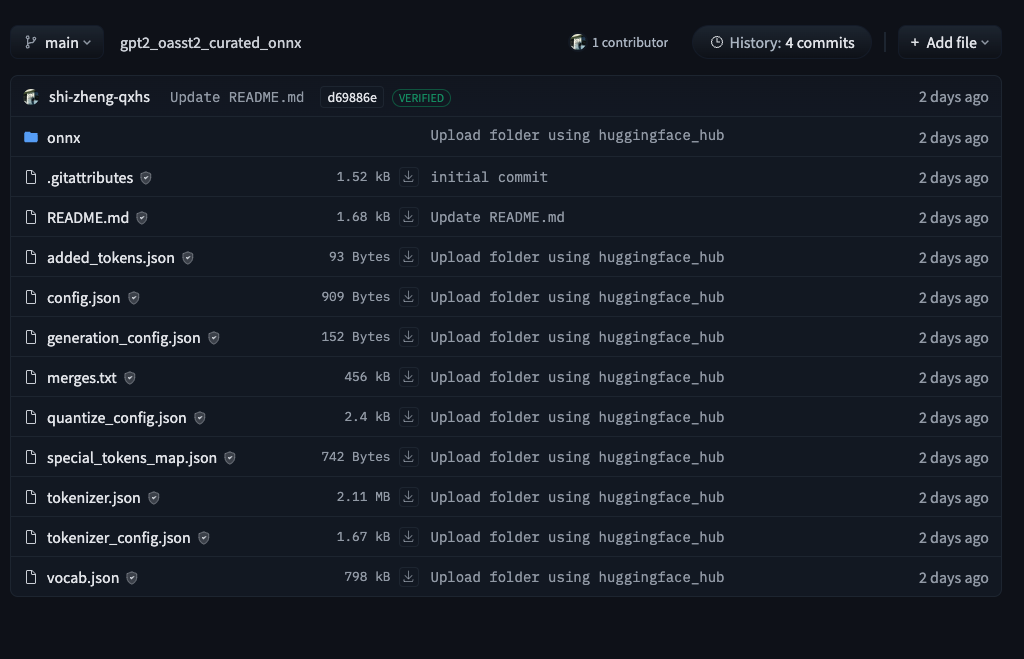

Push your custom model to the Hugging Face Hub and structure the repo files as follows, with your converted models placed in an `onnx` directory.

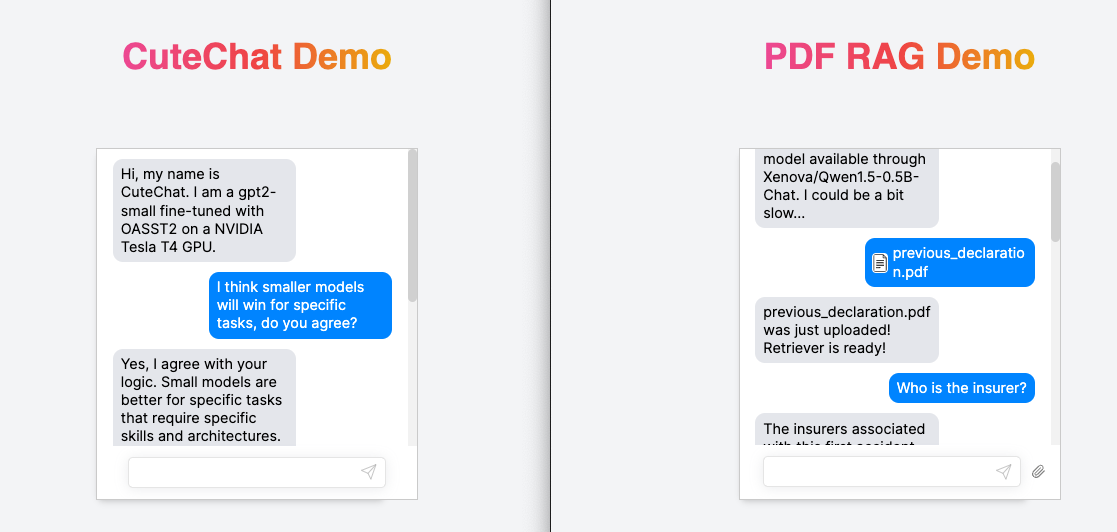

In this demo, we used a custom gpt2-small (124M parameters) fine-tuned on a conversational dataset, `oasst2`. This model was fine-tuned on an NVIDIA Tesla T4 GPU for 20 epochs.

    // import model from HuggingFace Hub
    import { pipeline } from '@xenova/transformers';

    // for CuteChat Demo, we used our own model
    const pipe = await pipeline('text-generation', 'shi-zheng-qxhs/gpt2_oasst2_curated_onnx');

    // for PDF RAG Demo, we used 'Qwen1.5-0.5B-Chat'
    // Note this could be a bit slow running on web
    // (named ragPipe here only so both declarations can share one snippet)
    const ragPipe = await pipeline('text-generation', 'Xenova/Qwen1.5-0.5B-Chat');
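As a rough sketch of what calling either pipeline looks like: a text-generation pipeline in `transformers.js` is an async function that takes a prompt plus generation options and resolves to an array of objects with a `generated_text` field. The helper name `generateReply`, the default option values, and the assumption that the output may echo the prompt at its start are illustrative and not taken from this repo.

```javascript
// Hypothetical helper (not from the repo): run a text-generation pipeline
// and return only the newly generated text.
async function generateReply(pipe, prompt, options = {}) {
  // max_new_tokens and do_sample are generation options; the default
  // values below are arbitrary choices for this sketch.
  const output = await pipe(prompt, {
    max_new_tokens: 64,
    do_sample: false,
    ...options,
  });

  // The pipeline resolves to an array like [{ generated_text: '...' }].
  const text = output[0].generated_text;

  // Assumption: the generated text may start with the prompt; drop it if so.
  const reply = text.startsWith(prompt) ? text.slice(prompt.length) : text;
  return reply.trim();
}
```

With a pipeline created as shown earlier, this could be called as `await generateReply(pipe, 'Hello, how are you?')`. Taking the pipeline as a parameter keeps the helper easy to try with a stub before downloading a model.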

You can either use `pipeline` or `model.generate`, as you would with `transformers` in Python. In `chat.js`, we used custom functions to process the user input and model generations, which can be modified to suit your own needs. `Deep Chat` allows using a `handler` in `request` to use models imported directly from `transformers.js`. `chat.svelte` shows an example of how we handled custom functions, as well as using `requestInterceptor` and `responseInterceptor` to process the (user) input and (model-generated) output.
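The `handler` wiring described above can be sketched roughly as follows. A Deep Chat `handler` receives the outgoing request body and a `signals` object, and replies by calling `signals.onResponse`. The factory name `makeHandler`, the `replyFn` parameter, and the choice to use only the latest message as the prompt are assumptions for illustration; the actual logic in `chat.js` and `chat.svelte` may differ.

```javascript
// Hypothetical factory (not from the repo): builds a Deep Chat `handler`
// from any async function that maps a prompt string to a reply string.
function makeHandler(replyFn) {
  return async (body, signals) => {
    try {
      // Deep Chat passes the outgoing messages in body.messages;
      // this sketch uses the text of the most recent one as the prompt.
      const messages = body.messages ?? [];
      const last = messages[messages.length - 1];
      const prompt = (last?.text ?? '').trim();

      if (!prompt) {
        signals.onResponse({ error: 'Empty message' });
        return;
      }

      const reply = await replyFn(prompt);

      // Hand the generated text back to the chat window.
      signals.onResponse({ text: reply });
    } catch (err) {
      // Surface failures (e.g. model loading errors) in the chat UI.
      signals.onResponse({ error: String(err) });
    }
  };
}
```

On the component, this might be attached as `request = { handler: makeHandler(replyFn) }`, where `replyFn` wraps a `transformers.js` pipeline call. Injecting `replyFn` keeps the Deep Chat wiring separate from model-specific prompt handling.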

`langchain.js` does not support LLMs through `transformers.js` as of now (there are open issues on custom LLMs, and it is not hard to implement a custom LLM). In this demo code, we chose to use `vectorStore` from `langchain.js` (see `ragloader.js`) and `pipeline` from `transformers.js` (see `ragchat.js`).

The RAG component is implemented in `rag.svelte` with `Deep Chat`.
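The split between retrieval and generation can be sketched as below: retrieve the most similar chunks from a vector store, fold them into a prompt, and pass that prompt to a text-generation function. LangChain.js vector stores expose `similaritySearch(query, k)`, which resolves to documents with a `pageContent` field. The function names, the prompt template, and the default `k = 3` are assumptions for illustration and are not taken from `ragloader.js` or `ragchat.js`.

```javascript
// Hypothetical helper (not from the repo): format retrieved chunks and the
// user question into a single prompt. The template is an arbitrary choice.
function buildRagPrompt(contextChunks, question) {
  const context = contextChunks.map((c, i) => `[${i + 1}] ${c}`).join('\n');
  return (
    'Answer the question using only the context below.\n\n' +
    `Context:\n${context}\n\n` +
    `Question: ${question}\nAnswer:`
  );
}

// Hypothetical helper (not from the repo): retrieval-augmented answering.
// `vectorStore` is any object with a LangChain-style similaritySearch method;
// `replyFn` is any async function mapping a prompt string to a reply string.
async function ragAnswer(vectorStore, replyFn, question, k = 3) {
  // Fetch the k chunks most similar to the question.
  const docs = await vectorStore.similaritySearch(question, k);

  // Each returned document carries its text in `pageContent`.
  const prompt = buildRagPrompt(docs.map((d) => d.pageContent), question);

  return replyFn(prompt);
}
```

A chat model such as `Qwen1.5-0.5B-Chat` would likely do better with its own chat template than with this plain-text prompt, so treat the template as a placeholder.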