This repository organizes papers, code, and other resources related to unified multimodal models.

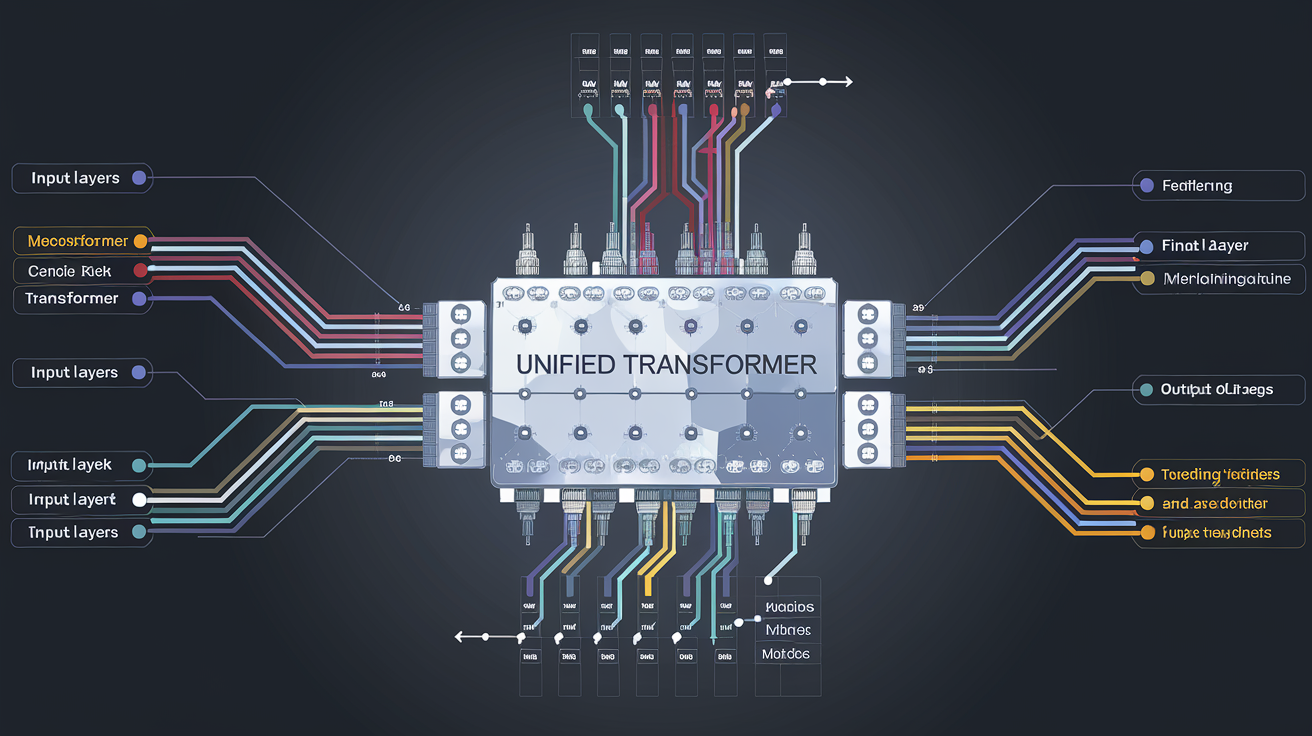

Traditional multimodal models fall broadly into two types: multimodal understanding models and multimodal generation models. Unified multimodal models aim to integrate both tasks within a single framework, and the community also refers to them as Any-to-Any models. They accept multimodal inputs and produce multimodal outputs, so one model can both interpret and generate content across modalities such as text, images, audio, and video.

If you have any suggestions (missing papers, new papers, or typos), please feel free to edit the list and submit a pull request. Simply letting us know the title of a paper is also a great contribution. You can do this by opening an issue or by contacting us directly via email.

- Open-source Toolboxes and Foundation Models

- Evaluation Benchmarks and Metrics

- Unified Multimodal Understanding and Generation

- Janus: Decoupling Visual Encoding for Unified Multimodal Understanding and Generation (Oct. 2024, arXiv)
- UniMuMo: Unified Text, Music, and Motion Generation (Oct. 2024, arXiv)
- Emu3: Next-Token Prediction is All You Need (Sep. 2024, arXiv)
- MIO: A Foundation Model on Multimodal Tokens (Sep. 2024, arXiv)
- MonoFormer: One Transformer for Both Diffusion and Autoregression (Sep. 2024, arXiv)
- VILA-U: a Unified Foundation Model Integrating Visual Understanding and Generation (Sep. 2024, arXiv)
- Show-o: One Single Transformer to Unify Multimodal Understanding and Generation (Aug. 2024, arXiv)
- Transfusion: Predict the Next Token and Diffuse Images with One Multi-Modal Model (Aug. 2024, arXiv)
- ANOLE: An Open, Autoregressive, Native Large Multimodal Models for Interleaved Image-Text Generation (Jul. 2024, arXiv)
- X-VILA: Cross-Modality Alignment for Large Language Model (May 2024, arXiv)
- Chameleon: Mixed-Modal Early-Fusion Foundation Models (May 2024, arXiv)
- SEED-X: Multimodal Models with Unified Multi-granularity Comprehension and Generation (Apr. 2024, arXiv)
- Mini-Gemini: Mining the Potential of Multi-modality Vision Language Models (Mar. 2024, arXiv)
- AnyGPT: Unified Multimodal LLM with Discrete Sequence Modeling (Feb. 2024, arXiv)
- World Model on Million-Length Video And Language With Blockwise RingAttention (Feb. 2024, arXiv)
- Video-LaVIT: Unified Video-Language Pre-training with Decoupled Visual-Motional Tokenization (Feb. 2024, arXiv)
- MM-Interleaved: Interleaved Image-Text Generative Modeling via Multi-modal Feature Synchronizer (Jan. 2024, arXiv)
- Unified-IO 2: Scaling Autoregressive Multimodal Models with Vision, Language, Audio, and Action (Dec. 2023, arXiv)
- Emu2: Generative Multimodal Models are In-Context Learners (Dec. 2023, CVPR)
- Gemini: A Family of Highly Capable Multimodal Models (Dec. 2023, arXiv)
- VL-GPT: A Generative Pre-trained Transformer for Vision and Language Understanding and Generation (Dec. 2023, arXiv)
- DreamLLM: Synergistic Multimodal Comprehension and Creation (Dec. 2023, ICLR)
- Making LLaMA SEE and Draw with SEED Tokenizer (Oct. 2023, ICLR)
- NExT-GPT: Any-to-Any Multimodal LLM (Sep. 2023, ICML)
- LaVIT: Unified Language-Vision Pretraining in LLM with Dynamic Discrete Visual Tokenization (Sep. 2023, ICLR)
- Planting a SEED of Vision in Large Language Model (Jul. 2023, arXiv)
- Emu: Generative Pretraining in Multimodality (Jul. 2023, ICLR)
- CoDi: Any-to-Any Generation via Composable Diffusion (May 2023, NeurIPS)
- Multimodal Unified Attention Networks for Vision-and-Language Interactions (Aug. 2019)