This is the official repository of the Exo2Ego-V paper.

Jia-Wei Liu*, Weijia Mao*, Zhongcong Xu, Jussi Keppo, Mike Zheng Shou

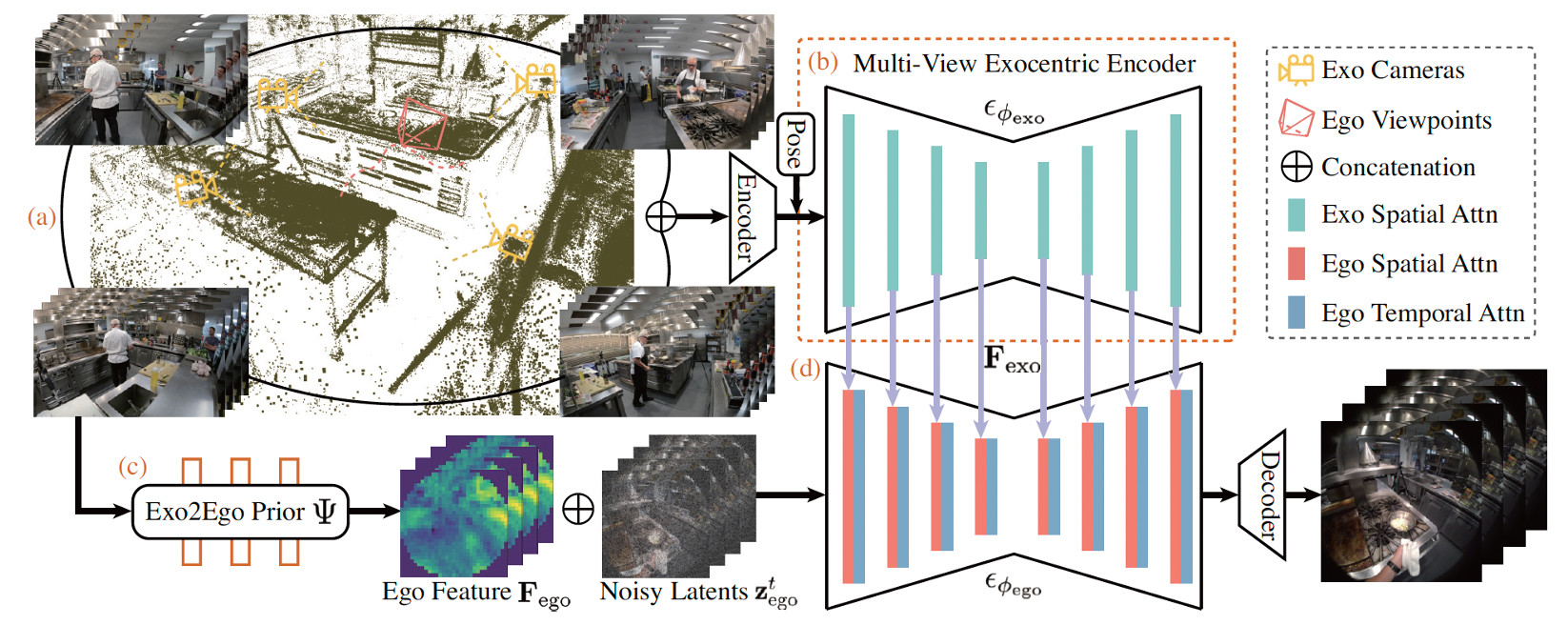

TL;DR: A novel exocentric-to-egocentric video generation method for challenging daily-life skilled human activities.

git clone https://github.com/showlab/Exo2Ego-V.git

cd Exo2Ego-V

pip install -r requirements.txt

python tools/download_weights.py

Model weights should be placed under ./pretrained_weights.
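Before moving on, it can help to confirm that the download actually left files under ./pretrained_weights. The snippet below is a minimal sketch of such a check, not part of the repository: the function name `weights_ready` is hypothetical, and it only tests that the folder holds at least one file, since the expected checkpoint names are not listed here.

```python
from pathlib import Path


def weights_ready(weights_dir: str = "./pretrained_weights") -> bool:
    """Return True if the directory exists and contains at least one file.

    Hypothetical helper: it does not validate which checkpoints are present.
    """
    root = Path(weights_dir)
    if not root.is_dir():
        return False
    # rglob("*") also walks subfolders, since checkpoints may be nested.
    return any(p.is_file() for p in root.rglob("*"))


if __name__ == "__main__":
    print("weights found" if weights_ready() else "weights missing")
```

Run it from the repository root so that the relative path resolves to the folder the download script is expected to fill.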

Please refer to https://ego-exo4d-data.org/ for downloading the Ego-Exo4D dataset. Our experiments utilized the downscaled takes at 448px on the shortest side.
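To make "448px on the shortest side" concrete, the sketch below computes the target frame size for a given input resolution while keeping the aspect ratio. It is an illustration only: the function name `downscale_size` and the 1920x1080 input are assumptions, and the dataset's own downscaled takes may round the longer side differently.

```python
from __future__ import annotations


def downscale_size(width: int, height: int, short_side: int = 448) -> tuple[int, int]:
    """Scale (width, height) so the shorter side equals short_side.

    The longer side is rounded to the nearest pixel; this rounding rule is
    an assumption, not something the dataset documentation specifies here.
    """
    if width <= 0 or height <= 0:
        raise ValueError("width and height must be positive")
    if width <= height:
        # Portrait or square: width is the shorter side.
        return short_side, round(height * short_side / width)
    # Landscape: height is the shorter side.
    return round(width * short_side / height), short_side


if __name__ == "__main__":
    # Hypothetical landscape input.
    print(downscale_size(1920, 1080))  # (796, 448)
```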

Please modify the data and output directories in each script.

python scripts_preprocess/extract_frames_from_videos.py

Please modify the data, input, and output directory in each script.

python scripts_preprocess/get_ego_pose.py

python scripts_preprocess/get_exo_pose.py

python scripts_preprocess/get_ego_intrinsics.py

Stage 1: Train Exo2Ego Spatial Appearance Generation. Please modify the data and pretrained model weights directory in configs/train/stage1.yaml.

bash train_stage1.sh

Stage 2: Train Exo2Ego Temporal Motion Video Generation. Please modify the data, pretrained model weights, and stage 1 model weights directory in configs/train/stage2.yaml.

bash train_stage2.sh

We release the 5 Pretrained Exo2Ego View Translation Prior checkpoints on link.
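Stage 2 reads the stage 1 model weights, so the two training scripts above have to run in that order. The snippet below is a rough sketch of a wrapper that enforces this, assuming both scripts sit in the current working directory and that configs/train/stage2.yaml already points at the location where stage 1 will write its weights. The function name `run_stages` and the `dry_run` flag are hypothetical and not part of the repository.

```python
import subprocess
from typing import List, Sequence

# Script names as given in the training instructions.
STAGE_SCRIPTS = ("train_stage1.sh", "train_stage2.sh")


def run_stages(scripts: Sequence[str] = STAGE_SCRIPTS,
               dry_run: bool = False) -> List[List[str]]:
    """Run each training script in order, stopping at the first failure.

    With dry_run=True the commands are only built and returned.
    """
    commands = [["bash", script] for script in scripts]
    if not dry_run:
        for cmd in commands:
            # check=True raises CalledProcessError on a non-zero exit code,
            # so stage 2 never starts after a failed stage 1.
            subprocess.run(cmd, check=True)
    return commands


if __name__ == "__main__":
    for cmd in run_stages(dry_run=True):
        print(" ".join(cmd))
```

Calling `run_stages()` without `dry_run` launches the actual training, so it is worth previewing the commands first.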

If you find our work helpful, please cite our paper.

@article{liu2024exocentric,

title={Exocentric-to-egocentric video generation},

author={Liu, Jia-Wei and Mao, Weijia and Xu, Zhongcong and Keppo, Jussi and Shou, Mike Zheng},

journal={Advances in Neural Information Processing Systems},

volume={37},

pages={136149--136172},

year={2024}

}

This repo is maintained by Jiawei Liu. Questions and discussions are welcome via jiawei.liu@u.nus.edu.

This codebase is based on MagicAnimate, Moore-AnimateAnyone, and PixelNeRF. Thanks for open-sourcing!

Copyright (c) 2025 Show Lab, National University of Singapore. All Rights Reserved. Licensed under the Apache License, Version 2.0 (see LICENSE for details).