Paper Link, Model Link, Dataset Link, COSMOE LINK


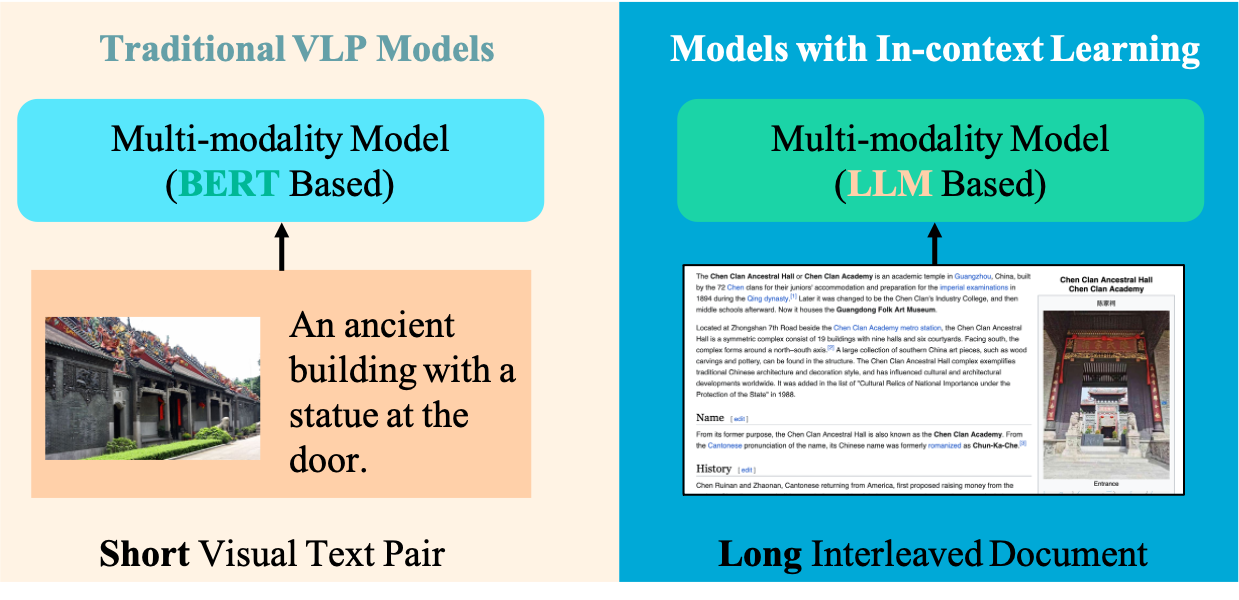

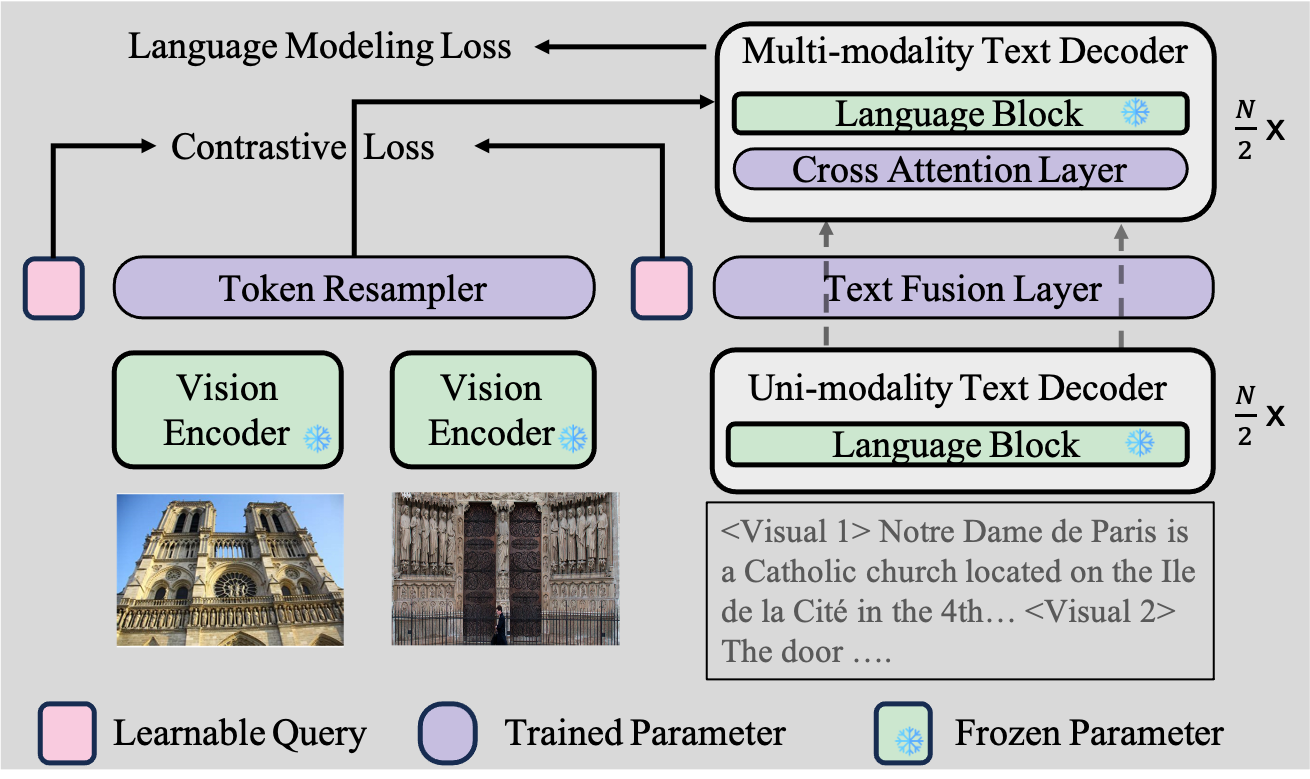

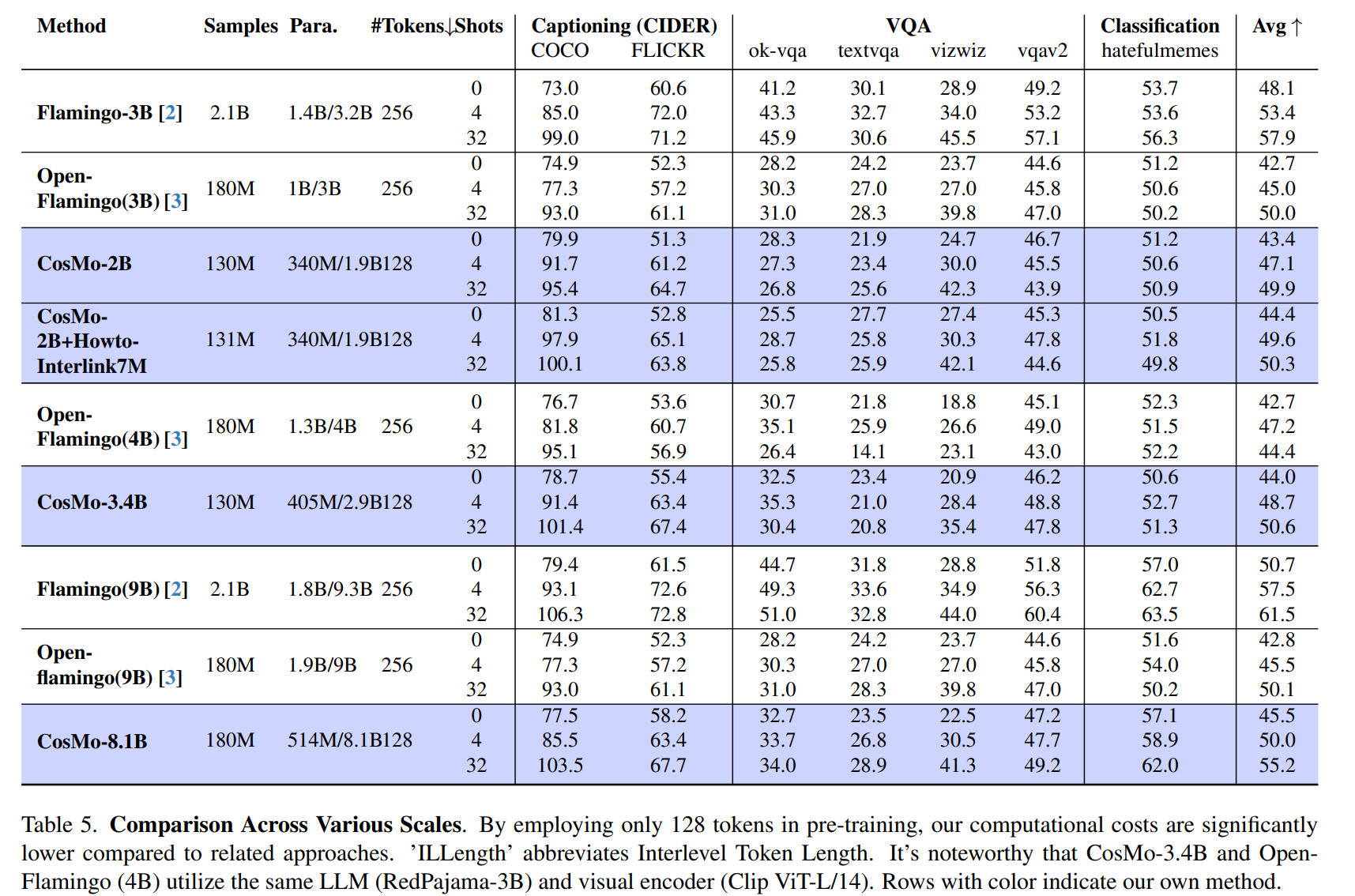

Cosmo is a fully open-source, comprehensive interleaved pre-training framework for image and video processing.

Its primary focus is in-context learning.

- 2/April/2024. The code now supports training on an MOE (Mixtral 8x7b) model. See the CosMOE Website for details.

- 2/Jan/2024. We provide preprocessing scripts to prepare the pre-training and downstream datasets.

- 2/Jan/2024. We release the Howto-Interlink7M dataset. See Huggingface List View for details.

- Provides an Interleaved Image/Video Dataloader.

- Integrates with WebDataset.

- Utilizes Huggingface Transformers along with Deepspeed for training.

- Incorporates contrastive loss.

- Enables few-shot evaluation.

- Supports instruction tuning.

- Scales from a 2B model to a 56B model.
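To illustrate the contrastive loss mentioned in the feature list, here is a minimal sketch of a symmetric image-text contrastive (InfoNCE-style) loss in plain Python. It is not taken from the Cosmo code base: the function names, the temperature value of 0.07, and the use of plain lists instead of tensors are all assumptions made for illustration.

```python
import math


def _normalize(vec):
    """Scale a non-zero vector to unit L2 norm."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]


def _diagonal_cross_entropy(logits):
    """Mean cross-entropy where row i's correct class is column i."""
    total = 0.0
    for i, row in enumerate(logits):
        row_max = max(row)  # subtract the max for numerical stability
        log_sum_exp = row_max + math.log(sum(math.exp(x - row_max) for x in row))
        total += log_sum_exp - row[i]
    return total / len(logits)


def contrastive_loss(image_embs, text_embs, temperature=0.07):
    """Symmetric contrastive loss over a batch of paired embeddings.

    image_embs[i] and text_embs[i] are assumed to form a matching pair;
    every other combination in the batch is treated as a negative.
    """
    images = [_normalize(v) for v in image_embs]
    texts = [_normalize(v) for v in text_embs]
    # Cosine similarity matrix, scaled by the temperature.
    logits = [
        [sum(a * b for a, b in zip(img, txt)) / temperature for txt in texts]
        for img in images
    ]
    logits_transposed = [list(col) for col in zip(*logits)]
    image_to_text = _diagonal_cross_entropy(logits)
    text_to_image = _diagonal_cross_entropy(logits_transposed)
    return 0.5 * (image_to_text + text_to_image)


if __name__ == "__main__":
    aligned = contrastive_loss([[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]])
    shuffled = contrastive_loss([[1.0, 0.0], [0.0, 1.0]], [[0.0, 1.0], [1.0, 0.0]])
    print(f"aligned pairs: {aligned:.4f}, shuffled pairs: {shuffled:.4f}")
```

The loss is close to zero when each image embedding is most similar to its own text embedding, and grows when the pairs are mismatched.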

See MODEL_CARD.md for more details.

See HowToInterlink7M.md.

See INSTALL.md.

See DATASET.md.

See PRETRAIN.md.

The MOE checkpoint is larger than 200GB and is time-consuming to download, so it is best to pre-cache it in a local directory with a tool such as AzCopy.

mkdir /tmp/pretrained_models

cd /tmp/pretrained_models

git clone https://huggingface.co/mistralai/Mixtral-8x7B-v0.1
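One way a training script could prefer the pre-cached copy is sketched below. The helper `resolve_model_path` is hypothetical and not part of this repository; it assumes the clone above produced a `Mixtral-8x7B-v0.1` directory containing a `config.json`, and it falls back to the hub identifier when that directory is missing. How Cosmo's own configs reference the checkpoint path is not shown here.

```python
from pathlib import Path


def resolve_model_path(model_id, cache_root="/tmp/pretrained_models"):
    """Return the local checkout of `model_id` if present, else `model_id` itself.

    Hypothetical helper: the local directory name is assumed to be the last
    component of the hub identifier, which is what `git clone` creates.
    """
    local_dir = Path(cache_root) / model_id.split("/")[-1]
    # A config.json is used as a cheap signal that the clone exists.
    if (local_dir / "config.json").is_file():
        return str(local_dir)
    return model_id


if __name__ == "__main__":
    print(resolve_model_path("mistralai/Mixtral-8x7B-v0.1"))
```

The returned string can then be passed wherever a model name or path is expected, so the same code runs on machines with and without the local cache.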

To reduce GPU memory usage during MOE training, we employ 4-bit training. Ensure 'bitsandbytes' is installed beforehand.

pip install -r requirements_mistral.txt
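As a rough illustration of why 4-bit weights help, the arithmetic below estimates the memory needed to hold the model weights alone at different precisions. The 56B parameter count is the nominal 8 x 7B figure used in this README and is an assumption; the true parameter count differs, and activations, optimizer state, and quantization metadata are not included.

```python
def weight_memory_gb(num_params, bits_per_param):
    """Memory for the weights only, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


# Nominal parameter count (assumption, see the note above).
NOMINAL_PARAMS = 56e9

if __name__ == "__main__":
    for bits in (16, 8, 4):
        size = weight_memory_gb(NOMINAL_PARAMS, bits)
        print(f"{bits:>2}-bit weights: {size:.0f} GB")
    # 16-bit weights: 112 GB
    #  8-bit weights: 56 GB
    #  4-bit weights: 28 GB
```

Under these assumptions, moving from 16-bit to 4-bit weights cuts the weight footprint by a factor of four.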

python src/main_pretrain.py --base_config src/config/train_server/base_180m.yaml --variant_config src/config/train_server/mixtral/base.yaml --deepspeed src/config/deepspeed/deepspeed_config_mixtral_8x7b.json

This code supports 44 downstream datasets, including but not limited to COCO, FLICKR30K, OK-VQA, TextVQA, VizWiz, VQAV2, Hatefulmemes, Vatex, TGIF, MSVD, and MSRVTT.

See Evaluation.md
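For readers new to few-shot evaluation of interleaved models, the sketch below shows how a few-shot VQA prompt is commonly assembled: each support example contributes an image placeholder, a question, and its answer, and the query is left open for the model to complete. The `<image>` and `<|endofchunk|>` tokens follow the OpenFlamingo convention and are an assumption here; the prompt template actually used by this code base is described in Evaluation.md and may differ.

```python
def build_few_shot_prompt(
    support_examples,
    query_question,
    image_token="<image>",
    end_token="<|endofchunk|>",
):
    """Build an interleaved few-shot prompt for visual question answering.

    support_examples is a list of (question, answer) pairs, each assumed to be
    paired with one image that is fed to the vision encoder separately.
    """
    parts = []
    for question, answer in support_examples:
        parts.append(f"{image_token}Question: {question} Answer: {answer}{end_token}")
    # The query has no answer; the model is expected to generate it.
    parts.append(f"{image_token}Question: {query_question} Answer:")
    return "".join(parts)


if __name__ == "__main__":
    shots = [("What color is the bus?", "red"), ("How many dogs are there?", "two")]
    print(build_few_shot_prompt(shots, "What is the man holding?"))
```

The number of image placeholders equals the number of shots plus one, which is how the images are matched to positions in the text.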

See TUNING.md

If you find our work helpful, please consider citing the following paper:

@article{wang2024cosmo,

title={COSMO: Contrastive Streamlined Multimodal Model with Interleaved Pre-Training},

author={Wang, Alex Jinpeng and Li, Linjie and Lin, Kevin Qinghong and Wang, Jianfeng and Lin, Kevin and Yang, Zhengyuan and Wang, Lijuan and Shou, Mike Zheng},

journal={arXiv preprint arXiv:2401.00849},

year={2024}

}

Email: awinyimgprocess at gmail dot com

Our work is mainly based on the following projects:

MMC4, Open-flamingo, Open-CLIP, Huggingface Transformers, and WebDataset.