LoRA (Low-Rank Adaptation of LLMs) is one of the most popular methods for efficient fine-tuning of large language models without training all of their parameters. One of its most recent modifications, LoRA-FA, is a memory-efficient extension of the method that reduces memory cost by a factor of about 1.4. However, both LoRA and LoRA-FA initialize matrix A randomly and matrix B with zeros. In this project we compare the approach from the LoRA-FA paper with our extension of the method.
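As a point of reference, the default initialization described above can be sketched as follows. This is a minimal NumPy illustration rather than the project's code: the function names, the scale of the random entries in A, and the `scale` factor are assumptions. In LoRA-FA the matrix A stays frozen after initialization and only B is trained; because B starts at zero, the adapted layer initially reproduces the pretrained one.

```python
import numpy as np


def lora_fa_init(d_out, d_in, rank, seed=0):
    """Default LoRA-style init: random A, zero B (names and scale are illustrative)."""
    rng = np.random.default_rng(seed)
    # A projects the input down to `rank` dimensions; in LoRA-FA it is never updated.
    A = rng.standard_normal((rank, d_in)) / np.sqrt(rank)
    # B projects back up; it starts at zero and is the only trained factor in LoRA-FA.
    B = np.zeros((d_out, rank))
    return B, A


def adapted_forward(x, W, B, A, scale=1.0):
    """Output of a linear layer with frozen weight W plus the low-rank update B @ A."""
    return x @ W.T + scale * (x @ A.T) @ B.T


W = np.ones((4, 6))            # stand-in for a pretrained weight matrix
x = np.ones((3, 6))            # batch of 3 inputs
B, A = lora_fa_init(d_out=4, d_in=6, rank=2)
y = adapted_forward(x, W, B, A)
```

With `B` equal to zero, `y` is identical to `x @ W.T`, so fine-tuning starts exactly from the pretrained model.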

Our contribution is to test whether loss and metrics converge more efficiently when training starts from better initial low-rank weights obtained via low-rank approximation of initial

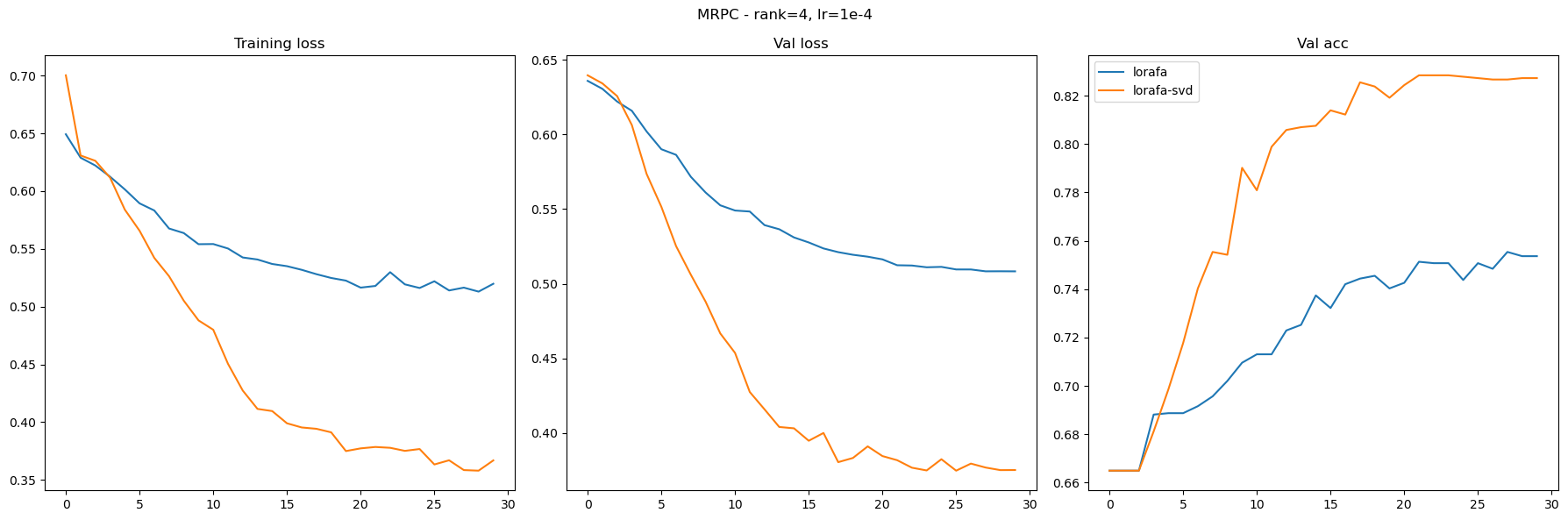

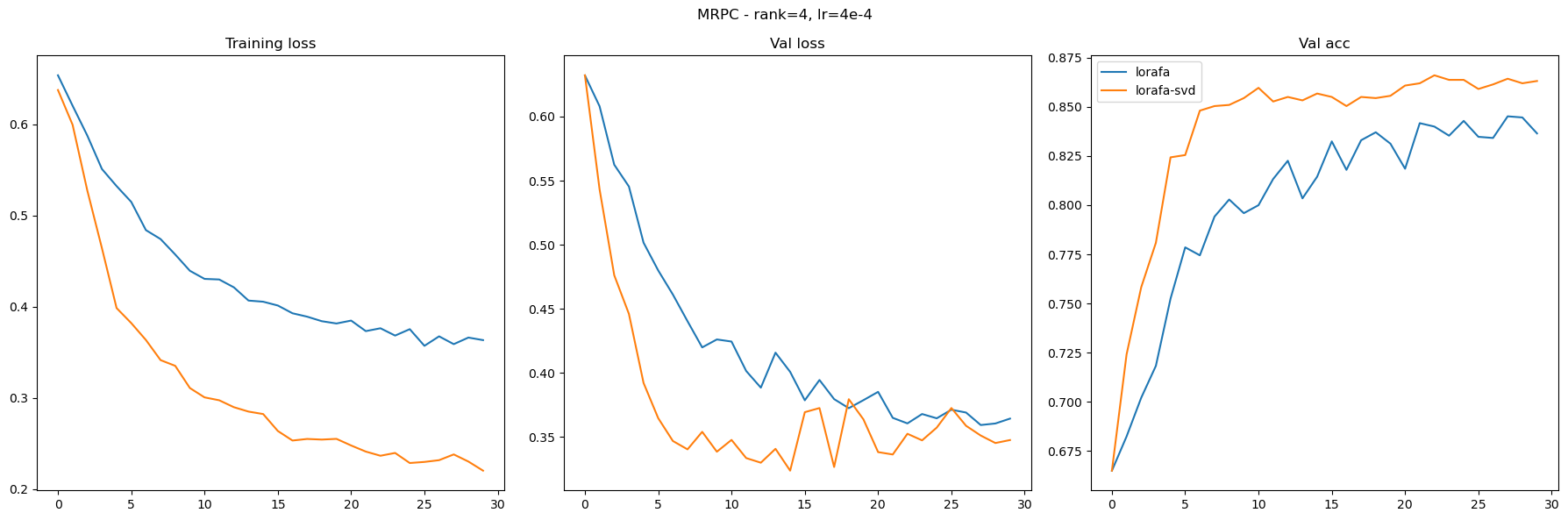

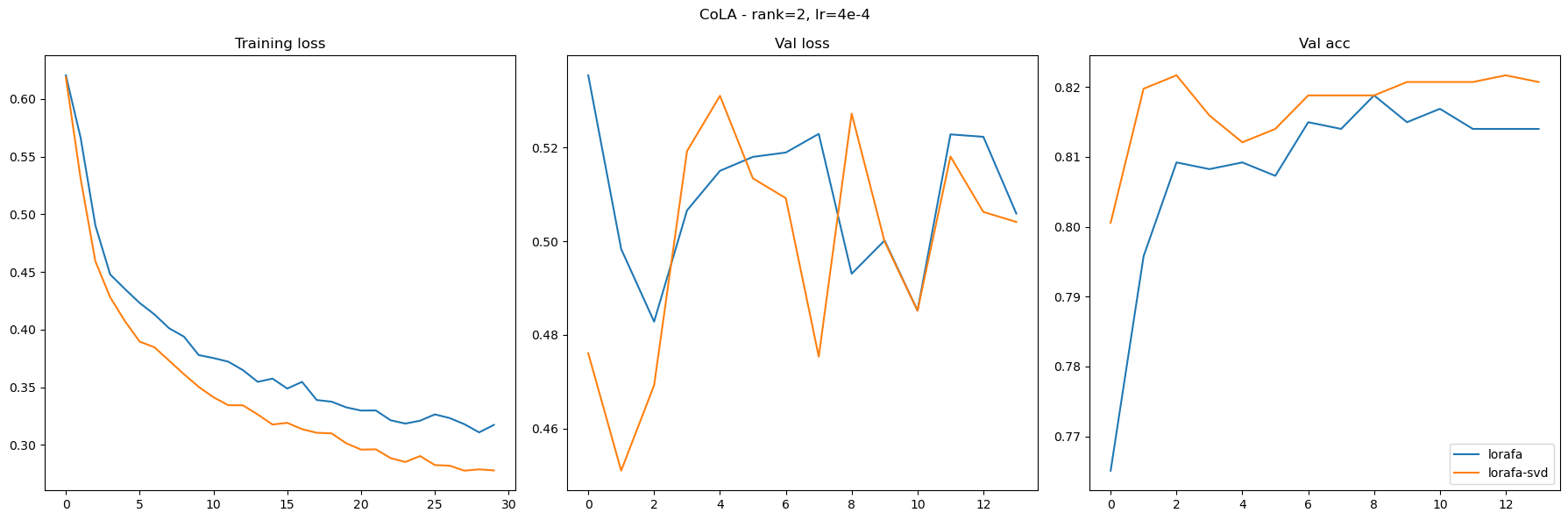

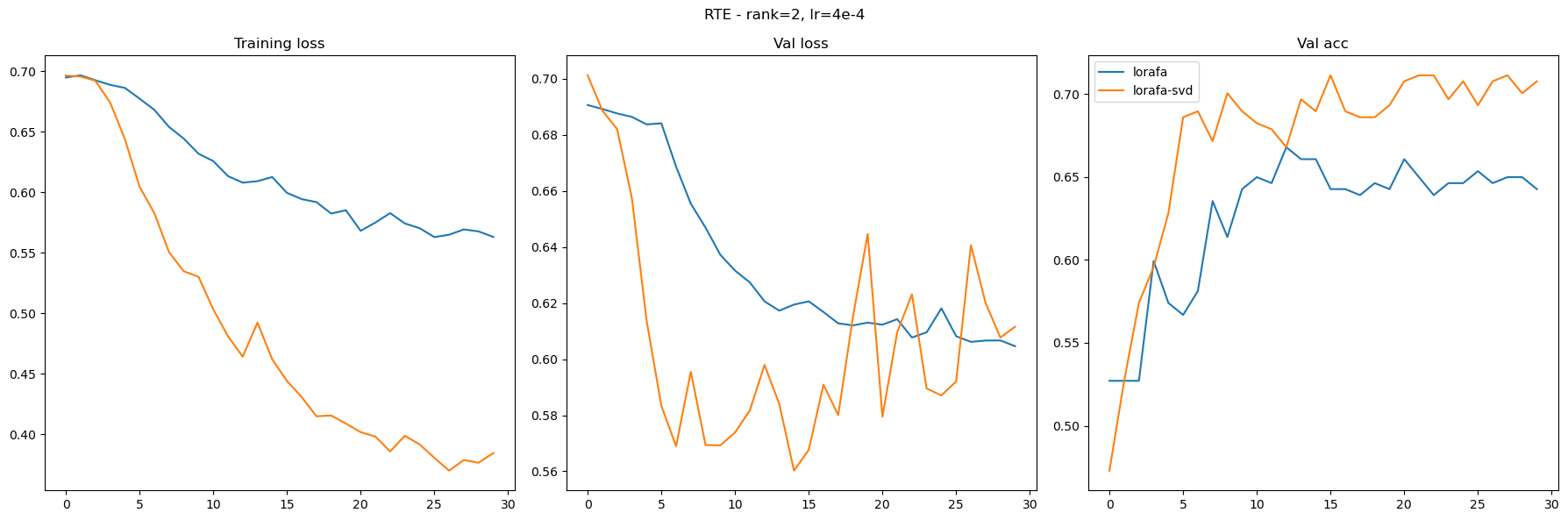

We experiment with the RoBERTa-base model (about 125M parameters) on several datasets from GLUE (MRPC, RTE, CoLA) and compare the dynamics of metrics and losses for standard LoRA-FA against our SVD-initialized variant. We also experiment with additional regularization (see the corresponding notebook).
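One way the SVD-based initialization could look is sketched below in NumPy. It assumes the matrix being approximated is a pretrained weight matrix `W`, splits the singular values evenly between the two factors, and keeps the residual `W - B @ A` so that the layer output is unchanged at the start of training. All three choices, and the name `svd_lowrank_init`, are assumptions made for illustration; the actual implementation in this repository may differ.

```python
import numpy as np


def svd_lowrank_init(W, rank):
    """Return (B, A) such that B @ A is the rank-`rank` truncated SVD of W."""
    U, S, Vt = np.linalg.svd(W, full_matrices=False)
    sqrt_S = np.sqrt(S[:rank])
    # Split each singular value evenly between the two factors (an assumption).
    B = U[:, :rank] * sqrt_S            # shape (d_out, rank)
    A = sqrt_S[:, None] * Vt[:rank]     # shape (rank, d_in)
    return B, A


rng = np.random.default_rng(0)
W = rng.standard_normal((64, 32))       # stand-in for a pretrained weight matrix
B, A = svd_lowrank_init(W, rank=8)

# Keeping the residual frozen means residual + B @ A equals W at step 0.
residual = W - B @ A
```

The truncated SVD is the best rank-r approximation of `W` in the Frobenius norm, which is why it is a natural candidate for a "better" starting point than a random A and a zero B.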

- files named `*_experiment.ipynb` contain the main experiments, which write logs with loss and accuracy history to the `\logs` folder
- `\lora` contains a module based on the one implemented in minLoRA and modified for the project's needs
- other files are either utilities or functionality tests

"CoLA":'https://dl.fbaipublicfiles.com/glue/data/CoLA.zip',

"SST":'https://dl.fbaipublicfiles.com/glue/data/SST-2.zip',

"QQP":'https://dl.fbaipublicfiles.com/glue/data/QQP-clean.zip',

"STS":'https://dl.fbaipublicfiles.com/glue/data/STS-B.zip',

"MNLI":'https://dl.fbaipublicfiles.com/glue/data/MNLI.zip',

"QNLI":'https://dl.fbaipublicfiles.com/glue/data/QNLIv2.zip',

"RTE":'https://dl.fbaipublicfiles.com/glue/data/RTE.zip',

"WNLI":'https://dl.fbaipublicfiles.com/glue/data/WNLI.zip',

"diagnostic":'https://dl.fbaipublicfiles.com/glue/data/AX.tsv'

MRPC_TRAIN = 'https://dl.fbaipublicfiles.com/senteval/senteval_data/msr_paraphrase_train.txt'

MRPC_TEST = 'https://dl.fbaipublicfiles.com/senteval/senteval_data/msr_paraphrase_test.txt'
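The URLs above can be fetched with the standard library alone. The helper below is a minimal sketch, not the download logic used by the notebooks: the name `fetch_glue_file` and the default `glue_data` directory are hypothetical. Zip archives are unpacked in place, and any other file (such as `AX.tsv` or the MRPC text files) is saved as-is.

```python
import io
import urllib.request
import zipfile
from pathlib import Path


def fetch_glue_file(url, data_dir="glue_data"):
    """Download `url` into `data_dir`; unpack it if it is a zip archive."""
    out_dir = Path(data_dir)
    out_dir.mkdir(parents=True, exist_ok=True)
    with urllib.request.urlopen(url) as response:
        payload = response.read()
    if url.endswith(".zip"):
        # Extract the archive directly from memory into the data directory.
        with zipfile.ZipFile(io.BytesIO(payload)) as archive:
            archive.extractall(out_dir)
        return out_dir
    # Plain files keep the name they have in the URL.
    target = out_dir / url.rsplit("/", 1)[-1]
    target.write_bytes(payload)
    return target
```

For example, `fetch_glue_file('https://dl.fbaipublicfiles.com/glue/data/RTE.zip')` would download and unpack the RTE archive into `glue_data`.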

To run the notebooks, you need recent versions of the pytorch, transformers and datasets libraries, as well as other standard dependencies such as numpy, matplotlib and jupyter notebook (plus any other imports used in the notebooks that didn't end up being mentioned here :)).
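A quick way to check whether the libraries listed above are importable is sketched below. The `REQUIRED` list and the `missing_packages` helper are illustrative assumptions, not part of the repository; note that pytorch is imported under the name `torch`, and Jupyter is left out of the list because it is only needed to open the notebooks.

```python
from importlib.util import find_spec

# Import names of the libraries mentioned above (pytorch is imported as `torch`).
REQUIRED = ["torch", "transformers", "datasets", "numpy", "matplotlib"]


def missing_packages(names=REQUIRED):
    """Return the subset of `names` that cannot be found in this environment."""
    return [name for name in names if find_spec(name) is None]


missing = missing_packages()
if missing:
    print("Missing packages:", ", ".join(missing))
else:
    print("All listed dependencies are importable.")
```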

With all dependencies installed, you can run the experiment notebooks (or the demo ones) and follow the instructions described there.

Across many experiments, we observe a clear advantage for low-rank SVD initialization of the matrices A and B: in training regimes with smaller learning rates, it always converges faster and to better quality, while with a larger learning rate (~0.005) quality and speed become comparable (but still with a noticeable gap).

Some plots with our results (you can visit plots_for_preso.ipynb to see more of them):

- Sergey Karpukhin, @hr3nk

- Yulia Sergeeva, @SergeevaJ

- Pavel Bartenev, @PavelBartenev

- Pavel Tikhomirov, @ocenandor

- Maksim Komiakov, @kommaks

Copyright 2023 Karpukhin Sergey

Permission is hereby granted, free of charge, to any person obtaining a copy of this software and associated documentation files (the “Software”), to deal in the Software without restriction, including without limitation the rights to use, copy, modify, merge, publish, distribute, sublicense, and/or sell copies of the Software, and to permit persons to whom the Software is furnished to do so, subject to the following conditions:

The above copyright notice and this permission notice shall be included in all copies or substantial portions of the Software.

THE SOFTWARE IS PROVIDED “AS IS”, WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE.