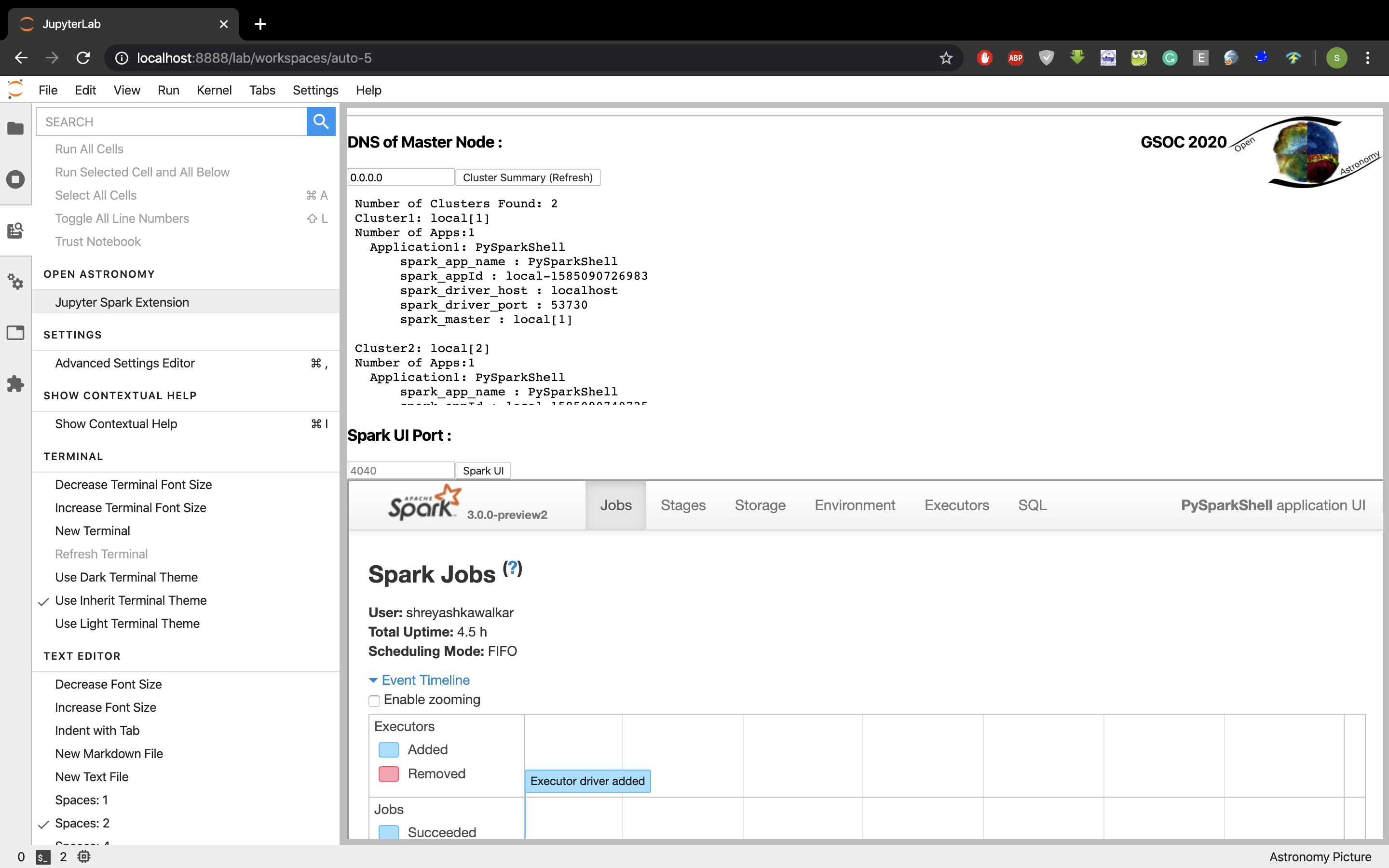

1. Automatic discovery of active clusters on the local system:

By default, on activation the JupyterLab widget server extension searches localhost for active Spark clusters and their applications on ports 4040 to 4049. A specific port can also be provided manually.

2. Automatic discovery of active clusters on a remote system:

The JupyterLab widget server extension provides a field for the master node DNS name. Once it is filled in, the extension searches the remote system for active Spark clusters and their applications on ports 4040 to 4049. A specific port can also be provided manually.

3. Summarising and presenting the context of Spark applications

The extension summarises the metadata of the discovered Spark clusters, which the user can copy directly to connect to the Spark application of their choice.

4. Rendering the Spark UI of a chosen application

Another main feature is monitoring the Spark UI of any application from within the extension, on a local or even a remote system. The extension web-scrapes the Spark UI and forward-proxies all inherited links to the extension's base URI so that the page can be embedded as an IFrame in the extension itself.
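As a rough illustration of the link forwarding described above, the sketch below prefixes root-relative `href` and `src` attributes in a scraped page with a proxy base path. It is not the extension's actual code: the function name `rewrite_links` and the prefix `/sparkui/4040` are hypothetical, and a regular expression is used here only to keep the example short.

```python
import re

# Matches href="/..." or src="/..." (root-relative only; "//host/..." is skipped).
_ROOT_RELATIVE = re.compile(r'''(?P<attr>\b(?:href|src))=(?P<quote>["'])/(?!/)''')


def rewrite_links(html: str, proxy_base: str) -> str:
    """Prefix root-relative href/src attributes with proxy_base.

    proxy_base is a hypothetical path under which the extension would
    serve the proxied Spark UI, for example "/sparkui/4040".
    """
    base = proxy_base.rstrip("/")
    return _ROOT_RELATIVE.sub(
        lambda m: f"{m.group('attr')}={m.group('quote')}{base}/", html
    )


page = '<a href="/jobs/">Jobs</a><img src="/static/logo.png">'
print(rewrite_links(page, "/sparkui/4040"))
```

Absolute URLs such as `https://spark.apache.org` are left untouched, so only links that would otherwise resolve against the JupyterLab host are redirected through the proxy.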

- Dynamic Interactive UI:

Pardon me for the present UI =D. The UI dynamically adjusts the IFrame window resolution based on user access.

- Server Extension for programmatic APIs:

This lets us ship a Python package that holds all the programmed scripts and scrapes the web using request handlers such as Tornado, which reduces complexity on the JS side.

- IFrames with Proxy Server by Web Scraping

To render a web app directly in the widget and keep its embedded links working, web scraping lets developers forward those links through a proxy and customise the web app.

- PySpark for programmatic integration of Python with a Spark cluster

- Spark Cluster deployment

Please Click here for the illustrative video

Timestamps:

00:00 Installing PySpark into the Python environment for the programmatic Python Spark APIs

00:16 Setting up the Spark cluster

00:34 Setting up the Spark environment in .bash_profile

00:56 Deploying multiple Spark clusters and Spark applications

02:25 Launching the JupyterLab widget extension: JupyterSparkExt

04:40 Showing the same in the JupyterLab extension

05:12 Rendering the Spark UI for the port of choice

06:05 Connecting to the PySpark context with the help of the summary

Python Spark library

    pip install pyspark

Spark Cluster

    wget https://downloads.apache.org/spark/spark-3.0.0-preview2/
    tar xfvz spark-3.0.0-preview2-bin-hadoop3.2
    cd spark-3.0.0-preview2-bin-hadoop3.2

Open a terminal, or go to the system environment settings, and add:

    vi .bash_profile
    export SPARK_HOME=~/spark-3.0.0-preview2-bin-hadoop3.2
    export PATH=$SPARK_HOME/bin:$PATH
    export PYSPARK_PYTHON=python3
    #export PYSPARK_DRIVER_PYTHON="jupyter"
    #export SPARK_LOCAL_IP="0.0.0.0"
    #export PYSPARK_DRIVER_PYTHON_OPTS="notebook --no-browser --port=8888"
    source .bash_profile

Test:

    echo $SPARK_HOME
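The same check can be done from Python, which is handy when the variables need to be visible to the Jupyter process rather than only to the shell. The sketch below is an illustration only; the helper name `check_spark_env` is hypothetical and it inspects only the three variables exported above.

```python
import os
from pathlib import Path


def check_spark_env(env=None):
    """Return a list of problems found in the Spark-related environment variables."""
    env = os.environ if env is None else env
    problems = []

    spark_home = env.get("SPARK_HOME")
    if not spark_home:
        # Without SPARK_HOME the remaining checks cannot be made.
        return ["SPARK_HOME is not set"]

    # $SPARK_HOME/bin should be on PATH so that `pyspark` can be found.
    bin_dir = str(Path(spark_home).expanduser() / "bin")
    path_entries = [
        str(Path(p).expanduser())
        for p in env.get("PATH", "").split(os.pathsep)
        if p
    ]
    if bin_dir not in path_entries:
        problems.append(f"{bin_dir} is not on PATH")

    if not env.get("PYSPARK_PYTHON"):
        problems.append("PYSPARK_PYTHON is not set")
    return problems


if __name__ == "__main__":
    issues = check_spark_env()
    print("Spark environment looks fine" if not issues else "\n".join(issues))
```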

Configure router for traffic on port 80

    sudo apt-get install openssh-server openssh-client
    ssh-keygen -t rsa -P ""

Open .ssh/id_rsa.pub (on the master) and copy its content into .ssh/authorized_keys on all the workers as well as on the master.

Follow the above-mentioned steps on all systems.

On the master node only:

    sudo vi /etc/hosts
    <MASTER-IP> master
    <SLAVE01-IP> worker01
    <SLAVE02-IP> worker02

    ssh worker01
    ssh worker02

    vi $SPARK_HOME/conf/spark-env.sh
    export SPARK_MASTER_HOST="<MASTER-IP>"

    sudo vi $SPARK_HOME/conf/slaves
    master
    worker01
    worker02

    sudo apt-get install awscli
    aws configure set aws_access_key_id <aws_access_key_id>
    aws configure set aws_secret_access_key <aws_secret_access_key>
    aws configure set region <region>

[RUN] SPARK_AWS_EMR.ipynb

Open Terminal

Deploying cluster 1 spark application 1

    pyspark --master local[0] --name c1_app1

Deploying cluster 1 spark application 2

    pyspark --master local[0] --name c1_app2

Deploying cluster 2 spark application 1

    pyspark --master local[1] --name c2_app1

Deploying cluster 2 spark application 2

    pyspark --master local[1] --name c2_app2
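Once applications like the ones above are running, each serves its Spark UI on a port in the 4040 to 4049 range. The sketch below shows one way the discovery step could be done, by asking Spark's monitoring REST endpoint `/api/v1/applications` on every port in that range. It is an assumption about the approach, not the extension's actual code; the function names and the injectable `fetch` parameter are hypothetical.

```python
import json
from http.client import HTTPException
from urllib.error import URLError
from urllib.request import urlopen


def fetch_applications(host, port, timeout=1.0):
    """Return the application list reported on host:port, or None if unreachable."""
    url = f"http://{host}:{port}/api/v1/applications"
    try:
        with urlopen(url, timeout=timeout) as response:
            return json.load(response)
    except (URLError, OSError, HTTPException, ValueError):
        # Nothing listening, not a Spark UI, or the reply was not valid JSON.
        return None


def discover(host="localhost", ports=range(4040, 4050), fetch=fetch_applications):
    """Map each responding port to the names of the applications found there."""
    found = {}
    for port in ports:
        apps = fetch(host, port)
        if isinstance(apps, list) and apps:
            found[port] = [a.get("name") for a in apps if isinstance(a, dict)]
    return found


if __name__ == "__main__":
    for port, names in discover().items():
        print(port, names)
```

Passing a master node DNS name as `host` covers the remote case, and passing a single-element list as `ports` corresponds to choosing a port manually.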

Application Server Extension:

    git clone https://github.com/astronomy-commons/jupyter-spark.git
    cd jupyter-spark
    pip install ~/jupyter-spark --pre
    jupyter serverextension enable --py spark_ui_tab --sys-prefix

Rendering Widget:

    jupyter labextension install . --no-build
    jupyter labextension link .
    jupyter lab  # or jupyter lab --watch