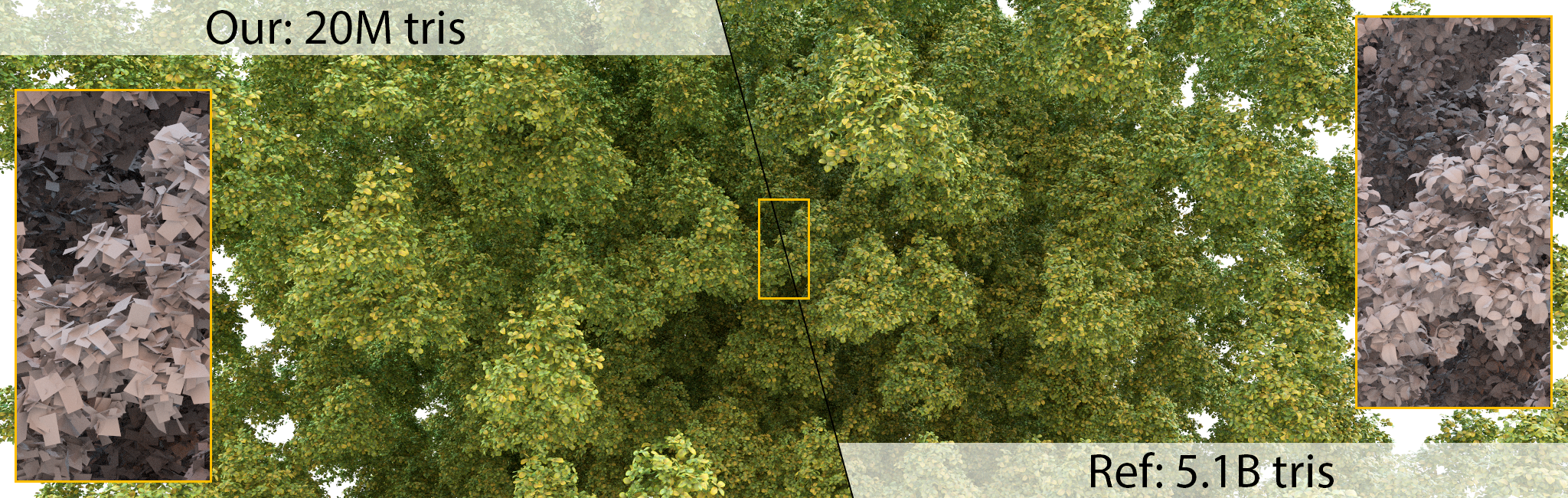

Differentiable rasterization applied to 3D model simplification tasks, as described in the paper:

Appearance-Driven Automatic 3D Model Simplification

Jon Hasselgren, Jacob Munkberg, Jaakko Lehtinen, Miika Aittala and Samuli Laine

https://research.nvidia.com/publication/2021-04_Appearance-Driven-Automatic-3D

https://arxiv.org/abs/2104.03989

Copyright © 2021, NVIDIA Corporation. All rights reserved.

This work is made available under the Nvidia Source Code License.

For business inquiries, please contact researchinquiries@nvidia.com

For press and other inquiries, please contact Hector Marinez at hmarinez@nvidia.com

Requires VS2019+, Cuda 10.2+ and PyTorch 1.6+

Tested in Anaconda3 with Python 3.6 and PyTorch 1.8

Install the Cuda toolkit (required to build the PyTorch extensions). We support Cuda 10.2 and above. Pick the appropriate version of PyTorch compatible with the installed Cuda toolkit. Below is an example with Cuda 11.1

conda create -n dmodel python=3.6

activate dmodel

conda install pytorch torchvision torchaudio cudatoolkit=11.1 -c pytorch -c conda-forge

conda install imageio

pip install PyOpenGL glfw

Install nvdiffrast: https://github.com/NVlabs/nvdiffrast in the dmodel conda env.

activate dmodel

Sphere to cow example:

python train.py --config configs/spot.json

The results will be stored in the out folder.

The Spot model was

created and released into the public domain by Keenan Crane.

Additional assets can be downloaded here [205MB].

Unzip and place the subfolders in the project data folder, e.g., data\skull.

All assets are copyright of their respective authors, see included license files for further details.

Included examples

skull.json- Joint normal map and shape optimization on a skullewer.json- Ewer model from a reduced mesh as initial guessgardenina.json- Aggregate geometry examplehibiscus.json- Aggregate geometry examplefigure_brushed_gold_64.json- LOD example, trained against a supersampled referencefigure_displacement.json- Joint shape, normal map, and displacement map example

The json files that end in _paper.json are configs with the settings used

for the results in the paper. They take longer and require a GPU with sufficient memory.

-

Build docker image (run the command from the code root folder).

docker build -f docker/Dockerfile -t diffmod:v1 .Requires a driver that supports Cuda 10.1 or newer. -

Start an interactive docker container:

docker run --gpus device=0 -it --rm -v /raid:/raid -it diffmod:v1 bash -

Detached docker:

docker run --gpus device=1 -d -v /raid:/raid -w=[path to the code] diffmod:v1 python train.py --config configs/spot.json