This project implements object detection with the YOLO ("You Only Look Once") model. Many of the ideas in this project are drawn from the two YOLO papers: Redmon et al., 2016 and Redmon and Farhadi, 2016.

Project objectives:

- Detect objects in a car detection dataset

- Implement non-max suppression to discard duplicate, overlapping detections of the same object

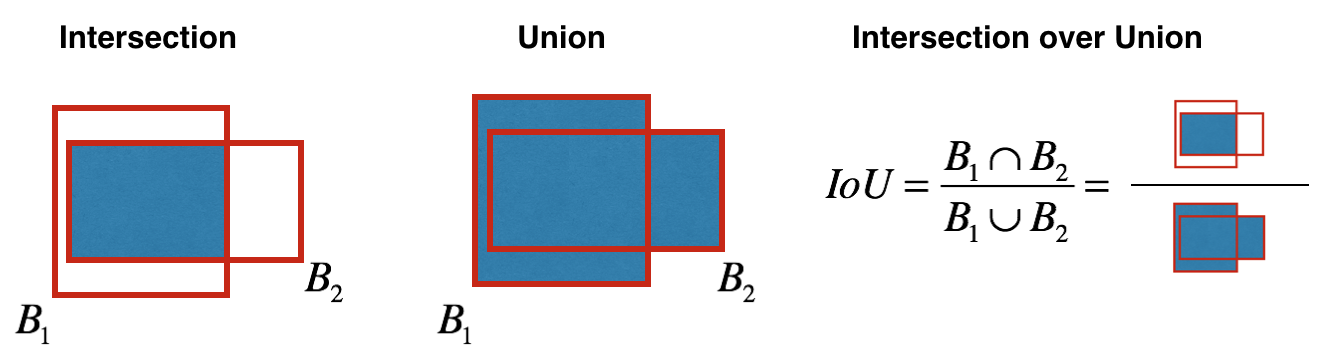

- Implement intersection over union

- Handle bounding boxes, a type of image annotation popular in deep learning

The data was collected from a camera mounted on a car, which takes pictures of the road ahead every few seconds.

road_video_compressed2.mp4

All these images were gathered into a folder and labelled by drawing bounding boxes around every car found. Here's an example of what bounding boxes look like:

"You Only Look Once" (YOLO) is a popular algorithm because it achieves high accuracy while also being able to run in real time. This algorithm "only looks once" at the image in the sense that it requires only one forward propagation pass through the network to make predictions. After non-max suppression, it then outputs recognized objects together with the bounding boxes.

- The input is a batch of images, and each image has the shape (608, 608, 3)

- The output is a list of bounding boxes along with the recognized classes.
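To make "bounding box" concrete: a common YOLO convention describes each box with six values, $p_c$ (probability that an object is present), $b_x, b_y$ (box center), $b_h, b_w$ (box height and width) and the class $c$. The sketch below assumes that convention and a 0-based class index; the `Box` class and the class index used for the car are hypothetical. Expanding $c$ into an 80-dimensional vector gives 5 + 80 = 85 numbers per box, which matches the 85 in this project's encoding shape.

```python
from dataclasses import dataclass
from typing import List

NUM_CLASSES = 80  # number of classes in this project


@dataclass
class Box:
    """One bounding box in the (p_c, b_x, b_y, b_h, b_w, c) convention (assumed)."""
    p_c: float  # probability that the box contains an object
    b_x: float  # box center, x coordinate
    b_y: float  # box center, y coordinate
    b_h: float  # box height
    b_w: float  # box width
    c: int      # class index in [0, NUM_CLASSES), 0-based by assumption

    def class_one_hot(self) -> List[float]:
        """Expand the integer class c into an 80-dimensional one-hot vector."""
        vec = [0.0] * NUM_CLASSES
        vec[self.c] = 1.0
        return vec

    def to_vector(self) -> List[float]:
        """5 box values followed by the one-hot class vector: 5 + 80 = 85 numbers."""
        return [self.p_c, self.b_x, self.b_y, self.b_h, self.b_w] + self.class_one_hot()


# Hypothetical label for one car; the class index 2 is illustrative only.
car = Box(p_c=1.0, b_x=0.5, b_y=0.4, b_h=0.2, b_w=0.3, c=2)
print(len(car.to_vector()))  # 85
```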

Anchor boxes are chosen by exploring the training data to find reasonable height/width ratios that represent the different classes. For this project, 5 anchor boxes were chosen (to cover the 80 classes) and stored in the file './model_data/yolo_anchors.txt'.
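A minimal sketch of loading the anchors, assuming the file holds a single line of comma-separated floats ordered as width, height, width, height, and so on. The helper names `parse_anchors` and `read_anchors` are hypothetical, and the numbers below are placeholders rather than this project's actual anchors.

```python
import numpy as np


def parse_anchors(text):
    """Parse 'w1, h1, w2, h2, ...' into an (n_anchors, 2) array of (width, height)."""
    values = [float(v) for v in text.strip().split(",")]
    return np.array(values).reshape(-1, 2)


def read_anchors(path):
    """Read the first line of an anchors file (format assumed: one comma-separated line)."""
    with open(path) as f:
        return parse_anchors(f.readline())


# Placeholder values for illustration only; they are not this project's anchors.
anchors = parse_anchors("1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0, 9.0, 10.0")
print(anchors.shape)  # (5, 2)
```

With a file in that format, `read_anchors('./model_data/yolo_anchors.txt')` would return one (width, height) row per anchor box.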

- Based on the anchor boxes, the encoding tensor has shape $(m, n_H, n_W, n_{anchors}, 5 + n_{classes})$, where the last dimension holds the 5 box values ($p_c, b_x, b_y, b_h, b_w$) plus one probability per class.

- The YOLO architecture is: IMAGE (m, 608, 608, 3) -> DEEP CNN -> ENCODING (m, 19, 19, 5, 85).
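The shape arithmetic can be checked with a placeholder tensor. This sketch assumes the deep CNN downsamples by a factor of 32 (608 / 32 = 19) and that the last axis is ordered as $p_c$, then the 4 box values, then the 80 class probabilities; the actual ordering depends on the model implementation. The zero-filled tensor stands in for real network output.

```python
import numpy as np

IMAGE_SIZE = 608
STRIDE = 32       # assumption: total downsampling factor of the deep CNN
NUM_ANCHORS = 5
NUM_CLASSES = 80

grid = IMAGE_SIZE // STRIDE             # 608 / 32 = 19 cells per side
depth = 5 + NUM_CLASSES                 # p_c, b_x, b_y, b_h, b_w + 80 classes = 85
num_boxes = grid * grid * NUM_ANCHORS   # 19 * 19 * 5 = 1805 boxes per image

m = 2  # hypothetical batch size
encoding = np.zeros((m, grid, grid, NUM_ANCHORS, depth), dtype=np.float32)
print(encoding.shape)  # (2, 19, 19, 5, 85)

# Split the last axis into its parts (ordering assumed, see above).
box_confidence = encoding[..., 0:1]  # (m, 19, 19, 5, 1): p_c
box_params = encoding[..., 1:5]      # (m, 19, 19, 5, 4): b_x, b_y, b_h, b_w
class_probs = encoding[..., 5:]      # (m, 19, 19, 5, 80): c_1 ... c_80
```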

Here is what this encoding represents in more detail.

Figure 2: Encoding architecture for YOLO

Since you're using 5 anchor boxes, each of the 19x19 cells encodes information about 5 boxes. Anchor boxes are defined only by their width and height.

For simplicity, you'll flatten the last two dimensions of the (19, 19, 5, 85) encoding, so the output of the deep CNN is (19, 19, 425), since 5 x 85 = 425.
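In NumPy the flattening is a plain `reshape`, and it is lossless: reshaping back recovers the original tensor. With the default row-major order, anchor `a` occupies channels `a*85` through `a*85 + 84` of the flattened tensor. Random values stand in for real model output here.

```python
import numpy as np

rng = np.random.default_rng(0)
encoding = rng.random((19, 19, 5, 85))   # placeholder values, not real model output

flat = encoding.reshape(19, 19, 5 * 85)  # (19, 19, 425)
restored = flat.reshape(19, 19, 5, 85)   # undo the flattening

# With row-major order, anchor a occupies channels a*85 ... a*85 + 84.
a = 3
assert np.array_equal(flat[..., a * 85:(a + 1) * 85], encoding[:, :, a, :])
assert np.array_equal(restored, encoding)
print(flat.shape)  # (19, 19, 425)
```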

Figure 3: Flattening the last two dimensions

Now, for each box (of each cell), you'll compute the following element-wise product and extract a probability that the box contains a certain class.

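The class score is $\text{score}_{c,i} = p_c \times c_i$: the probability $p_c$ that there is an object, times the probability $c_i$ that the object belongs to class $i$.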
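A small sketch of the score computation for one box: multiply the object probability $p_c$ by every class probability $c_i$ (NumPy broadcasting handles the per-class product), then keep the highest-scoring class. The function names are hypothetical, and only 3 classes are used to keep the numbers readable.

```python
import numpy as np


def class_scores(box_confidence, class_probs):
    """score[..., i] = p_c * c_i, broadcasting p_c over the class axis."""
    return box_confidence * class_probs


def best_class(scores):
    """For each box, return (index of the highest-scoring class, that score)."""
    return np.argmax(scores, axis=-1), np.max(scores, axis=-1)


# One hypothetical box: p_c = 0.6 and a 3-class distribution for brevity.
p_c = np.array([[0.6]])
c = np.array([[0.1, 0.7, 0.2]])
scores = class_scores(p_c, c)    # approximately [[0.06, 0.42, 0.12]]
idx, score = best_class(scores)  # idx = [1], score approximately [0.42]
```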

Here's one way to visualize what YOLO is predicting on an image:

- For each of the 19x19 grid cells, find the maximum of the probability scores (taking the max across both the 5 anchor boxes and the 80 classes).

- Color that grid cell according to what object that grid cell considers the most likely.
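The two visualization steps can be sketched as a single reduction: compute every score $p_c \times c_i$, merge the anchor and class axes, take the argmax per cell, and recover the class index with a modulo. The function name `cell_class_map` is hypothetical; the resulting (19, 19) map of class indices is what would then be colored.

```python
import numpy as np


def cell_class_map(box_confidence, class_probs):
    """Return a (19, 19) map of the most likely class per grid cell.

    box_confidence: (19, 19, 5, 1); class_probs: (19, 19, 5, 80).
    """
    scores = box_confidence * class_probs                        # (19, 19, 5, 80)
    flat = scores.reshape(scores.shape[0], scores.shape[1], -1)  # (19, 19, 400)
    best = np.argmax(flat, axis=-1)        # index = anchor * 80 + class
    return best % class_probs.shape[-1]    # keep only the class part


rng = np.random.default_rng(1)
class_map = cell_class_map(rng.random((19, 19, 5, 1)), rng.random((19, 19, 5, 80)))
print(class_map.shape)  # (19, 19)
```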

Doing this results in this picture:

Figure 5: Each one of the 19x19 grid cells is colored according to which class has the largest predicted probability in that cell.

Another way to visualize YOLO's output is to plot the bounding boxes that it outputs. Doing that results in a visualization like this:

Figure 6: Each cell gives you 5 boxes. In total, the model predicts 19x19x5 = 1805 boxes just by looking once at the image (one forward pass through the network)! Different colors denote different classes.

In the figure above, the only boxes plotted are ones for which the model had assigned a high probability, but this is still too many boxes. You'd like to reduce the algorithm's output to a much smaller number of detected objects.

To do so, you'll use non-max suppression. Specifically, you'll carry out these steps:

- Get rid of boxes with a low score, meaning the box is not very confident about detecting a class, either because of the low probability of any object or the low probability of this particular class.

- Select only one box when several boxes overlap with each other and detect the same object.
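The first step, score thresholding, can be sketched with a boolean mask: compute $p_c \times c_i$ for every box, keep each box's best class and score, and drop boxes whose best score falls below a threshold. The function name and the default threshold of 0.6 are assumptions for illustration; the shapes work equally for a (19, 19, 5, ...) tensor or a flat list of boxes.

```python
import numpy as np


def filter_boxes(box_confidence, boxes, class_probs, threshold=0.6):
    """Keep boxes whose best class score is at least `threshold`.

    box_confidence: (..., 1), boxes: (..., 4), class_probs: (..., n_classes).
    Returns flat arrays of kept scores, boxes and class indices.
    """
    scores = box_confidence * class_probs      # p_c * c_i for every class
    best_classes = np.argmax(scores, axis=-1)  # most likely class per box
    best_scores = np.max(scores, axis=-1)      # its score
    mask = best_scores >= threshold            # boolean mask over boxes
    return best_scores[mask], boxes[mask], best_classes[mask]


# Three hypothetical boxes with 2 classes each.
conf = np.array([[0.9], [0.5], [1.0]])
probs = np.array([[0.9, 0.1], [0.5, 0.5], [0.2, 0.8]])
boxes = np.arange(12, dtype=float).reshape(3, 4)
kept_scores, kept_boxes, kept_classes = filter_boxes(conf, boxes, probs)
# Best scores are about 0.81, 0.25 and 0.8, so the middle box is dropped.
```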

Even after filtering by thresholding over the class scores, you still end up with a lot of overlapping boxes. A second filter for selecting the right boxes is called non-maximum suppression (NMS).

Figure 7: In this example, the model has predicted 3 cars, but it's actually 3 predictions of the same car. Running non-max suppression (NMS) will select only the most accurate (highest probability) of the 3 boxes.

Non-max suppression uses a function called "Intersection over Union", or IoU.
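A minimal sketch of IoU and of greedy non-max suppression built on it. IoU is the area of the intersection of two boxes divided by the area of their union. Greedy NMS repeatedly keeps the highest-scoring remaining box and discards any other box whose IoU with it reaches a threshold. This assumes boxes given as (x1, y1, x2, y2) corners and a hypothetical IoU threshold of 0.5; a library routine such as TensorFlow's `tf.image.non_max_suppression` can replace the Python loop in practice.

```python
import numpy as np


def iou(box1, box2):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2) corners."""
    xi1, yi1 = max(box1[0], box2[0]), max(box1[1], box2[1])
    xi2, yi2 = min(box1[2], box2[2]), min(box1[3], box2[3])
    inter = max(0.0, xi2 - xi1) * max(0.0, yi2 - yi1)  # zero if boxes don't overlap
    area1 = (box1[2] - box1[0]) * (box1[3] - box1[1])
    area2 = (box2[2] - box2[0]) * (box2[3] - box2[1])
    union = area1 + area2 - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(boxes, scores, iou_threshold=0.5):
    """Greedy NMS: return indices of the boxes to keep, highest score first."""
    order = list(np.argsort(scores)[::-1])  # candidate indices, best first
    keep = []
    while order:
        best = order.pop(0)
        keep.append(int(best))
        # Drop remaining boxes that overlap the kept box too much.
        order = [i for i in order if iou(boxes[best], boxes[i]) < iou_threshold]
    return keep


# Three overlapping predictions of one car, plus one separate object.
boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (0, 1, 10, 11), (20, 20, 30, 30)]
scores = [0.9, 0.75, 0.6, 0.8]
print(non_max_suppression(boxes, scores))  # [0, 3]
```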