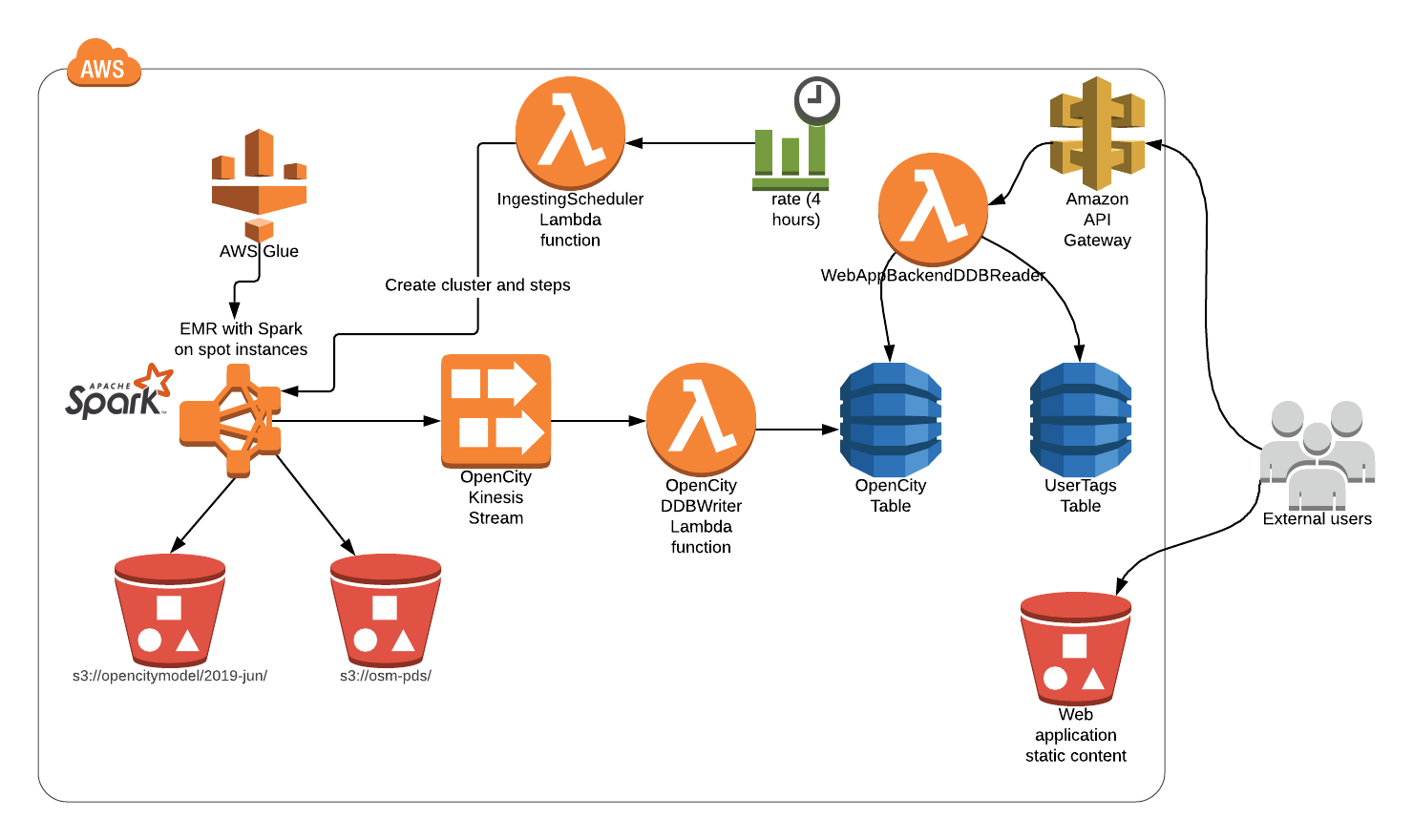

The project uploads the Open City Model database and OpenStreetMap data to DynamoDB using EMR, Kinesis and Lambda. It also provides a PoC web application for running geospatial queries, viewing buildings and adding tags to them.

How it works:

- An EMR cluster is launched every N hours. Its only step is the Spark job spark-kinesis-ingester.

- spark-kinesis-ingester reads data from the S3 data lake (table metadata comes from the Glue Data Catalog) and puts it into a Kinesis stream. Open City Model data is partitioned by US state, and each invocation processes the data of one randomly chosen state.

- The Lambda function OpenCityDDBWriter reads records from the Kinesis stream and writes them to a DynamoDB table.

- The web application for navigating OpenCity data is built with Lambda and API Gateway.
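The Kinesis-to-DynamoDB step can be sketched roughly as follows. This is not the actual OpenCityDDBWriter code: it assumes a Python runtime, JSON-encoded record payloads, and a hypothetical table name `opencity`, none of which are stated in this README. What is standard is the Lambda event shape, where each Kinesis record carries its payload base64-encoded under `Records[].kinesis.data`.

```python
import base64
import json
from decimal import Decimal
from typing import Any, Callable, Dict, List, Optional


def decode_kinesis_records(event: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Decode the base64 payload of every Kinesis record in a Lambda event."""
    items = []
    for record in event.get("Records", []):
        payload = base64.b64decode(record["kinesis"]["data"])
        # Assumption: payloads are JSON. Floats are parsed as Decimal because
        # the boto3 DynamoDB resource API rejects Python floats.
        items.append(json.loads(payload, parse_float=Decimal))
    return items


def handler(
    event: Dict[str, Any],
    context: Any,
    put_item: Optional[Callable[[Dict[str, Any]], Any]] = None,
) -> Dict[str, int]:
    """Lambda entry point: decode the records and pass each one to a writer."""
    if put_item is None:
        # Imported lazily so the decoding logic can be tested without AWS access.
        import boto3

        # Assumption: "opencity" is a hypothetical table name.
        table = boto3.resource("dynamodb").Table("opencity")

        def put_item(item: Dict[str, Any]) -> Any:
            return table.put_item(Item=item)

    items = decode_kinesis_records(event)
    for item in items:
        put_item(item)
    return {"written": len(items)}
```

Passing the writer as a parameter keeps the decoding logic testable locally; in Lambda the default path writes through boto3.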

Overall architecture:

- Create the Glue table as described here.

- Build the spark-kinesis-ingester module:

mvn clean install

- Put the jar /target/spark-kinesis-ingester-1.0-SNAPSHOT.jar into your S3 bucket.

- Go to the deploy folder and prepare the terraform config file config.tfvars:

region="us-east-1"

jar_path="s3://your_bucket/jars/spark-kinesis-ingester-1.0-SNAPSHOT.jar"

s3_static_bucket_name="static-content-bucket"

- Go to the webapp folder and build the frontend:

npm install

gulp

- Apply the terraform configuration:

terraform init

terraform plan -var-file=config.tfvars

terraform apply -var-file=config.tfvars

Terraform prints the API Gateway endpoint in its outputs:

Outputs:

backend_api_url = https://???????.execute-api.eu-west-1.amazonaws.com/opencity

- Go to the webapp folder and rebuild the frontend with the API URL from the previous step:

gulp --api_endpoint https://???????.execute-api.eu-west-1.amazonaws.com/opencity

- Apply terraform one more time:

terraform apply -var-file=config.tfvars

To init OSM data:

- Run an EMR cluster.

- Create the planet table as described here.

- Run the job with:

      spark-submit \
        --deploy-mode cluster \
        --conf spark.sql.catalogImplementation=hive \
        --conf spark.yarn.maxAppAttempts=1 \
        --class io.shuvalov.spark.kinesis.ingester.IngesterJob \
        %jar_path% \
        "SELECT concat('', id) as hash, type, to_json(tags) as tags, lat, lon, to_json(nds) as nds, to_json(members) as members,
        unix_timestamp(timestamp) as timestamp, uid, user, version FROM opencitymodel.planet limit 10"
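When the job is launched from a script rather than typed by hand, building the argument list programmatically avoids quoting problems with the multi-line SQL. The helper below is a hypothetical sketch, not part of the project: the function name `build_spark_submit` is an illustration, and the jar path in the usage section is the example path from config.tfvars. The flags and the class name are copied from the command above.

```python
import shlex
from typing import List

INGESTER_CLASS = "io.shuvalov.spark.kinesis.ingester.IngesterJob"


def build_spark_submit(jar_path: str, query: str) -> List[str]:
    """Return the spark-submit argument list for the ingester job."""
    # Collapse newlines and repeated spaces so the SQL travels as a single
    # one-line argument. Caveat: this would also alter whitespace inside SQL
    # string literals; the query used here has none.
    one_line_query = " ".join(query.split())
    return [
        "spark-submit",
        "--deploy-mode", "cluster",
        "--conf", "spark.sql.catalogImplementation=hive",
        "--conf", "spark.yarn.maxAppAttempts=1",
        "--class", INGESTER_CLASS,
        jar_path,
        one_line_query,
    ]


if __name__ == "__main__":
    query = """
        SELECT concat('', id) as hash, type, to_json(tags) as tags, lat, lon,
               to_json(nds) as nds, to_json(members) as members,
               unix_timestamp(timestamp) as timestamp, uid, user, version
        FROM opencitymodel.planet limit 10
    """
    cmd = build_spark_submit(
        "s3://your_bucket/jars/spark-kinesis-ingester-1.0-SNAPSHOT.jar", query
    )
    # Print a shell-safe rendering; pass `cmd` to subprocess.run to execute it.
    print(shlex.join(cmd))
```

Passing the list form to `subprocess.run(cmd)` bypasses the shell entirely, so the quotes inside the query need no extra escaping.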

OSM

The web app provides a simple UI with a map based on Leaflet: