An adaptive training algorithm for residual networks based on the model's Lipschitz constant

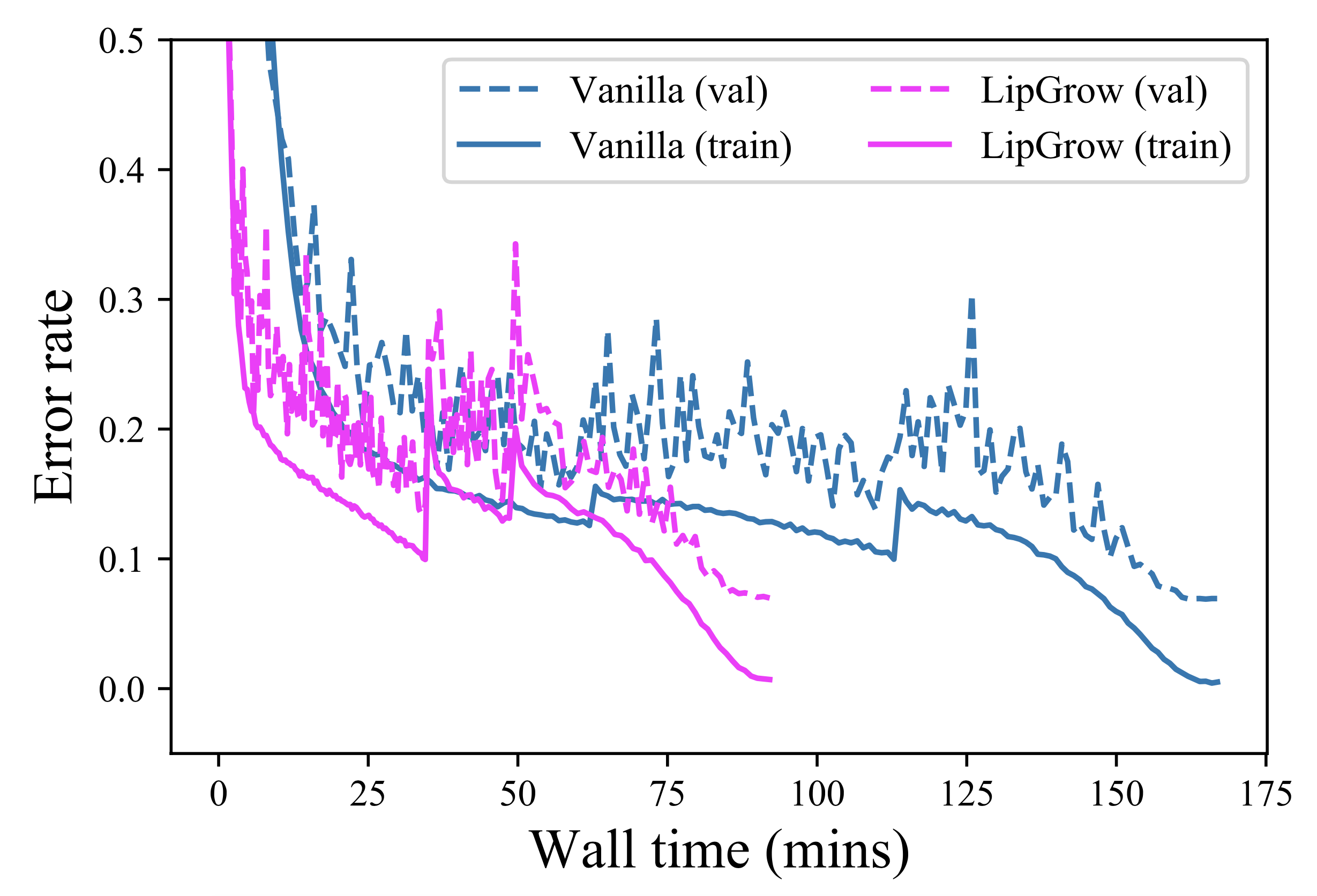

- Our algorithm reduces training time by about 50% when training ResNet-74 on CIFAR-10

- Install PyTorch

- Clone recursively: `git clone --recursive https://github.com/shwinshaker/LipGrow.git`

- By default, build a `./data` directory which includes the datasets

- By default, build a `./checkpoints` directory to save the training output

- CIFAR-10/100: `./launch.sh`

- Tiny-ImageNet: `./imagenet-launch.sh`

- Recipes

  - For vanilla training, set `grow=false`
  - For training with fixed grow epochs, set `grow='fixed'` and provide the grow epochs via `dupEpoch`
  - For adaptive training, set `grow='adapt'` and use the adaptive cosine learning rate scheduler, `scheduler='adacosine'`
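To make the fixed-epoch recipe more concrete, here is a minimal Python sketch of how a list of grow epochs could translate into network depth over the course of training. It is not the repository's code. It assumes that each grow step doubles the number of residual blocks in every subnetwork and that the CIFAR network follows the common 6n+2 depth convention. The function names, the starting value of 3 blocks, and the epochs 60 and 110 are all hypothetical.

```python
from bisect import bisect_right


def blocks_at_epoch(epoch, dup_epochs, init_blocks=3):
    """Blocks per subnetwork at `epoch`.

    Assumption: every epoch listed in `dup_epochs` triggers one grow step
    at the start of that epoch, and each grow step doubles the block count.
    """
    n_grows = bisect_right(sorted(dup_epochs), epoch)
    return init_blocks * 2 ** n_grows


def cifar_resnet_depth(blocks_per_subnetwork):
    """Depth of a CIFAR-style ResNet: 3 subnetworks of two-layer blocks,
    plus the first convolution and the final classifier (6n + 2)."""
    return 6 * blocks_per_subnetwork + 2


if __name__ == "__main__":
    dup_epochs = [60, 110]  # hypothetical values for illustration only
    for epoch in (0, 59, 60, 110, 163):
        n = blocks_at_epoch(epoch, dup_epochs)
        print(f"epoch {epoch:3d}: {n:2d} blocks/subnetwork, "
              f"ResNet-{cifar_resnet_depth(n)}")
```

Under these assumptions, training starts at ResNet-20, grows to ResNet-38 at epoch 60, and reaches ResNet-74 at epoch 110. The adaptive recipe would replace the fixed epoch list with a criterion evaluated during training, which this sketch does not attempt to model.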

- The ResNet architecture for ImageNet is slightly different from the published one. The uneven number of blocks in each subnetwork requires a different grow scheduler for each subnetwork, which demands some extra work

If you find our algorithm helpful, consider citing our paper

Towards Adaptive Residual Network Training: A Neural-ODE Perspective

    @inproceedings{Dong2020TowardsAR,
      title={Towards Adaptive Residual Network Training: A Neural-ODE Perspective},
      author={Chengyu Dong and Liyuan Liu and Zichao Li and Jingbo Shang},
      year={2020}
    }