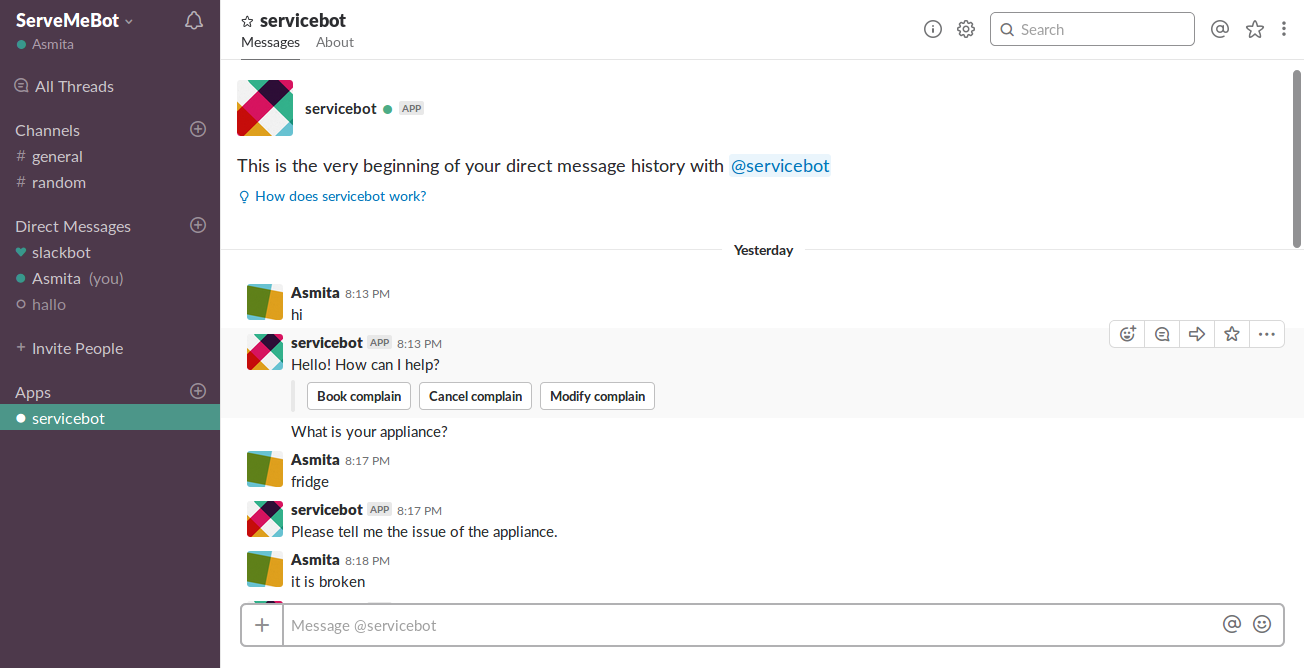

A chatbot built with Rasa NLU and Rasa Core that consumers can use on their phones to book service appointments for their appliances. It uses Rasa NLU 0.13.2 and Rasa Core 0.11.3.

Clone this repo to get started:

`git clone https://github.com/WonderboyWonderboyv/ServeMeBot.git`

Cloning creates a directory on your local machine that contains all the files of this repo. This is your 'project directory'.

If you haven't installed Rasa NLU and Rasa Core yet, install them by navigating to the project directory and running:

`pip install -r requirements.txt`

You also need to install a spaCy English language model. You can install it by running:

`python -m spacy download en`

The project directory contains some training data and the main files that serve as the basis of the custom assistant. It follows the usual file structure of an assistant built with Rasa Stack and consists of the following files:

- `data/nlu_data.json` contains training examples for the intents.

- `nlu_config.yml` contains the configuration of the Rasa NLU pipeline.

- `data/stories.md` contains training stories, which represent conversations between a user and the assistant.

- `domain.yml` describes the assistant's domain: the intents, entities, slots, templates and actions the assistant should be aware of.

- `actions.py` contains the code of the custom action.

- `endpoints.yml` contains the webhook configuration for the custom action server.

- Train the Rasa NLU model by running:

`python nlu_tf_model.py`

This trains the Rasa NLU model and stores it in the `models/nlu_tf` folder of the project directory.
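Before (re)training, it can help to sanity-check `data/nlu_data.json`. The sketch below assumes the file uses Rasa NLU's JSON training format (a `rasa_nlu_data` object holding a `common_examples` list of `text`/`intent`/`entities` items). It counts examples per intent and flags entities whose `start`/`end` offsets do not cover the annotated `value`. The helper `check_nlu_data` and the sample intents are hypothetical. Mismatches are only warnings, because a `value` that differs from the text span can be a deliberate synonym.

```python
import json
from collections import Counter


def check_nlu_data(raw_json):
    """Count examples per intent and collect warnings for Rasa NLU JSON data.

    Hypothetical helper; assumes the {"rasa_nlu_data": {"common_examples": [...]}} layout.
    """
    data = json.loads(raw_json)
    examples = data.get("rasa_nlu_data", {}).get("common_examples", [])
    counts = Counter()
    warnings = []
    for i, example in enumerate(examples):
        text = example.get("text", "")
        intent = example.get("intent")
        if intent:
            counts[intent] += 1
        else:
            warnings.append("example {}: missing intent".format(i))
        for entity in example.get("entities", []):
            span = text[entity["start"]:entity["end"]]
            if span != entity.get("value"):
                # Could be an intentional synonym, so only warn.
                warnings.append(
                    "example {}: text[{}:{}] is {!r}, value is {!r}".format(
                        i, entity["start"], entity["end"], span, entity.get("value")))
    return counts, warnings


# Illustrative data; in the project you would pass the contents of data/nlu_data.json.
sample = json.dumps({"rasa_nlu_data": {"common_examples": [
    {"text": "book a repair for my fridge", "intent": "book_service",
     "entities": [{"start": 21, "end": 27, "value": "fridge", "entity": "appliance"}]},
    {"text": "hello", "intent": "greet", "entities": []},
]}})

counts, warnings = check_nlu_data(sample)
print(dict(counts))  # {'book_service': 1, 'greet': 1}
print(warnings)      # []
```

Intents with very few examples, or a long list of warnings, are worth fixing before running `nlu_tf_model.py` again.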

- Train the Rasa Core model by running:

`python train_bot_online.py`

This trains the Rasa Core model and stores it in the `models/dialogue` folder of the project directory.
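Rasa Core learns its dialogue policy from `data/stories.md`, and every action a story uses must also be declared in `domain.yml`. The following is a minimal, hypothetical parser for the Markdown story format used by Rasa Core 0.11 (`## story name`, `* intent{...}` for user turns, `- action` for bot turns). It is not Rasa's own reader and it skips checkpoints and comments. It lists the actions referenced in the stories so you can compare them with the domain. The story, intent and action names in the sample are invented.

```python
import json
import re

# Matches "* intent" or '* intent{"entity": "value"}'
USER_LINE = re.compile(r"^\*\s*([\w.-]+)\s*(\{.*\})?\s*$")


def parse_stories(markdown):
    """Map each story name to a list of (kind, name, args) steps."""
    stories = {}
    current = None
    for raw_line in markdown.splitlines():
        line = raw_line.strip()
        if line.startswith("## "):
            current = line[3:].strip()
            stories[current] = []
        elif current is None or not line or line.startswith("<!--"):
            continue  # text outside a story, blank lines, comments
        elif line.startswith("*"):
            match = USER_LINE.match(line)
            if match is None:
                raise ValueError("cannot parse user turn: " + line)
            args = json.loads(match.group(2)) if match.group(2) else {}
            stories[current].append(("user", match.group(1), args))
        elif line.startswith("-"):
            # "- action_name" or an event such as '- slot{"name": "value"}'
            name, _, payload = line[1:].strip().partition("{")
            args = json.loads("{" + payload) if payload else {}
            stories[current].append(("action", name.strip(), args))
        # Other lines (e.g. "> checkpoint") are ignored in this sketch.
    return stories


def actions_used(stories):
    """Return bot action names; steps with a {...} payload are events, not actions."""
    return {name for steps in stories.values()
            for kind, name, args in steps if kind == "action" and not args}


SAMPLE = """
## book a fridge repair
* greet
  - utter_greet
* book_service{"appliance": "fridge"}
  - action_book_appointment
"""

print(sorted(actions_used(parse_stories(SAMPLE))))
# ['action_book_appointment', 'utter_greet']
```

Any name printed here that is missing from the `actions` (or `templates`) section of `domain.yml` points to a story that needs fixing before training.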

- Start the server for the custom action by running:

`python -m rasa_core_sdk.endpoint --actions actions`

This starts the action server, which runs the custom action defined in `actions.py`.
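The contents of `actions.py` are not shown here, so the following is only a sketch of the general shape of a `rasa_core_sdk` custom action. In the real file the class would subclass `rasa_core_sdk.Action` and receive the SDK's dispatcher and tracker. To keep the example runnable without Rasa installed, small stand-ins replace them. The action name `action_book_appointment` and the slots `appliance`, `date` and `booking_confirmed` are hypothetical.

```python
# Minimal stand-ins so this sketch runs without rasa_core_sdk installed.
# In actions.py you would instead write:
#     from rasa_core_sdk import Action
#     from rasa_core_sdk.events import SlotSet
class Action(object):
    def name(self):
        raise NotImplementedError

    def run(self, dispatcher, tracker, domain):
        raise NotImplementedError


def SlotSet(key, value=None, timestamp=None):
    # Stand-in returning a plain "slot" event dict, the shape sent back to Rasa Core.
    return {"event": "slot", "timestamp": timestamp, "name": key, "value": value}


class StubDispatcher(object):
    """Collects outgoing messages, like the SDK's CollectingDispatcher."""

    def __init__(self):
        self.messages = []

    def utter_message(self, text):
        self.messages.append(text)


class StubTracker(object):
    """Holds slot values and exposes them through get_slot(), like the SDK's Tracker."""

    def __init__(self, slots):
        self.slots = slots

    def get_slot(self, key):
        return self.slots.get(key)


class ActionBookAppointment(Action):
    """Hypothetical booking action; names and slots are illustrative."""

    def name(self):
        # Must match the action name listed in domain.yml.
        return "action_book_appointment"

    def run(self, dispatcher, tracker, domain):
        appliance = tracker.get_slot("appliance")
        date = tracker.get_slot("date")
        if not appliance or not date:
            dispatcher.utter_message("Which appliance needs service, and on what date?")
            return []
        # A real action would call a booking system here.
        dispatcher.utter_message(
            "Your {} service appointment is booked for {}.".format(appliance, date))
        return [SlotSet("booking_confirmed", True)]


dispatcher = StubDispatcher()
events = ActionBookAppointment().run(
    dispatcher, StubTracker({"appliance": "fridge", "date": "Monday"}), domain={})
print(dispatcher.messages)  # ['Your fridge service appointment is booked for Monday.']
print(events)
```

The action returns a list of events rather than changing the conversation state directly; Rasa Core applies those events to its tracker after the action server responds.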

- Test the assistant by running:

`python dialogue_management_model.py`

This loads the assistant in your terminal so you can chat with it.
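The action server is started before the assistant because Rasa Core calls it whenever the dialogue model predicts a custom action. The `rasa_core_sdk` action server listens on port 5055 by default. The sketch below assumes that default (check the `url` in `endpoints.yml` if it was changed) and only tests whether anything accepts TCP connections on the port. `is_port_open` is a hypothetical helper, not part of Rasa.

```python
import socket


def is_port_open(host="localhost", port=5055, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds within timeout seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, or timed out
        return False


if not is_port_open():
    print("Action server not reachable on port 5055; start it with:")
    print("  python -m rasa_core_sdk.endpoint --actions actions")
```

A successful connection only shows that something is listening on the port, not that it is the action server; the server's own startup log is the more reliable check.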

- Configure the Slack app.

- Make sure the custom action server is running.

- Start the agent by running `run_app.py` (add your Slack bot token to it first).

- Start ngrok on port 5004 (for example, `ngrok http 5004`).

- On the 'Event Subscriptions' page of the Slack app configuration, enter the URL `https://your_ngrok_url/webhooks/slack/webhook`.

- Talk to your bot.
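When you enter the Request URL on the 'Event Subscriptions' page, Slack immediately sends a `url_verification` request containing a `challenge` string and expects that string echoed back with HTTP 200. This is why `run_app.py` and ngrok must already be running at that point. Rasa Core's Slack input channel is expected to answer this handshake for you. The sketch below only illustrates the exchange. The helper names and the ngrok hostname are hypothetical.

```python
import json


def slack_webhook_url(ngrok_base_url):
    """Build the Request URL for Slack's Event Subscriptions page."""
    return ngrok_base_url.rstrip("/") + "/webhooks/slack/webhook"


def handle_slack_request(raw_body):
    """Return (status_code, response_body) for a Slack Events API request."""
    payload = json.loads(raw_body)
    if payload.get("type") == "url_verification":
        # Slack verifies the endpoint by expecting its challenge echoed back.
        return 200, payload["challenge"]
    # Anything else (e.g. an "event_callback" carrying a user message)
    # would be handed to the bot here.
    return 200, ""


print(slack_webhook_url("https://abc123.ngrok.io/"))
# https://abc123.ngrok.io/webhooks/slack/webhook
print(handle_slack_request('{"type": "url_verification", "challenge": "3eZbrw1a"}'))
# (200, '3eZbrw1a')
```

If Slack reports that the URL did not respond, first check that ngrok forwards to port 5004 and that the agent started without errors.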