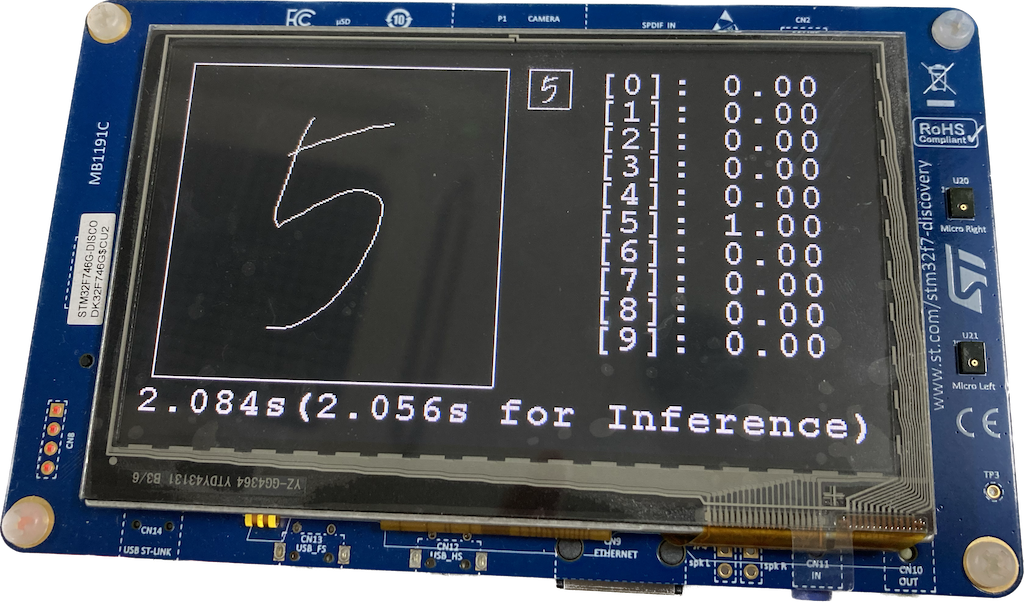

On-device MNIST inference example using the STM32F746G-DISCO Board & TensorFlow Lite for Microcontrollers (TFLM)

- STM32F746G-DISCO Board

- STM32CubeIDE

- X-CUBE-AI 7.3.0 (Built in this project)

- STM32Cube Firmware for F7 Series, Version 1.17.0 (Built in this project)

- Create new STM32 project in STM32CubeIDE

- Menu [File] > [New] > [STM32 Project]

- Tab [Board Selector] > Find & Select 'STM32F746G-DISCO' > Button [Next]

- Configure your project (e.g. project name) > Button [Finish]

- Open the {project-name}.ioc file > Click 'Software Packs' in [Pinout & Configuration] Tab > Click 'Select Components'

- Find and Install 'STMicroelectronics.X-CUBE-AI' > Select 'Artificial Intelligence X-CUBE-AI Core'

- Click 'Software Packs' in 'Categories' tab and select 'STMicroelectronics.X-CUBE-AI.7.3.0'

- Check 'Artificial Intelligence X-CUBE-AI'

- Save the {project-name}.ioc file to generate code

- Store your Keras (TensorFlow) model as a SavedModel using Python

model = ... # Get model

model.save('/path/to/location') # tf.keras.models.save_model()

- Convert SavedModel to TFLite model

import tensorflow as tf

# Convert the model (path to the SavedModel directory)

converter = tf.lite.TFLiteConverter.from_saved_model('/path/to/location')

tflite_model = converter.convert()

# Save the model

with open('model.tflite', 'wb') as f:

    f.write(tflite_model)

- Open the {project-name}.ioc file

- Tab [Pinout & Configuration] > [Categories] > [Software Packs] > Select [STMicroelectronics.X-CUBE-AI]

- Button [Add network] > Select 'TFLite' and 'TFLite Micro runtime' > Button [Browse...] > Select your TFLite model (.tflite file)

- This step is essential to use TFLM

- Button [Analyze] > [OK]

- Remember the reported RAM usage; it is needed to allocate tensor_arena later

- Save the {project-name}.ioc file to generate code and convert the tflite file to a C byte array

- In the X-CUBE-AI/App/network.c file, you can see the C byte array of the tflite file

In debug_log_imp.cc, there is no implementation of the tflm_io_write function, which TFLM uses to write log messages, so we have to define it ourselves.
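If the log messages are useful during bring-up, the hook could keep them instead of discarding them. The following is only a sketch under assumptions: the buffer name `tflm_log_buf`, its length counter, and the 256-byte size are hypothetical, and the return value simply mirrors the empty stub because the expected return semantics are not stated here. Only the function name and signature come from this project.

```c
#include <stdint.h>
#include <string.h>

/* Hypothetical capture buffer; the name and size are illustrative only. */
#define TFLM_LOG_BUF_SIZE 256u

static char tflm_log_buf[TFLM_LOG_BUF_SIZE];
static uint16_t tflm_log_len = 0;

/* Same signature as the hook named in the text. Appends as much of the
 * message as fits and keeps the buffer NUL-terminated, so it can be read
 * as a C string in a debugger. Anything that does not fit is dropped. */
int tflm_io_write(const void *buff, uint16_t count) {
    uint16_t room = (uint16_t)(TFLM_LOG_BUF_SIZE - 1u - tflm_log_len);
    uint16_t n = (count < room) ? count : room;

    if (buff != NULL && n > 0u) {
        memcpy(&tflm_log_buf[tflm_log_len], buff, n);
        tflm_log_len = (uint16_t)(tflm_log_len + n);
    }
    tflm_log_buf[tflm_log_len] = '\0';
    return 0; /* assumption: mirrors the return value of the empty stub */
}
```

The project's own definition, which simply discards the message, follows.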

int tflm_io_write(const void *buff, uint16_t count) {

    return 0;

}

You can follow the TensorFlow Lite for Microcontrollers Get Started reference to run inference. However, X-CUBE-AI also provides APIs that wrap the TFLM classes and functions. They are highly compatible with STM MCUs and equivalent to using the TFLM library directly, so I used the X-CUBE-AI APIs.

The code below is implemented in tasks.c. Please see the InferenceTask() function.

- Include the library & model headers

#include "tflm_c.h"

#include "network_tflite_data.h"

- tflm_c.h provides the TFLM class and function wrappers

- network_tflite_data.h provides the tflite byte array generated by X-CUBE-AI

- Allocate memory

- We need to preallocate a certain amount of memory for the input, output, and intermediate arrays. This is provided as a uint8_t array of size TENSOR_ARENA_SIZE, which can be determined from the model analysis result (see the Add Model section).
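As a rough illustration of turning the analysis figure into a constant, the sketch below adds some headroom and rounds up to a 16-byte multiple, which is the alignment the TFLM examples typically use for the arena. The 70 KiB figure, the 10% margin, and every name in this block are assumptions for illustration; take the real number from the [Analyze] report for your own model.

```c
#include <stddef.h>
#include <stdint.h>

/* Assumed values for illustration only. */
#define REPORTED_RAM_BYTES  (70u * 1024u)  /* hypothetical analysis result */
#define ARENA_HEADROOM_PCT  10u            /* hypothetical safety margin   */
#define ARENA_ALIGNMENT     16u            /* must be a power of two       */

/* Add the headroom, then round up to a multiple of ARENA_ALIGNMENT. */
#define ARENA_SIZE_FOR(bytes) \
    (((((bytes) * (100u + ARENA_HEADROOM_PCT)) / 100u) \
      + (ARENA_ALIGNMENT - 1u)) & ~(ARENA_ALIGNMENT - 1u))

#define SKETCH_ARENA_SIZE ARENA_SIZE_FOR(REPORTED_RAM_BYTES)

/* C11 alignment specifier keeps the start address 16-byte aligned. */
static _Alignas(16) uint8_t sketch_arena[SKETCH_ARENA_SIZE];
```

If the arena turns out to be too small, model setup is expected to fail, so checking the status returned at creation time is a sensible way to validate the chosen size. The project itself uses a fixed 80 KiB arena: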

#define TENSOR_ARENA_SIZE (80 * 1024)

static uint8_t tensor_arena[TENSOR_ARENA_SIZE];

- Load a model and get a handle

The tflm_c_create function in tflm_c.cc performs the TFLM model loading, operation resolver setup, and interpreter instantiation.

// hdl will hold the X-CUBE-AI instance

// it is passed to the other X-CUBE-AI APIs (e.g. tflm_c_input, tflm_c_output)

uint32_t* hdl;

TfLiteStatus status = tflm_c_create(

    g_tflm_network_model_data, // tflite byte array in network_tflite_data.h

    tensor_arena, // preallocated memory

    TENSOR_ARENA_SIZE, // the size of tensor_arena

    &hdl

);

- Create Input Tensor

tflm_c_tensor_info is used to store tensor data (both input and output)

struct tflm_c_tensor_info input_tensor;

tflm_c_input(hdl, 0, &input_tensor);

float* input = (float*)input_tensor.data;

for (int i = 0; i < TENSOR_INPUT_SIZE; i++) {

    // write the preprocessed data into input_tensor.data

    input[i] = raw_data[i] / 255.0f; // raw_data is defined elsewhere

}

- Inference

tflm_c_invoke(hdl);

- Create Output Tensor

- After calling the tflm_c_invoke function, the output data is available in the output tensor. We can get it by calling the tflm_c_output function, which fills in a tflm_c_tensor_info structure that we then use to read the output data.

struct tflm_c_tensor_info output_tensor;

tflm_c_output(hdl, 0, &output_tensor);

float* output = (float*)output_tensor.data; // output[0], output[1], ..., output[9]

struct tflm_c_tensor_info {

    TfLiteType type; // data type (e.g. float32, uint8, ...)

    int32_t idx; // data index

    uint32_t batch; // axis: 0

    uint32_t height; // axis: 1

    uint32_t width; // axis: 2

    uint32_t depth; // axis: 3

    uint32_t extension; // axis: 4

    uint32_t channels; // axis: 5

    size_t bytes; // tensor data size

    float scale;

    int zero_point;

    void* data; // start address of tensor data

    struct tflm_c_shape shape;

};
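To turn the ten output scores into a predicted digit, one common approach is to take the index of the largest score. The sketch below also shows how the scale and zero_point fields could be applied if the model were quantized, using the affine mapping that TFLite quantized tensors use. The helper names `argmax_f32` and `dequantize_i8` are hypothetical and not part of X-CUBE-AI or TFLM; the model in this example is read as float32, so only the argmax helper would be needed for it.

```c
#include <stddef.h>
#include <stdint.h>

/* Convert one quantized value to a real value with the affine mapping
 * real = scale * (q - zero_point). */
static float dequantize_i8(int8_t q, float scale, int zero_point) {
    return scale * (float)((int)q - zero_point);
}

/* Return the index of the largest score, or -1 for an empty input.
 * On ties, the lowest index wins. */
static int argmax_f32(const float *scores, size_t n) {
    if (scores == NULL || n == 0) {
        return -1;
    }
    size_t best = 0;
    for (size_t i = 1; i < n; i++) {
        if (scores[i] > scores[best]) {
            best = i;
        }
    }
    return (int)best;
}
```

With the `output` pointer obtained above, the predicted digit would be `argmax_f32(output, 10)`.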