The project aims to classify the sentiment of input text into five categories: very bad (1), bad (2), neutral (3), good (4), and very good (5).

Input (X): Text

Output (Y): Sentiment Category (1-5)

The data is the Yelp Dataset: 5,996,996 Yelp reviews in JSON format, each labeled with a sentiment score from 1 to 5.

The dataset is preprocessed in the following order:

- Lowercase all words

- Expand contractions, e.g., I’m → I am

- Remove possessive endings, e.g., McDonald's → McDonald

- Only keep words with known GloVe embeddings.

Because pre-trained GloVe vectors are already available, I did not stem the words, so the models can use all the information in the text.
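The preprocessing steps can be sketched in Python as follows. This is a minimal illustration, not the project's actual ETL code: the regex tokenizer, the tiny `CONTRACTIONS` table, and the `glove_vocab` set (assumed to hold the words of the GloVe vocabulary) are all hypothetical.

```python
import re

# Hypothetical, deliberately tiny contraction table; a real pipeline needs a fuller one.
CONTRACTIONS = {
    "i'm": "i am",
    "it's": "it is",
    "don't": "do not",
}


def preprocess(text, glove_vocab):
    """Apply the preprocessing steps in order and return a list of tokens."""
    # 1. Lowercase, and normalize curly apostrophes (U+2019) to straight ones.
    text = text.lower().replace("\u2019", "'")
    tokens = re.findall(r"[a-z0-9']+", text)

    result = []
    for tok in tokens:
        tok = tok.strip("'")  # drop stray quote marks around a word
        if not tok:
            continue
        # 2. Expand contractions: "i'm" -> "i am".
        if tok in CONTRACTIONS:
            result.extend(CONTRACTIONS[tok].split())
            continue
        # 3. Remove a possessive "'s": "mcdonald's" -> "mcdonald".
        if tok.endswith("'s"):
            tok = tok[:-2]
        result.append(tok)

    # 4. Keep only tokens that have a pre-trained GloVe vector.
    return [t for t in result if t in glove_vocab]
```

For example, `preprocess("I’m at McDonald's!", {"i", "am", "at", "mcdonald"})` yields `["i", "am", "at", "mcdonald"]`. Contractions are checked before the possessive rule so that "it's" expands to "it is" instead of being truncated to "it".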

The ETL pipeline converts the raw JSON into processed, indexed HDF5 files for the ML models. The data is stored in AWS S3.

Fig 1. Sentiment Analysis Data Pipeline

All ETL jobs are done in Python.
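One way to implement the JSON-to-HDF5 step is sketched below. It assumes the reviews have already been preprocessed into token lists and that index 0 of the embedding matrix is reserved for padding; the dataset names `x` and `y`, the fixed `max_len`, and gzip compression are illustrative choices, not details taken from the project.

```python
import numpy as np


def build_index(glove_words):
    """Map each GloVe word to a row of the embedding matrix; row 0 is reserved for padding."""
    return {word: i + 1 for i, word in enumerate(glove_words)}


def encode(token_lists, index, max_len):
    """Turn preprocessed token lists into a zero-padded int32 matrix of word indices."""
    x = np.zeros((len(token_lists), max_len), dtype=np.int32)
    for row, tokens in enumerate(token_lists):
        # Tokens were already filtered to GloVe words, so every lookup succeeds.
        ids = [index[t] for t in tokens[:max_len]]
        x[row, : len(ids)] = ids
    return x


def write_hdf5(path, x, y):
    """Write the index matrix and the 1-5 labels to an HDF5 file (needs the h5py package)."""
    import h5py  # imported lazily so the helpers above work without h5py installed

    with h5py.File(path, "w") as f:
        f.create_dataset("x", data=x, compression="gzip")
        f.create_dataset("y", data=np.asarray(y, dtype=np.int8))
```

The resulting file could then be uploaded to S3, for example with boto3's `upload_file`; the bucket layout is not described in this write-up.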

I evaluated the following models for this project:

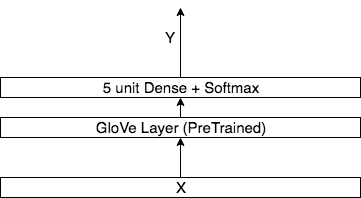

This model is based on Stanford’s GloVe project [1]. I averaged the pre-trained GloVe embeddings of the input words and added a dense layer on top to classify the text.

Fig 2. GloVe average model architecture

Implemented in TensorFlow.
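A minimal Keras sketch of the GloVe average model, assuming `embedding_matrix` is a NumPy array of GloVe vectors whose row 0 is reserved for padding. The optimizer and the loss wiring are assumptions; the write-up only specifies frozen GloVe embeddings, averaging, and a dense classifier.

```python
import numpy as np
import tensorflow as tf


def build_glove_average_model(embedding_matrix, max_len, num_classes=5):
    """Average frozen GloVe vectors over the text, then classify with one dense layer."""
    embedding_matrix = np.asarray(embedding_matrix, dtype="float32")
    vocab_size, dim = embedding_matrix.shape
    embedding = tf.keras.layers.Embedding(
        vocab_size,
        dim,
        trainable=False,  # keep the pre-trained GloVe vectors fixed
        mask_zero=True,  # index 0 is padding and is excluded from the average
    )
    inputs = tf.keras.Input(shape=(max_len,), dtype="int32")
    x = embedding(inputs)  # calling the layer builds its weight matrix
    embedding.set_weights([embedding_matrix])  # load the GloVe vectors
    x = tf.keras.layers.GlobalAveragePooling1D()(x)
    outputs = tf.keras.layers.Dense(num_classes, activation="softmax")(x)
    model = tf.keras.Model(inputs, outputs)
    # Labels 1-5 must be shifted to class indices 0-4 for this loss.
    model.compile(optimizer="adam", loss="sparse_categorical_crossentropy", metrics=["accuracy"])
    return model
```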

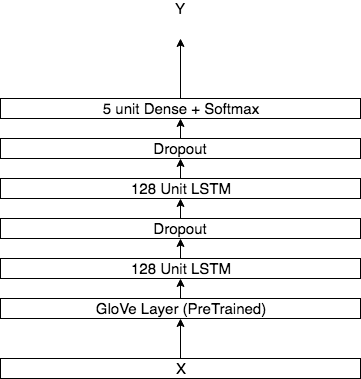

This model builds on the previous one by adding two LSTM layers, with dropout in between.

Fig 3. GloVe + LSTM model architecture

Implemented in TensorFlow.
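A minimal Keras sketch of a GloVe + LSTM classifier, assuming a frozen GloVe embedding matrix whose row 0 is reserved for padding. The layer width (`units=128`) and dropout rate (`0.5`) are hypothetical, since the write-up does not state them.

```python
import numpy as np
import tensorflow as tf

layers = tf.keras.layers


def build_glove_lstm_model(embedding_matrix, max_len, units=128, dropout=0.5, num_classes=5):
    """Frozen GloVe embeddings -> LSTM -> dropout -> LSTM -> softmax classifier."""
    embedding_matrix = np.asarray(embedding_matrix, dtype="float32")
    vocab_size, dim = embedding_matrix.shape
    embedding = layers.Embedding(vocab_size, dim, trainable=False, mask_zero=True)
    inputs = tf.keras.Input(shape=(max_len,), dtype="int32")
    x = embedding(inputs)
    embedding.set_weights([embedding_matrix])  # load the GloVe vectors
    x = layers.LSTM(units, return_sequences=True)(x)  # first LSTM emits the whole sequence
    x = layers.Dropout(dropout)(x)
    x = layers.LSTM(units)(x)  # second LSTM emits only its final state
    outputs = layers.Dense(num_classes, activation="softmax")(x)
    model = tf.keras.Model(inputs, outputs)
    model.compile(optimizer="adam", loss="sparse_categorical_crossentropy", metrics=["accuracy"])
    return model
```

The padding mask produced by `mask_zero=True` propagates through `Dropout` to both LSTM layers, so padded positions do not affect the final state.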

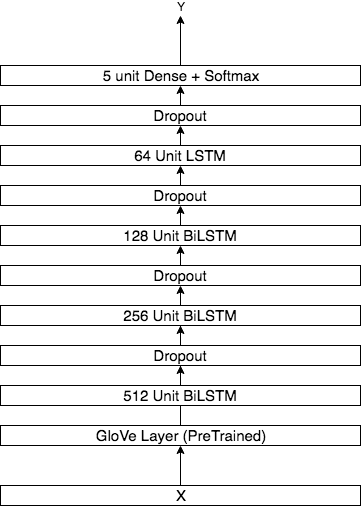

This model builds on the previous one by using bidirectional LSTMs: four LSTM layers in total, with dropout in between.

Fig 4. GloVe + BiLSTM model architecture

Implemented in TensorFlow.
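A minimal Keras sketch of a GloVe + BiLSTM classifier. It assumes the four LSTM layers are arranged as two bidirectional layers, each running one LSTM forward and one backward over the text; that reading, the width `units=128`, and the dropout rate `0.5` are assumptions rather than details from the write-up.

```python
import numpy as np
import tensorflow as tf

layers = tf.keras.layers


def build_glove_bilstm_model(embedding_matrix, max_len, units=128, dropout=0.5, num_classes=5):
    """Frozen GloVe embeddings -> BiLSTM -> dropout -> BiLSTM -> softmax classifier."""
    embedding_matrix = np.asarray(embedding_matrix, dtype="float32")
    vocab_size, dim = embedding_matrix.shape
    embedding = layers.Embedding(vocab_size, dim, trainable=False, mask_zero=True)
    inputs = tf.keras.Input(shape=(max_len,), dtype="int32")
    x = embedding(inputs)
    embedding.set_weights([embedding_matrix])  # load the GloVe vectors
    # Each Bidirectional wrapper holds two LSTMs (forward and backward): four LSTMs in total.
    x = layers.Bidirectional(layers.LSTM(units, return_sequences=True))(x)
    x = layers.Dropout(dropout)(x)
    x = layers.Bidirectional(layers.LSTM(units))(x)
    outputs = layers.Dense(num_classes, activation="softmax")(x)
    model = tf.keras.Model(inputs, outputs)
    model.compile(optimizer="adam", loss="sparse_categorical_crossentropy", metrics=["accuracy"])
    return model
```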

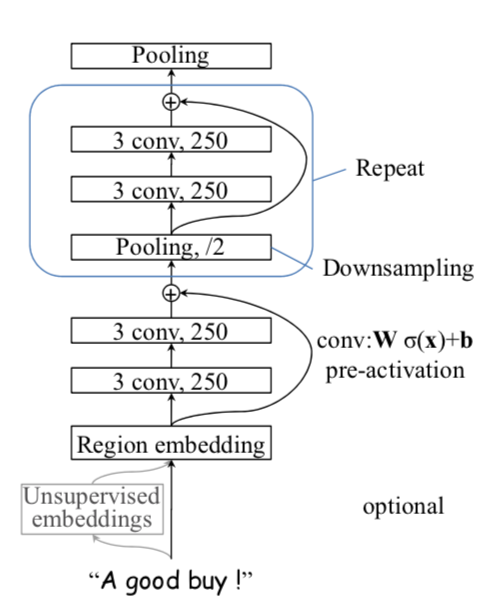

This model is based on the Deep Pyramid Convolutional Neural Network (DPCNN) of Rie Johnson and Tong Zhang [2] for text categorization.

Fig 5. DPCNN Architecture

Implemented in TensorFlow.
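A simplified Keras sketch of the DPCNN idea: blocks of two pre-activation convolutions with identity shortcuts, and downsampling by max pooling (size 3, stride 2) with a fixed number of filters, so each stage halves the sequence length. A plain convolution over the frozen GloVe vectors stands in for the paper's region embedding, and the stopping rule, filter count default, and classifier head are assumptions, not the project's exact configuration.

```python
import numpy as np
import tensorflow as tf

layers = tf.keras.layers


def conv_block(x, filters):
    """Two pre-activation 3-wide convolutions wrapped in an identity shortcut."""
    shortcut = x
    for _ in range(2):
        x = layers.Activation("relu")(x)  # pre-activation: ReLU before each convolution
        x = layers.Conv1D(filters, 3, padding="same")(x)
    return layers.Add()([shortcut, x])


def build_dpcnn(embedding_matrix, max_len, filters=250, num_classes=5):
    embedding_matrix = np.asarray(embedding_matrix, dtype="float32")
    vocab_size, dim = embedding_matrix.shape
    embedding = layers.Embedding(vocab_size, dim, trainable=False)
    inputs = tf.keras.Input(shape=(max_len,), dtype="int32")
    x = embedding(inputs)
    embedding.set_weights([embedding_matrix])  # load the GloVe vectors
    # Stand-in for the region embedding: a convolution over the word vectors.
    x = layers.Conv1D(filters, 3, padding="same")(x)
    x = conv_block(x, filters)
    # Pyramid: halve the length with a fixed filter count until at most 2 steps remain.
    while x.shape[1] > 2:
        x = layers.MaxPooling1D(pool_size=3, strides=2, padding="same")(x)
        x = conv_block(x, filters)
    x = layers.GlobalMaxPooling1D()(x)
    outputs = layers.Dense(num_classes, activation="softmax")(x)
    model = tf.keras.Model(inputs, outputs)
    model.compile(optimizer="adam", loss="sparse_categorical_crossentropy", metrics=["accuracy"])
    return model
```

Because the filter count stays constant while the length halves, the computation per block roughly halves at each stage, which is what gives the network its "pyramid" shape.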

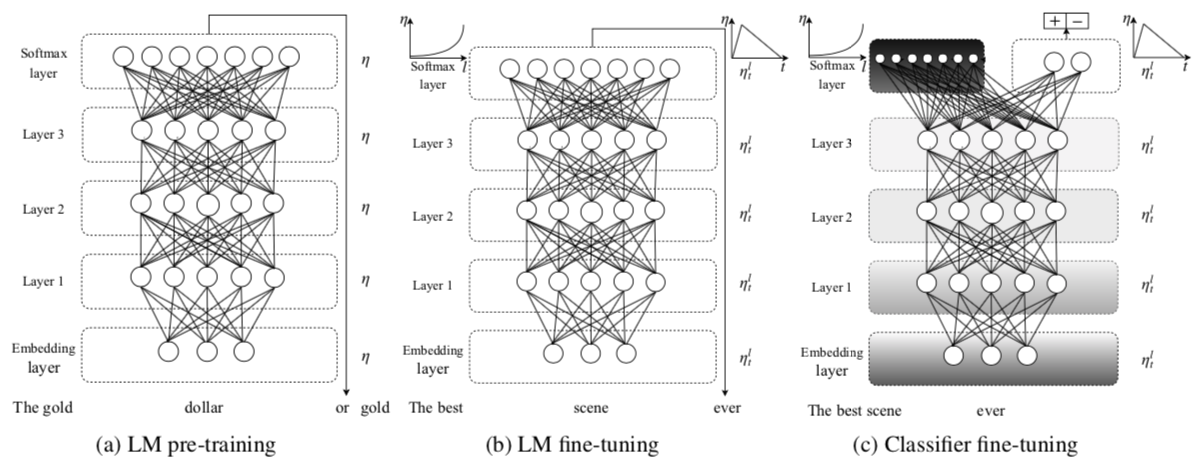

This model is based on Jeremy Howard and Sebastian Ruder’s ULMFiT [3], which was state of the art at the time of writing.

Fig 6. ULMFiT model architecture

Implemented in TensorFlow.
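ULMFiT [3] fine-tunes a pre-trained language model with, among other techniques, discriminative learning rates (each lower layer gets the rate of the layer above divided by 2.6) and slanted triangular learning rates (a short linear warm-up followed by a long linear decay). The sketch below computes both schedules in plain Python; the defaults follow the values suggested in the paper, and how they were wired into this project's TensorFlow implementation is not described here.

```python
import math


def slanted_triangular_lr(t, total_steps, lr_max=0.01, cut_frac=0.1, ratio=32):
    """Learning rate at training step t under ULMFiT's slanted triangular schedule."""
    cut = math.floor(total_steps * cut_frac)  # step at which the rate peaks
    if t < cut:
        p = t / cut  # short linear increase
    else:
        p = 1 - (t - cut) / (cut * (1 / cut_frac - 1))  # long linear decrease
    return lr_max * (1 + p * (ratio - 1)) / ratio


def discriminative_lrs(lr_last, num_layers, factor=2.6):
    """Per-layer learning rates, lowest layer first; each layer below uses the rate above / factor."""
    return [lr_last / factor ** (num_layers - 1 - i) for i in range(num_layers)]
```

The schedule starts at `lr_max / ratio`, peaks at `lr_max` after `cut_frac` of the steps, and decays back to `lr_max / ratio` by the last step.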

The dataset was split into 98% training, 1% dev, and 1% test sets. The model was trained on a single p3.16xlarge instance running the AWS Deep Learning AMI, and a checkpoint was saved after each epoch.
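A 98/1/1 split can be sketched with a seeded shuffle of the review indices. The function name and the seed are hypothetical; the write-up does not say how the split was drawn.

```python
import numpy as np


def split_indices(n, train_frac=0.98, dev_frac=0.01, seed=0):
    """Shuffle review indices and cut them into train / dev / test index arrays."""
    perm = np.random.default_rng(seed).permutation(n)
    n_train = int(n * train_frac)
    n_dev = int(n * dev_frac)
    train = perm[:n_train]
    dev = perm[n_train : n_train + n_dev]
    test = perm[n_train + n_dev :]  # the remainder (about 1%) becomes the test set
    return train, dev, test
```

For per-epoch checkpoints in Keras, one option is passing `tf.keras.callbacks.ModelCheckpoint("checkpoints/epoch_{epoch:02d}.keras")` to `model.fit(callbacks=[...])`; it saves once per epoch by default. The directory name here is illustrative.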

Inference is served from a saved model in a Docker container on AWS Fargate. I used Fargate rather than AWS Lambda because the last model exceeded Lambda’s deployment size limit.
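Inside the container, the classifier's softmax output has to be mapped back from class indices 0-4 to the 1-5 sentiment scale. A minimal sketch, assuming a Keras model whose output has one probability per class; the function names are hypothetical.

```python
import numpy as np


def to_sentiment_labels(probs):
    """Convert a (batch, 5) array of class probabilities into 1-5 sentiment labels."""
    return (np.argmax(np.asarray(probs), axis=1) + 1).tolist()


def predict_sentiment(model, x):
    """Run a loaded Keras model on encoded reviews and return 1-5 labels."""
    return to_sentiment_labels(model.predict(x, verbose=0))
```

The model itself could be loaded once at container start-up, e.g. with `tf.keras.models.load_model(path)`, where `path` points to one of the saved checkpoints.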

- [1] Jeffrey Pennington, Richard Socher, and Christopher D. Manning. 2014. GloVe: Global Vectors for Word Representation.

- [2] Rie Johnson and Tong Zhang. 2017. Deep Pyramid Convolutional Neural Networks for Text Categorization.

- [3] Jeremy Howard and Sebastian Ruder. 2018. Universal Language Model Fine-tuning for Text Classification.