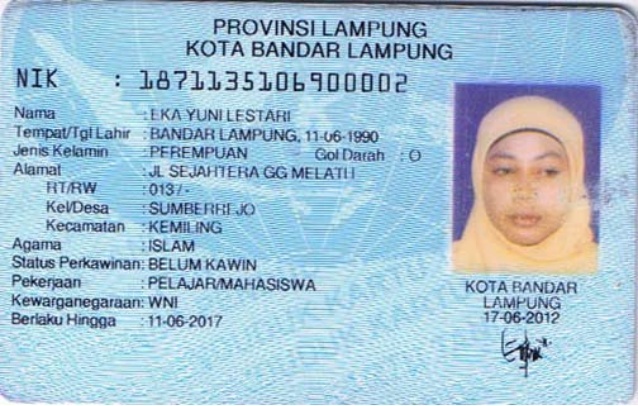

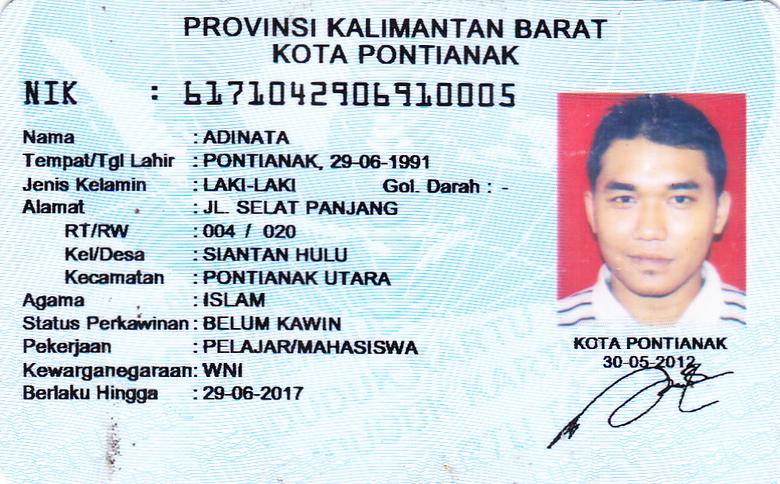

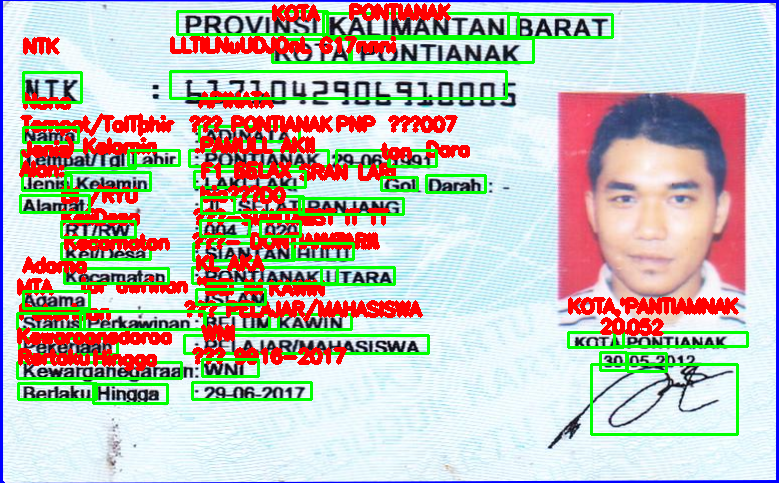

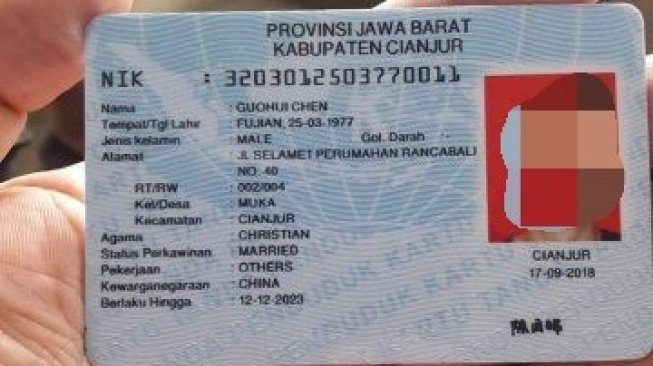

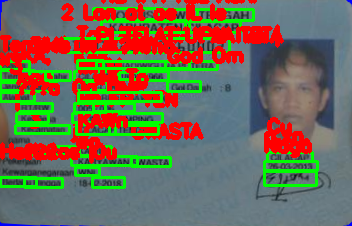

Using OpenCV and image recognition to parse data and validate Indonesian identification cards

There are multiple challenges in achieving the desired result:

- Detect where the card is

- Crop the original picture to just the card

- If the card is slanted in the picture, rotate it so that it is upright

- Detect the text inside the card

- Calculate a confidence level for the text detection. If it is below a certain threshold, ask the user to re-upload the picture or reposition the camera. The camera's flash might also need to be turned on or off.

- Image/sensor noise. Sensor noise from a handheld camera is typically higher than that of a traditional scanner.

- Viewing angles. The camera might not be held parallel to the card, so the text can appear skewed.

- Blurring.

- Lighting conditions. We cannot control whether the camera's flash is on or whether the sun is shining brightly.

- Resolution. Differences in camera quality might affect the amount of noise in the scene.

- Non-paper objects. Paper is not reflective, but text that appears on a reflective surface is harder to detect.

The project still uses some external libraries to process the text. However, it will not use any paid services such as the Google Cloud Vision API, which basically does everything for you.

Although a machine learning model maintained by Google would likely yield better results in a production environment, the goal of this project is to learn what the Google Cloud Vision API is doing under the hood.

- Figure out the pattern in KTP cards and predict where information will be (i.e. where the name, address, and date of birth will appear in the picture)

- Use text recognition to parse text from the picture

- Figure out how to calculate confidence metrics

- Convert to microservice
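One possible starting point for the confidence calculation is to average the per-word confidences reported by the OCR engine and compare the result with a threshold. The sketch below is an assumption, not a settled design: the function name `summarize_confidence` is hypothetical, the 0 to 100 scale and the use of negative values for non-text boxes follow what engines such as Tesseract report, and the default threshold of 60 is an arbitrary placeholder that would need tuning on real KTP photos.

```python
from statistics import mean


def summarize_confidence(words, confidences, threshold=60.0):
    """Aggregate per-word OCR confidences into an accept/reject decision.

    words: recognized strings, one per detected box.
    confidences: matching scores on an assumed 0-100 scale; negative
        values mark boxes that contain no recognized text.
    threshold: assumed cut-off below which the user should retake the photo.
    """
    scored = []
    for word, conf in zip(words, confidences):
        conf = float(conf)  # some engines return scores as strings
        if word.strip() and conf >= 0:
            scored.append(conf)

    if not scored:
        # Nothing readable was found, so the picture is rejected.
        return {"mean": 0.0, "lowest": 0.0, "accept": False}

    average = mean(scored)
    return {
        "mean": average,
        "lowest": min(scored),
        "accept": average >= threshold,
    }


result = summarize_confidence(["NIK", "3171", "", "Nama"], ["91", 85.0, "-1", 40])
print(result["accept"], result["lowest"])
```

Reporting the lowest score alongside the mean is a deliberate choice here: a single badly read field (for example the ID number) can hide behind a good average, so a stricter check could reject on `lowest` instead.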

Running the migration: alembic upgrade head