by Mu Chen, Zhedong Zheng, Yi Yang, and Tat-Seng Chua

Update on 14/11/2022: the arXiv version of PiPa is available.

Update on 13/12/2022: code and checkpoints are released.

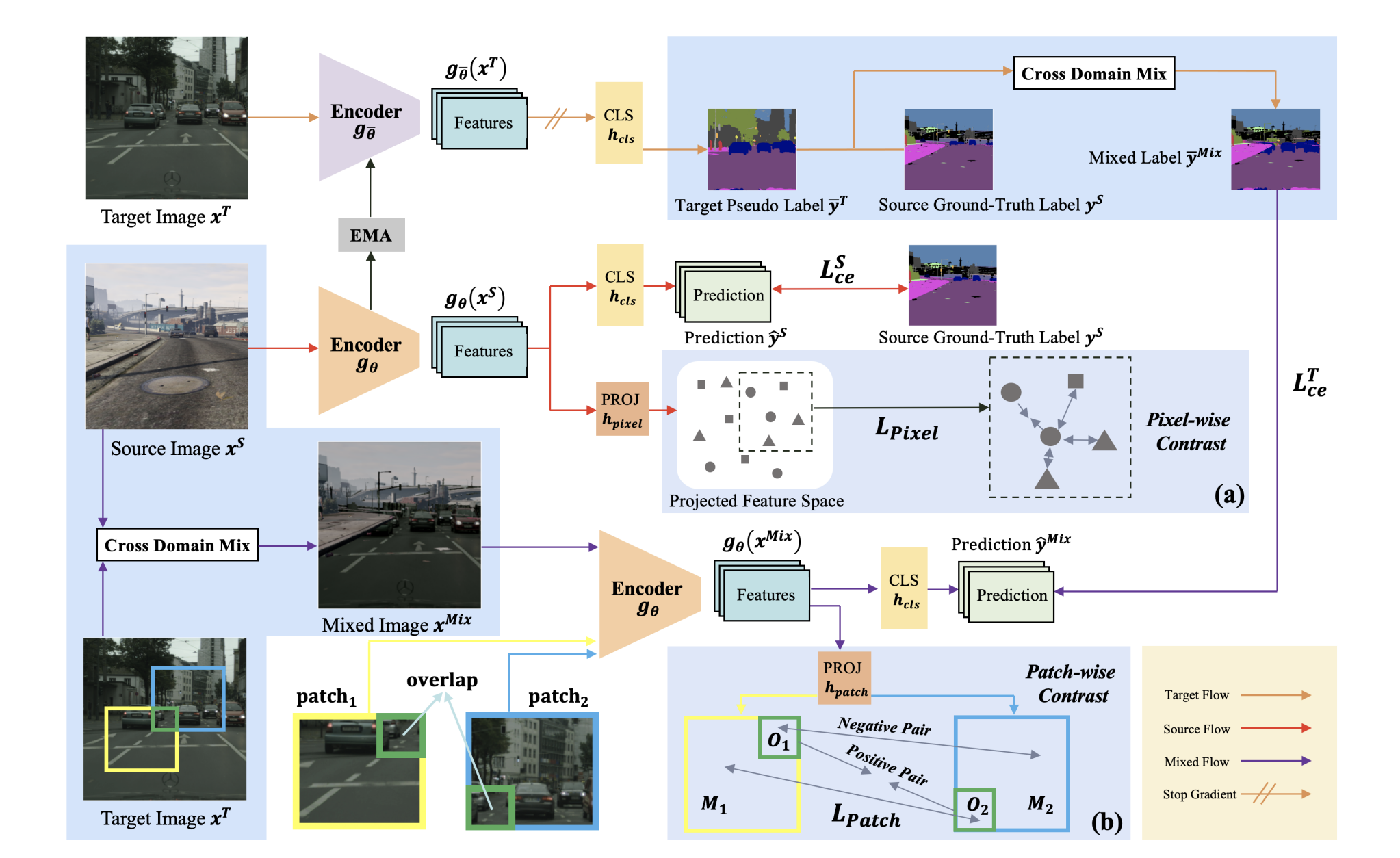

Unsupervised Domain Adaptation (UDA) aims to enhance the generalization of the learned model to other domains. The domain-invariant knowledge is transferred from the model trained on labeled source domain, e.g., video game, to unlabeled target domains, e.g., real-world scenarios, saving annotation expenses. Existing UDA methods for semantic segmentation usually focus on minimizing the inter-domain discrepancy of various levels, e.g., pixels, features, and predictions, for extracting domain-invariant knowledge. However, the primary intra-domain knowledge, such as context correlation inside an image, remains underexplored. In an attempt to fill this gap, we propose a unified pixel- and patch-wise self-supervised learning framework, called PiPa, for domain adaptive semantic segmentation that facilitates intra-image pixel-wise correlations and patch-wise semantic consistency against different contexts. The proposed framework exploits the inherent structures of intra-domain images, which: (1) explicitly encourages learning the discriminative pixel-wise features with intra-class compactness and inter-class separability, and (2) motivates the robust feature learning of the identical patch against different contexts or fluctuations.
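To make the pixel-wise objective more concrete, here is a minimal sketch of an InfoNCE-style contrastive loss over labeled pixel features. It assumes cosine similarity and a temperature `tau`, and it only illustrates the idea of intra-class compactness and inter-class separability; it is not the loss implemented in this repository. The function name `pixel_contrast_loss` and the toy features are hypothetical.

```python
import math


def _normalize(v):
    """Scale a feature vector to unit L2 norm."""
    norm = math.sqrt(sum(x * x for x in v)) or 1.0
    return [x / norm for x in v]


def pixel_contrast_loss(features, labels, tau=0.1):
    """InfoNCE-style loss over pixel features (illustrative sketch only).

    features: list of per-pixel feature vectors
    labels:   list of class ids, one per pixel
    Pixels sharing a class are positives; all other pixels are negatives.
    """
    feats = [_normalize(f) for f in features]
    n = len(feats)
    total, count = 0.0, 0
    for i in range(n):
        # Exponentiated cosine similarity of anchor i to every other pixel.
        sims = {
            j: math.exp(sum(a * b for a, b in zip(feats[i], feats[j])) / tau)
            for j in range(n) if j != i
        }
        denom = sum(sims.values())
        for j, s in sims.items():
            if labels[j] == labels[i]:
                # Each same-class pixel contributes one positive term.
                total += -math.log(s / denom)
                count += 1
    return total / count if count else 0.0


# Toy example: two classes, two pixels each (made-up features).
labels = [0, 0, 1, 1]
separated = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
mixed = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0], [0.0, 1.0]]
loss_separated = pixel_contrast_loss(separated, labels)
loss_mixed = pixel_contrast_loss(mixed, labels)
```

In this toy setting, features that cluster by class yield a lower loss than features that are mixed across classes, which is the behaviour the pixel-wise term is meant to reward.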

In this project, we used Python 3.8.5. We recommend setting up a new conda environment:

conda create --name pipa -y python=3.8.5

conda activate pipa

Then, the requirements can be installed with:

pip install tensorboard

pip install -r requirements.txt -f https://download.pytorch.org/whl/torch_stable.html

pip install mmcv-full==1.3.7 # requires the other packages to be installed first

Further, download the MiT weights from SegFormer using the following script.

sh tools/download_checkpoints.sh

Download [GTA5], [Synthia] and [Cityscapes] to run the basic code.

Cityscapes: download leftImg8bit_trainvaltest.zip and gt_trainvaltest.zip from here and extract them to data/cityscapes.

GTA: download all image and label packages from here and extract them to data/gta.

Synthia: download SYNTHIA-RAND-CITYSCAPES from here and extract it to data/synthia.

The data folder is structured as follows:

PiPa
├── ...
├── data
│   ├── cityscapes
│   │   ├── leftImg8bit
│   │   │   ├── train
│   │   │   ├── val
│   │   ├── gtFine
│   │   │   ├── train
│   │   │   ├── val
│   ├── gta
│   │   ├── images
│   │   ├── labels
│   ├── synthia
│   │   ├── RGB
│   │   ├── GT
│   │   │   ├── LABELS
├── ...
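Before running preprocessing, it can help to confirm that the extracted datasets match the layout above. The following is a small sketch, not part of the repository: the helper name `missing_dirs` is hypothetical, and the directory list is copied from the tree shown here.

```python
from pathlib import Path

# Sub-directories listed in the tree above, relative to the data root.
EXPECTED_DIRS = [
    "cityscapes/leftImg8bit/train",
    "cityscapes/leftImg8bit/val",
    "cityscapes/gtFine/train",
    "cityscapes/gtFine/val",
    "gta/images",
    "gta/labels",
    "synthia/RGB",
    "synthia/GT/LABELS",
]


def missing_dirs(data_root="data"):
    """Return the expected sub-directories that do not exist under data_root."""
    root = Path(data_root)
    return [d for d in EXPECTED_DIRS if not (root / d).is_dir()]


if __name__ == "__main__":
    missing = missing_dirs()
    if missing:
        print("Missing directories:")
        for d in missing:
            print(f"  data/{d}")
    else:
        print("Data layout looks complete.")
```

Run it from the repository root; an empty result means every directory in the tree was found.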

Data Preprocessing: Finally, please run the following scripts to convert the label IDs to the train IDs and to generate the class index for RCS:

python tools/convert_datasets/gta.py data/gta --nproc 8

python tools/convert_datasets/cityscapes.py data/cityscapes --nproc 8

python tools/convert_datasets/synthia.py data/synthia/ --nproc 8

We provide pretrained models below for PiPa based on HRDA.

| Model name | mIoU (%) | Checkpoint download |
|---|---|---|
| pipa_gta_to_cs.pth | 75.6 | Google Drive |
| pipa_syn_to_cs.pth | 68.2 | Google Drive |
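For readers unfamiliar with the metric, mIoU is the per-class intersection-over-union averaged over classes. The sketch below shows the standard definition computed from a confusion matrix; the function name `mean_iou` and the pixel counts are made up for illustration, and the repository's own evaluation tooling remains the reference for the reported numbers.

```python
def mean_iou(confusion):
    """Mean IoU from a square confusion matrix.

    Rows are ground-truth classes, columns are predicted classes.
    Classes that appear in neither ground truth nor prediction are skipped.
    """
    num_classes = len(confusion)
    ious = []
    for c in range(num_classes):
        tp = confusion[c][c]
        fn = sum(confusion[c]) - tp
        fp = sum(confusion[r][c] for r in range(num_classes)) - tp
        union = tp + fp + fn
        if union > 0:
            ious.append(tp / union)
    return sum(ious) / len(ious) if ious else 0.0


# Toy 3-class example with made-up pixel counts.
confusion = [
    [50, 5, 0],
    [10, 30, 0],
    [0, 0, 5],
]
miou_percent = 100.0 * mean_iou(confusion)
```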

To evaluate a checkpoint:

python -m tools.test path/to/config_file path/to/checkpoint_file --format-only --eval-option

To train a model:

python run_experiments.py --config configs/pipa/gtaHR2csHR_hrda.py

The logs and checkpoints are stored in work_dirs/.
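Since training output is written to work_dirs/, a small helper can locate the newest checkpoint to pass to the test command. This is only a sketch: the helper name `latest_checkpoint` is hypothetical, and it assumes checkpoints use the `.pth` extension, as the released files do.

```python
from pathlib import Path


def latest_checkpoint(work_dir="work_dirs"):
    """Return the most recently modified .pth file under work_dir, or None."""
    root = Path(work_dir)
    if not root.is_dir():
        return None
    checkpoints = list(root.rglob("*.pth"))
    if not checkpoints:
        return None
    # Pick the file with the newest modification time.
    return max(checkpoints, key=lambda p: p.stat().st_mtime)
```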

We thank the authors of the following open-source projects for making the code publicly available.

If you find this work helpful to your research, please consider citing the paper:

@article{chen2022pipa,
  title={PiPa: Pixel-and Patch-wise Self-supervised Learning for Domain Adaptative Semantic Segmentation},
  author={Chen, Mu and Zheng, Zhedong and Yang, Yi and Chua, Tat-Seng},
  journal={arXiv preprint arXiv:2211.07609},
  year={2022}
}