In this project, I have used what I've learned about deep neural networks and convolutional neural networks to classify traffic signs. I trained and validated a model that classifies traffic sign images from the German Traffic Sign Dataset. After training, I tested the model on images of German traffic signs that I found on the web.

To meet the project specifications, the project consists of the following three files:

- the IPython notebook with the code

- the code exported as an HTML file

- a writeup report as either a markdown or PDF file

All files are written to meet the rubric points.

The goals / steps of this project are the following:

- Load the data set

- Explore, summarize and visualize the data set

- Design, train and test a model architecture

- Use the model to make predictions on new images

- Analyze the softmax probabilities of the new images

- Visualize the output of a convolutional neural network layer

- Summarize the results with a written report

The project uses the following libraries:

- Pickle: loading the pre-pickled dataset

- NumPy: converting RGB images to grayscale

- Matplotlib: visualization

- Sklearn: shuffling the input data

- TensorFlow: creating and training the neural network

The German Traffic Sign Dataset is already pickled for us. It comes pre-divided into three sets:

- train.p : Training dataset consisting of 34799 samples

- valid.p : Validation dataset consisting of 4410 samples

- test.p : Testing dataset consisting of 12630 samples

- I loaded each dataset into its own variables.
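A minimal sketch of the loading step follows. It assumes each pickle holds a dict with `'features'` and `'labels'` keys; that layout is an assumption here, and `load_split` is a hypothetical helper name rather than the notebook's code.

```python
import pickle


def load_split(path):
    """Load one pickled split; assumes a dict with 'features' and 'labels' keys."""
    with open(path, "rb") as f:
        data = pickle.load(f)
    return data["features"], data["labels"]


# Hypothetical usage, assuming the three files sit in the working directory:
# X_train, y_train = load_split("train.p")
# X_valid, y_valid = load_split("valid.p")
# X_test, y_test = load_split("test.p")
```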

- I randomly chose an image and its label to visualize. This showed what the traffic sign images actually look like.

- All the images in the training, validation, and test sets are of size `32x32x3`.
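The exploration steps above (counting samples, checking the image shape and number of classes, and picking a random image/label pair to display) could be sketched in NumPy like this. The helper names `summarize` and `random_sample` are hypothetical, and the arrays are assumed to be shaped `(N, 32, 32, 3)` with integer labels.

```python
import numpy as np


def summarize(X_train, y_train, X_valid, X_test):
    """Basic dataset statistics for the exploration step."""
    return {
        "n_train": len(X_train),
        "n_valid": len(X_valid),
        "n_test": len(X_test),
        "image_shape": X_train.shape[1:],
        "n_classes": len(np.unique(y_train)),  # distinct labels seen in training
    }


def random_sample(X, y, rng=None):
    """Pick one random (image, label, index) triple to display."""
    rng = np.random.default_rng() if rng is None else rng
    index = int(rng.integers(len(X)))
    return X[index], y[index], index
```

The returned image can then be passed to Matplotlib's `plt.imshow()` with its label as the title.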

- The images in `train.p` are in RGB format, so I converted them to grayscale using a method I found on the web. This method is explained here.

- After converting the images to grayscale, I normalized them with the implementation below:

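```python
def normalize(gray):
    """Scale grayscale pixel values from [min_data, max_data] into [a, b]."""
    a = .1
    b = .9
    min_data = 0    # Min value in grayscale is 0
    max_data = 255  # Max value in grayscale is 255
    norm = a + ((gray - min_data) * (b - a) / (max_data - min_data))
    return norm
```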
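The grayscale conversion itself is not shown in the write-up. A common choice, and only an assumption about what the linked method does, is a weighted sum of the R, G and B channels using the ITU-R BT.601 luma weights (0.299, 0.587, 0.114). The sketch below chains that with the same [0.1, 0.9] scaling; `to_grayscale` and `preprocess` are hypothetical names.

```python
import numpy as np

# ITU-R BT.601 luma weights (an assumption, not necessarily the linked method).
LUMA_WEIGHTS = np.array([0.299, 0.587, 0.114])


def to_grayscale(rgb):
    """(N, H, W, 3) RGB -> (N, H, W, 1) grayscale via a weighted channel sum."""
    gray = np.dot(rgb[..., :3].astype(np.float64), LUMA_WEIGHTS)
    return gray[..., np.newaxis]  # keep an explicit single channel for the network


def preprocess(rgb, a=0.1, b=0.9):
    """Grayscale, then min-max scale [0, 255] into [a, b]."""
    gray = to_grayscale(rgb)
    return a + gray * (b - a) / 255.0
```

Keeping the trailing channel axis gives `(N, 32, 32, 1)` arrays, the shape a `Conv2D` input expects for single-channel images.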

- This preprocessing also reduced the total size of the dataset. In the RGB color space, each image stores data for 3 channels, whereas a grayscale image stores data for only one channel, so the dataset becomes smaller.

- Once all the training set images are converted to grayscale, we are ready to design a neural network for image classification.
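The size reduction is easy to quantify by counting stored values per image and for the 34799-sample training set. This counts values, not bytes; the byte size also depends on the array's dtype.

```python
n_train = 34799            # training samples in train.p
rgb_values = 32 * 32 * 3   # values stored per RGB image
gray_values = 32 * 32 * 1  # values stored per grayscale image

print(rgb_values, gray_values)                      # 3072 1024
print(n_train * rgb_values, n_train * gray_values)  # 106902528 35634176
```

So the grayscale training set holds one third as many values as the RGB one.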

The network is defined in the `model()` function.

The model consists of:

- 3 `Conv2D` layers, each followed by a max-pooling layer

- 4 fully connected layers

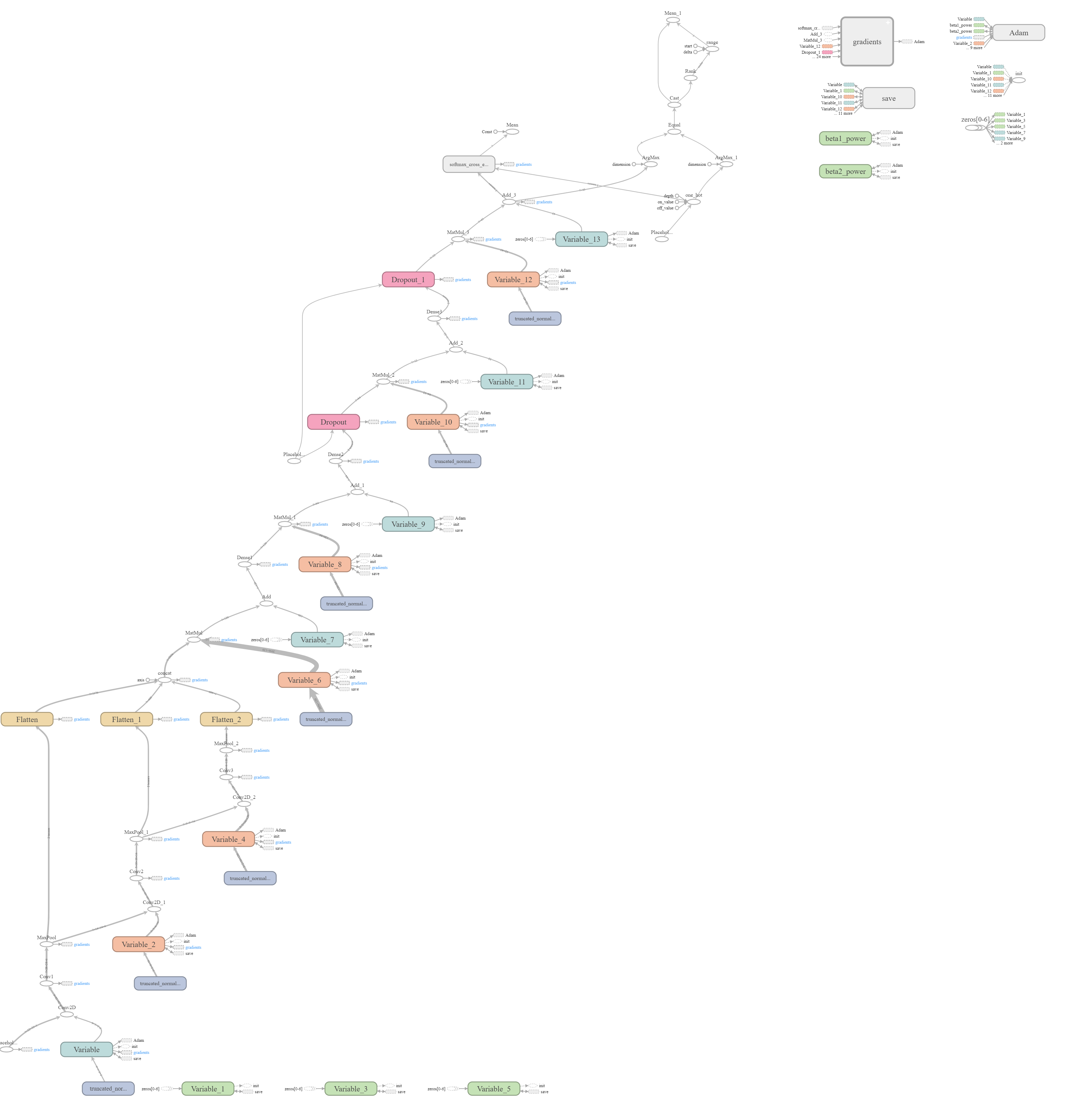

The figure above shows the graph of the network, generated using TensorBoard.
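The write-up gives the layer counts but not the filter sizes, depths, or fully connected widths, so the shape trace below fills them in with hypothetical values: 3x3 kernels with stride 1 and `VALID` padding, depths 16/32/64, 2x2 max pooling with stride 2, and fully connected widths 512/256/128/43 (43 being the number of classes in the German Traffic Sign Dataset). It only shows how a 32x32x1 input shrinks through such a stack; it is not the author's exact model.

```python
def conv_valid(size, kernel):
    """Spatial size after a stride-1 'VALID' convolution."""
    return size - kernel + 1


def pool2x2(size):
    """Spatial size after 2x2 max pooling with stride 2 ('VALID')."""
    return size // 2


def trace_shapes(input_size=32, kernel=3, depths=(16, 32, 64),
                 fc_sizes=(512, 256, 128, 43)):
    """List layer output shapes for 3 conv+pool stages and 4 fully connected layers."""
    shapes = []
    size = input_size
    for depth in depths:
        size = conv_valid(size, kernel)
        shapes.append(("conv", size, size, depth))
        size = pool2x2(size)
        shapes.append(("pool", size, size, depth))
        if size < 1:
            raise ValueError("input too small for this conv/pool stack")
    flat = size * size * depths[-1]
    shapes.append(("flatten", flat))
    for units in fc_sizes:
        shapes.append(("fc", units))
    return shapes


for layer in trace_shapes():
    print(layer)
```

The choice of 3x3 kernels is deliberate: with 5x5 kernels and `VALID` padding throughout, three conv/pool stages would shrink a 32x32 input to nothing (32, 28, 14, 10, 5, 1, 0), which `trace_shapes(kernel=5)` reports as an error.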

- For optimization, we use TensorFlow's built-in `AdamOptimizer` with a hardcoded `learning_rate = 0.001`, which is also Adam's default.

- I wrote an `evaluate()` function to calculate the accuracy after every epoch.

- This function returns the accuracy over the `X_data` and `y_data` passed to it.
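A sketch of the batched accuracy computation described above, written in NumPy so it runs without a TensorFlow session. `predict_logits` is a hypothetical stand-in for running the model's logits in a session (for example `sess.run(logits, feed_dict=...)`); each batch's accuracy is weighted by its size so a short final batch does not skew the result.

```python
import numpy as np


def evaluate(predict_logits, X_data, y_data, batch_size=128):
    """Accuracy over X_data/y_data, computed batch by batch."""
    num_examples = len(X_data)
    total_accuracy = 0.0
    for offset in range(0, num_examples, batch_size):
        batch_x = X_data[offset:offset + batch_size]
        batch_y = y_data[offset:offset + batch_size]
        logits = predict_logits(batch_x)                 # shape (batch, n_classes)
        correct = np.argmax(logits, axis=1) == batch_y
        total_accuracy += correct.mean() * len(batch_x)  # weight by batch size
    return total_accuracy / num_examples
```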

- Once preprocessing and the other helper functions are set up, we're ready to run a session for training.

- For the session, we set two hyperparameters: `batch_size = 128` and `num_epochs = 50`.

- For every epoch, we compute the validation accuracy and store it in `validation_accuracy_list`, which we later use to plot the validation accuracy graph and see how well the model is doing. For example, this is the output for epoch 31:

```
Training: 31/50
EPOCH 31 ...
Validation Accuracy = 0.957
```

- Once all the epochs are complete, we plot the validation accuracy graph.

- The validation accuracy increases with the number of epochs: it rises rapidly at first and then stays almost constant.

- The model has now run for the predefined 50 epochs. It has learned from the training set, so it's time to save its weights to a checkpoint that we can load later for inference.

- During training, whenever the validation accuracy goes above 0.95, we save the model to `trafficSignClassifier`.

- For this, we used TensorFlow's `Saver()` class.
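Putting the training steps together, a framework-agnostic sketch of the loop might look like the following. `train_step`, `evaluate_fn`, and `save_fn` are hypothetical callbacks standing in for the TensorFlow optimizer run, the `evaluate()` call on the validation set, and the `Saver()` call; shuffling uses NumPy here instead of scikit-learn's `shuffle` so the sketch has no extra dependency.

```python
import numpy as np


def train(train_step, evaluate_fn, save_fn, X_train, y_train,
          num_epochs=50, batch_size=128, save_threshold=0.95, seed=0):
    """Shuffle, train in mini-batches, track validation accuracy, checkpoint when good."""
    rng = np.random.default_rng(seed)
    validation_accuracy_list = []
    for epoch in range(num_epochs):
        order = rng.permutation(len(X_train))  # reshuffle every epoch
        X_shuf, y_shuf = X_train[order], y_train[order]
        for offset in range(0, len(X_shuf), batch_size):
            end = offset + batch_size
            train_step(X_shuf[offset:end], y_shuf[offset:end])
        val_acc = evaluate_fn()
        validation_accuracy_list.append(val_acc)
        print(f"EPOCH {epoch + 1} ...")
        print(f"Validation Accuracy = {val_acc:.3f}")
        if val_acc > save_threshold:
            save_fn()  # hypothetical hook, e.g. a Saver().save(...) call
    return validation_accuracy_list
```

The returned list is what would be plotted to produce the validation accuracy graph.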

- We had already loaded the test set from the German Traffic Sign Dataset.

- Now we load the saved model and run inference on the test set.

- For this, we use the `evaluate()` function to compute the accuracy on the test set. For our model, it comes out to be 0.939:

```
INFO:tensorflow:Restoring parameters from ./trafficSignClassifier
Test accuracy = 0.939
```

This step checks the model's accuracy on images downloaded from the internet.

I downloaded some images from the internet. These images have different sizes, so before feeding them to the network I had to resize them to 32x32 and convert them to grayscale.
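The write-up doesn't say which library was used to resize the downloaded images. As a sketch under that uncertainty, a nearest-neighbour resize in plain NumPy followed by grayscale conversion and the [0.1, 0.9] scaling would look like this; `resize_nearest`, `prepare_web_image`, and the BT.601 grayscale weights are hypothetical choices rather than the notebook's code.

```python
import numpy as np


def resize_nearest(img, out_h=32, out_w=32):
    """Nearest-neighbour resize of an (H, W, C) array to (out_h, out_w, C)."""
    in_h, in_w = img.shape[:2]
    rows = np.arange(out_h) * in_h // out_h  # source row for each output row
    cols = np.arange(out_w) * in_w // out_w  # source column for each output column
    return img[rows[:, None], cols[None, :]]


def prepare_web_image(img):
    """Resize to 32x32, drop any alpha channel, grayscale, scale into [0.1, 0.9]."""
    resized = resize_nearest(img)
    gray = np.dot(resized[..., :3].astype(np.float64), [0.299, 0.587, 0.114])
    return (0.1 + gray * 0.8 / 255.0)[..., np.newaxis]  # shape (32, 32, 1)
```

A batch for the network could then be built with `np.stack([prepare_web_image(im) for im in images])`.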

These images are:

- Keep Right

- No Entry

- Priority Road

- Speed Limit 50km/h

- Stop

- The model predicted all 5 images correctly, so the accuracy on these images is 100%.

- In the following steps, we analyze the model's predictions.

- Here, we use TensorFlow's `top_k()` function to find the top k probabilities for each image.

- As required, we find the top 5 probabilities and print them along with the predicted label indices:

```
Label: keep_right
Output: [[1. 0. 0. 0. 0.]]
Predicted Label Index: [[38. 0. 1. 2. 3.]]

Label: no_entry
Output: [[1. 0. 0. 0. 0.]]
Predicted Label Index: [[17. 0. 1. 2. 3.]]

Label: speed_limit_50
Output: [[1. 0. 0. 0. 0.]]
Predicted Label Index: [[2. 0. 1. 3. 4.]]

Label: priority_road
Output: [[1. 0. 0. 0. 0.]]
Predicted Label Index: [[12. 0. 1. 2. 3.]]

Label: stop
Output: [[1. 0. 0. 0. 0.]]
Predicted Label Index: [[14. 0. 1. 2. 3.]]
```
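In the top-5 outputs, each image's top probability prints as 1. and the other four as 0., and those four slots hold the lowest unused indices (0, 1, 2, 3, or 0, 1, 3, 4 when class 2 is the prediction). That is consistent with the tie-breaking rule documented for `tf.nn.top_k`: when values are equal, the lower index comes first. The NumPy sketch below reproduces that behaviour with a stable sort; `top_k` here is a hypothetical helper, not the TensorFlow function.

```python
import numpy as np


def top_k(probs, k=5):
    """NumPy stand-in for top-k on a (batch, n_classes) probability array."""
    # A stable sort on the negated values keeps tied entries in ascending index order.
    order = np.argsort(-probs, axis=1, kind="stable")[:, :k]
    values = np.take_along_axis(probs, order, axis=1)
    return values, order


probs = np.zeros((1, 43))
probs[0, 38] = 1.0  # a fully confident "keep right" prediction
values, indices = top_k(probs)
print(values)   # [[1. 0. 0. 0. 0.]]
print(indices)  # [[38  0  1  2  3]]
```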

Visualizing the network's feature maps is an optional part of this project.

- For this visualization, we were given a function named `outputFeatureMap()`, which takes several arguments, including the layer activation to visualize and the input image.

- We loaded the saved model in order to run inference up to one particular layer.

- From the session's graph, we used the `get_tensor_by_name()` function, which takes the name we gave a layer while writing the network model and returns the corresponding tensor.

- We passed the image and the tensor from the previous step to `outputFeatureMap()`, which plotted the layer's activations.

- Here, we visualized the second `Conv2D` layer of our model.
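`outputFeatureMap()` handles the plotting itself. For reference, the core idea of laying out one tile per channel can be sketched in NumPy as below; `tile_feature_maps` is a hypothetical helper, and the activation is assumed to come from `sess.run()` on the tensor returned by `get_tensor_by_name()`, with shape `(1, H, W, C)`.

```python
import numpy as np


def tile_feature_maps(activation, n_cols=8):
    """Arrange a (1, H, W, C) activation into one 2-D grid, one tile per channel."""
    maps = activation[0]          # drop the batch axis -> (H, W, C)
    h, w, c = maps.shape
    n_rows = -(-c // n_cols)      # ceiling division
    grid = np.zeros((n_rows * h, n_cols * w), dtype=maps.dtype)
    for ch in range(c):
        r, col = divmod(ch, n_cols)
        grid[r * h:(r + 1) * h, col * w:(col + 1) * w] = maps[:, :, ch]
    return grid
```

The resulting grid can be shown in a single `plt.imshow(grid, cmap="gray")` call instead of one subplot per channel.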