Kubernetes Fury OPA provides policy enforcement at runtime for the Kubernetes Fury Distribution (KFD).

If you are new to KFD, refer to the official documentation to get started.

Tip

Starting from Kubernetes v1.25, Pod Security Standards (PSS) are promoted to stable. For most use cases, the policies defined in the Pod Security Standards are a great starting point. Consider applying them before switching to one of the tools provided by this module.

For more advanced use-cases, where custom policies that are not included in the PSS must be enforced, this module is the right choice.
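As a rough illustration of the Pod Security Standards route mentioned in the tip, the built-in Pod Security Admission controller is driven by namespace labels. The sketch below assumes a hypothetical namespace named `my-app`; the choice of `baseline` for enforcement and `restricted` for warnings is only an example.

```yaml
# Hypothetical namespace, used only for illustration.
apiVersion: v1
kind: Namespace
metadata:
  name: my-app
  labels:
    # Reject Pods that violate the "baseline" Pod Security Standard.
    pod-security.kubernetes.io/enforce: baseline
    # Warn (without rejecting) on violations of the stricter "restricted" level.
    pod-security.kubernetes.io/warn: restricted
```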

The Kubernetes API server provides a mechanism to review every request it receives (object creation, modification, or deletion). To use this mechanism, you register a Validating Admission Webhook with the API server. The webhook validates each request and tells the API server whether the request is allowed, based on some logic (a policy).
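To make the mechanism more concrete, here is a minimal sketch of how a Validating Admission Webhook is registered with the API server. This is not the configuration shipped by this module: the webhook name, service name, namespace, and path are hypothetical placeholders.

```yaml
apiVersion: admissionregistration.k8s.io/v1
kind: ValidatingWebhookConfiguration
metadata:
  name: example-policy-webhook          # hypothetical name
webhooks:
  - name: validate.example.com          # hypothetical; must be a fully qualified name
    admissionReviewVersions: ["v1"]
    sideEffects: None
    # Ignore = "fail open": requests are allowed if the webhook cannot be reached.
    # Fail   = requests are rejected if the webhook cannot be reached.
    failurePolicy: Ignore
    timeoutSeconds: 3
    rules:
      # Which requests are sent to the webhook for review.
      - apiGroups: ["*"]
        apiVersions: ["*"]
        operations: ["CREATE", "UPDATE", "DELETE"]
        resources: ["*"]
    clientConfig:
      service:
        name: example-policy-service    # hypothetical Service in front of the policy engine
        namespace: example-system       # hypothetical namespace
        path: /validate                 # hypothetical path
      # caBundle omitted: normally set to the CA that signed the webhook's certificate.
```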

The Kubernetes Fury OPA module is based on OPA Gatekeeper and Kyverno, two popular open-source Kubernetes-native policy engines that run as Validating Admission Webhooks. The module allows writing custom constraints (policies) and enforcing them at runtime.

SIGHUP provides a set of base constraints that can be used both as a starting point for applying constraints to your current workloads and as a reference for implementing new rules that match your requirements.
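To give an idea of what a custom Gatekeeper constraint looks like, the sketch below follows the widely used "required labels" example from the upstream Gatekeeper documentation. It is not one of the base constraints shipped with this module; the constraint name `ns-must-have-owner` and the `owner` label are hypothetical.

```yaml
# ConstraintTemplate: defines a new constraint kind and the Rego logic behind it.
apiVersion: templates.gatekeeper.sh/v1
kind: ConstraintTemplate
metadata:
  name: k8srequiredlabels
spec:
  crd:
    spec:
      names:
        kind: K8sRequiredLabels
      validation:
        openAPIV3Schema:
          type: object
          properties:
            labels:
              type: array
              items:
                type: string
  targets:
    - target: admission.k8s.gatekeeper.sh
      rego: |
        package k8srequiredlabels

        # A violation is reported when at least one required label is missing.
        violation[{"msg": msg, "details": {"missing_labels": missing}}] {
          provided := {label | input.review.object.metadata.labels[label]}
          required := {label | label := input.parameters.labels[_]}
          missing := required - provided
          count(missing) > 0
          msg := sprintf("you must provide labels: %v", [missing])
        }
---
# Constraint: an instance of the template, scoped to Namespaces.
apiVersion: constraints.gatekeeper.sh/v1beta1
kind: K8sRequiredLabels
metadata:
  name: ns-must-have-owner              # hypothetical name
spec:
  match:
    kinds:
      - apiGroups: [""]
        kinds: ["Namespace"]
  parameters:
    labels: ["owner"]                   # hypothetical required label
```

The template must be applied and established before the constraint, because the constraint's kind only exists once Gatekeeper has created the corresponding CRD.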

Fury Kubernetes OPA provides the following packages:

| Package | Version | Description |
|---|---|---|
| Gatekeeper Core | v3.17.1 | Gatekeeper deployment, ready to enforce rules. |
| Gatekeeper Rules | N.A. | A set of custom rules to get started with policy enforcement. |
| Gatekeeper Monitoring | N.A. | Metrics, alerts and dashboard for monitoring Gatekeeper. |
| Gatekeeper Policy Manager | v1.0.13 | Gatekeeper Policy Manager, a simple to use web-ui for Gatekeeper. |
| Kyverno | v1.12.6 | Kyverno is a policy engine designed for Kubernetes. It can validate, mutate, and generate configurations using admission controls and background scans. |

Click on each package name to see its full documentation.

| Kubernetes Version | Compatibility | Notes |
|---|---|---|
| 1.31.x | ✅ | No known issues |
| 1.30.x | ✅ | No known issues |
| 1.29.x | ✅ | No known issues |
| 1.28.x | ✅ | No known issues |

Check the compatibility matrix for additional information on previous releases of the module.

Note

The following instructions are for using the module with furyctl legacy, or downloading it and using it via kustomize.

In the latest versions of the Kubernetes Fury Distribution, the OPA module is natively integrated and can be enabled and configured through the `.spec.distribution.modules.policy` key in the configuration file.
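As a rough sketch of where that key sits in the configuration file, the fragment below shows only the path named above. The `type` field and its values are assumptions, not taken from this document; check the configuration schema of your KFD version before relying on them.

```yaml
# Fragment of a KFD configuration file; all other keys are omitted.
spec:
  distribution:
    modules:
      policy:
        # Assumption: selects the policy engine, for example "gatekeeper" or "kyverno".
        type: gatekeeper
```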

| Tool | Version | Description |
|---|---|---|
| furyctl | >=0.27.0 | The recommended tool to download and manage KFD modules and their packages. To learn more about furyctl read the official documentation. |
| kustomize | >=3.10.0 | Packages are customized using kustomize. To learn how to create your customization layer with kustomize, please refer to the repository. |
| KFD Monitoring Module | >v1.10.0 | Expose metrics to Prometheus (optional) and use Grafana Dashboards. |

You can comment out the service monitor in the kustomization.yaml file if you don't want to install the monitoring module.

- List the packages you want to deploy and their version in a `Furyfile.yml`:

      bases:
        - name: opa/gatekeeper
          version: "1.13.0"

  See the `furyctl` documentation for additional details about the `Furyfile.yml` format.

- Execute `furyctl legacy vendor -H` to download the packages.

- Inspect the downloaded packages under `./vendor/katalog/opa/gatekeeper`.

- Define a `kustomization.yaml` that includes the `./vendor/katalog/opa/gatekeeper` directory as a resource:

      resources:
        - ./vendor/katalog/opa/gatekeeper

- Apply the necessary patches. You can find a list of common customizations here.

- To deploy the packages to your cluster, execute:

      kustomize build . | kubectl apply -f -

Warning

Gatekeeper is deployed by default as a Fail open (also called Ignore mode) Admission Webhook. Should you decide to change it to Fail mode, carefully read the project's documentation on the topic first.

Tip

If you decide to deploy Gatekeeper to a different namespace than the default gatekeeper-system, you'll need to patch the file vwh.yml to point to the right namespace for the webhook service due to limitations in the kustomize tool.

Gatekeeper supports 3 levels of granularity to exempt a namespace from policy enforcement.

- Global exemption at Kubernetes API webhook level: the requests to the API server for the namespace won't be sent to Gatekeeper's webhook.

- Global exemption at Gatekeeper configuration level: requests to the API server for the namespace will be sent to Gatekeeper's webhook, but Gatekeeper will not enforce constraints for the namespace. It is the equivalent of exempting the namespace in all the constraints. Useful when you don't want any of the constraints enforced in a namespace.

- Exemption at constraint level: you can exempt namespaces in the definition of each constraint. Useful when you may want only a subset of all the constraints to be enforced in a namespace.

Caution

Exempting critical namespaces like kube-system or logging won't guarantee that the cluster will function properly when Gatekeeper webhook is in Fail mode.

For more details on how to implement the exemption, please refer to the official Gatekeeper documentation site.
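The three exemption levels described above can be sketched as follows, based on the upstream Gatekeeper documentation. The namespace `my-namespace` is hypothetical, and the `kinds` matcher of the constraint is an assumption; keep the one from your actual constraint definition.

```yaml
# 1. Webhook level: requests for namespaces carrying this label are not sent to Gatekeeper.
#    Gatekeeper only permits the label on namespaces allowed through its
#    --exempt-namespace flag.
apiVersion: v1
kind: Namespace
metadata:
  name: my-namespace
  labels:
    admission.gatekeeper.sh/ignore: "true"
---
# 2. Gatekeeper configuration level: requests reach the webhook, but no constraint
#    is enforced for the excluded namespaces.
apiVersion: config.gatekeeper.sh/v1alpha1
kind: Config
metadata:
  name: config
  namespace: gatekeeper-system
spec:
  match:
    - excludedNamespaces: ["my-namespace"]
      processes: ["*"]
---
# 3. Constraint level: only this constraint skips the namespace.
apiVersion: constraints.gatekeeper.sh/v1beta1
kind: K8sUniqueIngressHost
metadata:
  name: unique-ingress-host
spec:
  match:
    kinds:
      - apiGroups: ["extensions", "networking.k8s.io"]   # assumed matcher
        kinds: ["Ingress"]
    excludedNamespaces: ["my-namespace"]
```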

Disable one of the default constraints by creating the following kustomize patch:

    patchesJson6902:
      - target:
          group: constraints.gatekeeper.sh
          version: v1beta1
          kind: K8sUniqueIngressHost # replace with the kind of the constraint you want to disable
          name: unique-ingress-host # replace with the name of the constraint you want to disable
        path: patches/allow.yml

Add this to the `patches/allow.yml` file:

    - op: "replace"
      path: "/spec/enforcementAction"
      value: "allow"

If OPA Gatekeeper is causing issues and blocking normal operations in your cluster, you can disable it by deleting its Validating Admission Webhook definition:

    kubectl delete ValidatingWebhookConfiguration gatekeeper-validating-webhook-configuration

Gatekeeper is configured by default in this module to expose some Prometheus metrics about its health, performance, and operational information.

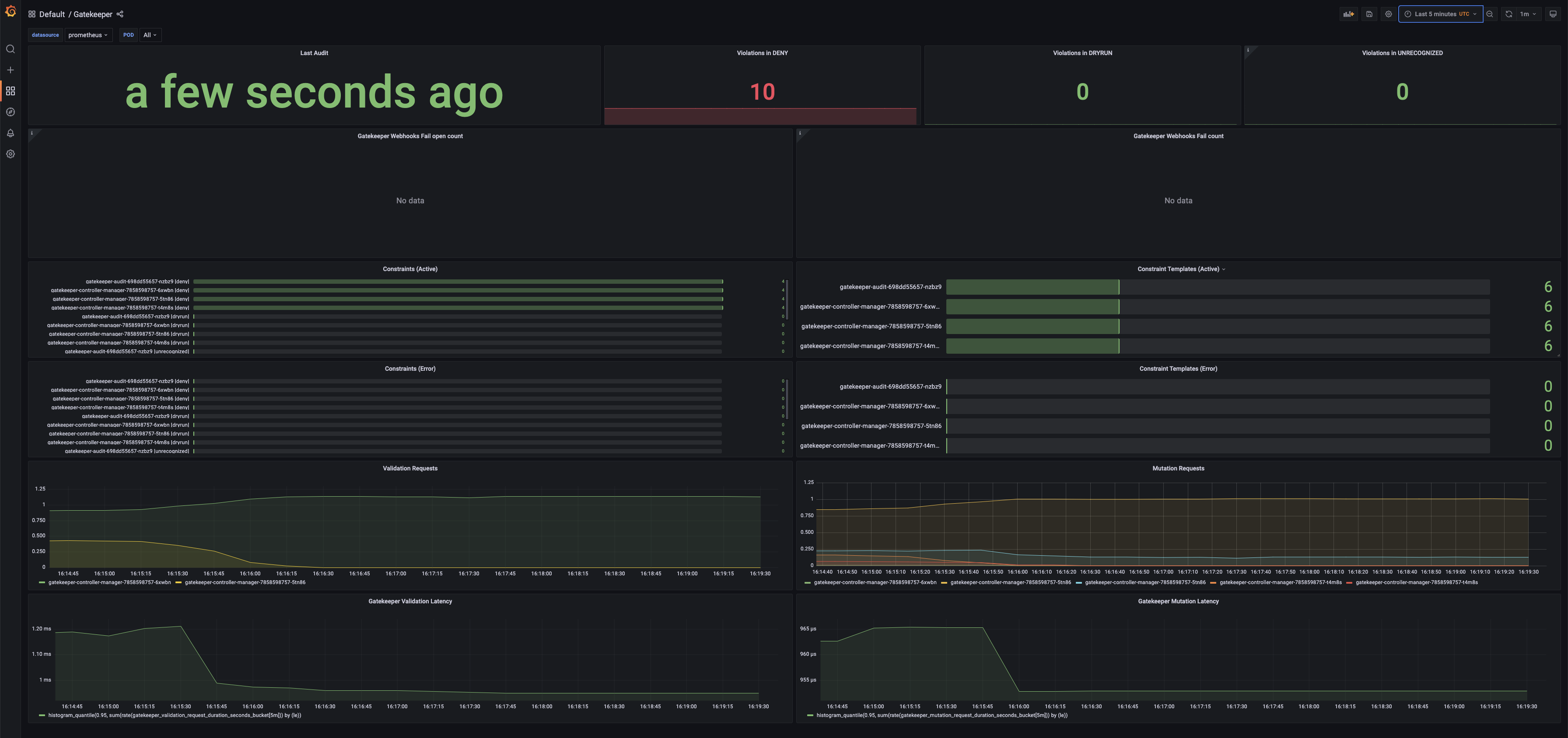

You can monitor and review these metrics by checking out the provided Grafana dashboard. (This requires the KFD Monitoring Module to be installed).

Go to your cluster's Grafana and search for the "Gatekeeper" dashboard:

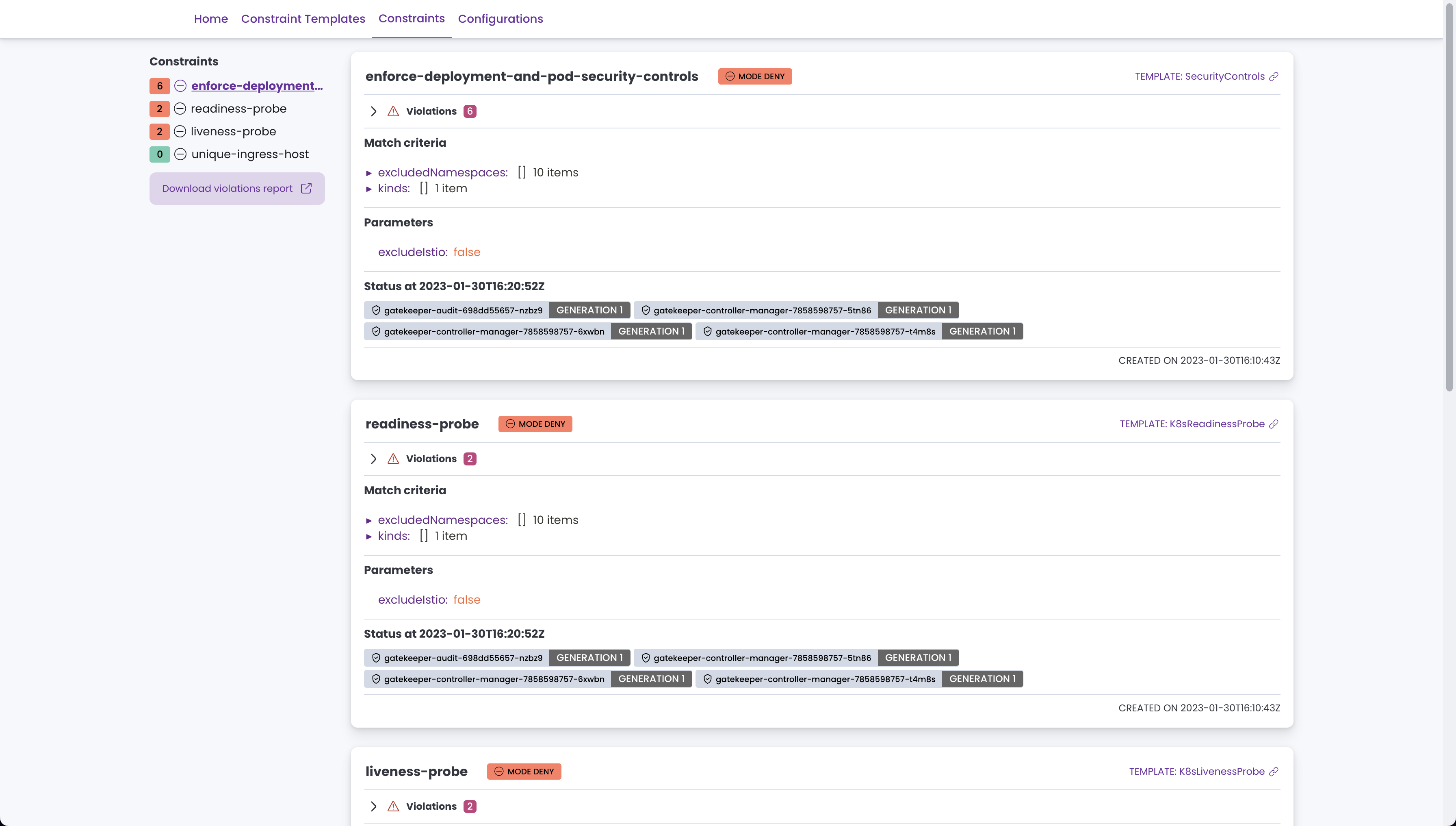

You can also use Gatekeeper Policy Manager to view the Constraints Templates, Constraints, and Violations in a simple-to-use UI.

The module also provides two alerts by default. They are triggered when the number of errors seen by the Kubernetes API server while trying to contact Gatekeeper's webhook is too high, covering both Fail open (Ignore) mode and Fail mode:

| Alert | Description |
|---|---|
| GatekeeperWebhookFailOpenHigh | Gatekeeper is not enforcing {{$labels.type}} requests to the API server. |
| GatekeeperWebhookCallError | Kubernetes API server is rejecting all requests because Gatekeeper's webhook '{{ $labels.name }}' is failing for '{{ $labels.operation }}'. |

Note that the alert for the Gatekeeper webhook in Ignore mode (the default) depends on an API server metric that was added in Kubernetes version 1.24. Earlier versions of Kubernetes won't trigger the alert when the webhook is failing in Ignore mode.
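For readers who want to see how alerts of this kind can be expressed, below is an illustrative `PrometheusRule` sketch built on the API server's admission webhook metrics. It is not the rule definition shipped with the module: the resource name, alert names, thresholds, and durations are hypothetical, and it assumes the Prometheus Operator CRDs are installed.

```yaml
apiVersion: monitoring.coreos.com/v1
kind: PrometheusRule
metadata:
  name: example-gatekeeper-webhook-rules   # hypothetical name
  namespace: gatekeeper-system
spec:
  groups:
    - name: example-gatekeeper-webhook
      rules:
        # Ignore mode: the API server let requests through because the webhook failed.
        - alert: ExampleGatekeeperFailOpen
          expr: |
            sum by (type) (
              rate(apiserver_admission_webhook_fail_open_count{name="validation.gatekeeper.sh"}[5m])
            ) > 0
          for: 10m                          # hypothetical duration
          labels:
            severity: warning
          annotations:
            description: "Gatekeeper is not enforcing {{ $labels.type }} requests to the API server."
        # Fail mode: the API server rejected requests because calling the webhook failed.
        - alert: ExampleGatekeeperCallError
          expr: |
            sum by (name, operation) (
              rate(apiserver_admission_webhook_rejection_count{name="validation.gatekeeper.sh", error_type="calling_webhook_error"}[5m])
            ) > 0
          for: 5m                           # hypothetical duration
          labels:
            severity: critical
          annotations:
            description: "Webhook '{{ $labels.name }}' is failing for '{{ $labels.operation }}'."
```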

- List the packages you want to deploy and their version in a `Furyfile.yml`:

      bases:
        - name: opa/kyverno
          version: "1.13.0"

  See the `furyctl` documentation for additional details about the `Furyfile.yml` format.

- Execute `furyctl legacy vendor -H` to download the packages.

- Inspect the downloaded packages under `./vendor/katalog/opa/kyverno`.

- Define a `kustomization.yaml` that includes the `./vendor/katalog/opa/kyverno` directory as a resource:

      resources:
        - ./vendor/katalog/opa/kyverno

- To deploy the packages to your cluster, execute:

      kustomize build . | kubectl apply --server-side -f -

Before contributing, please read the Contributing Guidelines.

In case you experience any problems with the module, please open a new issue.

This module is open-source and released under the following LICENSE