Code for "Optimizing Hand Region Detection in MediaPipe Holistic Full-Body Pose Estimation to Improve Accuracy and Avoid Downstream Errors".

Fixing google-ai-edge/mediapipe#5373

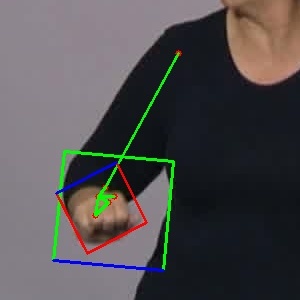

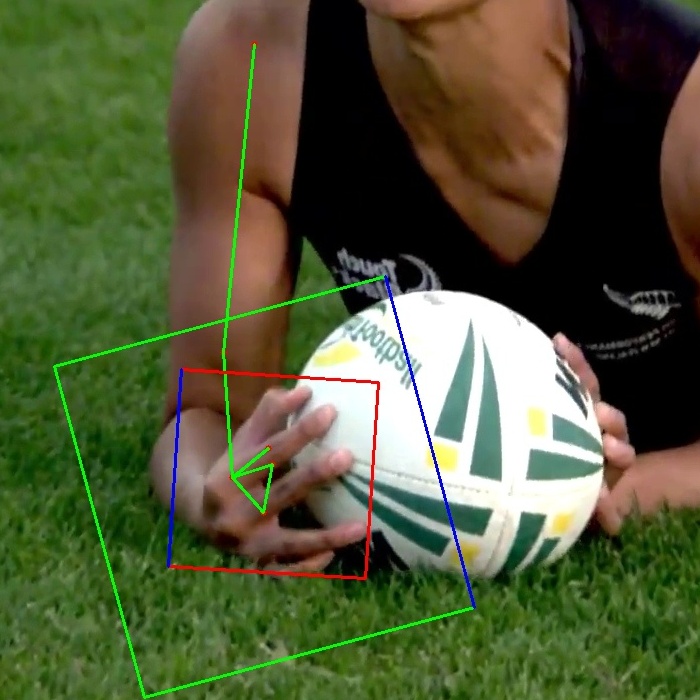

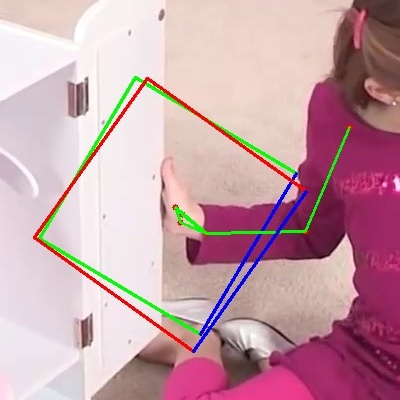

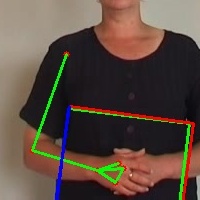

MediaPipe's hand region of interest (ROI) estimation can be quite inaccurate. Here are a few examples:

The Worst and Best examples are the extreme cases found in the data; the others were picked manually. We only consider the right hand: when an annotation is for a left hand, we flip the image horizontally. For each image in the following table, we draw the hand keypoints as green lines, along with two bounding boxes: the gold ROI in green and the predicted ROI in red. To show the orientation of each bounding box, we draw its bottom edge in blue.
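The flipping and orientation conventions described above can be sketched as follows. This is a minimal illustration, not the repository's code: the function names, the `(cx, cy, w, h, angle)` box parameterization, and the coordinate convention (continuous pixel coordinates, image spanning `[0, width]`, y axis pointing down) are all assumptions.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def mirror_points(points: List[Point], width: float) -> List[Point]:
    """Mirror keypoints horizontally so a left hand looks like a right hand.

    Assumes continuous pixel coordinates in which the image spans [0, width],
    so a point at x lands at width - x after a horizontal flip.
    """
    return [(width - x, y) for x, y in points]


def box_corners(cx: float, cy: float, w: float, h: float, angle: float) -> List[Point]:
    """Corners of a rotated box: top-left, top-right, bottom-right, bottom-left.

    Hypothetical parameterization: center (cx, cy), size (w, h) and a rotation
    `angle` in radians, in image coordinates where the y axis points down
    (so a positive angle turns the box clockwise on screen).
    """
    cos_a, sin_a = math.cos(angle), math.sin(angle)
    local = [(-w / 2, -h / 2), (w / 2, -h / 2), (w / 2, h / 2), (-w / 2, h / 2)]
    return [(cx + dx * cos_a - dy * sin_a, cy + dx * sin_a + dy * cos_a) for dx, dy in local]


def orientation_edge(corners: List[Point]) -> Tuple[Point, Point]:
    """The bottom edge of the box, i.e. the segment drawn in blue."""
    return corners[2], corners[3]
```

For example, `box_corners(50, 50, 20, 10, 0.0)` yields a bottom edge from `(60.0, 55.0)` to `(40.0, 55.0)`; as the box rotates, that edge turns with it, which is what makes the orientation visible in the figures.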

| Worst | Bad | OK | Good | Best |
|---|---|---|---|---|
| ROI: 0.08% | --- | --- | --- | ROI: 93.7% |

```bash
git clone https://github.com/sign-language-processing/mediapipe-hand-crop-fix.git
cd mediapipe-hand-crop-fix
```

Download and extract the Panoptic Hand Pose Dataset:

```bash
cd data
wget http://domedb.perception.cs.cmu.edu/panopticDB/hands/hand_labels.zip
unzip hand_labels.zip
cd ..
```

Estimate full body poses using MediaPipe Holistic:
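MediaPipe Holistic derives its hand crops from the body-pose landmarks, which is where the ROI errors shown above come from. As a rough geometric illustration only, here is a hypothetical heuristic that turns the wrist, index, and pinky pose landmarks into a rotated square ROI. It is not MediaPipe's actual calculator; the `scale` factor and the function names are assumptions, and only the BlazePose landmark indices are standard.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]

# BlazePose (33-landmark) indices for the person's right arm.
RIGHT_WRIST, RIGHT_PINKY, RIGHT_INDEX = 16, 18, 20


def hand_roi_from_pose(wrist: Point, index: Point, pinky: Point, scale: float = 2.0):
    """Hypothetical heuristic for a rotated square hand ROI (not MediaPipe's calculator).

    - center: halfway between the wrist and the midpoint of the index and pinky landmarks
    - angle: direction from the wrist to that midpoint; 0 means "fingers pointing up"
      in image coordinates (y down), and positive angles turn clockwise on screen
    - size: `scale` times the wrist-to-midpoint distance (`scale` is arbitrary)
    Returns (cx, cy, size, angle).
    """
    kx, ky = (index[0] + pinky[0]) / 2, (index[1] + pinky[1]) / 2
    dx, dy = kx - wrist[0], ky - wrist[1]
    cx, cy = (wrist[0] + kx) / 2, (wrist[1] + ky) / 2
    angle = math.atan2(dx, -dy)
    return cx, cy, scale * math.hypot(dx, dy), angle


def right_hand_roi(landmarks: Sequence[Point]):
    """Apply the heuristic to a list of 33 (x, y) pose landmarks."""
    return hand_roi_from_pose(
        landmarks[RIGHT_WRIST], landmarks[RIGHT_INDEX], landmarks[RIGHT_PINKY]
    )
```

A heuristic like this depends entirely on three pose landmarks, so small errors in the pose propagate directly into the crop; this is the kind of estimate the steps below try to correct.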

```bash
python -m mediapipe_crop_estimate.estimate_poses
```

Collect the annotations as well as the estimated regions of interest:
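This step gathers the annotated hand keypoints together with the estimated ROIs. A minimal sketch of reading one annotation is shown below. It assumes (verify against the extracted files) that each JSON file stores the hand keypoints under `hand_pts` as `[x, y, visible]` triples and the handedness under `is_left`; the function names are hypothetical.

```python
import json
from pathlib import Path
from typing import List, Tuple

Point = Tuple[float, float]


def load_hand_annotation(path: Path) -> Tuple[List[Point], bool]:
    """Read one annotation file and return (visible keypoints, is_left).

    Assumed format: {"hand_pts": [[x, y, visible], ...], "is_left": 0 or 1}.
    Points whose visibility flag is 0 are dropped.
    """
    data = json.loads(path.read_text())
    points = [(float(x), float(y)) for x, y, visible in data["hand_pts"] if visible]
    return points, bool(data["is_left"])


def keypoint_bounds(points: List[Point]) -> Tuple[float, float, float, float]:
    """Axis-aligned bounds (x_min, y_min, x_max, y_max) of the keypoints."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return min(xs), min(ys), max(xs), max(ys)
```

Bounds like these are only a starting point: a gold ROI that is rotated to follow the hand, as in the figures, needs an orientation as well as an extent.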

```bash
python -m mediapipe_crop_estimate.collect_hands
```

Then, train an MLP using the annotations and estimated regions of interest:
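One possible way to frame the regression (a hypothetical parameterization, not necessarily what `train_mlp` does) is to express the gold ROI relative to MediaPipe's estimate: center offsets normalized by the predicted size, the log of the size ratio, and the angle difference encoded as sine and cosine so the target does not jump at ±π. The `(cx, cy, size, angle)` tuple and both function names are assumptions.

```python
import math
from typing import Sequence, Tuple

# Hypothetical ROI parameterization: (cx, cy, size, angle) with angle in radians.
Roi = Tuple[float, float, float, float]


def encode_target(predicted: Roi, gold: Roi) -> Tuple[float, float, float, float, float]:
    """Express the gold ROI relative to the predicted one (offsets in the image frame)."""
    pcx, pcy, psize, pangle = predicted
    gcx, gcy, gsize, gangle = gold
    delta = gangle - pangle
    return (
        (gcx - pcx) / psize,
        (gcy - pcy) / psize,
        math.log(gsize / psize),
        math.sin(delta),
        math.cos(delta),
    )


def decode_target(predicted: Roi, target: Sequence[float]) -> Roi:
    """Invert encode_target to turn a network output back into an absolute ROI."""
    pcx, pcy, psize, pangle = predicted
    dx, dy, log_ratio, sin_d, cos_d = target
    return (
        pcx + dx * psize,
        pcy + dy * psize,
        psize * math.exp(log_ratio),
        pangle + math.atan2(sin_d, cos_d),
    )
```

Normalizing by the predicted size keeps the targets comparable across hands that appear at very different scales in the image.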

```bash
python -m mediapipe_crop_estimate.train_mlp
```

Finally, evaluate:
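Evaluation compares predicted ROIs with gold ROIs. Assuming the ROI percentages in the table above are an overlap score such as intersection over union (IoU), rotated boxes need polygon clipping rather than the usual axis-aligned formula. The pure-Python sketch below uses Sutherland-Hodgman clipping, which is valid here because both boxes are convex. The box parameterization and function names are assumptions, not the repository's code.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]
# Hypothetical rotated-box parameterization: (cx, cy, width, height, angle in radians).
Box = Tuple[float, float, float, float, float]


def corners(box: Box) -> List[Point]:
    """Corners of a rotated box, in a consistent winding order."""
    cx, cy, w, h, angle = box
    c, s = math.cos(angle), math.sin(angle)
    local = [(-w / 2, -h / 2), (w / 2, -h / 2), (w / 2, h / 2), (-w / 2, h / 2)]
    return [(cx + dx * c - dy * s, cy + dx * s + dy * c) for dx, dy in local]


def signed_area(poly: List[Point]) -> float:
    """Shoelace formula; the sign encodes the winding order."""
    return 0.5 * sum(
        x1 * y2 - x2 * y1 for (x1, y1), (x2, y2) in zip(poly, poly[1:] + poly[:1])
    )


def clip(subject: List[Point], clipper: List[Point]) -> List[Point]:
    """Sutherland-Hodgman: the part of `subject` inside the convex polygon `clipper`."""
    winding = 1.0 if signed_area(clipper) > 0 else -1.0

    def side(p: Point, a: Point, b: Point) -> float:
        # Non-negative when p is on the inner side of the directed clipper edge a -> b.
        return winding * ((b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0]))

    output = subject
    for a, b in zip(clipper, clipper[1:] + clipper[:1]):
        if not output:
            break
        polygon, output = output, []
        for p, q in zip(polygon, polygon[1:] + polygon[:1]):
            sp, sq = side(p, a, b), side(q, a, b)
            if sp >= 0:
                output.append(p)
            if (sp >= 0) != (sq >= 0):
                # The edge p -> q crosses the clipping line; add the crossing point.
                t = sp / (sp - sq)
                output.append((p[0] + t * (q[0] - p[0]), p[1] + t * (q[1] - p[1])))
    return output


def rotated_iou(a: Box, b: Box) -> float:
    """Intersection over union of two rotated boxes."""
    pa, pb = corners(a), corners(b)
    overlap = clip(pa, pb)
    inter = abs(signed_area(overlap)) if len(overlap) >= 3 else 0.0
    union = abs(signed_area(pa)) + abs(signed_area(pb)) - inter
    return inter / union if union > 0 else 0.0
```

For two 2×2 squares sharing a center, one rotated by 45°, `rotated_iou` returns about 0.707 (that is, 1/√2), while an axis-aligned IoU that ignored the rotation would report a perfect 1.0.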

```bash
python -m mediapipe_crop_estimate.evaluate
```