CLIP model to embed SignWriting images.

It can be used in signwriting-evaluation.
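One plausible way to use the embeddings for evaluation is a CLIPScore-style similarity: embed the predicted and the reference SignWriting images, then take the cosine similarity of the two vectors, clipped at zero. The sketch below assumes you already have both embeddings as NumPy arrays. `embedding_similarity` is a hypothetical helper, not the signwriting-evaluation API, and it omits CLIPScore's rescaling weight.

```python
import numpy as np

def embedding_similarity(hyp_emb: np.ndarray, ref_emb: np.ndarray) -> float:
    """Hypothetical helper: cosine similarity of two embeddings, clipped at 0."""
    # L2-normalize both vectors so their dot product is the cosine similarity.
    hyp = hyp_emb / np.linalg.norm(hyp_emb)
    ref = ref_emb / np.linalg.norm(ref_emb)
    # Negative similarities are treated as "no match", as in CLIPScore.
    return float(max(np.dot(hyp, ref), 0.0))
```

Clipping keeps the score in `[0, 1]`, which makes it easier to average over a test set alongside other metrics.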

The model is trained on SignBank+ by aligning SignWriting images with their corresponding texts. This does not guarantee that the embeddings are useful for lexical tasks such as transcription; an empirical evaluation is needed to establish that.
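CLIP-style models usually align images and texts with a symmetric contrastive (InfoNCE) loss over a batch of matching pairs. The NumPy sketch below shows that standard objective, assuming L2-normalized embeddings and a fixed temperature of 0.07 (CLIP's initial value). The actual training script may differ, for example by learning the temperature; `clip_loss` is a hypothetical name.

```python
import numpy as np

def log_softmax(x: np.ndarray, axis: int) -> np.ndarray:
    # Numerically stable log-softmax along the given axis.
    x = x - x.max(axis=axis, keepdims=True)
    return x - np.log(np.exp(x).sum(axis=axis, keepdims=True))

def clip_loss(image_emb: np.ndarray, text_emb: np.ndarray, temperature: float = 0.07) -> float:
    """Symmetric contrastive loss for a batch where image i matches text i."""
    # Normalize rows so dot products are cosine similarities.
    image_emb = image_emb / np.linalg.norm(image_emb, axis=1, keepdims=True)
    text_emb = text_emb / np.linalg.norm(text_emb, axis=1, keepdims=True)
    # logits[i, j] is the scaled similarity between image i and text j.
    logits = image_emb @ text_emb.T / temperature
    idx = np.arange(len(logits))
    # Correct pairs lie on the diagonal: cross-entropy over texts (rows)
    # and over images (columns), then average the two directions.
    loss_image_to_text = -log_softmax(logits, axis=1)[idx, idx].mean()
    loss_text_to_image = -log_softmax(logits, axis=0)[idx, idx].mean()
    return float((loss_image_to_text + loss_text_to_image) / 2)
```

The loss is low when each SignWriting image is most similar to its own text and high when the pairing is scrambled, which is what pushes the embeddings of different signs apart.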

Preliminary results show that the model is suited to semantic tasks but not to lexical ones. The model is hosted on HuggingFace.

# 0. Set up the environment.

conda create --name clip python=3.11

conda activate clip

pip install .

# 1. Create a dataset of SignWriting images.

DATA_DIR=/scratch/amoryo/clip

sbatch scripts/create_dataset.sh "$DATA_DIR"

# 2. Train the model and report to `wandb`.

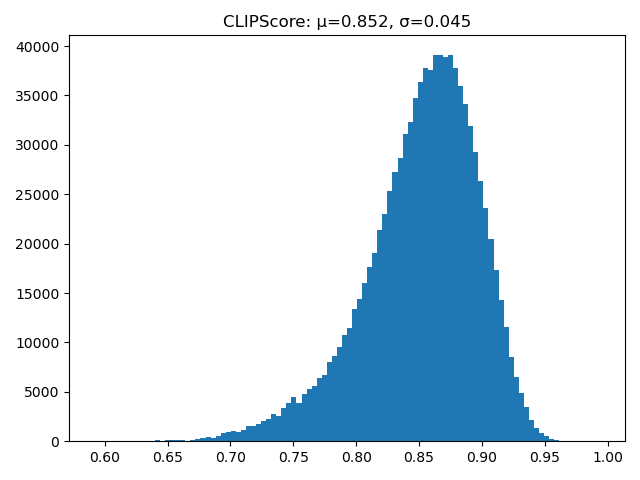

sbatch scripts/train_model.sh "$DATA_DIR"The original CLIP model encodes all SignWriting images as very similar embeddings, as evident by the distribution of cosine similarities between embeddings of random signs. The distribution is head-heavy, with most similarities between 0.8 and 0.9.
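A distribution like this can be estimated by sampling random pairs of signs and computing the cosine similarity of their embeddings. A minimal sketch, assuming `embeddings` is an `(N, D)` NumPy array produced by either model; the helper names, the sample size, and the random stand-in embeddings are hypothetical.

```python
import numpy as np

def random_pair_similarities(embeddings: np.ndarray, num_pairs: int = 10_000, seed: int = 0) -> np.ndarray:
    """Cosine similarities between randomly sampled pairs of distinct rows."""
    rng = np.random.default_rng(seed)
    # Normalize each embedding so a dot product equals cosine similarity.
    normed = embeddings / np.linalg.norm(embeddings, axis=1, keepdims=True)
    i = rng.integers(0, len(normed), size=num_pairs)
    j = rng.integers(0, len(normed), size=num_pairs)
    keep = i != j  # drop self-pairs, whose similarity is trivially 1
    return np.sum(normed[i[keep]] * normed[j[keep]], axis=1)

def fraction_in_range(sims: np.ndarray, low: float = 0.8, high: float = 0.9) -> float:
    """Share of similarities falling in the closed interval [low, high]."""
    return float(np.mean((sims >= low) & (sims <= high)))

# Stand-in random embeddings; real ones would come from the model's image encoder.
emb = np.random.default_rng(1).normal(size=(200, 512))
sims = random_pair_similarities(emb)
counts, edges = np.histogram(sims, bins=50, range=(-1.0, 1.0))
```

Plotting `counts` against `edges` gives a histogram comparable to the figure above; a large `fraction_in_range(sims, 0.8, 0.9)` corresponds to the head-heavy shape described for the original CLIP model.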

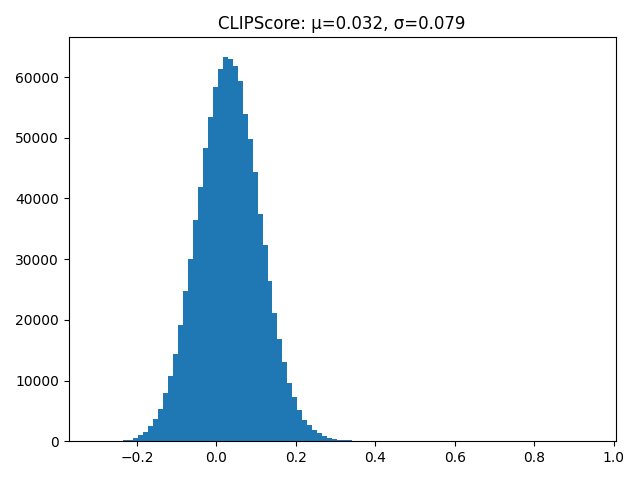

SignWritingCLIP, on the other hand, encodes SignWriting images as more diverse embeddings, as evidenced by a more tail-heavy distribution of cosine similarities.

The table below shows the ten nearest neighbors retrieved by each model for three different SignWriting entries of the sign for "hello".

| Rank | CLIP (sign 1) | SignWritingCLIP (sign 1) | CLIP (sign 2) | SignWritingCLIP (sign 2) | CLIP (sign 3) | SignWritingCLIP (sign 3) |
| ---: | --- | --- | --- | --- | --- | --- |
| 1 |  |  |  |  |  |  |
| 2 |  |  |  |  |  |  |
| 3 |  |  |  |  |  |  |
| 4 |  |  |  |  |  |  |
| 5 |  |  |  |  |  |  |
| 6 |  |  |  |  |  |  |
| 7 |  |  |  |  |  |  |
| 8 |  |  |  |  |  |  |
| 9 |  |  |  |  |  |  |
| 10 |  |  |  |  |  |  |
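Nearest neighbors like those in the table can be retrieved with a brute-force cosine-similarity search. How the neighbors above were computed is not stated, so this is an assumption: `nearest_neighbors` is a hypothetical helper that takes a query embedding and an `(N, D)` matrix of corpus embeddings and returns the indices and similarities of the top `k` matches.

```python
import numpy as np

def nearest_neighbors(query: np.ndarray, corpus: np.ndarray, k: int = 10) -> tuple[np.ndarray, np.ndarray]:
    """Indices and cosine similarities of the k corpus rows closest to query."""
    # Normalize the query and every corpus row to unit length.
    q = query / np.linalg.norm(query)
    c = corpus / np.linalg.norm(corpus, axis=1, keepdims=True)
    sims = c @ q
    # Sort by descending similarity and keep the top k.
    order = np.argsort(-sims)[:k]
    return order, sims[order]
```

If the query sign is itself part of the corpus, it will rank first with similarity 1 and should be skipped when reporting neighbors.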