PyTorch implementation of the CVPR 2021 paper "Distribution-aware Adaptive Multi-bit Quantization"

This code is the main source for the experiments in the paper. However, it is not very clean and is hard to extend to other projects, so it is deprecated. We have reimplemented the algorithm in a plug-and-play manner; please refer to DAMBQV2.

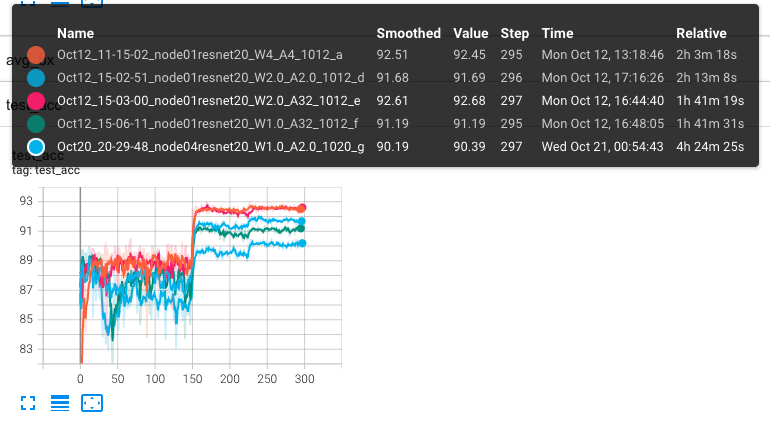

Train ResNet-20 on the CIFAR-10 dataset, starting from a pretrained full-precision W32A32 model and quantizing it to W4A4:

```bash
python CIFAR10/main.py --wd 1e-4 --lr 5e-2 -id 0 --bit_W_init 4 --bit_A_init 4 --init [resnet20_W32A32_model_path] --comment resnet20_W4_A4
```
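In WxAy notation, x is the bit width of the weights (W) and y that of the activations (A), matching the `--bit_W_init` and `--bit_A_init` flags. To give a rough feel for the 4-bit setting, the sketch below applies plain uniform "fake" quantization (quantize, then map back to floats) to a list of values. It is a generic illustration under that assumption, not the distribution-aware multi-bit quantizer from the paper, and `fake_quantize` is a hypothetical helper that is not part of this repository.

```python
def fake_quantize(values, num_bits=4, signed=True):
    """Generic uniform fake quantization (hypothetical helper, not DAMBQ).

    Signed mode uses integer codes in [-(2**(b-1) - 1), 2**(b-1) - 1]
    (e.g. weights); unsigned mode uses [0, 2**b - 1] (e.g. post-ReLU
    activations). The scale is chosen so that max|x| maps to the top code.
    """
    if signed:
        qmin, qmax = -(2 ** (num_bits - 1) - 1), 2 ** (num_bits - 1) - 1
    else:
        qmin, qmax = 0, 2 ** num_bits - 1
    max_abs = max((abs(v) for v in values), default=0.0)
    if max_abs == 0:
        return [0.0 for _ in values]
    scale = max_abs / qmax
    # Round to the nearest integer code (Python rounds exact halves to even),
    # clamp to the representable range, then rescale back to floats.
    return [min(max(round(v / scale), qmin), qmax) * scale for v in values]


weights = [0.7, -0.3, 0.12, 0.0]
print(fake_quantize(weights, num_bits=4))                 # approx. [0.7, -0.3, 0.1, 0.0]
print(fake_quantize([0.0, 1.0, 3.0, -1.0], signed=False))  # approx. [0.0, 1.0, 3.0, 0.0]
```

With 4 bits, every weight collapses onto one of at most 16 levels; real quantization-aware training additionally learns or adapts these levels during fine-tuning rather than fixing them from max|x| as done here.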

If this repository or the paper is helpful, please cite:

```bibtex
@inproceedings{zhao2021distribution,
  title={Distribution-aware adaptive multi-bit quantization},
  author={Zhao, Sijie and Yue, Tao and Hu, Xuemei},
  booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
  pages={9281--9290},
  year={2021}
}
```