- Ubuntu 16.04/18.04

- Python >= 3.6

- OpenCV >= 3.4

- PyQt5

- caffe-ssd (GPU version preferred)
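Before going further, it can help to confirm which of these prerequisites Python can actually see. The script below is a minimal sketch and is not part of the Magic repository. It assumes OpenCV is importable as cv2 and that a compiled caffe-ssd exposes the Python module caffe. The helper names (parse_version, meets_minimum, check_requirements) are hypothetical.

```python
import importlib
import sys


def parse_version(text):
    """Turn a version string such as '3.4.2' or '4.5.1-dev' into a tuple of ints."""
    parts = []
    for piece in text.split("."):
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break  # stop at suffixes like '-dev'
            digits += ch
        if not digits:
            break
        parts.append(int(digits))
    return tuple(parts)


def meets_minimum(version, minimum):
    """Return True if the version string is at least the minimum version string."""
    return parse_version(version) >= parse_version(minimum)


def check_requirements():
    """Return a dict mapping each prerequisite to True (found) or False (missing)."""
    report = {}
    py = "{}.{}.{}".format(*sys.version_info[:3])
    report["python >= 3.6"] = meets_minimum(py, "3.6")
    try:
        cv2 = importlib.import_module("cv2")
        report["opencv >= 3.4"] = meets_minimum(cv2.__version__, "3.4")
    except ImportError:
        report["opencv >= 3.4"] = False
    # Assumption: caffe-ssd's Python bindings are importable as 'caffe'.
    for module in ("PyQt5", "caffe"):
        try:
            importlib.import_module(module)
            report[module] = True
        except ImportError:
            report[module] = False
    return report


if __name__ == "__main__":
    for name, ok in check_requirements().items():
        print("{:<16} {}".format(name, "OK" if ok else "MISSING"))
```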

- Clone the source of the Magic project:

git-lfs clone https://github.com/simshineaicamera/magic.git

cd magic

Note: please use git-lfs, because the YOLOv3 model is large and cannot be downloaded without it.
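If the repository was cloned with plain git instead of git-lfs, the model files are left behind as small Git LFS pointer text files rather than the real weights. The sketch below, which is not part of the Magic repository, detects such pointers by their standard first line. The file extensions it scans (.weights for the YOLOv3 model, .caffemodel) are assumptions about how the model files are named, and the helper names are hypothetical.

```python
import os
import sys

# First line of every Git LFS pointer file (LFS spec v1).
LFS_POINTER_PREFIX = b"version https://git-lfs.github.com/spec/v1"


def is_lfs_pointer(path):
    """Return True if the file at path is an LFS pointer instead of real content."""
    with open(path, "rb") as handle:
        head = handle.read(len(LFS_POINTER_PREFIX))
    return head == LFS_POINTER_PREFIX


def find_lfs_pointers(root, extensions=(".weights", ".caffemodel")):
    """Walk root and return model files that git-lfs never downloaded."""
    missing = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            if name.endswith(extensions):  # assumed model file extensions
                full = os.path.join(dirpath, name)
                if is_lfs_pointer(full):
                    missing.append(full)
    return sorted(missing)


if __name__ == "__main__":
    root = sys.argv[1] if len(sys.argv) > 1 else "magic"
    pointers = find_lfs_pointers(root)
    for path in pointers:
        print("not downloaded:", path)
    if pointers:
        print("Run 'git lfs pull' inside the repository to fetch them.")
```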

- Install the Python package requirements:

python3.6 -m pip install -r requirments.txt

- If you have already installed SIMCAM_SDK, you can skip this step.

Compile caffe-ssd on your system and add it to your PYTHONPATH.
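One way to make a compiled caffe-ssd importable without editing your shell profile is to extend the search path from Python itself. This is a minimal sketch under two assumptions: that your caffe-ssd checkout keeps the pycaffe bindings in its python subdirectory (the standard Caffe layout), and that the path you pass is where you built it. The function names are hypothetical. The permanent equivalent is an export PYTHONPATH=<caffe-ssd>/python:$PYTHONPATH line in your ~/.bashrc.

```python
import importlib
import importlib.util
import os
import sys


def add_caffe_to_path(caffe_root):
    """Make caffe_root/python importable; return the directory that was added.

    caffe_root is wherever you compiled caffe-ssd (hypothetical example: ~/caffe-ssd).
    """
    python_dir = os.path.join(os.path.expanduser(caffe_root), "python")
    # Affects imports in the current process.
    if python_dir not in sys.path:
        sys.path.insert(0, python_dir)
    # Affects child processes started from this one.
    existing = os.environ.get("PYTHONPATH", "")
    parts = existing.split(os.pathsep) if existing else []
    if python_dir not in parts:
        os.environ["PYTHONPATH"] = os.pathsep.join([python_dir] + parts)
    return python_dir


def caffe_importable():
    """Return True if 'import caffe' would find a module on the current sys.path."""
    importlib.invalidate_caches()  # pick up directories added after startup
    return importlib.util.find_spec("caffe") is not None
```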

- The easiest way to install the tool is with Docker. Run the following commands to pull and run the Docker image:

sudo docker run -it \

-v /tmp/.X11-unix:/tmp/.X11-unix \

-e DISPLAY=$DISPLAY \

--privileged \

simcam/magic:v1.0 bash

cd /home/Magic- Run the

run.shscript

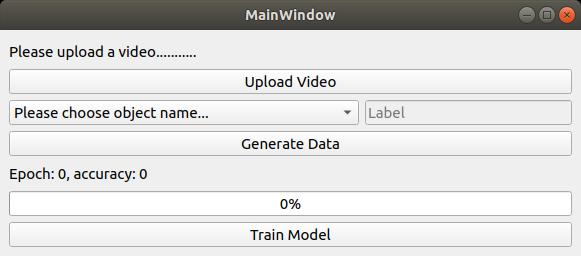

./run.shYou will see GUI for auto labeling and training your own model
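If you launch the container often, the docker run command above can be wrapped in a small Python helper. This sketch is not shipped with Magic. It reproduces the same flags as an argument list, but, unlike the manual steps, it starts ./run.sh directly through bash -c instead of opening an interactive shell first, and it falls back to DISPLAY=:0 when the variable is unset. Both choices are assumptions, and the helper names are hypothetical.

```python
import os
import subprocess


def docker_run_args(image="simcam/magic:v1.0", display=None):
    """Build the docker run command from the instructions as an argument list."""
    if display is None:
        display = os.environ.get("DISPLAY", ":0")  # assumed fallback display
    return [
        "sudo", "docker", "run", "-it",
        "-v", "/tmp/.X11-unix:/tmp/.X11-unix",  # share the host X11 socket for the GUI
        "-e", "DISPLAY=" + display,             # tell the container which display to use
        "--privileged",
        image,
        # Assumption: go straight to the GUI instead of an interactive shell.
        "bash", "-c", "cd /home/Magic && ./run.sh",
    ]


def launch():
    """Run the container in the foreground; raises CalledProcessError on failure."""
    subprocess.run(docker_run_args(), check=True)
```

Calling launch() blocks until the GUI is closed and the container exits.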

- Let's say you are going to train your own cat detection model. Press the "Upload Video" button to upload a video, and make sure the video contains the desired object (in this case, a cat).

- Choose the object name.

Note: you can also choose the "Other" class and give the object a label, for example "mycat". With this option, the program uses a tracking method for automatic labeling.

- Press the "Generate Data" button to generate data for training.
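We have not checked which annotation format Magic writes internally when it auto-labels frames. As an illustration only, assuming Pascal VOC-style XML (a format commonly used with caffe-ssd training pipelines), a box reported by the tracker for one frame could be saved as below. The function name, file name, and field choices are hypothetical.

```python
import xml.etree.ElementTree as ET


def voc_annotation(filename, width, height, label, box):
    """Build a Pascal VOC-style XML annotation for one labeled frame.

    box is (xmin, ymin, xmax, ymax) in pixels, e.g. the box a tracker
    reported for this frame. Returns the XML as a string.
    """
    xmin, ymin, xmax, ymax = (int(round(v)) for v in box)
    # Clamp to the image so a tracker drifting past the border still gives a valid box.
    xmin, xmax = max(0, xmin), min(width, xmax)
    ymin, ymax = max(0, ymin), min(height, ymax)
    if xmin >= xmax or ymin >= ymax:
        raise ValueError("box is empty after clamping: %r" % (box,))

    root = ET.Element("annotation")
    ET.SubElement(root, "filename").text = filename
    size = ET.SubElement(root, "size")
    ET.SubElement(size, "width").text = str(width)
    ET.SubElement(size, "height").text = str(height)
    ET.SubElement(size, "depth").text = "3"
    obj = ET.SubElement(root, "object")
    ET.SubElement(obj, "name").text = label
    ET.SubElement(obj, "difficult").text = "0"
    bndbox = ET.SubElement(obj, "bndbox")
    for tag, value in (("xmin", xmin), ("ymin", ymin), ("xmax", xmax), ("ymax", ymax)):
        ET.SubElement(bndbox, tag).text = str(value)
    return ET.tostring(root, encoding="unicode")
```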

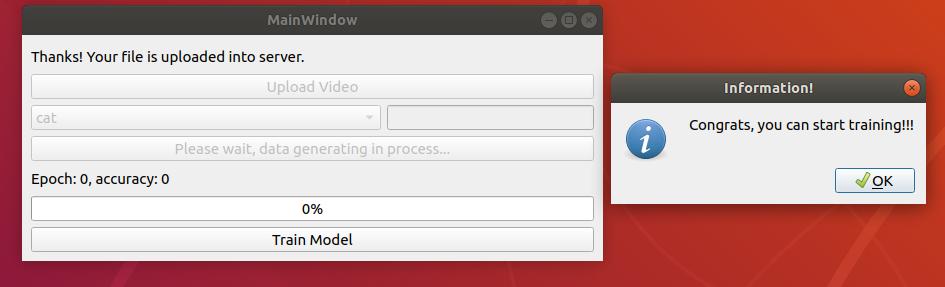

Data generation takes 10-15 minutes, depending on your system's CPU and GPU. When it finishes, you will see the message below.

-

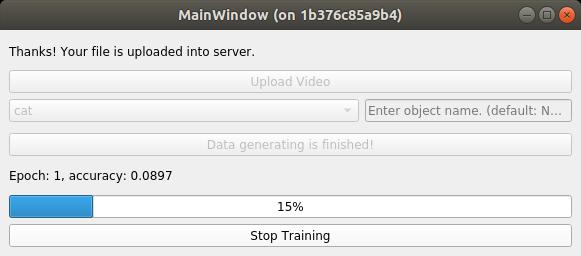

Press

Train Modelbutton to start training the model. Training speed depends on your system capability (CPU and GPU). You can see training process on the screen , epoch and accuracy.

You can stop training process early if you think accuracy of the model is enough. From the experiments, if model accuracy is higher than 0.86 value, you can test the model for deploy.
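The early-stopping rule above can be written as a small check. This is a hypothetical helper, not part of Magic's GUI: it assumes you can collect the per-epoch accuracy values shown on screen into a list, uses the 0.86 threshold from the text, and adds a patience parameter (our own assumption) so that a single lucky epoch does not trigger a stop.

```python
def should_stop(accuracies, threshold=0.86, patience=1):
    """Return True once the last `patience` accuracy readings are all above threshold."""
    if patience < 1:
        raise ValueError("patience must be at least 1")
    recent = accuracies[-patience:]
    return len(recent) == patience and all(acc > threshold for acc in recent)


# Usage with made-up accuracy readings, one per epoch.
history = [0.52, 0.71, 0.84, 0.87, 0.88]
for epoch in range(1, len(history) + 1):
    if should_stop(history[:epoch], patience=2):
        print("stop after epoch", epoch)  # prints: stop after epoch 5
        break
```

With patience=1 the check reduces to the plain "higher than 0.86" rule from the text.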