This repository contains code for the O'Reilly Live Online Training "Hands on Transfer Learning with BERT".

This training focuses on implementing BERT to solve a variety of modern NLP tasks, including information retrieval, sequence classification and regression, and question answering. It begins with a refresher on the BERT architecture and how BERT learns to model language. We then move into examples of fine-tuning BERT on domain-specific corpora and using pre-trained models to perform NLP tasks out of the box.

BERT is one of the most relevant NLP architectures today and it is closely related to other important NLP deep learning models like GPT-3. Both of these models are derived from the newly invented transformer architecture and represent an inflection point in how machines process language and context.

The Natural Language Processing with Next-Generation Transformer Architectures series of online trainings provides a comprehensive overview of state-of-the-art natural language processing (NLP) models, including GPT and BERT, which are derived from the modern attention-driven transformer architecture, and of the applications these models are used to solve today. All of the trainings in the series blend theory and application by combining visual mathematical explanations, straightforward Python examples in hands-on Jupyter notebook demos, and comprehensive case studies featuring modern problems solvable by NLP models. This training is part of that series and assumes that the attendee is coming in with knowledge from the BERT Transformer Architecture for NLP training. (Note that at any given time, only a subset of these classes will be scheduled and open for registration.)

Information Retrieval with BERT

Running this requires some additional setup:

- Make sure you have flask installed (it is in the requirements.txt)

- Install ngrok to generate a secure tunnel to your local app

- From the

twitter-bert-flask-appdirectory, runpython app.pyto start your local flask app - Test the app by going to [http://localhost:5050/classify?tweet=this earthquake is causing such devastation](http://localhost:5050/classify?tweet=this earthquake is causing such devastation)

- Run ngrok to create a secure tunnel

ngrok http 5050. This will make a random tunnel each time unless you pay for subdomains (I recommend it if you use ngrok frequently) - In

twitter.js, changetwitter-bert.ngrok.ioto whatever subdomain your ngrok has chosen on startup - In chrome, navigate to

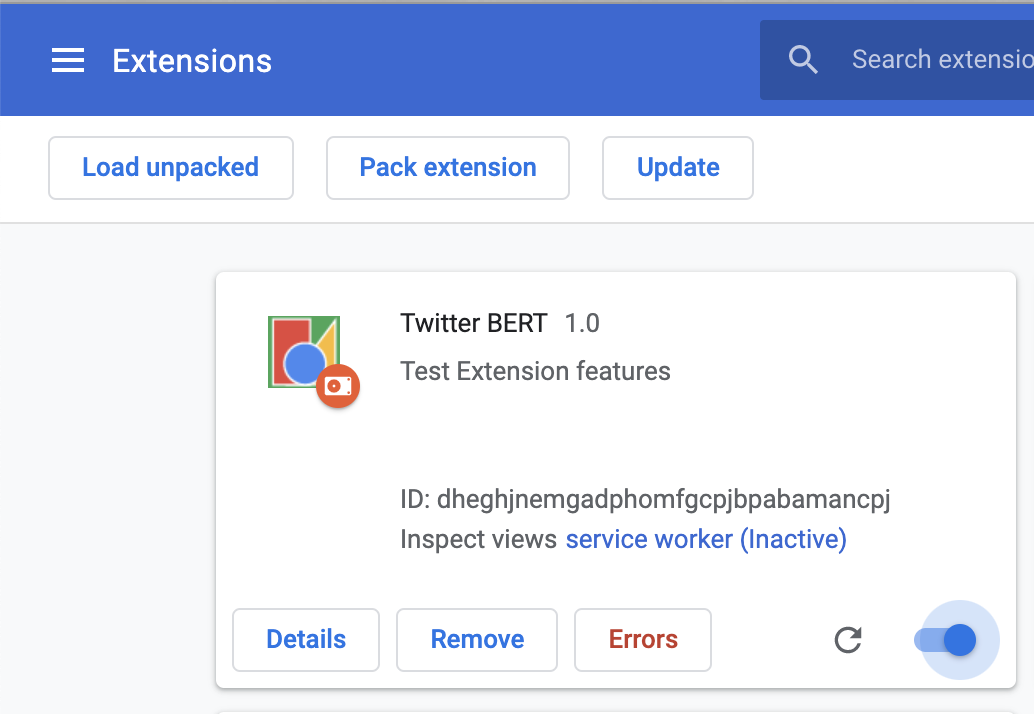

chrome://extensionsand click onLoad unpacked. To do this, you must haveDeveloper Modeon ont he top right of this page.

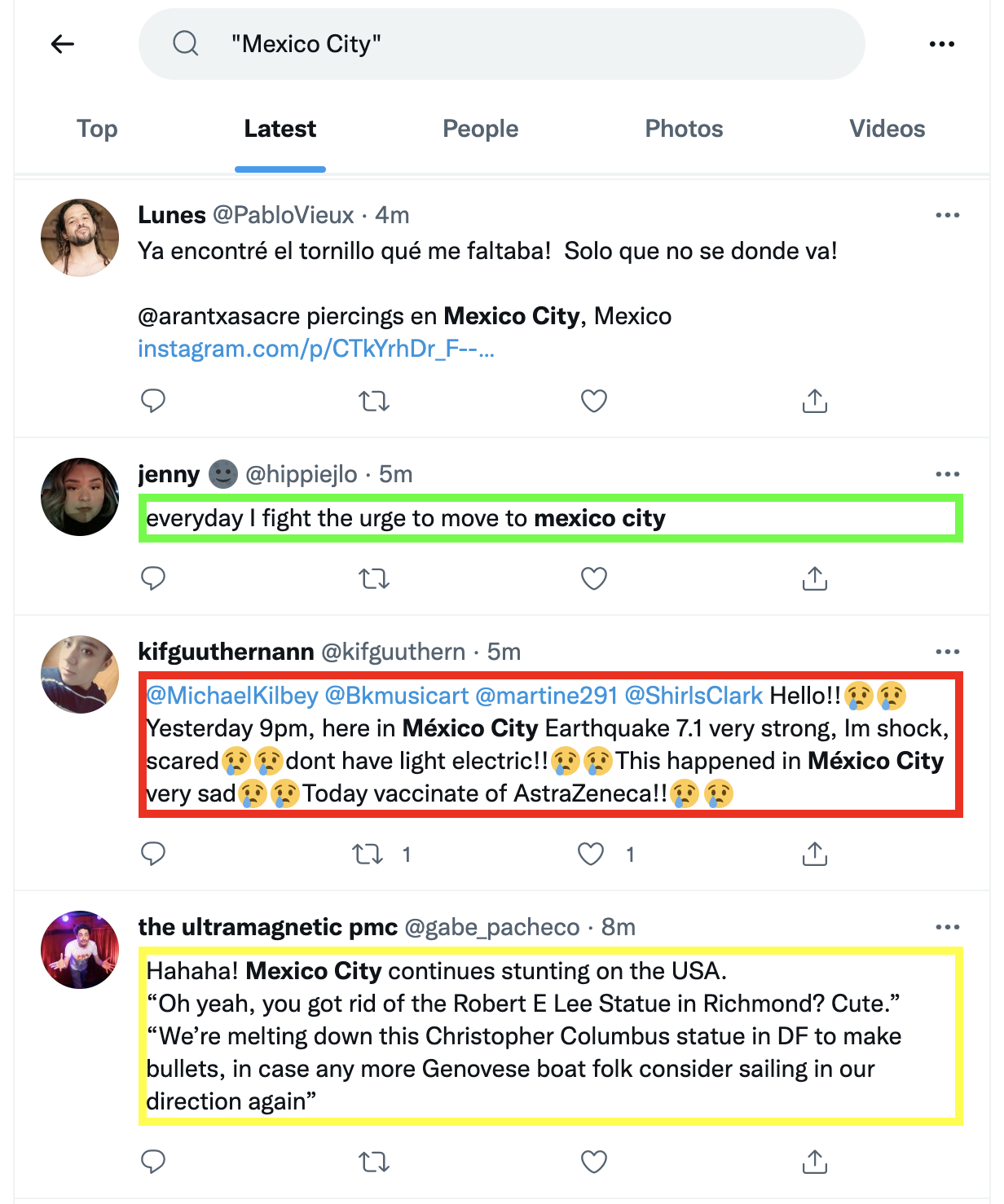

6. Navigate to twitter and see it work!

6. Navigate to twitter and see it work!

- Red borders indicate a probability of >= 90% of the tweet being about a disaster

- Yellow borders indicate a probability of >= 50% and < 90% of the tweet being about a disaster

- Green borders indicate a probability of < 50% of the tweet being about a disaster
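
As a rough illustration of the steps above, the following Python sketch builds a properly percent-encoded test URL for the local `/classify` endpoint and maps a probability to the border colors just listed. It is not the code from `app.py` or `twitter.js`: the function names `classify_url` and `border_color` are hypothetical, and the sketch assumes the probability is expressed as a number between 0 and 1. The README does not describe the response format of `/classify`, so the example does not attempt to parse it.

```python
from urllib.parse import quote, urlencode

# Assumption: the local Flask app from the steps above listens on port 5050.
BASE_URL = "http://localhost:5050"


def classify_url(tweet: str, base_url: str = BASE_URL) -> str:
    """Build a /classify URL with the tweet text percent-encoded."""
    # quote_via=quote encodes spaces as %20 rather than '+'.
    query = urlencode({"tweet": tweet}, quote_via=quote)
    return f"{base_url}/classify?{query}"


def border_color(probability: float) -> str:
    """Map a disaster probability in [0, 1] to the border color listed above."""
    if not 0.0 <= probability <= 1.0:
        raise ValueError("probability must be between 0 and 1")
    if probability >= 0.9:
        return "red"
    if probability >= 0.5:
        return "yellow"
    return "green"


if __name__ == "__main__":
    # Print a test URL that can be pasted into a browser while app.py is running.
    print(classify_url("this earthquake is causing such devastation"))
    # Show which border each sample probability would receive.
    for p in (0.95, 0.9, 0.5, 0.49):
        print(p, border_color(p))
```

Encoding the query string this way avoids relying on the browser to repair the spaces in the tweet text, and keeping the thresholds in one small function makes the red/yellow/green boundaries easy to check.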

Sinan Ozdemir is currently the Director of Data Science at Directly, managing the AI and machine learning models that power the company’s intelligent customer support platform. Sinan is a former lecturer of Data Science at Johns Hopkins University and the author of multiple textbooks on data science and machine learning. Additionally, he is the founder of the recently acquired Kylie.ai, an enterprise-grade conversational AI platform with RPA capabilities. He holds a Master’s Degree in Pure Mathematics from Johns Hopkins University and is based in San Francisco, CA.