This repository contains the data and source code used for the paper "Comprehensive benchmarking of Large Language Models for RNA secondary structure prediction" by L.I. Zablocki, L.A. Bugnon, M. Gerard, L. Di Persia, G. Stegmayer, D.H. Milone (under review), Research Institute for Signals, Systems and Computational Intelligence, sinc(i). See the preprint for details.

In the last three years, a number of RNA large language models (RNA-LLMs) have appeared in the literature. We selected the models for benchmarking based on their open-access availability; the table below summarizes their main features.

| LLM | Visualization | Dim | Pre-training seqs | Pre-training databases | Architecture (number of layers) | Number of parameters | Source |
|---|---|---|---|---|---|---|---|
| RNABERT 2022 [1] |  | 120 | 70 k | RNAcentral | Transformer (6) | 500 k | Link |
| RNA-FM 2022 [2] |  | 640 | 23 M | RNAcentral | Transformer (12) | 100 M | Link |
| RNA-MSM 2024 [3] |  | 768 | 3 M | Rfam | Transformer (12) | 96 M | Link |
| ERNIE-RNA 2024 [4] |  | 768 | 20 M | RNAcentral | Transformer (12) | 86 M | Link |
| RNAErnie 2024 [5] |  | 768 | 23 M | RNAcentral | Transformer (12) | 105 M | Link |
| RiNALMo 2024 [6] |  | 1280 | 36 M | RNAcentral + Rfam + Ensembl | Transformer (33) | 650 M | Link |

These steps will guide you through training the RNA secondary structure prediction model on top of the RNA-LLM representations.

First, clone the repository and enter its directory:

git clone https://github.com/sinc-lab/rna-llm-folding

cd rna-llm-folding

With a working conda installation, run:

conda env create -f environment.yml

This should install all required dependencies. Then, activate the environment with:

conda activate rna-llm-folding

Scripts to train and evaluate an RNA-LLM for RNA secondary structure prediction are in the scripts folder.

For example, to use the one-hot embedding for the ArchiveII dataset, run:

python scripts/run_archiveII_famfold.py --emb one-hot_ArchiveII

The --emb option tells the script which LLM and dataset combination to use for training and testing. The example uses the one-hot embedding for ArchiveII, which is already available in data/embeddings. By default, training runs on the GPU if one is available. Results are saved in results/<timestamp>/<dataset>/<llm>.
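To make the naming convention concrete, the sketch below splits an --emb value into its LLM and dataset parts and builds the matching results path. It assumes the value always has the form `<llm>_<dataset>` with no underscore in the LLM name. The helper names `split_emb` and `results_dir` and the timestamp format are hypothetical and are not taken from the repository's scripts.

```python
from datetime import datetime
from pathlib import Path
from typing import Optional, Tuple


def split_emb(emb: str) -> Tuple[str, str]:
    """Split an --emb value such as 'one-hot_ArchiveII' into (llm, dataset).

    Assumption: the LLM name contains no underscore, so the first
    underscore separates it from the dataset name.
    """
    llm, sep, dataset = emb.partition("_")
    if not sep or not llm or not dataset:
        raise ValueError(f"expected '<llm>_<dataset>', got {emb!r}")
    return llm, dataset


def results_dir(emb: str, timestamp: Optional[str] = None) -> Path:
    """Build results/<timestamp>/<dataset>/<llm> for a given --emb value."""
    llm, dataset = split_emb(emb)
    if timestamp is None:
        # Hypothetical timestamp format; the scripts may use a different one.
        timestamp = datetime.now().strftime("%Y-%m-%d-%H%M%S")
    return Path("results") / timestamp / dataset / llm


if __name__ == "__main__":
    print(split_emb("one-hot_ArchiveII"))
    print(results_dir("one-hot_ArchiveII", timestamp="example").as_posix())
```

Passing an explicit `timestamp` keeps the path reproducible, which is convenient when collecting results from several runs.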

To run the experiments with other datasets, use scripts/run_bpRNA.py, scripts/run_bpRNA_new.py, scripts/run_pdb-rna.py and scripts/run_archiveII_kfold.py, which are invoked in the same way as in the example above.

To use other embeddings and datasets, download the RNA-LLM embedding representations for the desired LLM-dataset combination from the following table, and save them in the data/embeddings directory.
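Before launching a run, it can help to confirm which embeddings are already in place. The following is a minimal sketch, assuming each LLM-dataset combination is stored as a single file in data/embeddings whose name without the extension matches the --emb value. The function name `available_embeddings` is hypothetical.

```python
from pathlib import Path
from typing import List


def available_embeddings(emb_dir: str = "data/embeddings") -> List[str]:
    """Return the sorted file names (without extension) found in emb_dir.

    Assumption: one file per LLM-dataset combination, named after the
    value that would be passed to --emb.
    """
    root = Path(emb_dir)
    if not root.is_dir():
        return []
    return sorted(p.stem for p in root.iterdir() if p.is_file())


if __name__ == "__main__":
    for name in available_embeddings():
        print(name)
```

An empty list means that the directory is missing or holds no files, so the embeddings still need to be downloaded.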

| ArchiveII | bpRNA & bpRNA-new | PDB-RNA |
|---|---|---|
| one-hot | one-hot | one-hot |
| RNABERT | RNABERT | RNABERT |
| RNA-FM | RNA-FM | RNA-FM |
| RNA-MSM | RNA-MSM | RNA-MSM |
| ERNIE-RNA | ERNIE-RNA | ERNIE-RNA |
| RNAErnie | RNAErnie | RNAErnie |
| RiNALMo | RiNALMo | RiNALMo |

Note: Instructions to generate the RNA-LLM embeddings listed above are detailed in scripts/embeddings.
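As an illustration of what a per-nucleotide representation looks like, the sketch below encodes a sequence with a one-hot scheme, the simplest of the embeddings listed above. It is not the repository's implementation: the alphabet order, the reading of T as U, and the all-zero row for unknown symbols are assumptions, and `one_hot` is a hypothetical name.

```python
from typing import List

# Assumed alphabet order; the repository's one-hot embedding may differ.
ALPHABET = "ACGU"


def one_hot(sequence: str) -> List[List[float]]:
    """Encode an RNA sequence as an L x 4 matrix, one row per nucleotide.

    Assumptions: T is read as U, and any symbol outside ACGU (e.g. N)
    maps to an all-zero row.
    """
    rows = []
    for nt in sequence.upper().replace("T", "U"):
        row = [0.0] * len(ALPHABET)
        idx = ALPHABET.find(nt)
        if idx >= 0:
            row[idx] = 1.0
        rows.append(row)
    return rows


if __name__ == "__main__":
    for nt, row in zip("GGAUN", one_hot("GGAUN")):
        print(nt, row)
```

In this sketch every sequence of length L yields L rows of four values each, so the rows stay aligned with the nucleotides.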

-

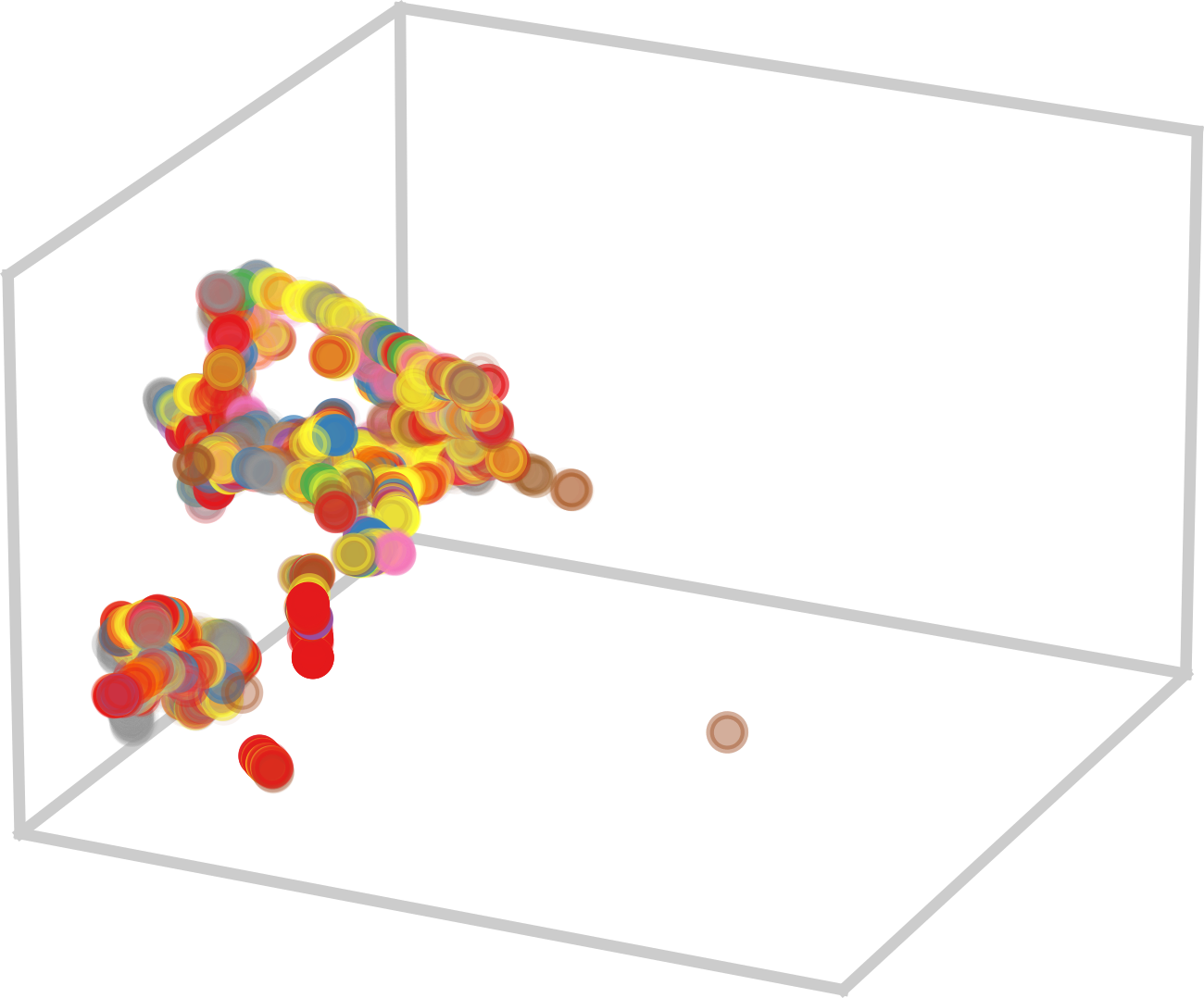

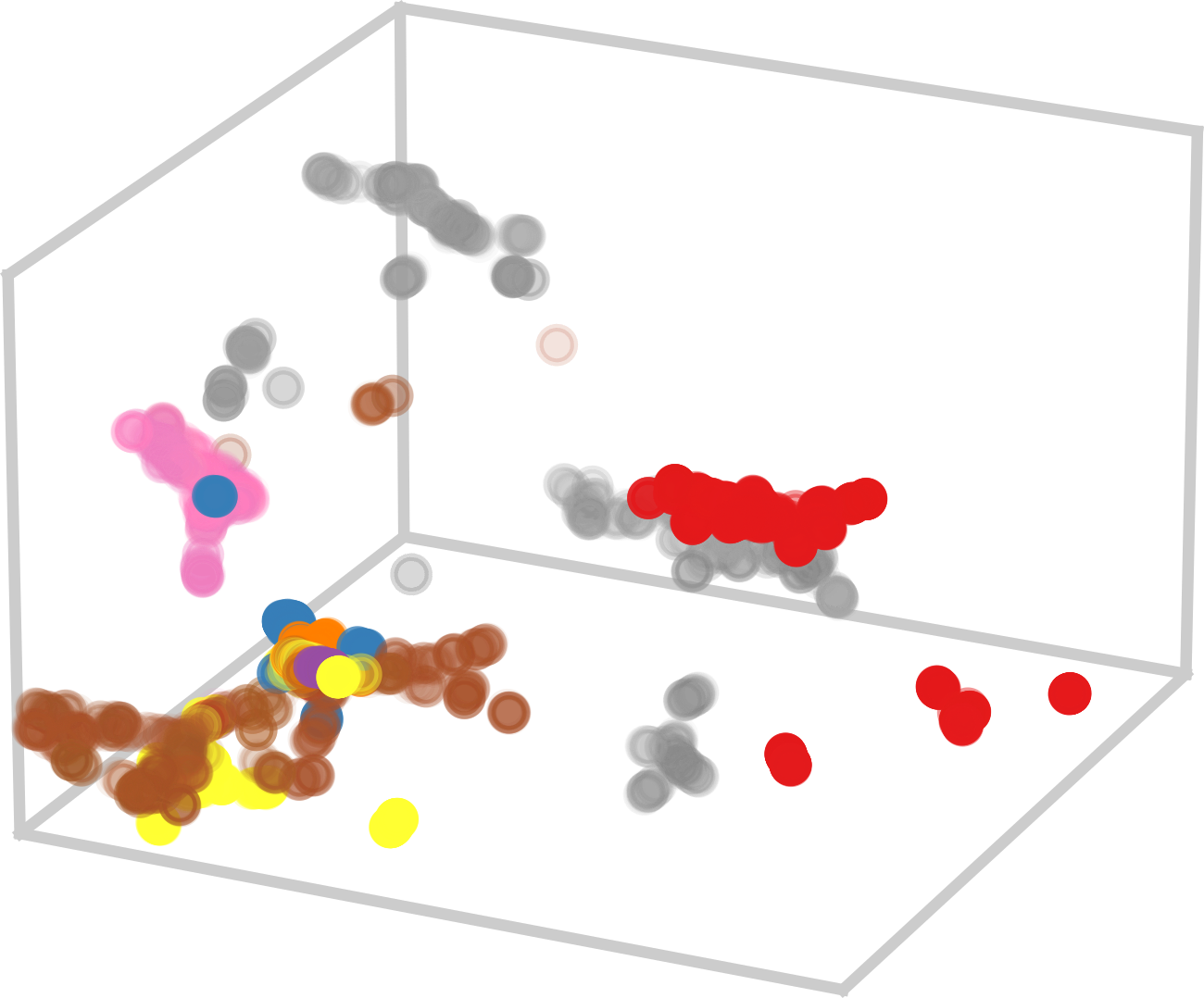

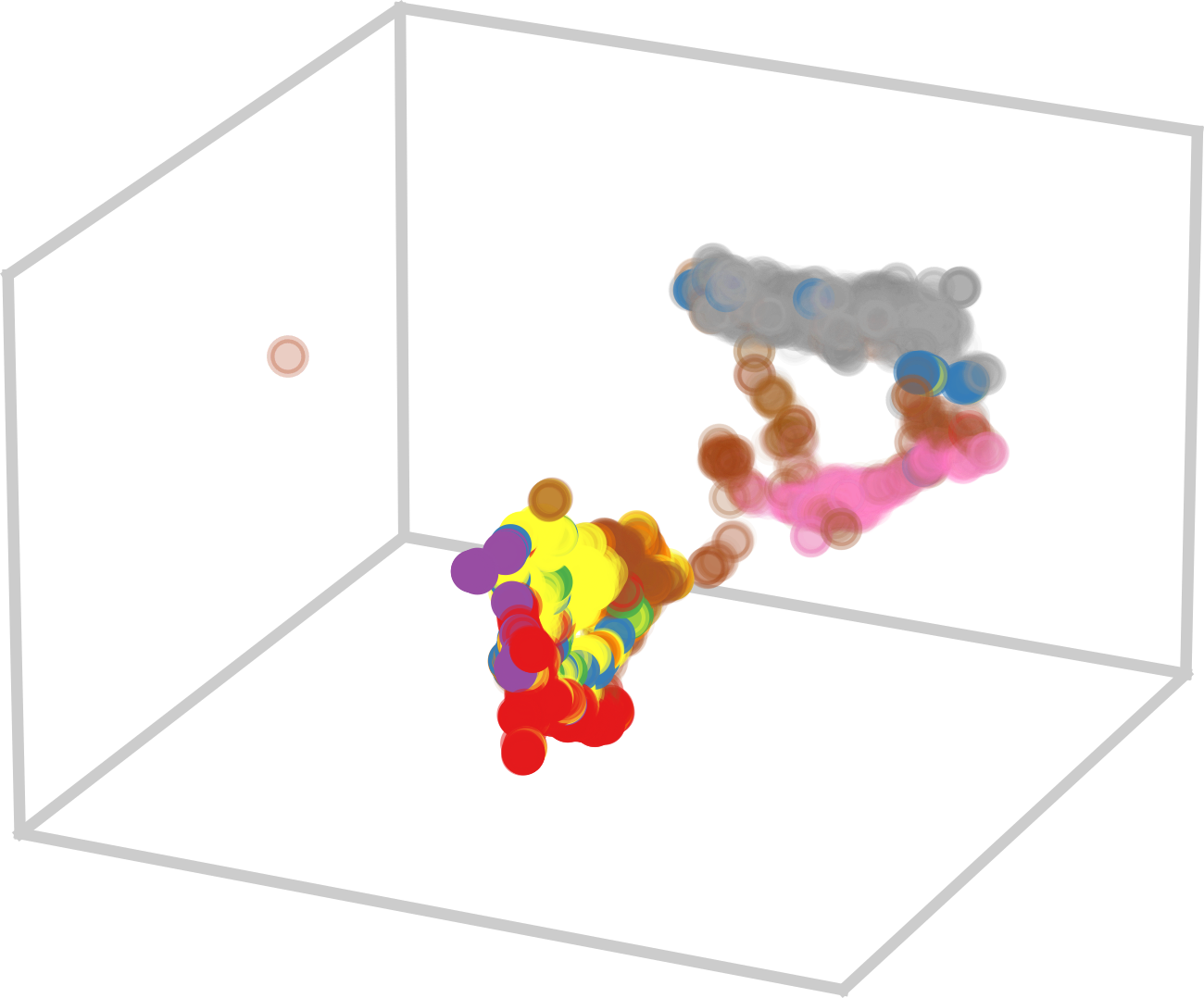

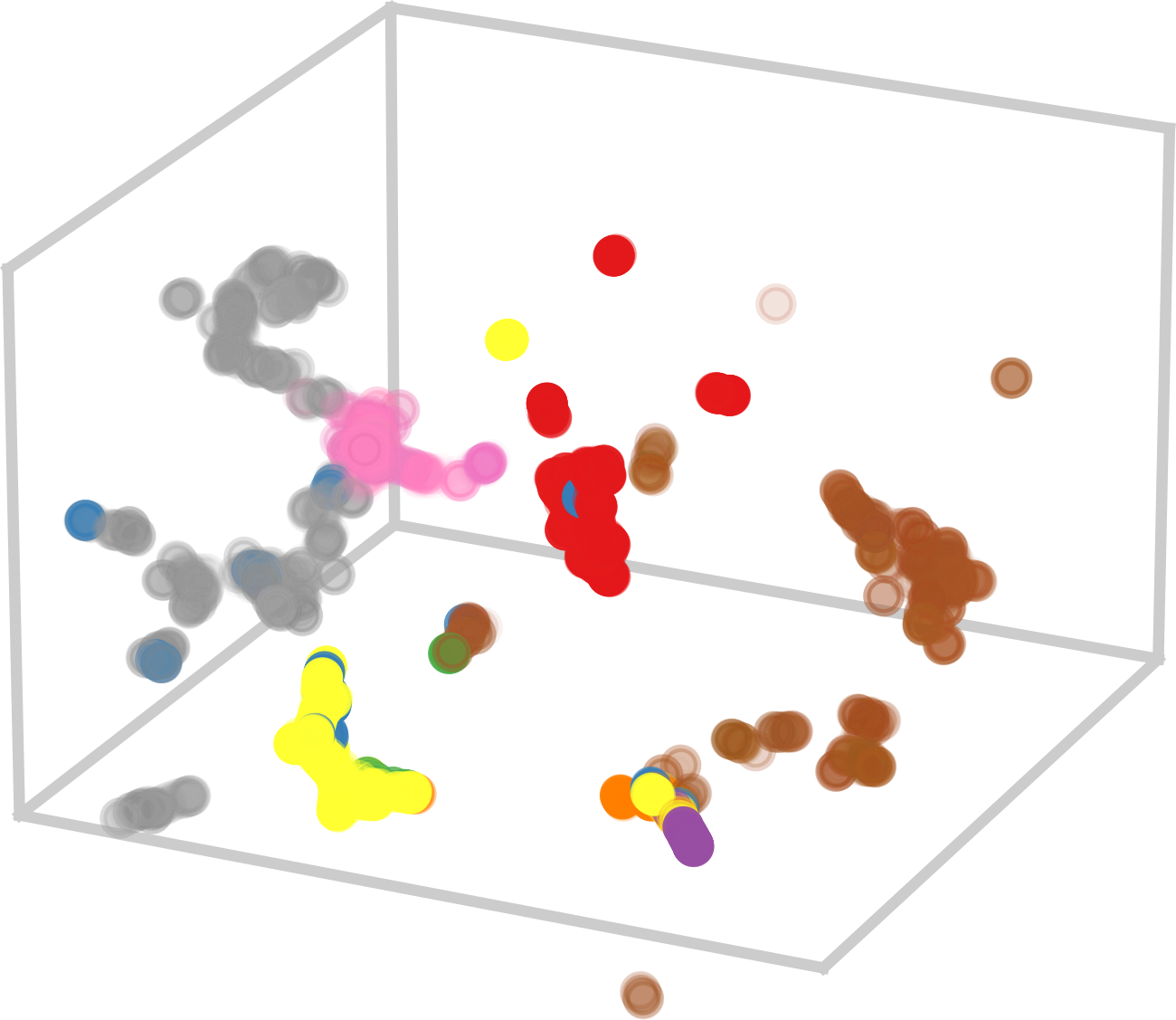

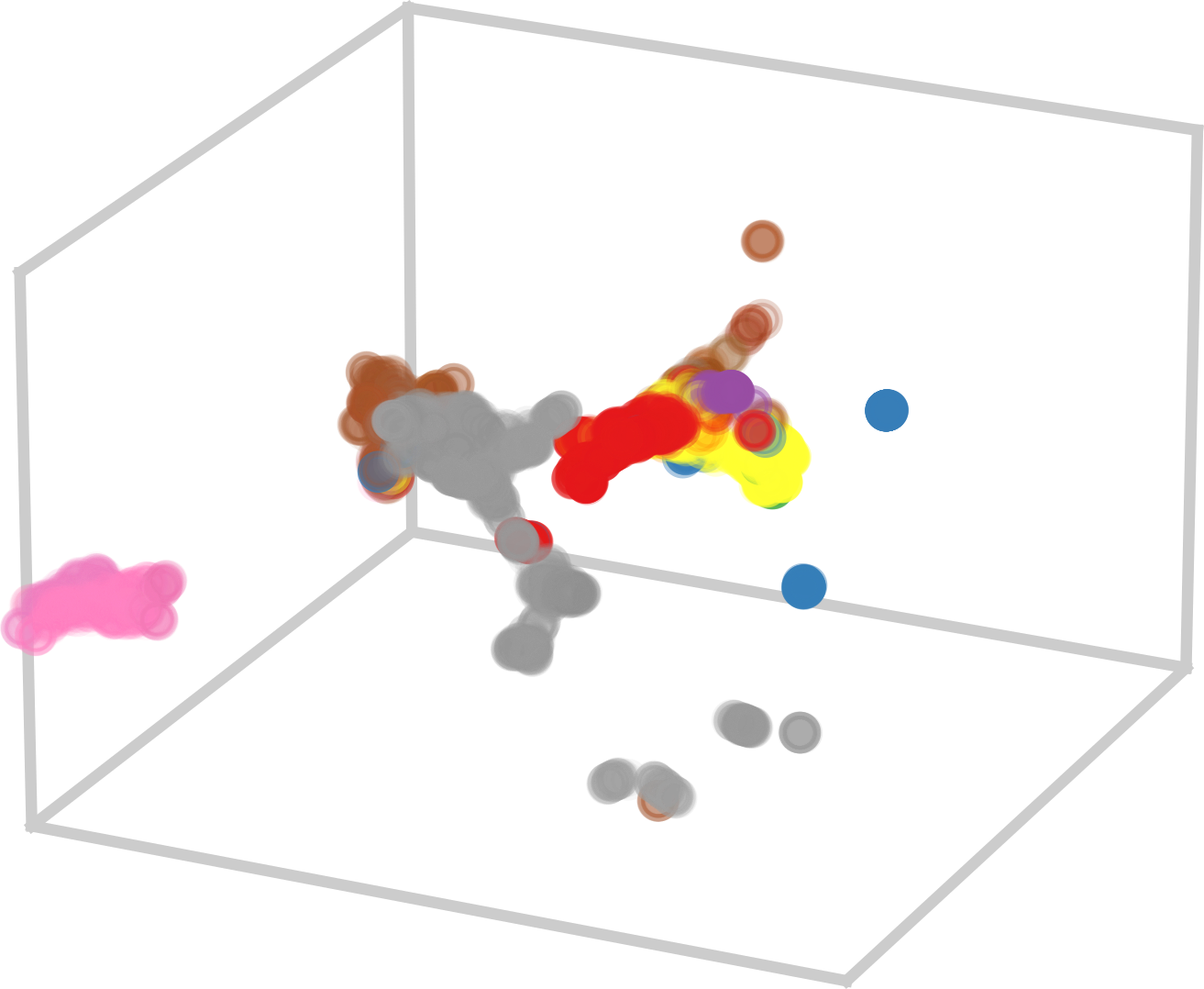

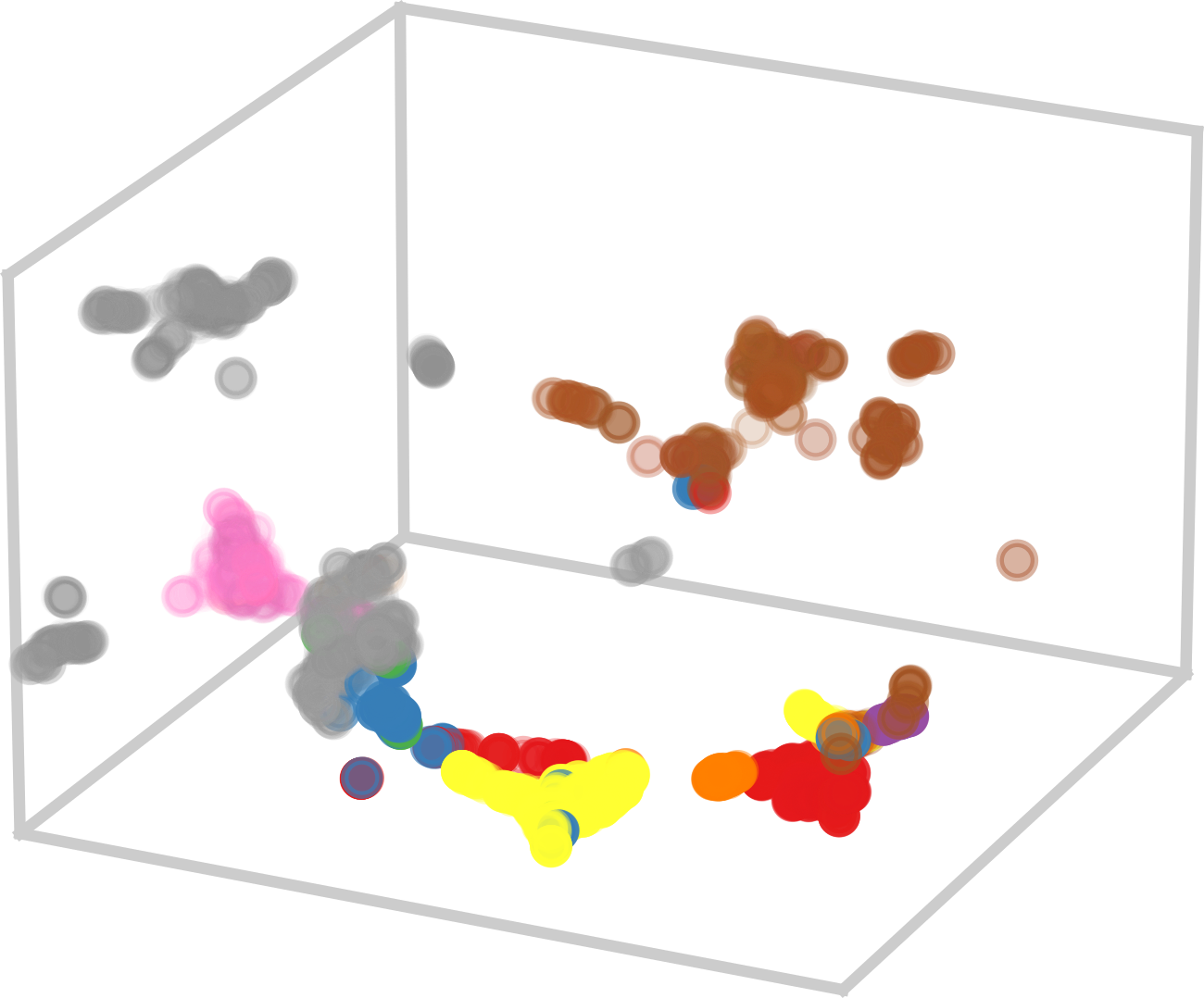

Projection of RNA-LLM embeddings: The UMAP notebook makes use of a UMAP projection to illustrate the high-dimensional embeddings into a 3D space.

-

Performance on increasing homology challenge datasets: The violinplots notebook generates the plots for performance analysis for each RNA-LLM with the different datasets.

-

Cross-family benchmarks: We used the boxplots notebook to assess inter-family performance.

-

Non-canonical and motifs performance: This notebook generates the comparison of non-canonical base pairs and the characterization of performance by structural motifs.