A Discord bot that answers questions based on docs using the ChatGPT API (gpt-3.5-turbo), built on Hasura GraphQL Engine and LangChain.

You can try the bot on our Discord and read more about it in the announcement.

Its features include:

- Asynchronous architecture based on the Hasura events system with rate limiting and retries.

- Performant Discord bot built on Hasura's streaming subscriptions.

- Ability to ingest your own content into the bot.

- A prompt that makes GPT-3 answer with sources while minimizing bogus answers.

Made with ❤️ by Hasura

At Hasura, we believe that we are better off taking care of the plumbing so that our users can focus on their core problems. So when text-davinci-003 came out, we saw an opportunity to answer our users' questions on Discord.

We had the following objectives when creating the bot:

- Use Hasura's docs, blogs, and learning courses as the knowledge source.

- Always list sources when answering.

- Better to say "I don't know" than to give an incorrect answer.

- Capture user feedback and iterate quickly.

Set up pod42-server

You can use the one-click deploy to Hasura Cloud to get started quickly:

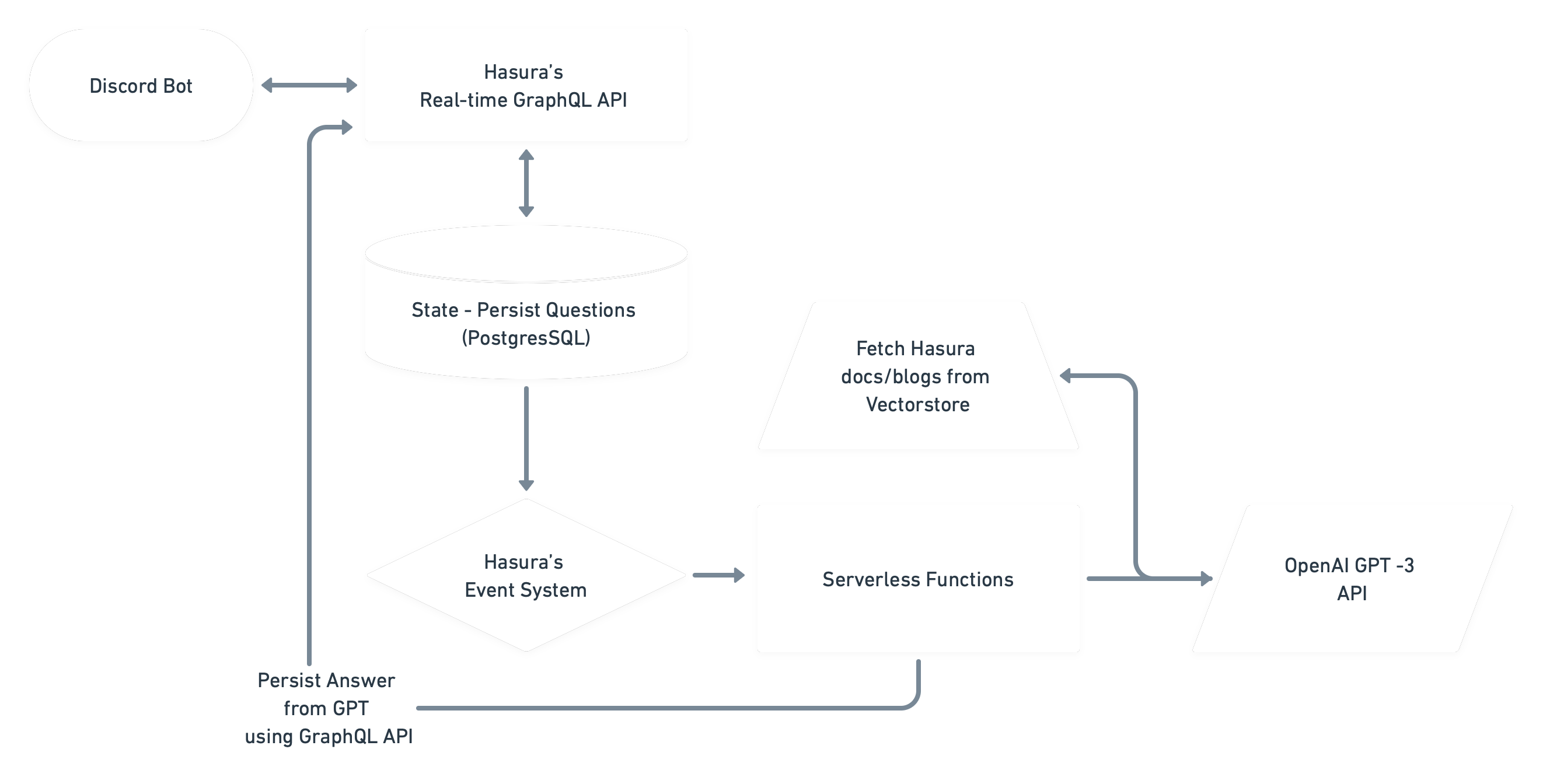

Pod42 is based on the 3-factor architecture:

- Uses Hasura's real-time GraphQL API.

- Minimal state and code.

- Instant feedback.

- Easily scalable.

Discord bot tasks:

- Collect questions from users and persist them via Hasura's GraphQL API.

- Listen for answers in real-time using subscriptions.
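As a rough sketch of the bot's two GraphQL operations, the Python below builds the request bodies for inserting a question and for streaming answers back. The `question` and `answer` tables, their columns, and the operation names are hypothetical placeholders, not Pod42's actual schema. Sending the mutation over HTTP and running the subscription through a WebSocket GraphQL client are left out.

```python
import json
from typing import Any

# Hypothetical schema: a `question` table (id, text, author_id, channel_id)
# and an `answer` table (id, question_id, text). Adjust to your own metadata.
INSERT_QUESTION = """
mutation InsertQuestion($text: String!, $author_id: String!, $channel_id: String!) {
  insert_question_one(object: {text: $text, author_id: $author_id, channel_id: $channel_id}) {
    id
  }
}
"""

# Streaming subscription: pushes answer rows whose id is after the cursor,
# in ascending order, at most `batch_size` rows per message.
ANSWER_STREAM = """
subscription AnswerStream($after_id: Int!) {
  answer_stream(batch_size: 10, cursor: {initial_value: {id: $after_id}, ordering: ASC}) {
    id
    question_id
    text
  }
}
"""


def graphql_body(query: str, variables: dict[str, Any]) -> str:
    """Serialize a standard GraphQL request body ({"query", "variables"})."""
    return json.dumps({"query": query, "variables": variables})


def question_body(text: str, author_id: str, channel_id: str) -> str:
    """Request body for persisting a question asked on Discord."""
    return graphql_body(
        INSERT_QUESTION,
        {"text": text, "author_id": author_id, "channel_id": channel_id},
    )
```

Hasura generates `insert_<table>_one` mutations and `<table>_stream` streaming subscription fields for tracked tables, so the bot itself stays thin: it writes a row and reacts to whatever the subscription pushes back.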

Hasura GraphQL Engine:

- Acts as a completely asynchronous orchestrator using event triggers and subscriptions.

- Event triggers handle retries and rate limiting for calls to the webhook.

- Subscriptions let us deliver answers to the Discord bot instantly.

Hasura tasks:

- Trigger the workflow when a new question arrives.

- When the answer arrives, notify the clients through a subscription.
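The webhook behind the event trigger can be sketched as a pure function. This assumes the standard Hasura event trigger payload (`event.op` and `event.data.new`) and a hypothetical `answer` callback that generates and persists the answer; it is an illustration, not Pod42's actual handler, and wiring it to an HTTP framework is omitted. The detail that matters is the returned status: Hasura treats any non-2xx response as a failed delivery and retries it according to the trigger's retry settings.

```python
from typing import Any, Callable


def handle_question_event(
    payload: dict[str, Any],
    answer: Callable[[int, str], None],  # hypothetical: generate + persist an answer
) -> tuple[int, dict[str, Any]]:
    """Process one event trigger delivery and return (HTTP status, JSON body)."""
    event = payload.get("event", {})
    if event.get("op") != "INSERT":
        # Only newly inserted questions start the workflow; acknowledge the rest.
        return 200, {"skipped": True}

    row = event.get("data", {}).get("new") or {}
    if "id" not in row or "text" not in row:
        # A malformed row will not improve on retry, so acknowledge it.
        return 200, {"skipped": True, "reason": "missing id or text"}

    try:
        answer(row["id"], row["text"])
    except Exception as exc:  # e.g. an OpenAI rate limit or timeout
        # Non-2xx status: Hasura will redeliver the event later.
        return 500, {"error": str(exc)}
    return 200, {"question_id": row["id"]}
```

Because failures are reported through the status code, retry and backoff policy lives in the trigger configuration rather than in the server code.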

pod42-server:

- Stateless, so it can easily be deployed as a function in the cloud.

pod42-server tasks:

- Fetch the top-K related doc excerpts from the vector store.

- Combine them into one document and send it, along with the question, to OpenAI.

- Persist the answer using Hasura's GraphQL API.
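Here is a minimal, dependency-free sketch of the first two steps. A brute-force cosine-similarity search stands in for the real vector store, and the embeddings are assumed to be computed elsewhere (for example with an OpenAI embeddings model). The `Excerpt` type and the `Content:`/`Source:` layout are illustrative assumptions, not Pod42's LangChain setup.

```python
import math
from dataclasses import dataclass


@dataclass
class Excerpt:
    text: str
    source: str  # URL of the doc page the excerpt came from
    embedding: list[float]


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query_embedding: list[float], store: list[Excerpt], k: int = 3) -> list[Excerpt]:
    """Return the k excerpts most similar to the query (exhaustive search)."""
    ranked = sorted(store, key=lambda e: cosine(query_embedding, e.embedding), reverse=True)
    return ranked[:k]


def build_context(excerpts: list[Excerpt]) -> str:
    """Combine the excerpts into one document, keeping each excerpt's source."""
    return "\n\n".join(f"Content: {e.text}\nSource: {e.source}" for e in excerpts)
```

Keeping the source next to each excerpt is what makes it possible for the model to list its sources in the answer.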

For Hasura's use case, we want to emphasize the correctness of the answers: it's better for us if Pod42 says "I don't know" than if it bluffs an answer.

We found that gpt-3.5-turbo does much better than text-davinci-003 in that regard. It is also more verbose, but many new users like the extra detail, and its latency was ~60% lower.

We also found that, at the moment, passing the information as part of the user role message in the prompt is more effective than passing it in the system role message.
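For illustration, the function below builds a Chat Completions message list that puts the retrieved context in the user role. It uses the `role`/`content` dictionaries accepted by OpenAI's chat API for gpt-3.5-turbo. The prompt wording is a hypothetical example, not Pod42's actual prompt. The point is that the instructions and excerpts go in the `user` message, while the `system` message stays short.

```python
# Hypothetical prompt text; tune it for your own docs.
SYSTEM_PROMPT = "You are a helpful assistant that answers questions about Hasura."

INSTRUCTIONS = (
    "Answer the question using only the excerpts below, and list the sources you used. "
    "If the excerpts do not contain the answer, reply \"I don't know\"."
)


def build_messages(context: str, question: str) -> list[dict[str, str]]:
    """Chat messages with the retrieved context carried in the user role."""
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": f"{INSTRUCTIONS}\n\n{context}\n\nQuestion: {question}"},
    ]
```

The resulting list can be passed as the `messages` argument of a chat completion request with `model="gpt-3.5-turbo"`.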

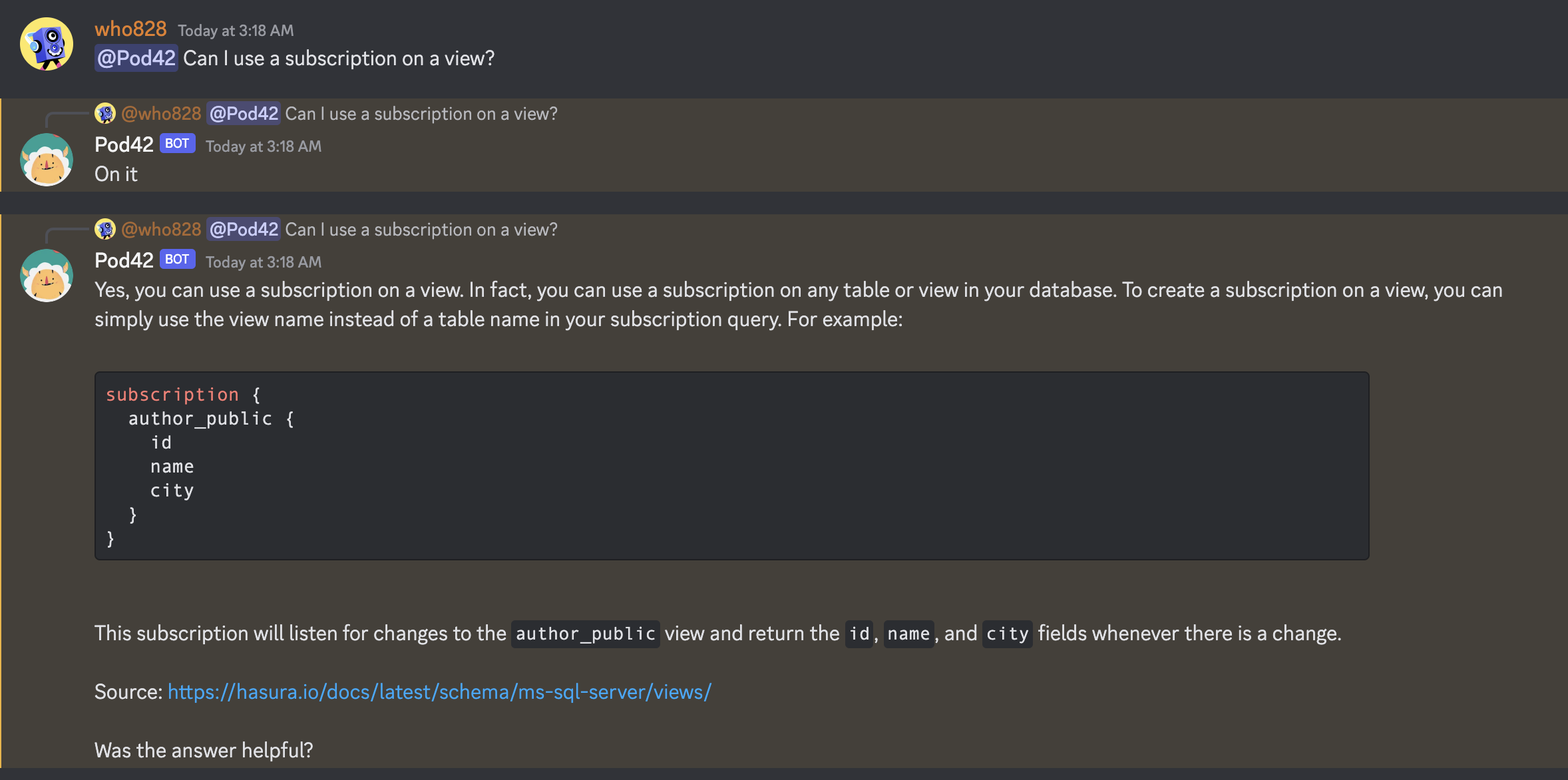

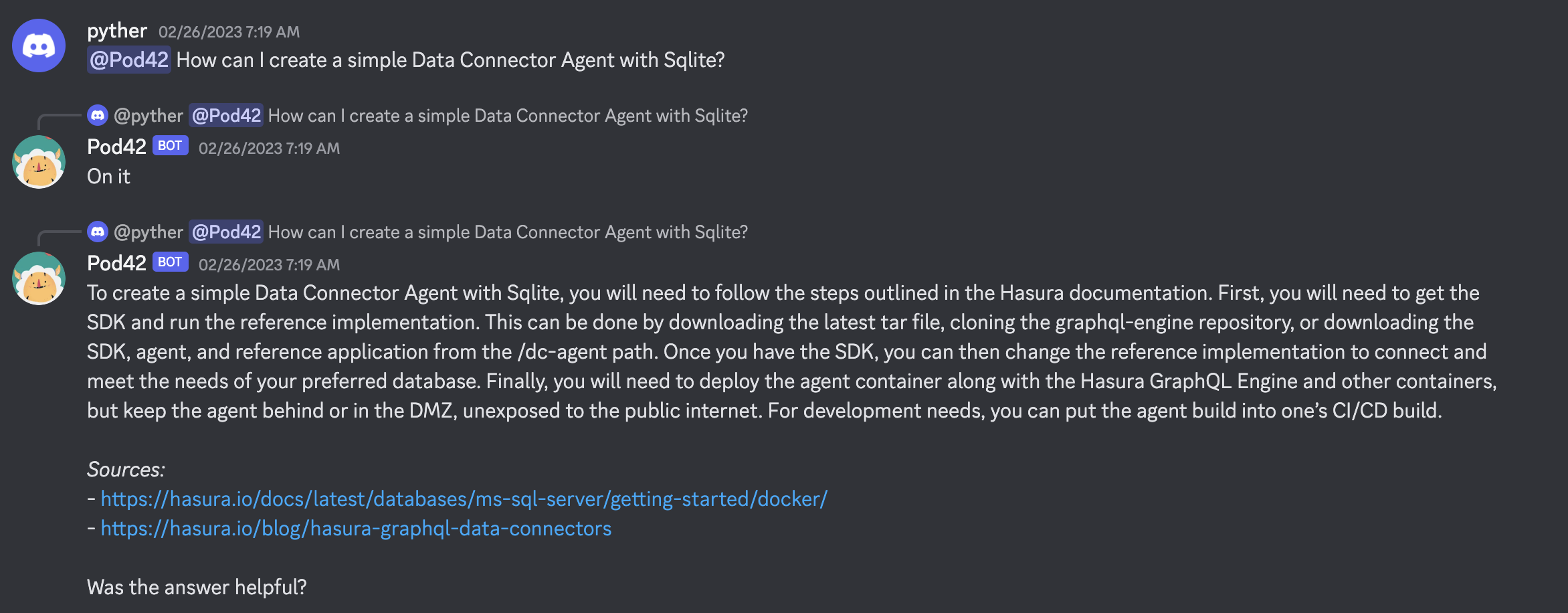

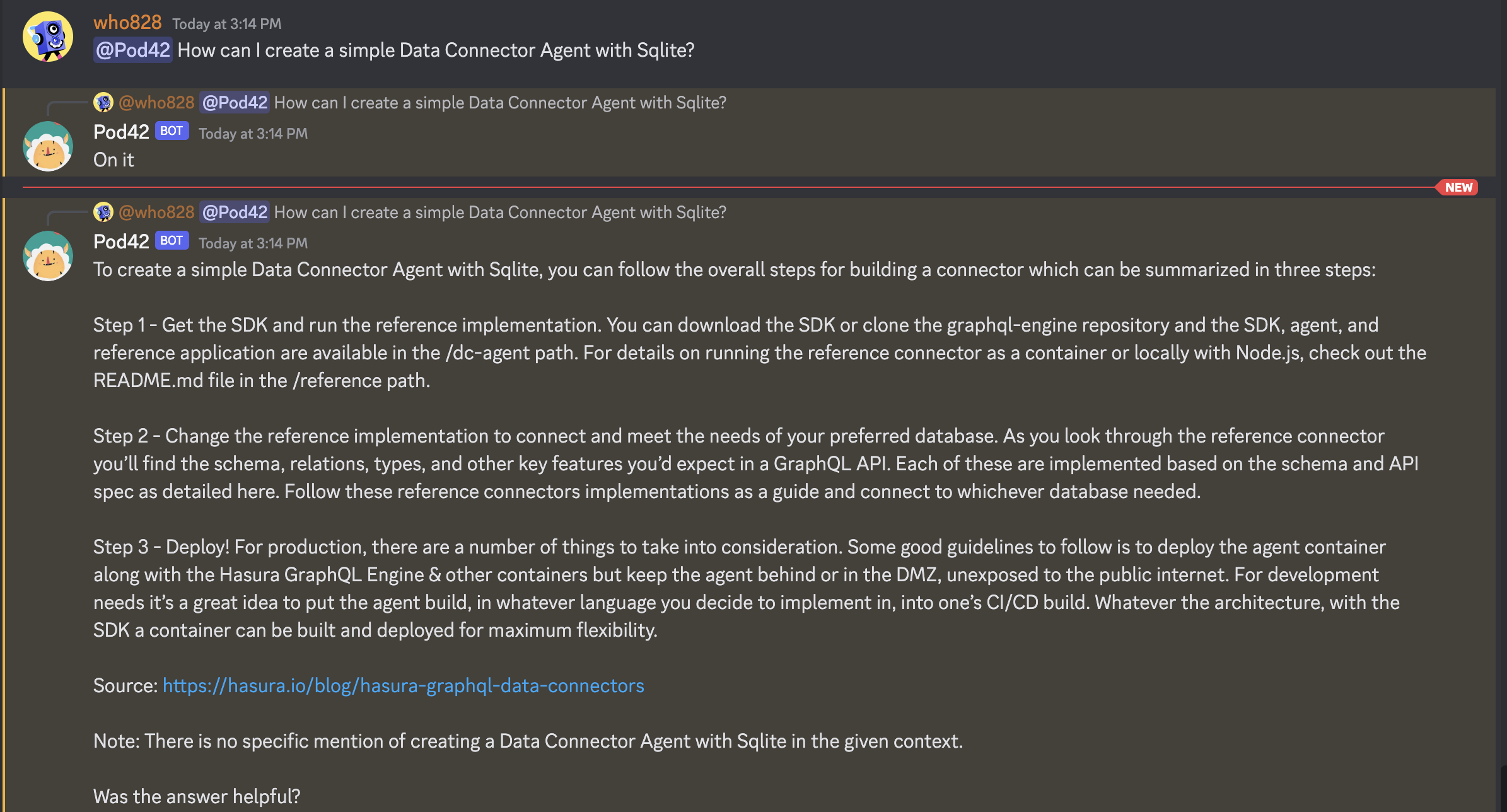

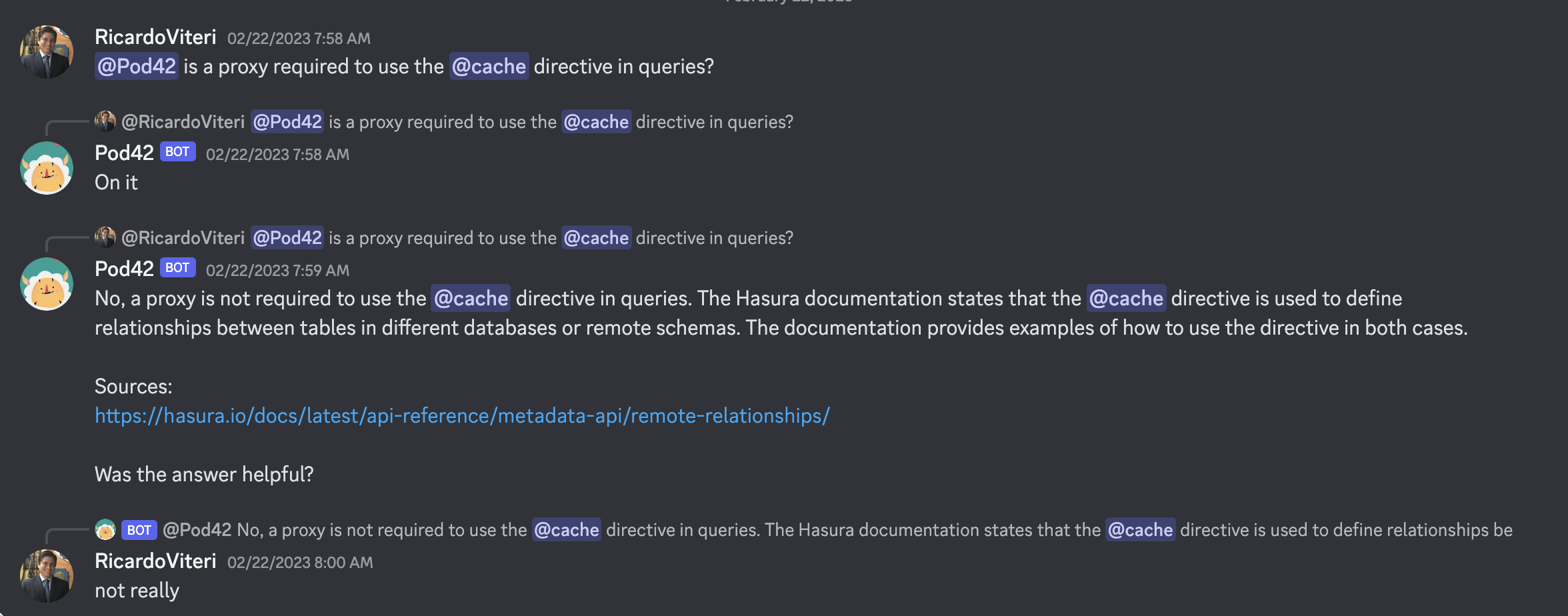

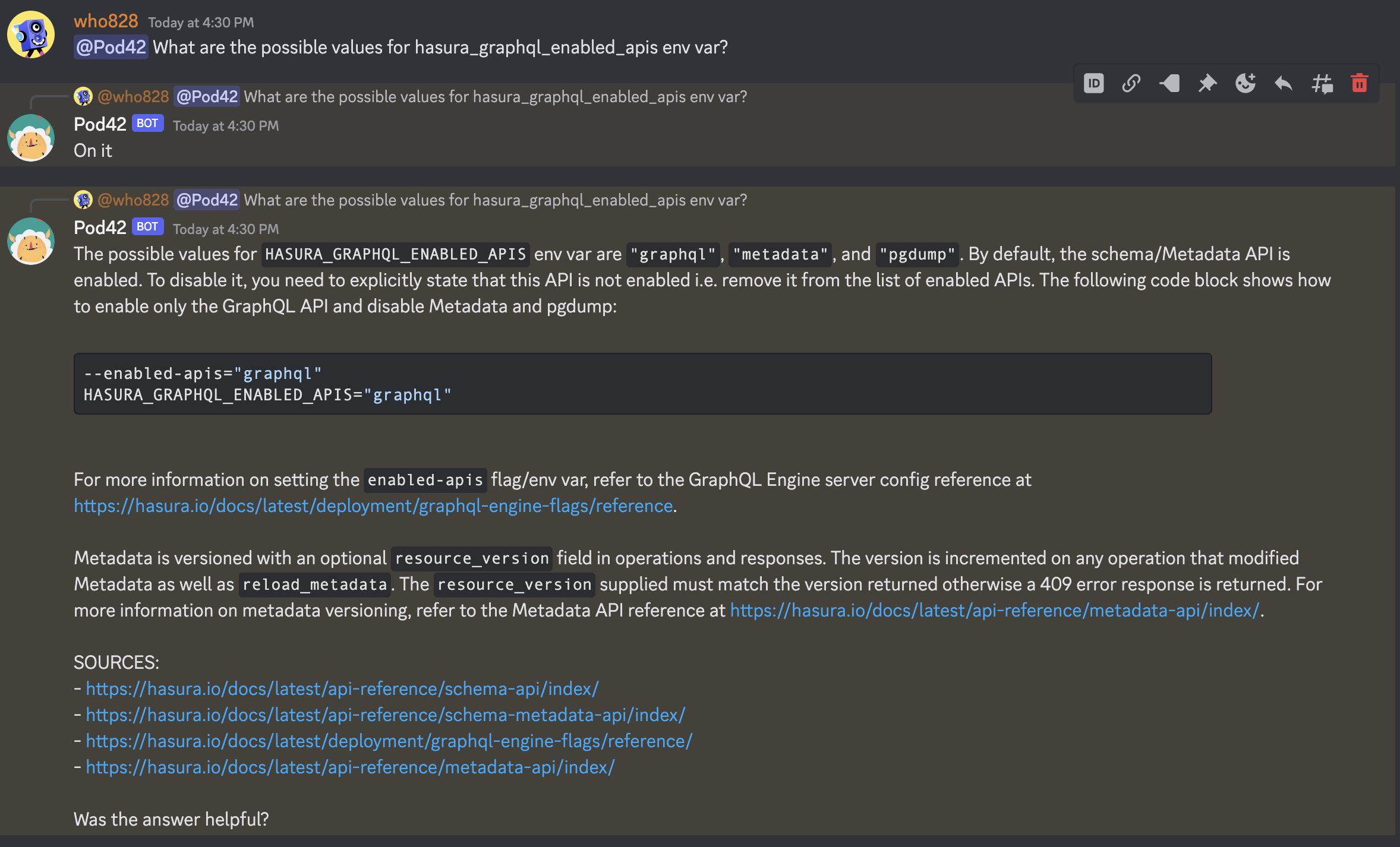

All the examples use the same prompt and vector store data.

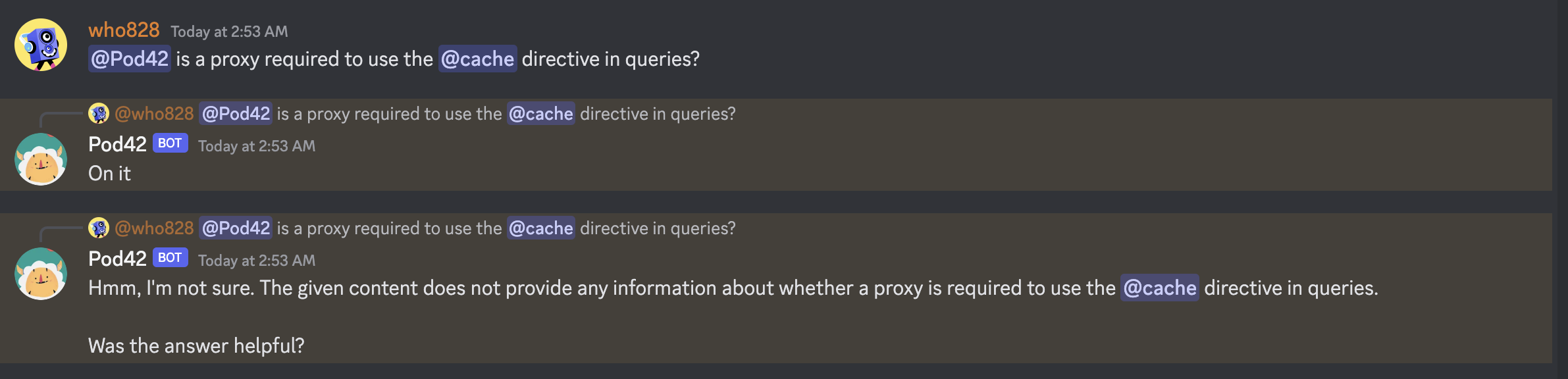

The answer above is entirely false: the model misunderstood the question because Discord passed a user ID instead of the text @Cache.
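One way to avoid this is to turn mention tokens back into readable text before the question reaches the model. Discord encodes a user mention in the raw message content as `<@USER_ID>`, and older clients may send `<@!USER_ID>`. The sketch below assumes you already have a mapping from user IDs to display names, for example one built from the message's mentioned users. It illustrates the idea and is not necessarily how Pod42 handles it. If the bot uses discord.py, `Message.clean_content` performs a similar conversion out of the box.

```python
import re

# Raw Discord user-mention tokens: <@123> or the legacy nickname form <@!123>.
USER_MENTION = re.compile(r"<@!?(\d+)>")


def resolve_mentions(content: str, names: dict[str, str]) -> str:
    """Replace user-mention tokens with @name; unknown IDs are left untouched."""

    def replace(match: re.Match) -> str:
        user_id = match.group(1)
        return f"@{names[user_id]}" if user_id in names else match.group(0)

    return USER_MENTION.sub(replace, content)
```

For example, `resolve_mentions("How does <@123> work?", {"123": "Cache"})` returns `"How does @Cache work?"`.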

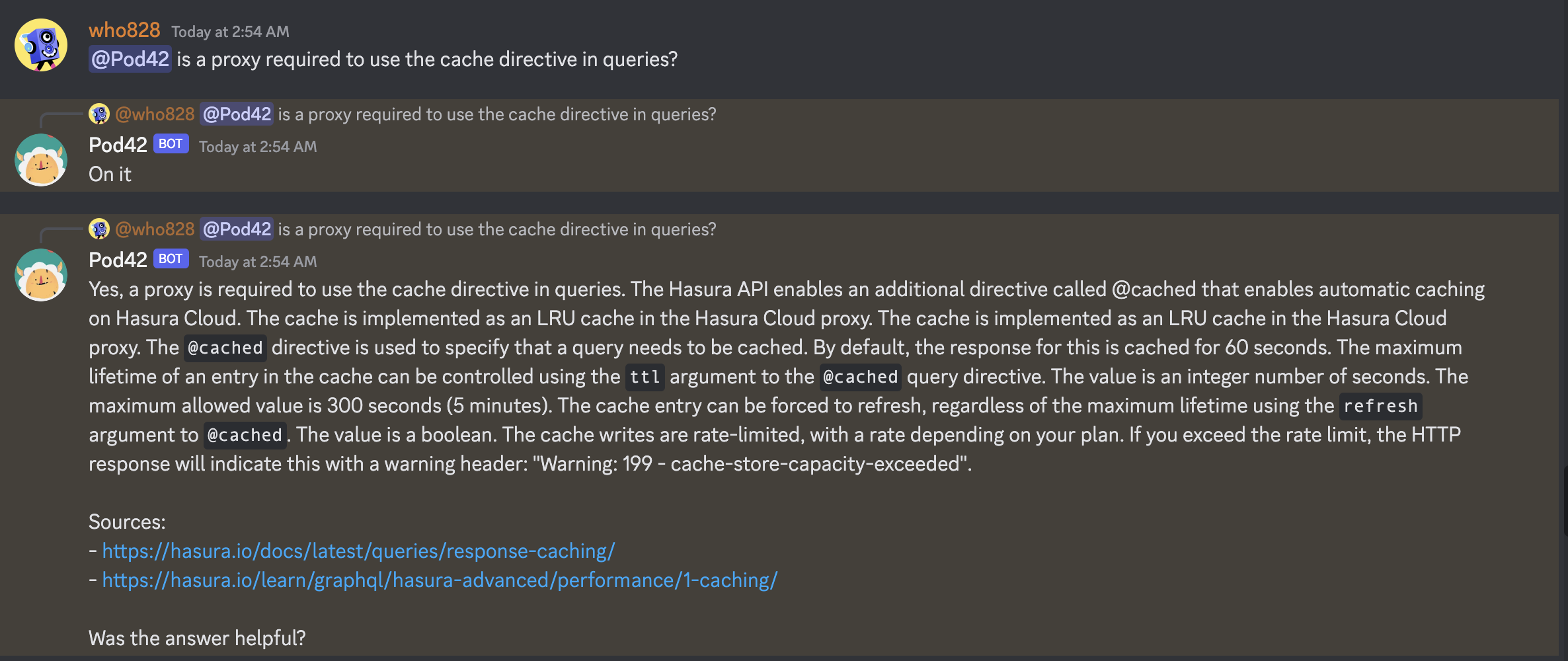

The answer above is much better: it may prompt the user to rephrase the question, at which point you get the following outcome.

Maintained with ❤️ by Hasura