Meta released Llama2 as an open-source LLM. This repository uses it for question answering over a PDF, Word, or CSV file instead of relying on the ChatGPT API.

There are two files: download_version.py and using_llama.py

- In the former, a quantized Llama2 model is downloaded and run using CTransformers and LangChain

- In the latter, a Llama2 model is loaded from the Meta repository and implemented as HuggingFaceLLM using the llama_index framework

- Upload document

- Create embeddings

- Store in vector store (FAISS/ SimpleDirectoryReader)

- User query

- Create embeddings

- Fetch documents

- Send this to Llama
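The steps above can be sketched end to end in plain Python. This is only an illustration of the retrieve-then-prompt flow, not the repository's code: the word-count `embed` function stands in for the sentence-transformer embeddings, `ToyVectorStore` stands in for FAISS, and all names and sample chunks are hypothetical. The final prompt is what would be sent to Llama2.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts (stand-in for a sentence-transformer)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class ToyVectorStore:
    """In-memory list of (embedding, chunk) pairs (stand-in for FAISS)."""

    def __init__(self, chunks):
        # "Create embeddings" and "Store in vector store"
        self.entries = [(embed(c), c) for c in chunks]

    def search(self, query: str, k: int = 2):
        # Embed the user query, then fetch the k most similar chunks
        q = embed(query)
        ranked = sorted(self.entries, key=lambda e: cosine(q, e[0]), reverse=True)
        return [chunk for _, chunk in ranked[:k]]


def build_prompt(question: str, context_chunks) -> str:
    """Combine the fetched chunks and the question into one prompt for the LLM."""
    context = "\n".join(f"- {c}" for c in context_chunks)
    return (
        "Use the context to answer.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


# Hypothetical document chunks, as if split from an uploaded file
chunks = [
    "Invoices are due within 30 days of the billing date.",
    "The office is closed on public holidays.",
    "Late invoices incur a 2 percent monthly fee.",
]
store = ToyVectorStore(chunks)
question = "When are invoices due?"
top_chunks = store.search(question, k=2)
prompt = build_prompt(question, top_chunks)
print(prompt)
```

In a real run the similarity search would operate on dense vectors, so the ranking can differ from this word-overlap approximation.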

- LangChain (designing prompts, history retention, and chains)

- llama_index (for HuggingFace implementation)

- FAISS (vector store)

- Transformer for embeddings (HuggingFace Sentence Transformer)

- Llama2 (serves as LLM)

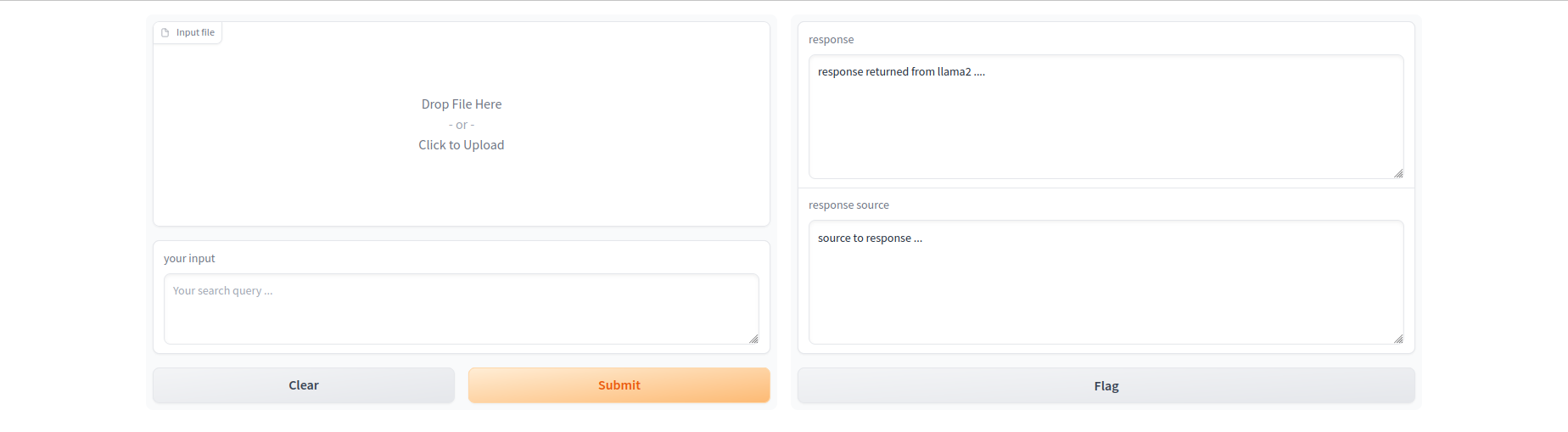

- Gradio (UI)

pip install -r requirements.txt

To use a downloaded, quantized Llama2 model (download_version.py):

- Download a Llama2 model from https://huggingface.co/TheBloke/Llama-2-7B-Chat-GGML/tree/main

- Update the model name on line 29:

self.llama_model = "<'downloaded_model_name'>"

- Run the script:

python3 download_version.py

- Upload a .pdf/.csv/.docx file and type in your question on the Gradio UI

Note: many quantized models are available; choose one based on its model card
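For orientation, loading a downloaded GGML file through LangChain's `CTransformers` wrapper might look like the sketch below. It is an assumption about how such a loader could be written, not a copy of download_version.py: the helper names, the `max_new_tokens` and `temperature` defaults, and the file path are hypothetical, and newer LangChain releases expose the class from `langchain_community.llms` instead.

```python
from pathlib import Path


def ctransformers_kwargs(model_path: str, max_new_tokens: int = 256,
                         temperature: float = 0.1) -> dict:
    """Build keyword arguments for LangChain's CTransformers wrapper."""
    return {
        "model": str(Path(model_path)),   # local path to the downloaded model file
        "model_type": "llama",            # tells ctransformers which architecture to use
        "config": {"max_new_tokens": max_new_tokens, "temperature": temperature},
    }


def load_quantized_llama(model_path: str):
    """Load the quantized model; needs `langchain` and `ctransformers` installed."""
    if not Path(model_path).is_file():
        raise FileNotFoundError(f"Model file not found: {model_path}")
    # Imported lazily because it is a heavy, optional dependency.
    # On newer LangChain versions: from langchain_community.llms import CTransformers
    from langchain.llms import CTransformers
    return CTransformers(**ctransformers_kwargs(model_path))
```

Checking the path before importing the library gives a clear error when the model name on line 29 does not match the downloaded file.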

To use Llama as HuggingFaceLLM (using_llama.py):

- Get access to meta-llama

- Create a HuggingFace access token to access the repo

- Shortlist any Llama2 model offered, and update line 28:

self.model_name = "<'llama2_model_name'>"

- Export the token and run the script:

export HF_KEY="<'huggingface_access_token'>"

python3 using_llama.py

- Upload a .pdf/.csv/.docx file and type in your question on the Gradio UI

Note: the currently implemented model is Llama-2-7b-chat-hf
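Because the script depends on the `HF_KEY` environment variable, a small guard can fail early with a readable message when the variable is missing. The sketch below is a hypothetical helper, not part of using_llama.py; only the variable name `HF_KEY` comes from the steps above.

```python
import os


def read_hf_token(env=os.environ, var_name: str = "HF_KEY") -> str:
    """Return the HuggingFace access token from the environment, or raise."""
    token = env.get(var_name, "").strip()
    if not token:
        raise RuntimeError(
            f"{var_name} is not set; export it before running the script"
        )
    return token
```

Calling `read_hf_token()` at startup surfaces a missing token before the model download begins, rather than as an authentication error partway through.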

A word of caution: this implementation is slow and memory-intensive