Attention: The code in this repository is intended for experimental use only and is not fully tested, documented, or supported by SingleStore. Visit the SingleStore Forums to ask questions about this repository.

This sample app demonstrates using Geography data with SingleStore.

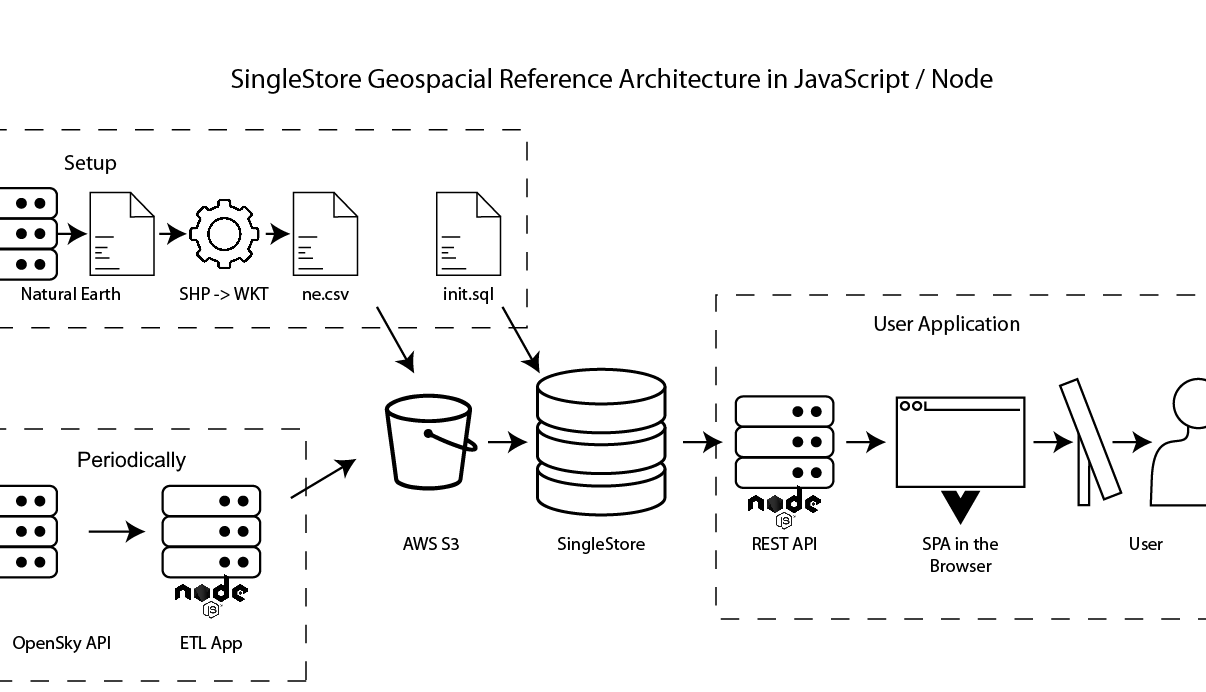

This application is broken into three main groups:

- Setup: these steps are done once to create tables and ingest pipelines in SingleStore, and to load country data from a CSV file.

- Periodically: data is queried from the OpenSky API and written to an S3 bucket. A SingleStore ingest pipeline automatically loads this data as soon as the S3 file is created.

- User Application: an Express API queries data and surfaces it to a Single Page Application (SPA) written in Vue.
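To make the periodic step concrete, here is a minimal sketch of how one OpenSky response could be turned into rows carrying a WKT point. The array positions follow the state-vector layout in the OpenSky REST documentation. The function names and the row shape (`load_date`, `position`, and so on) are hypothetical and are not taken from this repository's data-load app or table schema.

```javascript
// Positions of the fields we use inside one OpenSky state vector (an array).
const ICAO24 = 0;
const CALLSIGN = 1;
const ORIGIN_COUNTRY = 2;
const LONGITUDE = 5;
const LATITUDE = 6;
const BARO_ALTITUDE = 7;
const ON_GROUND = 8;

// Convert one state vector into a row object (hypothetical shape).
// Returns null when the aircraft has no known position.
function stateToRow(state, loadTime) {
  const lon = state[LONGITUDE];
  const lat = state[LATITUDE];
  if (lon === null || lon === undefined || lat === null || lat === undefined) {
    return null;
  }
  return {
    load_date: loadTime,
    icao24: state[ICAO24],
    // Callsigns are padded with spaces and can be null.
    callsign: (state[CALLSIGN] || "").trim(),
    origin_country: state[ORIGIN_COUNTRY],
    // WKT points list longitude first, then latitude.
    position: `POINT(${lon} ${lat})`,
    altitude: state[BARO_ALTITUDE],
    on_ground: state[ON_GROUND],
  };
}

// Convert a whole response, skipping aircraft without a position.
// `states` can be null when no aircraft are reported.
function statesToRows(response) {
  return (response.states || [])
    .map((state) => stateToRow(state, response.time))
    .filter((row) => row !== null);
}
```

Skipping states without coordinates keeps rows that cannot be drawn on the map out of the table. Whether the real loader does the same is not stated here.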

Choose one of the installation options from the sections below:

- Managed Service: a fully managed database in the cloud

- Self-Managed with Containers: run the data stores and application on your local development machine

- Sign up for SingleStore Managed Service.

- Load data into AWS S3:

  - Create an S3 bucket for `countries`. From the `s3-data/countries` folder, load `natural_earth_countries_110m.csv` into the S3 bucket. This file includes polygon data for country boundaries in WKT format. The data was downloaded from https://www.naturalearthdata.com/downloads/ and converted from SHP to WKT.
  - Create an S3 bucket named `flights`. Flight data from https://opensky-network.org/apidoc/rest.html will be loaded here by the data-load app.

- Open `init.sql` and adjust the S3 credentials to match your S3 bucket in both queries.

  - Remove `, "endpoint_url":"http://minio:9000/"`. `"endpoint_url"` is only needed if you're using an S3-compatible blob store.
  - Set `aws_access_key_id` and `aws_secret_access_key` to match your AWS bucket.
  - Set `region` to your AWS region.
  - If you named your buckets differently or you have a folder inside your bucket, you may need to change the bucket name to the bucket name plus the folder name. For example, for a bucket named `plane-app` with a folder inside named `countries`, change the bucket from `countries` to `plane-app/countries`.

- Spin up a SingleStore Managed Service trial cluster.

- Copy `init.sql` into the query editor and run all queries to create the two tables and start the two data ingest pipelines.

- Clone this GitHub repository or download the zip file and unzip it.

-

Adjust connection details in

docker-compose-managed-service.yamlto point to your managed service cluster and S3 bucket.Set

S3_REGION,S3_BUCKET,S3_ACCESSKEY, andS3_SECRETKEYto match your AWS S3 details for the flights bucket.If you have a folder inside your bucket, set

S3_FOLDER. E.g. if I have a bucket namedplanes-appand a folder inside namedflights, thenS3_BUCKET=planes-appandS3_FOLDER=flights. Unfortunately we can't use the shorthand'planes-app/flights'used by piplines.Set

SINGLESTORE_HOST,SINGLESTORE_USER, andSINGLESTORE_PASSWORDto the SingleStore cluster details. -

Run

docker-compose -f docker-compose-managed-service.yaml buildto build all the containers. -

Run

docker-compose -f docker-compose-managed-service.yaml upto start the apps. -

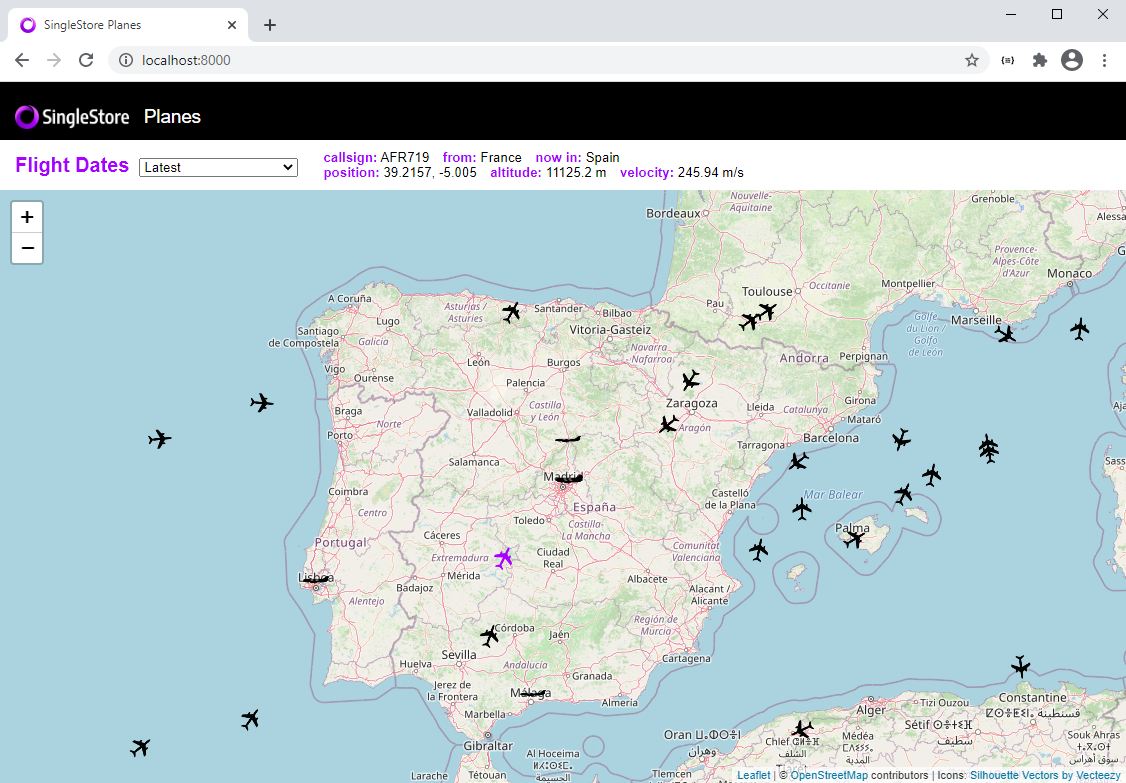

Browse to http://localhost:8000/ and watch the planes move. Click on a plane to see the details, and choose a load date to see historical data.

- When you're done, press Ctrl+C and run `docker-compose -f docker-compose-managed-service.yaml down` to stop all the containers.
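The settings in `docker-compose-managed-service.yaml` are easy to mistype, so a quick check can help before building the containers. The sketch below uses the variable names from the steps above. The `readSettings` function and its validation rules are illustrative assumptions, not code from this repository.

```javascript
// Variable names come from the steps above; the checks are illustrative.
const REQUIRED_SETTINGS = [
  "S3_REGION",
  "S3_BUCKET",
  "S3_ACCESSKEY",
  "S3_SECRETKEY",
  "SINGLESTORE_HOST",
  "SINGLESTORE_USER",
  "SINGLESTORE_PASSWORD",
];

// Validate an environment-like object and return grouped settings.
function readSettings(env) {
  const missing = REQUIRED_SETTINGS.filter((name) => !env[name]);
  if (missing.length > 0) {
    throw new Error(`Missing settings: ${missing.join(", ")}`);
  }
  // The app settings keep bucket and folder separate, unlike pipelines.
  if (env.S3_BUCKET.includes("/")) {
    throw new Error("S3_BUCKET must be a bare bucket name; use S3_FOLDER for the folder");
  }
  // S3_FOLDER is optional; strip stray leading and trailing slashes.
  const folder = (env.S3_FOLDER || "").replace(/^\/+|\/+$/g, "");
  return {
    s3: {
      region: env.S3_REGION,
      bucket: env.S3_BUCKET,
      folder,
      accessKey: env.S3_ACCESSKEY,
      secretKey: env.S3_SECRETKEY,
    },
    singlestore: {
      host: env.SINGLESTORE_HOST,
      user: env.SINGLESTORE_USER,
      password: env.SINGLESTORE_PASSWORD,
    },
    // The bucket/folder shorthand that pipelines accept in init.sql.
    pipelinePath: folder ? `${env.S3_BUCKET}/${folder}` : env.S3_BUCKET,
  };
}
```

Calling `readSettings(process.env)` in a Node.js script would run the check against the current environment. The `pipelinePath` value shows how the same bucket and folder would be written for a pipeline.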

- Sign up for a free SingleStore license. This allows you to run up to 4 nodes with up to 32 GB each for free.

- Grab your license key from the SingleStore portal and set it in `docker-compose.yaml`.

- Install Docker Desktop if it isn't installed already.

- Run `docker-compose up` from your favorite terminal.

  - This spins up a SingleStore cluster, including SingleStore Studio, the web-based SQL query and administration tool.
  - It also spins up MinIO, an S3-compatible blob store.
  - The S3 data is automatically mapped into the MinIO container. Two buckets are created automatically: `countries` and `flights`.
  - The `init.sql` file is run automatically. This creates the `maps` database with a table for `countries` and a table for `flights`. It also creates pipelines to ingest country and flight data from MinIO.
  - It builds and deploys the data loading app that pulls flight data from https://opensky-network.org/apidoc/rest.html and loads it into MinIO every 10 seconds.
  - It builds and starts the Node.js app on http://localhost:8000. This app consists of an Express API that queries the database and a Vue.js app that shows planes on a Leaflet map.

- Browse to http://localhost:8000/ and watch the planes move. Click on a plane to see the details, and choose a load date to see historical data.

- When you're done, press Ctrl+C and run `docker-compose down` to stop all the containers.
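The only difference between the pipeline settings for MinIO and for AWS S3 is the `"endpoint_url"` entry. The sketch below builds both variants as JSON strings. It assumes `init.sql` passes `region` and `endpoint_url` in the pipeline's config JSON and the two access keys in its credentials JSON. The `pipelineSettings` helper and the sample key values are hypothetical.

```javascript
// Build the two JSON strings a pipeline needs to reach a bucket.
// Pass endpointUrl only for an S3-compatible store such as MinIO.
function pipelineSettings({ region, accessKeyId, secretAccessKey, endpointUrl }) {
  const config = { region };
  if (endpointUrl) {
    config.endpoint_url = endpointUrl;
  }
  const credentials = {
    aws_access_key_id: accessKeyId,
    aws_secret_access_key: secretAccessKey,
  };
  return {
    config: JSON.stringify(config),
    credentials: JSON.stringify(credentials),
  };
}

// Local containers: MinIO needs the endpoint (sample keys are placeholders).
const minio = pipelineSettings({
  region: "us-east-1",
  accessKeyId: "EXAMPLE_KEY",
  secretAccessKey: "EXAMPLE_SECRET",
  endpointUrl: "http://minio:9000/",
});

// AWS S3: the same settings with the endpoint left out.
const aws = pipelineSettings({
  region: "us-east-1",
  accessKeyId: "EXAMPLE_KEY",
  secretAccessKey: "EXAMPLE_SECRET",
});
```

Here `minio.config` contains `"endpoint_url":"http://minio:9000/"`, while `aws.config` holds only the region.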

MIT