A PyTorch implementation of *Perceptual Losses for Real-Time Style Transfer and Super-Resolution*.

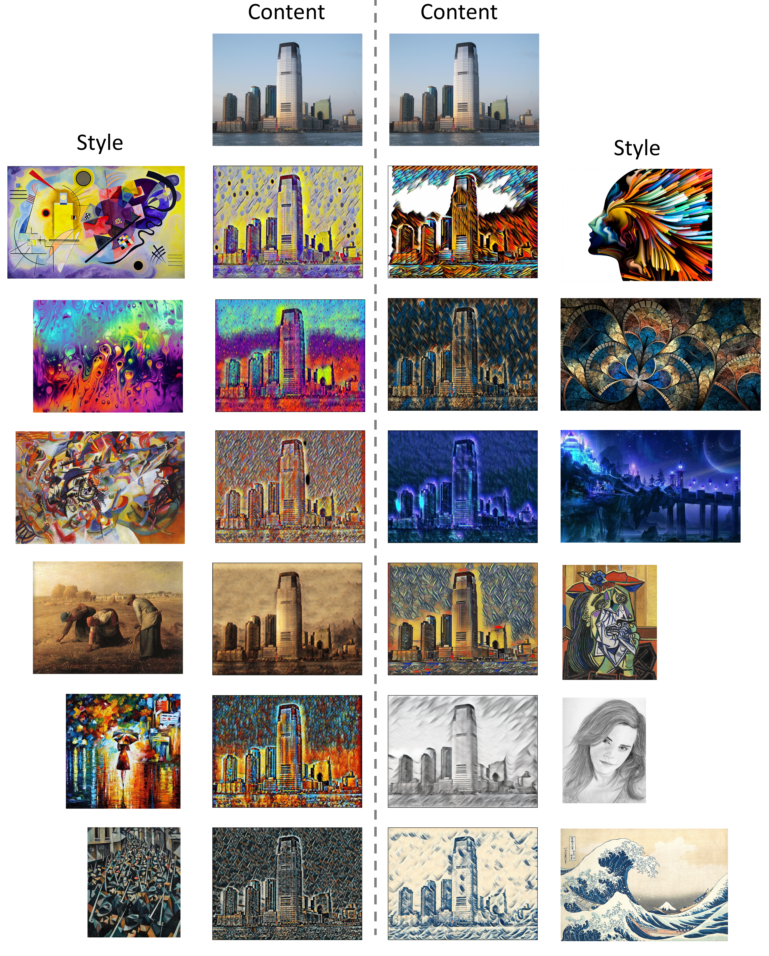

Larger result images are in `result/city`.

Training:

```
python train.py --trn_dir='data/train' --style_path='style/abstract_1.png' --lambda_s=500000
```

Testing:

```
python test.py --weight_path='weight/abstract_1.weight' --content_path='content/city.png' --output_path='result/abstract_1.png'
```

The total loss is a weighted sum of the content loss and the style loss:

`loss = lambda_c * content_loss + lambda_s * style_loss`
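A minimal sketch of this weighted loss, assuming the network's features are taken from a few VGG layers (for example relu1_2, relu2_2, relu3_3, relu4_3, with relu2_2 used for content), that the content loss is a feature-space MSE, and that the style loss compares Gram matrices normalized by `C*H*W`. The names `gram_matrix` and `perceptual_loss`, the `content_layer` index, and the default `lambda_c=1.0` are hypothetical illustrations, not this repository's code.

```python
import torch
import torch.nn.functional as F


def gram_matrix(features: torch.Tensor) -> torch.Tensor:
    """Gram matrix of a (B, C, H, W) feature map, normalized by C*H*W (assumed normalization)."""
    b, c, h, w = features.shape
    flat = features.reshape(b, c, h * w)
    # (B, C, HW) @ (B, HW, C) -> (B, C, C): channel-to-channel correlations.
    return torch.bmm(flat, flat.transpose(1, 2)) / (c * h * w)


def perceptual_loss(out_feats, content_feats, style_feats,
                    lambda_c=1.0, lambda_s=1e5, content_layer=1):
    """Hypothetical sketch: lambda_c * content_loss + lambda_s * style_loss.

    Each argument is a list of feature maps, one per VGG layer (assumed layout).
    style_feats may have batch size 1; its Gram matrix is broadcast to the output batch.
    """
    # Content loss: MSE between features at a single (assumed) layer.
    content_loss = F.mse_loss(out_feats[content_layer], content_feats[content_layer])
    # Style loss: MSE between Gram matrices, summed over all layers.
    style_loss = sum(
        F.mse_loss(gram_matrix(o), gram_matrix(s).expand(o.size(0), -1, -1))
        for o, s in zip(out_feats, style_feats)
    )
    return lambda_c * content_loss + lambda_s * style_loss


if __name__ == "__main__":
    torch.manual_seed(0)
    shapes = [(2, 64, 32, 32), (2, 128, 16, 16)]
    out = [torch.rand(s) for s in shapes]
    content = [torch.rand(s) for s in shapes]
    style = [torch.rand(1, *s[1:]) for s in shapes]  # a single style image
    print(perceptual_loss(out, content, style, lambda_s=1e5).item())
```

Because Gram matrices discard spatial layout, the style term only matches feature statistics, while the content term keeps the spatial structure; `lambda_s` sets the balance between the two.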

`lambda_s` has a strong influence on the resulting images. The values I used for each style are listed below.

| Style image | lambda_s |
|---|---|
| abstract_1 | 1e+5 |
| abstract_2 | 1e+5 |
| abstract_3 | 1e+5 |
| abstract_4 | 3e+5 |
| composition | 2e+5 |
| fantasy | 5e+5 |
| monet | 5e+5 |
| picaso | 1e+5 |
| rain_princess | 1e+5 |
| sketch | 5e+5 |
| war | 3e+5 |
| wave | 5e+5 |

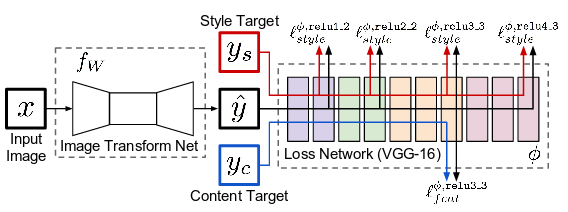

This implementation uses a VGG network pretrained on ImageNet, which is easy to load because torchvision provides it. For the best results, inputs to VGG must be normalized with the same mean and standard deviation that were used to train it. All images (training images, content images, style images, and the outputs of TransformationNet before they go into VGG) are normalized with mean = (0.485, 0.456, 0.406) and std = (0.229, 0.224, 0.225).
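A minimal sketch of this normalization step for a batch of images, assuming the images are float tensors of shape `(B, 3, H, W)` with values already scaled to `[0, 1]` (the range these mean/std values are defined for). The function name `normalize_batch` is hypothetical, not this repository's code.

```python
import torch

# Channel statistics quoted in the text above (RGB order).
IMAGENET_MEAN = (0.485, 0.456, 0.406)
IMAGENET_STD = (0.229, 0.224, 0.225)


def normalize_batch(batch: torch.Tensor) -> torch.Tensor:
    """Hypothetical helper: channel-wise (x - mean) / std for a (B, 3, H, W) batch in [0, 1]."""
    # Build mean/std on the same device and dtype as the input, shaped for broadcasting.
    mean = batch.new_tensor(IMAGENET_MEAN).view(1, 3, 1, 1)
    std = batch.new_tensor(IMAGENET_STD).view(1, 3, 1, 1)
    return (batch - mean) / std


if __name__ == "__main__":
    images = torch.rand(4, 3, 256, 256)  # stand-in for content/style images in [0, 1]
    vgg_input = normalize_batch(images)
    print(vgg_input.shape)
```

Because the TransformationNet output also goes through this step, the same helper would be applied as something like `normalize_batch(transformer(x))` before computing VGG features (`transformer` is a hypothetical name here). For individual image tensors, `torchvision.transforms.Normalize(mean, std)` performs the same per-channel operation.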