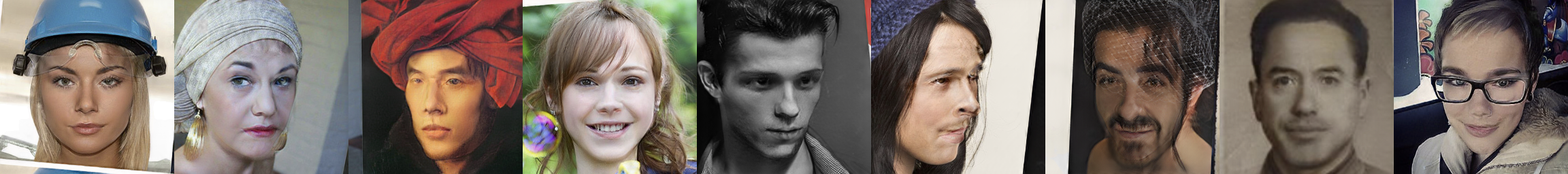

Unofficial implementation of FaceShifter: Towards High Fidelity And Occlusion Aware Face Swapping with PyTorch Lightning. The paper's full pipeline uses two networks, AEI-Net and HEAR-Net. We implement only AEI-Net, which is the main network for face swapping.

You need to download and unzip:

- FFHQ

- CelebA-HQ (Unofficial Download Script)

- VGGFace (Unofficial Download Script)

The preprocessing code is mainly based on Nvidia's FFHQ preprocessing code. You may modify our preprocessing script to use multiprocessing so that the preprocessing step finishes much faster.
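As a rough illustration of the multiprocessing idea, the sketch below fans per-image work out to a pool of worker processes. It is not the code in `preprocess.py`: the names `list_images`, `align_and_save`, and `preprocess_parallel` are hypothetical, and the body of `align_and_save` is a placeholder (a plain file copy) standing in for the real dlib alignment step.

```python
from concurrent.futures import ProcessPoolExecutor
from pathlib import Path

IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg"}


def list_images(root):
    """Return every image file under root (searched recursively), sorted."""
    return sorted(
        p for p in Path(root).rglob("*") if p.suffix.lower() in IMAGE_SUFFIXES
    )


def align_and_save(job):
    """Hypothetical per-image task; takes a (source_path, output_dir) pair."""
    src, output_dir = job
    # Assumes file names are unique across subfolders, otherwise outputs collide.
    dst = Path(output_dir) / Path(src).name
    # Placeholder: copy the bytes. Swap in the per-image alignment logic here.
    dst.write_bytes(Path(src).read_bytes())
    return str(dst)


def preprocess_parallel(root, output_dir, workers=4):
    """Run align_and_save over every image under root in worker processes."""
    Path(output_dir).mkdir(parents=True, exist_ok=True)
    jobs = [(str(p), str(output_dir)) for p in list_images(root)]
    with ProcessPoolExecutor(max_workers=workers) as pool:
        # chunksize batches jobs to reduce inter-process overhead.
        return list(pool.map(align_and_save, jobs, chunksize=16))
```

Call `preprocess_parallel("/DATA", "/RESULT", workers=8)` from under an `if __name__ == "__main__":` guard, since worker processes may re-import the module. A worker count near the number of CPU cores is a reasonable starting point.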

```bash
# build docker image from Dockerfile
docker build -t dlib:0.0 ./preprocess

# run docker container from image
docker run -itd --ipc host -v /PATH_TO_THIS_FOLDER/preprocess:/workspace -v /PATH_TO_THE_DATA:/DATA -v /PATH_TO_SAVE_DATASET:/RESULT --name dlib dlib:0.0

# attach
docker attach dlib

# preprocess with dlib
python preprocess.py --root /DATA --output_dir /RESULT
```

There is a YAML file in the `config` folder. It must be edited to match your training requirements (dataset, metadata, etc.).

- `config/train.yaml`: Configs for training AEI-Net.
  - Fill in the blanks of: `dataset_dir`, `valset_dir`
  - You may want to change: `batch_size` for GPUs other than a 32GB V100, or `chkpt_dir` to save checkpoints on another disk.
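Before starting a long run, it can help to check that the blanks are actually filled in. The sketch below is one possible check, not part of this repository: `find_key` and `unfilled_keys` are hypothetical helpers, and because the nesting of `dataset_dir` and `valset_dir` inside the YAML file is not assumed here, the search looks for the keys at any depth.

```python
REQUIRED_KEYS = ("dataset_dir", "valset_dir")


def find_key(node, key):
    """Yield every value stored under `key` anywhere in nested dicts/lists."""
    if isinstance(node, dict):
        for k, v in node.items():
            if k == key:
                yield v
            yield from find_key(v, key)
    elif isinstance(node, list):
        for item in node:
            yield from find_key(item, key)


def unfilled_keys(config, required=REQUIRED_KEYS):
    """Return the required keys that are absent, None, or an empty string."""
    missing = []
    for key in required:
        values = list(find_key(config, key))
        if not values or any(v is None or v == "" for v in values):
            missing.append(key)
    return missing


# Usage sketch (assumes PyYAML is installed):
#   import yaml
#   with open("config/train.yaml") as f:
#       config = yaml.safe_load(f)
#   print(unfilled_keys(config))
```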

We provide a Dockerfile for easier training environment setup.

```bash
docker build -t faceshifter:0.0 .
docker run -itd --ipc host --gpus all -v /PATH_TO_THIS_FOLDER:/workspace -v /PATH_TO_DATASET:/DATA --name FS faceshifter:0.0
docker attach FS
```

During training, a pre-trained Arcface model is required. We provide our pre-trained Arcface model; you can download it at this link.

To train the AEI-Net, run this command:

```bash
python aei_trainer.py -c <path_to_config_yaml> -g <gpus> -n <run_name>

# example command that might help you understand the arguments:
# train from scratch with name "my_runname"
python aei_trainer.py -c config/train.yaml -g 0 -n my_runname
```

Optionally, you can resume training from a previously saved checkpoint by adding the `-p <checkpoint_path>` argument.
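When resuming often, picking the checkpoint path by hand gets tedious. The sketch below builds the training command and appends `-p` with the most recently modified checkpoint if one exists. It assumes checkpoints are saved as `<chkpt_dir>/<run_name>/*.ckpt`, a layout inferred from the example path `chkpt/my_runname/epoch=0.ckpt`; `latest_checkpoint` and `build_train_command` are hypothetical helpers, not part of this repository.

```python
from pathlib import Path


def latest_checkpoint(chkpt_dir, run_name):
    """Return the most recently modified .ckpt file for a run, or None."""
    candidates = sorted(
        (Path(chkpt_dir) / run_name).glob("*.ckpt"),
        key=lambda p: p.stat().st_mtime,
    )
    return candidates[-1] if candidates else None


def build_train_command(config, gpus, run_name, chkpt_dir="chkpt"):
    """Build the aei_trainer.py argument list, adding -p when resuming."""
    cmd = ["python", "aei_trainer.py", "-c", config, "-g", str(gpus), "-n", run_name]
    ckpt = latest_checkpoint(chkpt_dir, run_name)
    if ckpt is not None:
        cmd += ["-p", str(ckpt)]
    return cmd
```

The returned list can be passed to `subprocess.run(cmd, check=True)`. Selecting by modification time rather than by parsing the epoch number keeps the helper independent of the checkpoint naming scheme.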

Training progress, including loss values and validation output, can be monitored with TensorBoard.

By default, logs are stored in `log`; this can be changed by editing the `log.log_dir` parameter in the config YAML file.

```bash
tensorboard --logdir log --bind_all # Scalars, Images, Hparams, Projector will be shown.
```

To run inference with the AEI-Net, run this command:

```bash
python aei_inference.py --checkpoint_path <path_to_pre_trained_file> --target_image <path_to_target_image_file> --source_image <path_to_source_image_file> --output_path <path_to_output_image_file> --gpu_num <number of gpu>

# example command that might help you understand the arguments:
# run inference with a checkpoint saved by the run "my_runname"
python aei_inference.py --checkpoint_path chkpt/my_runname/epoch=0.ckpt --target_image target.png --source_image source.png --output_path output.png --gpu_num 0
```

We provide a Colab example. You can use it with your own trained weights.

Note that we only implement the AEI-Net, whereas the results in the original paper were generated by AEI-Net and HEAR-Net.

We will soon release FaceShifter in our cloud API service, maum.ai.

Changho Choi @ MINDs Lab, Inc. (changho@mindslab.ai)

```bibtex
@article{li2019faceshifter,
  title={Faceshifter: Towards high fidelity and occlusion aware face swapping},
  author={Li, Lingzhi and Bao, Jianmin and Yang, Hao and Chen, Dong and Wen, Fang},
  journal={arXiv preprint arXiv:1912.13457},
  year={2019}
}
```