Tracking Every Thing in the Wild [ECCV 2022]

This is the official implementation of the TETA metric described in the paper. This repo is an updated version of the original TET repo for the TETA metric.

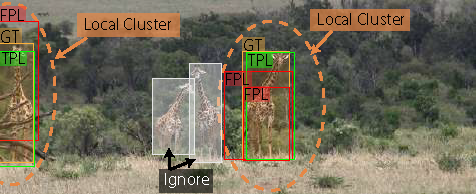

TETA is a new metric for tracking evaluation that breaks tracking measurement into three sub-factors: localization, association, and classification. This allows comprehensive benchmarking of tracking performance even when classification is inaccurate. TETA also deals with the challenging problem of incomplete annotations in large-scale tracking datasets. Instead of using the predicted class labels to group per-class tracking results, we group them by location using local cluster evaluation. We treat each ground truth bounding box of the target class as the anchor of a cluster and group the predictions inside each cluster to evaluate localization and association performance. These local clusters let us evaluate tracks even when the class prediction is wrong.
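To make the local cluster idea concrete, here is a minimal sketch of how predictions could be grouped around ground truth anchors by overlap alone, without looking at predicted class labels. It is an illustration, not the code in this repo: the 0.5 IoU threshold, the `(x1, y1, x2, y2)` box layout, and the rule that each prediction joins only its single best-overlapping anchor are all assumptions, and the actual TETA implementation may resolve thresholds and overlapping clusters differently.

```python
from typing import Dict, List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), assumed layout


def box_iou(a: Box, b: Box) -> float:
    """IoU of two axis-aligned boxes in (x1, y1, x2, y2) format."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def build_local_clusters(
    gt_boxes: Sequence[Box], pred_boxes: Sequence[Box], iou_thr: float = 0.5
) -> Dict[int, List[int]]:
    """Group prediction indices into clusters anchored at GT boxes.

    Each prediction joins the cluster of the GT box it overlaps most, if that
    overlap is at least iou_thr (assumed value). Predicted class labels are
    never consulted, so a misclassified box can still land in a cluster.
    Predictions that overlap no GT box enough are left out of every cluster.
    """
    clusters: Dict[int, List[int]] = {g: [] for g in range(len(gt_boxes))}
    for p, pbox in enumerate(pred_boxes):
        ious = [box_iou(gbox, pbox) for gbox in gt_boxes]
        if not ious:
            continue
        best = max(range(len(ious)), key=ious.__getitem__)
        if ious[best] >= iou_thr:
            clusters[best].append(p)
    return clusters


gt = [(0, 0, 10, 10), (20, 20, 30, 30)]
preds = [(1, 1, 10, 10), (21, 21, 30, 30), (100, 100, 110, 110)]
print(build_local_clusters(gt, preds))  # {0: [0], 1: [1]}
```

The third prediction overlaps no anchor, so in this sketch it is simply ignored rather than counted as a false positive, which mirrors the motivation above: boxes that fall outside every annotated object cannot be judged reliably when annotations are incomplete.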

TETA is designed to evaluate multiple object tracking (MOT) and segmentation (MOTS) in large-scale, multi-class, and open-vocabulary scenarios. It has been widely used to evaluate tracker performance on the BDD100K and TAO datasets. Some key features of TETA are:

- Disentangle classification from tracking: TETA disentangles classification from tracking, allowing comprehensive benchmarking of tracking performance. Don't worry if your tracker is not the strongest in classification. TETA can still give you credit for good localization and association.

- Comprehensive evaluation: TETA consists of three parts: a localization score, an association score, and a classification score, which enable us to evaluate the different aspects of each tracker properly.

- Dealing with incomplete annotations: TETA evaluates trackers based on a novel local cluster design, which handles the challenging problem of incomplete annotations. You can even evaluate your MOT tracker on a single object tracking dataset!

- Easy to use: TETA is easy to use and supports the COCO-VID style format for most tracking datasets and the Scalabel format for BDD100K. You just need to prepare your results JSON in the right format and run the evaluation script.

- Supports evaluation in both mask and box formats: TETA can evaluate tracking results given as masks or as boxes. The key difference is whether mask IoU or box IoU is used for localization evaluation.
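Since switching between box and mask evaluation only changes the overlap measure, the two definitions can be shown side by side. This is a plain-Python sketch of the standard IoU formulas, not this repo's code: it assumes COCO-style `[x, y, w, h]` boxes and same-sized binary masks stored as nested lists. In practice masks are usually RLE-encoded and compared with a library such as pycocotools, which this sketch does not use.

```python
from typing import List, Sequence

Mask = List[List[int]]  # binary mask, 1 = object pixel (assumed representation)


def box_iou_xywh(a: Sequence[float], b: Sequence[float]) -> float:
    """IoU of two boxes given as [x, y, w, h] (COCO convention)."""
    ax2, ay2 = a[0] + a[2], a[1] + a[3]
    bx2, by2 = b[0] + b[2], b[1] + b[3]
    iw = max(0.0, min(ax2, bx2) - max(a[0], b[0]))
    ih = max(0.0, min(ay2, by2) - max(a[1], b[1]))
    inter = iw * ih
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0


def mask_iou(a: Mask, b: Mask) -> float:
    """IoU of two same-sized binary masks, counted pixel by pixel."""
    inter = union = 0
    for row_a, row_b in zip(a, b):
        for pa, pb in zip(row_a, row_b):
            inter += int(bool(pa) and bool(pb))
            union += int(bool(pa) or bool(pb))
    return inter / union if union > 0 else 0.0


# Two 4x4 boxes shifted by 2 pixels overlap in an 8-pixel strip: 8 / 24.
print(box_iou_xywh([0, 0, 4, 4], [2, 0, 4, 4]))
# Two 2x2 masks sharing one column: 2 shared pixels out of 6 covered.
print(mask_iou([[1, 1, 0], [1, 1, 0]], [[0, 1, 1], [0, 1, 1]]))
```

Mask IoU follows the object's actual shape, so two tracks whose boxes overlap heavily can still score low if their masks disagree.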

Install the TETA environment using pip.

pip install -r requirements.txt

Then go to the root of the teta folder and do a quick install with:

pip install -e .

The result format follows the COCO-VID format. We describe the format in detail here.
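As a rough picture of what a COCO-VID style results file looks like, the sketch below writes a flat JSON list with one entry per tracked box. The field names (`video_id`, `image_id`, `track_id`, `category_id`, `bbox`, `score`) follow the common COCO results convention plus a track id and are an assumption here; the helper `make_result`, the ids, and the output file name are hypothetical. Please treat the format description above as authoritative.

```python
import json
from typing import Dict, List


def make_result(video_id: int, image_id: int, track_id: int,
                category_id: int, bbox_xywh: List[float], score: float) -> Dict:
    """Hypothetical helper: one tracked detection in an assumed COCO-style layout."""
    return {
        "video_id": video_id,
        "image_id": image_id,        # must match an image id in the GT json
        "track_id": track_id,        # identity shared by the same object across frames
        "category_id": category_id,  # must match a category id in the GT json
        "bbox": [float(v) for v in bbox_xywh],  # [x, y, width, height]
        "score": float(score),
    }


# Hypothetical ids: the same object (track 0) seen in two consecutive frames.
results = [
    make_result(1, 10, 0, 1, [12.0, 30.0, 50.0, 80.0], 0.91),
    make_result(1, 11, 0, 1, [14.0, 31.0, 50.0, 79.0], 0.88),
]

with open("my_tracker_results.json", "w") as f:
    json.dump(results, f)
```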

To evaluate MOT and MOTS on BDD100K, we support the Scalabel format. We describe the format in detail here.

Overall, you can run the following command to evaluate your tracker on the TAO TETA benchmark, given the ground truth JSON file and the prediction JSON file in COCO-VID format:

python scripts/run_tao.py --METRICS TETA --TRACKERS_TO_EVAL $NAME_OF_YOUR_MODEL$ --GT_FOLDER ${GT_JSON_PATH}.json --TRACKER_SUB_FOLDER ${RESULT_JSON_PATH}.json

Please note that the TAO benchmark originally aligned its class names with LVIS v0.5, which has 1230 classes. For example, the original TETA benchmark in the TET paper uses v0.5 class names.
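Because the GT file and your predictions must use the same class vocabulary, a quick pre-flight check can save a confusing evaluation run. The sketch below assumes the GT file has a COCO-style top-level `categories` list with `id` fields and that predictions are a flat list of dicts with `category_id`; the function names are hypothetical and not part of this repo. Note that an id check alone cannot catch the case where the same id means different classes in v0.5 and v1.0.

```python
import json
from typing import Dict, Iterable, Set


def unknown_category_ids(gt: Dict, preds: Iterable[Dict]) -> Set[int]:
    """Return prediction category ids that do not appear in gt["categories"]."""
    known = {c["id"] for c in gt["categories"]}
    return {p["category_id"] for p in preds} - known


def check_files(gt_path: str, pred_path: str) -> None:
    """Hypothetical pre-flight check before running scripts/run_tao.py."""
    with open(gt_path) as f:
        gt = json.load(f)
    with open(pred_path) as f:
        preds = json.load(f)
    missing = unknown_category_ids(gt, preds)
    if missing:
        print(f"{len(missing)} category ids are not in the GT vocabulary; "
              "check that you are using the matching LVIS version.")
    else:
        print("All prediction category ids are present in the GT file.")
```

For the example run below, this would be called as `check_files("./jsons/tao_val_lvis_v05_classes.json", "./jsons/teter-swinL-tao-val.json")`.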

Example Run:

- Download GT: If your model uses LVIS v0.5 class names, you can evaluate it on the TAO TETA v0.5 benchmark using the corresponding ground truth JSON file. We provide the TAO val ground truth JSON file in v0.5 format: tao_val_lvis_v05_classes.json

- Download Example Pred: You can download an example prediction file from the TETer-swinL model's result JSON file: teter-swinL-tao-val.json.

- Run Command: assuming you put your downloaded files in ./jsons/ under the root folder:

python scripts/run_tao.py --METRICS TETA --TRACKERS_TO_EVAL my_tracker --GT_FOLDER ./jsons/tao_val_lvis_v05_classes.json --TRACKER_SUB_FOLDER ./jsons/teter-swinL-tao-val.json

Since LVIS updated its class names in v1.0, we also provide the TAO val ground truth JSON file in v1.0 format: tao_val_lvis_v1_classes.json. The conversion script is provided in the scripts folder if you want to convert the v0.5 class names to v1.0 class names yourself.
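To illustrate what such a conversion involves for prediction files, here is a simplified sketch that remaps `category_id` by matching class names between two COCO-style category lists. This is not the conversion script shipped in scripts; it is a hypothetical name-based approach. Classes that were renamed between LVIS v0.5 and v1.0 will not match by name and are dropped here, so a real conversion needs an explicit mapping for those, which is what the provided script should be used for.

```python
from typing import Dict, List, Tuple


def build_id_map(cats_v05: List[Dict], cats_v1: List[Dict]) -> Dict[int, int]:
    """Map v0.5 category ids to v1.0 ids for classes whose name is unchanged."""
    v1_by_name = {c["name"]: c["id"] for c in cats_v1}
    return {c["id"]: v1_by_name[c["name"]]
            for c in cats_v05 if c["name"] in v1_by_name}


def remap_predictions(preds: List[Dict],
                      id_map: Dict[int, int]) -> Tuple[List[Dict], int]:
    """Rewrite category_id via id_map; drop predictions with no mapping."""
    kept, dropped = [], 0
    for p in preds:
        new_id = id_map.get(p["category_id"])
        if new_id is None:
            dropped += 1  # e.g. a class that was renamed in v1.0
            continue
        kept.append({**p, "category_id": new_id})
    return kept, dropped


# Hypothetical categories: "b_old" was renamed to "b_new", so it cannot be matched.
cats_v05 = [{"id": 1, "name": "a"}, {"id": 2, "name": "b_old"}]
cats_v1 = [{"id": 10, "name": "a"}, {"id": 20, "name": "b_new"}]
kept, dropped = remap_predictions(
    [{"category_id": 1, "track_id": 0}, {"category_id": 2, "track_id": 1}],
    build_id_map(cats_v05, cats_v1),
)
print(kept, dropped)  # [{'category_id': 10, 'track_id': 0}] 1
```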

Example Run:

- Download GT: tao_val_lvis_v1_classes.json

- Download Example Pred: You can download an example prediction file from MASA-GroundingDINO's results JSON file tested on TAO val: masa-gdino-detic-dets-tao-val-preds.json.

- Run Command: assuming you put your downloaded files in ./jsons/ under the root folder:

python scripts/run_tao.py --METRICS TETA --TRACKERS_TO_EVAL my_tracker --GT_FOLDER ./jsons/tao_val_lvis_v1_classes.json --TRACKER_SUB_FOLDER ./jsons/masa-gdino-detic-dets-tao-val-preds.json

The Open-Vocabulary MOT benchmark was first introduced by OVTrack. Here we provide the evaluation script for it. The Open-Vocabulary MOT benchmark uses the TAO dataset for evaluation and LVIS v1.0 class names.

Overall, you can use the following command to evaluate your trackers on the Open-Vocabulary MOT benchmark:

python scripts/run_ovmot.py --METRICS TETA --TRACKERS_TO_EVAL $NAME_OF_YOUR_MODEL$ --GT_FOLDER ${GT_JSON_PATH}.json --TRACKER_SUB_FOLDER ${RESULT_JSON_PATH}.json

- Download GT: tao_val_lvis_v1_classes.json

- Download Example Pred: You can download an example prediction file from MASA-GroundingDINO's results JSON file tested on Open-Vocabulary MOT: masa-gdino-detic-dets-tao-val-preds.json.

- Run Command: assuming you put your downloaded files in ./jsons/ under the root folder:

python scripts/run_ovmot.py --METRICS TETA --TRACKERS_TO_EVAL my_tracker --GT_FOLDER ./jsons/tao_val_lvis_v1_classes.json --TRACKER_SUB_FOLDER ./jsons/masa-gdino-detic-dets-tao-val-preds.json

- For the test set, download GT: tao_test_lvis_v1_classes.json. Then you can evaluate your tracker on the Open-Vocabulary MOT test set with the corresponding ground truth JSON file, as above.

Run on the BDD100K MOT val set.

- Download GT: Please first download the annotations from the official website. On the download page, the required annotations are the mot set annotations: MOT 2020 Labels.

- Download Example Pred: You can download an example prediction file from MASA-SAM-ViT-B's results JSON file tested on BDD100K MOT val: masa_sam_vitb_bdd_mot_val.json

- Run Command: assuming you put the downloaded prediction file in ./jsons/ under the root folder and the GT in ./data/bdd/annotations/scalabel_gt/box_track_20/val/:

python scripts/run_bdd.py --scalabel_gt data/bdd/annotations/scalabel_gt/box_track_20/val/ --resfile_path ./jsons/masa_sam_vitb_bdd_mot_val.json --metrics TETA HOTA CLEAR
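If your tracker produces per-frame boxes, they need to be grouped into Scalabel frames before running the command above. The sketch below shows one way to do that; the frame fields (`name`, `videoName`, `frameIndex`, `labels`) and label fields (`id`, `category`, `score`, `box2d` with `x1`, `y1`, `x2`, `y2`) reflect our understanding of the Scalabel format and should be checked against the Scalabel documentation and the GT files. The helper name, video names, and image names are hypothetical, and boxes are taken as corner coordinates so no width/height convention has to be assumed.

```python
import json
from typing import Dict, List, Tuple

# (video_name, frame_index, image_name, track_id, category, (x1, y1, x2, y2), score)
Det = Tuple[str, int, str, int, str, Tuple[float, float, float, float], float]


def to_scalabel_frames(dets: List[Det]) -> List[Dict]:
    """Hypothetical helper: group per-box detections into Scalabel-style frames."""
    frames: Dict[Tuple[str, int], Dict] = {}
    for video, idx, name, tid, cat, (x1, y1, x2, y2), score in dets:
        frame = frames.setdefault((video, idx), {
            "name": name, "videoName": video, "frameIndex": idx, "labels": []})
        frame["labels"].append({
            "id": str(tid),  # track identity, stored as a string
            "category": cat,
            "score": score,
            "box2d": {"x1": x1, "y1": y1, "x2": x2, "y2": y2},
        })
    # Order frames by video, then by frame index.
    return [frames[k] for k in sorted(frames)]


dets = [
    ("vid-a", 0, "vid-a-0000001.jpg", 3, "car", (1.0, 2.0, 11.0, 22.0), 0.9),
    ("vid-a", 0, "vid-a-0000001.jpg", 4, "pedestrian", (30.0, 5.0, 40.0, 45.0), 0.8),
    ("vid-a", 1, "vid-a-0000002.jpg", 3, "car", (2.0, 2.0, 12.0, 22.0), 0.9),
]
print(json.dumps(to_scalabel_frames(dets), indent=2))
```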

Run on the BDD100K MOTS val set.

- Download GT: Please first download the annotations from the official website. On the download page, the required annotations are the mots set annotations: MOTS 2020 Labels.

- Download Example Pred: You can download an example prediction file from MASA-SAM-ViT-B's results JSON file tested on BDD100K MOTS val: masa_sam_vitb_bdd_mots_val.json

- Run Command: assuming you put the downloaded prediction file in ./jsons/ under the root folder and the GT in ./data/bdd/annotations/scalabel_gt/seg_track_20/val/:

python scripts/run_bdd.py --scalabel_gt data/bdd/annotations/scalabel_gt/seg_track_20/val/ --resfile_path ./jsons/masa_sam_vitb_bdd_mots_val.json --metrics TETA HOTA CLEAR --with_mask

@InProceedings{trackeverything,
  title = {Tracking Every Thing in the Wild},
  author = {Li, Siyuan and Danelljan, Martin and Ding, Henghui and Huang, Thomas E. and Yu, Fisher},
  booktitle = {Proceedings of the European Conference on Computer Vision (ECCV)},
  month = {Oct},
  year = {2022}
}